当前位置:网站首页>Hands on deep learning (43) -- machine translation and its data construction

Hands on deep learning (43) -- machine translation and its data construction

2022-07-04 09:41:00 【Stay a little star】

List of articles

This article Blog Start introducing translation , All are NLP Related content of , Translation and the sequence prediction we mentioned earlier (RNN、LSTM、GRU wait )、 Fill in the blanks (Bi-RNN) And so on, what are the connections and differences ?

One 、 Machine translation

Machine translation (machine translation) It refers to the automatic translation of sequences from one language to another . in fact , This research field can be traced back to shortly after the invention of digital computer 20 century 40 years , Especially in the Second World War, computers were used to crack language codes . For decades, , Before the rise of end-to-end learning using neural networks , Statistical methods have always been dominant in this field Brown.Cocke.Della-Pietra.ea.1988, Brown.Cocke.Della-Pietra.ea.1990 . because Statistical machine translation (statistical machine translation) It involves the statistical analysis of translation model, language model and other components , Therefore, the method based on neural network is usually called Neural machine translation (neural machine translation), Used to distinguish the two translation models .( Statistical machine translation and neural machine translation )

We mainly focus on neural machine translation methods , It emphasizes end-to-end learning . It is different from the language model in which the corpus in the language model is a single language , The data set of machine translation is composed of text sequence pairs of source language and target language . therefore , We need a completely different approach to preprocessing machine translation data sets , Instead of reusing the preprocessor of the language model . below , We will show how to load the preprocessed data into a small batch for training .

Two 、 Machine translation dataset

import os

import torch

from d2l import torch as d2l

1. Downloading and preprocessing datasets

First , Download one from Tatoeba The bilingual sentences of the project are right Composed of “ Britain - Law ” Data sets , Each row in the dataset is a tab delimited text sequence pair , Sequence pairs consist of English text sequences and translated French text sequences . Please note that , Each text sequence can be a sentence , It can also be a paragraph containing multiple sentences . In this machine translation problem of translating English into French , English is a source language (source language), French is target language (target language).

d2l.DATA_HUB['fra-eng'] = (d2l.DATA_URL + 'fra-eng.zip',

'94646ad1522d915e7b0f9296181140edcf86a4f5')

def read_data_nmt():

""" load “ English - French ” Data sets """

data_dir = d2l.download_extract('fra-eng')

with open(os.path.join(data_dir,'fra.txt'),'r') as f:

return f.read()

raw_text = read_data_nmt()

print(raw_text[:80])

Go. Va !

Hi. Salut !

Run! Cours !

Run! Courez !

Who? Qui ?

Wow! Ça alors !

Fire!

1.1 Text preprocessing

- Use spaces instead of uninterrupted spaces (non-breaking space)

- Use lowercase letters instead of uppercase letters

- Insert spaces between words and punctuation

def preprocess_nmt(text):

""" Preprocessing """

# Replace uninterrupted spaces with spaces ,(\xa0 Is a character in the Latin extended character set , Represents an uninterrupted white space )

# Replace uppercase letters with lowercase letters

text = text.replace('\u202f', ' ').replace('\xa0', ' ').lower()

# Insert spaces between words and punctuation

out = ''

for i,char in enumerate(text):

if i>0 and char in (',','!','.','?') and text[i-1] !=' ':

out += ' '

out +=char

# The following is the original code of Mu God , The feeling I wrote above is easy to understand

# def no_space(char,prev_char):

# return char in set(',.!?') and prev_char != ' '

# out = [

# ' ' + char if i > 0 and no_space(char, text[i - 1]) else char

# for i, char in enumerate(text)]

return ''.join(out)

text = preprocess_nmt(raw_text)

print(text[:80])

go . va !

hi . salut !

run ! cours !

run ! courez !

who ? qui ?

wow ! ça alors !

1.2 Word metabolization tokenization

My personal understanding : Sentence / Paragraphs are divided into vectors of words . It's like cutting a handful according to its scale

def tokenize_nmt(text,num_examples=None):

""" Tokenize the dataset """

source,target = [],[]

for i,line in enumerate(text.split('\n')):

if num_examples and i>num_examples:

break

parts = line.split('\t')

if len(parts)==2:

source.append(parts[0].split(' '))

target.append(parts[1].split(' '))

return source,target

source, target = tokenize_nmt(text)

source[:6], target[:6]

([['go', '.'],

['hi', '.'],

['run', '!'],

['run', '!'],

['who', '?'],

['wow', '!']],

[['va', '!'],

['salut', '!'],

['cours', '!'],

['courez', '!'],

['qui', '?'],

['ça', 'alors', '!']])

# Draw a histogram of the number of tags contained in each text sequence

# The length of the sentence is not long , Usually less than 20

d2l.set_figsize()

_, _, patches = d2l.plt.hist([[len(l) for l in source],

[len(l) for l in target]],

label=['source', 'target'])

for patch in patches[1].patches:

patch.set_hatch('/')

d2l.plt.legend(loc='upper right');

1.3 glossary ( word embedding)

Since the machine translation dataset consists of language pairs , Therefore, we can build two vocabularies for the source language and the target language . When using word level tokenization , The vocabulary will be significantly larger than when using character level tokenization . To alleviate this problem , Here we will appear less than 2 The second low frequency mark is regarded as the same unknown (“<unk>”) Mark . besides , We also specify additional specific tags , For example, in a small batch, it is used to fill the sequence to the filling mark of the same length (“<pad>”), And the beginning of the sequence (“<bos>”) And closing marks (“<eos>”). These special tags are commonly used in natural language processing tasks .

src_vocab = d2l.Vocab(source, min_freq=2,

reserved_tokens=['<pad>', '<bos>', '<eos>'])

len(src_vocab),list(src_vocab.token_to_idx.items())[:10]

(10012,

[('<unk>', 0),

('<pad>', 1),

('<bos>', 2),

('<eos>', 3),

('.', 4),

('i', 5),

('you', 6),

('to', 7),

('the', 8),

('?', 9)])

2. Load data set

The sequence samples in the language model have a fixed length , Whether the sample is part of a sentence or a fragment spanning multiple sentences . This fixed length is specified by the number of time steps or the number of tags parameter . In machine translation , Each sample is a text sequence pair composed of source and target , Each of these text sequences may have a different length .

In order to improve the calculation efficiency , We can still go through truncation (truncation) and fill (padding) Only one small batch of text sequences can be processed at a time . Suppose that each sequence in the same small batch should have the same length n, So if the number of tags in the text sequence is less than this length n when , We will continue to add specific... At the end “<pad>” Mark , Until its length reaches a uniform length ; conversely , We will truncate the text sequence , Just take the first n A sign , And discard the remaining tags . such , Each text sequence will have the same length , In order to load in small batches of the same shape .

( Actually, I have a problem here : We first added at the end pad Mark , The truncation operation is applied later , So if it is longer than our limit pad It must have been cut , Add this to these sequences pad In fact, it is more than one . Of course , This does not affect our training , It's just a little thought when I see this logic )

def truncate_pad(line,num_steps,padding_token):

""" Truncate or fill the text sequence """

if len(line)>num_steps:

return line[:num_steps] # Cut off the extra

return line + [padding_token]*(num_steps -len(line)) # Fill in the missing

# hypothesis num_step by 10, The filling symbol is <pad>, Operate on each sentence

truncate_pad(src_vocab[source[0]], 10, src_vocab['<pad>'])

[47, 4, 1, 1, 1, 1, 1, 1, 1, 1]

Now let's define a function , Text sequences can be converted into small batch data sets for training . We will be specific “<eos>” Tags are added to the end of all sequences , Used to indicate the end of a sequence . When the model generates a sequence for prediction by tag after tag , Generated “<eos>” The mark indicates that the sequence output work has been completed . Besides , We also recorded the length of each text sequence , Fill marks are excluded from length statistics , Some models that will be introduced later will need this length information .

def build_array_nmt(lines,vocab,num_steps):

""" Convert machine translated text sequences into small batches """

lines = [vocab[l] for l in lines]

lines = [l+[vocab['<eos>']] for l in lines] # Add an end mark

array = torch.tensor([

truncate_pad(l,num_steps,vocab['<pad>']) for l in lines])

valid_len = (array != vocab['<pad>']).type(torch.int32).sum(1) # Save the length of the fill

return array,valid_len

# Be careful eos yes 3

array,valid_len = build_array_nmt(source,src_vocab ,10)

array[1],valid_len[1]

(tensor([113, 4, 3, 1, 1, 1, 1, 1, 1, 1]), tensor(3))

# Organize data loading and processing

def load_data_nmt(batch_size,num_steps,num_examples=600):

""" Returns the iterator and vocabulary of the translation dataset """

text = preprocess_nmt(read_data_nmt()) # Preprocessing

source, target = tokenize_nmt(text, num_examples) # Word metabolization

src_vocab = d2l.Vocab(source, min_freq=2,

reserved_tokens=['<pad>', '<bos>', '<eos>'])

tgt_vocab = d2l.Vocab(target, min_freq=2,

reserved_tokens=['<pad>', '<bos>', '<eos>']) # Building a vocabulary

src_array, src_valid_len = build_array_nmt(source, src_vocab, num_steps)

tgt_array, tgt_valid_len = build_array_nmt(target, tgt_vocab, num_steps)

data_arrays = (src_array, src_valid_len, tgt_array, tgt_valid_len)

data_iter = d2l.load_array(data_arrays, batch_size)

return data_iter, src_vocab, tgt_vocab

train_iter, src_vocab, tgt_vocab = load_data_nmt(batch_size=2, num_steps=8)

for X, X_valid_len, Y, Y_valid_len in train_iter:

print('X:', X.type(torch.int32))

print('valid lengths for X:', X_valid_len)

print('Y:', Y.type(torch.int32))

print('valid lengths for Y:', Y_valid_len)

break

X: tensor([[16, 51, 4, 3, 1, 1, 1, 1],

[ 6, 0, 4, 3, 1, 1, 1, 1]], dtype=torch.int32)

valid lengths for X: tensor([4, 4])

Y: tensor([[ 35, 37, 11, 5, 3, 1, 1, 1],

[ 21, 51, 134, 4, 3, 1, 1, 1]], dtype=torch.int32)

valid lengths for Y: tensor([5, 5])

Summary

- Machine translation refers to the automatic translation of text sequences from one language to another .

- Vocabulary when using word level tokenization , Will be significantly larger than the vocabulary when using character level tokenization . To alleviate this problem , We can treat the low-frequency marker as the same unknown marker .

- By truncating and filling the text sequence , It can ensure that all text sequences have the same length , In order to load... In small batches .

practice

- stay

load_data_nmtTry differentnum_examplesParameter values . How does this affect the vocabulary of the source and target languages ?

Well understood. : If num_examples The bigger it is , It means that the more low-frequency words we keep , Then the corresponding vocabulary will increase relatively . And the more vocabulary , Its combination will also increase . It has a great impact on the cost of our training and prediction . The test adjusted this value to 800 and 1200 Value .

- Some languages ( For example, Chinese and Japanese ) The text of does not have a word boundary indicator ( Such as spaces ). In this case , Is word level tokenization still a good idea ? Why? ?

Reference resources Blog:NLP+ Lexical series ( One )︱ Summary of Chinese word segmentation technology 、 Introduction and comparison of several word segmentation engines

I haven't done it personally NLP Translation work , But in my simple idea : Can our Chinese characters be marked separately , As for how to split , Words are individual individuals, equivalent to letters , It's not even impossible to recognize by bytes , After all, Chinese has two bytes per word .

边栏推荐

- Golang Modules

- 2022-2028 global elastic strain sensor industry research and trend analysis report

- mmclassification 标注文件生成

- 2022-2028 global small batch batch batch furnace industry research and trend analysis report

- 2022-2028 global gasket plate heat exchanger industry research and trend analysis report

- Reading notes on how to connect the network - hubs, routers and routers (III)

- Fatal error in golang: concurrent map writes

- 26. Delete duplicates in the ordered array (fast and slow pointer de duplication)

- Launpad | 基礎知識

- Four common methods of copying object attributes (summarize the highest efficiency)

猜你喜欢

H5 audio tag custom style modification and adding playback control events

2022-2028 global probiotics industry research and trend analysis report

自动化的优点有哪些?

Log cannot be recorded after log4net is deployed to the server

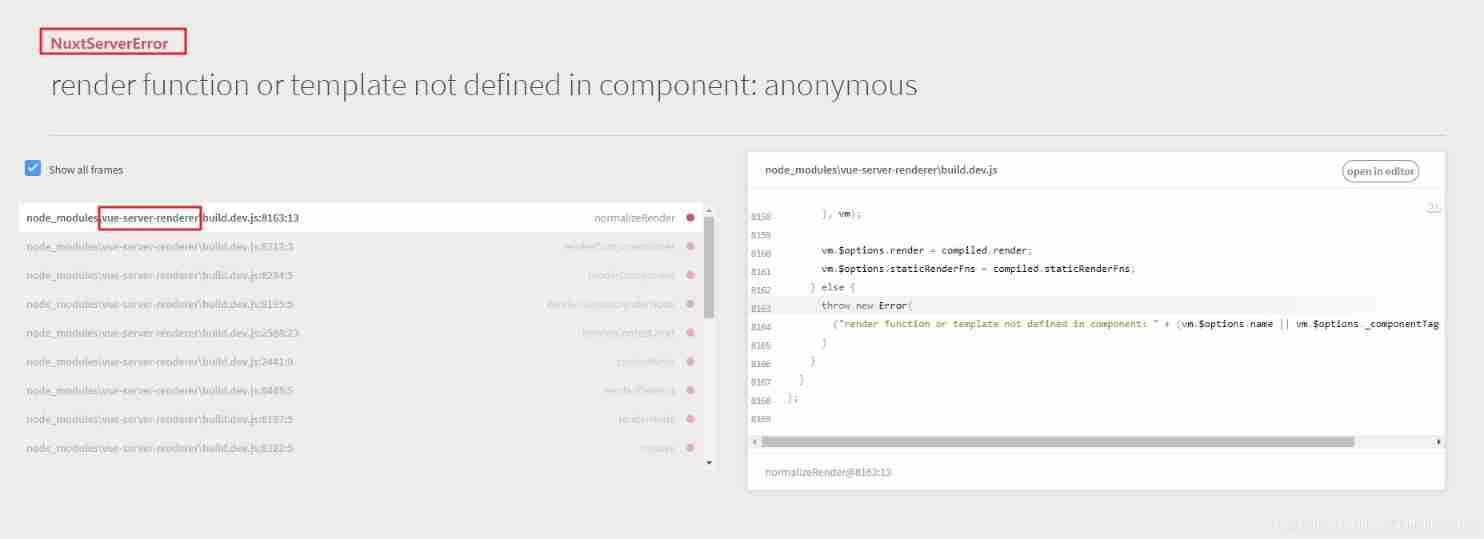

Nuxt reports an error: render function or template not defined in component: anonymous

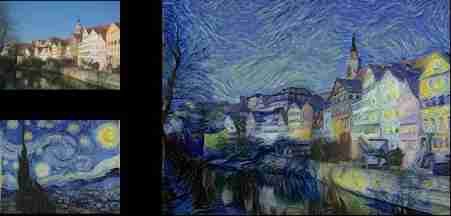

Hands on deep learning (33) -- style transfer

技术管理进阶——如何设计并跟进不同层级同学的绩效

ArrayBuffer

2022-2028 global elastic strain sensor industry research and trend analysis report

2022-2028 global intelligent interactive tablet industry research and trend analysis report

随机推荐

Hands on deep learning (35) -- text preprocessing (NLP)

26. Delete duplicates in the ordered array (fast and slow pointer de duplication)

What are the advantages of automation?

If you can quickly generate a dictionary from two lists

华为联机对战如何提升玩家匹配成功几率

2022-2028 research and trend analysis report on the global edible essence industry

Write a jison parser from scratch (6/10): parse, not define syntax

PHP personal album management system source code, realizes album classification and album grouping, as well as album image management. The database adopts Mysql to realize the login and registration f

Golang Modules

Four common methods of copying object attributes (summarize the highest efficiency)

Trees and graphs (traversal)

回复评论的sql

技术管理进阶——如何设计并跟进不同层级同学的绩效

libmysqlclient.so.20: cannot open shared object file: No such file or directory

In the case of easyUI DataGrid paging, click the small triangle icon in the header to reorder all the data in the database

百度研发三面惨遭滑铁卢:面试官一套组合拳让我当场懵逼

C语言指针经典面试题——第一弹

Go context 基本介绍

Regular expression (I)

el-table单选并隐藏全选框