
Scala104 - Built-in datetime functions for Spark.sql

2022-08-04 18:32:00 【51CTO】

Sometimes we call `df.createOrReplaceTempView("temp")` directly to register a temporary view and do the computation in SQL. Some Spark SQL syntax differs from HQL (Hive SQL), so here are some notes.

- `<scala.version>2.11.12</scala.version>`

- `<spark.version>2.4.3</spark.version>`

```scala
import org.apache.spark.sql.SparkSession

// Local SparkSession used by all examples below
val builder = SparkSession
  .builder()
  .appName("learningScala")
  .config("spark.executor.heartbeatInterval", "60s")
  .config("spark.network.timeout", "120s")
  .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
  .config("spark.kryoserializer.buffer.max", "512m")
  .config("spark.dynamicAllocation.enabled", false)
  .config("spark.sql.inMemoryColumnarStorage.compressed", true)
  .config("spark.sql.inMemoryColumnarStorage.batchSize", 10000)
  .config("spark.sql.broadcastTimeout", 600)
  .config("spark.sql.autoBroadcastJoinThreshold", -1) // -1 disables automatic broadcast joins
  .config("spark.sql.crossJoin.enabled", true)
  .master("local[*]")
val spark = builder.getOrCreate()
spark.sparkContext.setLogLevel("ERROR")
```



```scala
import spark.implicits._ // enables Seq(...).toDF and the $"col" syntax

var df1 = Seq(
  (1, "2019-04-01 11:45:50", 11.15, "2019-04-02 11:45:49"),
  (2, "2019-05-02 11:56:50", 10.37, "2019-05-02 11:56:51"),
  (3, "2019-07-21 12:45:50", 12.11, "2019-08-21 12:45:50"),
  (4, "2019-08-01 12:40:50", 14.50, "2020-08-03 12:40:50"),
  (5, "2019-01-06 10:00:50", 16.39, "2019-01-05 10:00:50")
).toDF("id", "startTimeStr", "payamount", "endTimeStr")

// Cast the "yyyy-MM-dd HH:mm:ss" strings to TimestampType
df1 = df1
  .withColumn("startTime", $"startTimeStr".cast("Timestamp"))
  .withColumn("endTime", $"endTimeStr".cast("Timestamp"))

df1.printSchema
df1.show()
```


```
root
 |-- id: integer (nullable = false)
 |-- startTimeStr: string (nullable = true)
 |-- payamount: double (nullable = false)
 |-- endTimeStr: string (nullable = true)
 |-- startTime: timestamp (nullable = true)
 |-- endTime: timestamp (nullable = true)

+---+-------------------+---------+-------------------+-------------------+-------------------+
| id|       startTimeStr|payamount|         endTimeStr|          startTime|            endTime|
+---+-------------------+---------+-------------------+-------------------+-------------------+
|  1|2019-04-01 11:45:50|    11.15|2019-04-02 11:45:49|2019-04-01 11:45:50|2019-04-02 11:45:49|
|  2|2019-05-02 11:56:50|    10.37|2019-05-02 11:56:51|2019-05-02 11:56:50|2019-05-02 11:56:51|
|  3|2019-07-21 12:45:50|    12.11|2019-08-21 12:45:50|2019-07-21 12:45:50|2019-08-21 12:45:50|
|  4|2019-08-01 12:40:50|     14.5|2020-08-03 12:40:50|2019-08-01 12:40:50|2020-08-03 12:40:50|
|  5|2019-01-06 10:00:50|    16.39|2019-01-05 10:00:50|2019-01-06 10:00:50|2019-01-05 10:00:50|
+---+-------------------+---------+-------------------+-------------------+-------------------+

df1: org.apache.spark.sql.DataFrame = [id: int, startTimeStr: string ... 4 more fields]
df1: org.apache.spark.sql.DataFrame = [id: int, startTimeStr: string ... 4 more fields]
```
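The following plain-Scala sketch uses `java.time` outside Spark to give a rough idea of what the `cast("Timestamp")` step does. It makes two simplifying assumptions. First, every input uses the `yyyy-MM-dd HH:mm:ss` layout of this data set (Spark's cast accepts more layouts). Second, time zones are ignored. `CastSketch` and `castToTimestamp` are hypothetical names. Returning `None` stands in for the `null` that Spark 2.4 produces when a string cannot be parsed, which is consistent with the `nullable = true` flag on the two new columns.

```scala
import java.time.LocalDateTime
import java.time.format.{DateTimeFormatter, DateTimeParseException}

object CastSketch {
  // Layout of startTimeStr / endTimeStr in df1 (assumed to be the only layout)
  private val layout = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")

  // Hypothetical helper: None plays the role of Spark's null for unparseable input
  def castToTimestamp(s: String): Option[LocalDateTime] =
    try Some(LocalDateTime.parse(s, layout))
    catch { case _: DateTimeParseException => None }

  def main(args: Array[String]): Unit = {
    println(castToTimestamp("2019-04-01 11:45:50"))
    println(castToTimestamp("not a timestamp"))
  }
}
```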


timestamp to string

Convert a timestamp into a string in a chosen format.

- `date_format` converts a timestamp into the corresponding string
- The format is given as a pattern string such as `"yyyyMMdd"`

```
root
 |-- yyyyMMdd: string (nullable = true)
 |-- yyyy_MM_dd: string (nullable = true)
 |-- yyyy: string (nullable = true)

+--------+----------+----+
|yyyyMMdd|yyyy_MM_dd|yyyy|
+--------+----------+----+
|20190401|2019-04-01|2019|
|20190502|2019-05-02|2019|
|20190721|2019-07-21|2019|
|20190801|2019-08-01|2019|
|20190106|2019-01-06|2019|
+--------+----------+----+

sql: String =
"
SELECT date_format(startTime,'yyyyMMdd') AS yyyyMMdd,
date_format(startTime,'yyyy-MM-dd') AS yyyy_MM_dd,
date_format(startTime,'yyyy') AS yyyy
FROM TEMP
"
```

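The sketch below shows, in plain Scala, what each `date_format` call in the query computes for a single value. It uses `java.time.DateTimeFormatter`, which is an assumption: Spark 2.4 formats with `java.text.SimpleDateFormat`-style patterns. The pattern letters used here (`yyyy`, `MM`, `dd`) mean the same thing in both. `DateFormatSketch` and `dateFormat` are hypothetical names, and time zones are ignored.

```scala
import java.time.LocalDateTime
import java.time.format.DateTimeFormatter

object DateFormatSketch {
  // Layout of the source strings in df1
  private val layout = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")

  // Hypothetical helper mirroring date_format(ts, pattern) for one string value
  def dateFormat(ts: String, pattern: String): String =
    LocalDateTime.parse(ts, layout).format(DateTimeFormatter.ofPattern(pattern))

  def main(args: Array[String]): Unit = {
    val startTimes = Seq(
      "2019-04-01 11:45:50", "2019-05-02 11:56:50", "2019-07-21 12:45:50",
      "2019-08-01 12:40:50", "2019-01-06 10:00:50")
    // One line per row: yyyyMMdd | yyyy-MM-dd | yyyy
    for (t <- startTimes)
      println(Seq("yyyyMMdd", "yyyy-MM-dd", "yyyy").map(dateFormat(t, _)).mkString(" | "))
  }
}
```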

timestamp to date

- `to_date` converts a timestamp to the date type, dropping the time of day

```
root
 |-- startTime: timestamp (nullable = true)
 |-- endTime: timestamp (nullable = true)
 |-- startDate: date (nullable = true)
 |-- endDate: date (nullable = true)

+-------------------+-------------------+----------+----------+
|          startTime|            endTime| startDate|   endDate|
+-------------------+-------------------+----------+----------+
|2019-04-01 11:45:50|2019-04-02 11:45:49|2019-04-01|2019-04-02|
|2019-05-02 11:56:50|2019-05-02 11:56:51|2019-05-02|2019-05-02|
|2019-07-21 12:45:50|2019-08-21 12:45:50|2019-07-21|2019-08-21|
|2019-08-01 12:40:50|2020-08-03 12:40:50|2019-08-01|2020-08-03|
|2019-01-06 10:00:50|2019-01-05 10:00:50|2019-01-06|2019-01-05|
+-------------------+-------------------+----------+----------+

sql: String =
SELECT startTime,endTime,
to_date(startTime) AS startDate,
to_date(endTime) AS endDate
FROM TEMP

df2: org.apache.spark.sql.DataFrame = [startTime: timestamp, endTime: timestamp ... 2 more fields]
```
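A plain-Scala analogue of `to_date` is `LocalDateTime.toLocalDate`. It keeps the calendar date and discards the time of day, as the `startDate`/`endDate` columns above show. This is a sketch under assumptions: it parses the same `yyyy-MM-dd HH:mm:ss` strings, and it ignores the session time zone that Spark uses for the conversion. `ToDateSketch` and `toDate` are hypothetical names.

```scala
import java.time.{LocalDate, LocalDateTime}
import java.time.format.DateTimeFormatter

object ToDateSketch {
  // Layout of the source strings in df1
  private val layout = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")

  // Hypothetical helper mirroring to_date(ts): keep the date, drop the time of day
  def toDate(ts: String): LocalDate = LocalDateTime.parse(ts, layout).toLocalDate

  def main(args: Array[String]): Unit = {
    val endTimes = Seq(
      "2019-04-02 11:45:49", "2019-05-02 11:56:51", "2019-08-21 12:45:50",
      "2020-08-03 12:40:50", "2019-01-05 10:00:50")
    // LocalDate.toString uses the ISO yyyy-MM-dd form, like the endDate column
    endTimes.map(toDate).foreach(println)
  }
}
```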


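Time differences

- The day-difference function `datediff` works on timestamps as well as on dates. It counts calendar days, not 24-hour periods: in the first row below the two timestamps are one second short of 24 hours apart, yet both results are 1.
- The month-difference function `months_between` also works on both types. When the two values fall on the same day of the month (or both on the last day of a month) it returns a whole number and ignores the time of day. Otherwise the fractional part treats every month as 31 days, so one day is 1/31 ≈ 0.03225806 month even in April, and the result is rounded to 8 decimal places.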

```scala
df2.createOrReplaceTempView("temp")

var sql = """
SELECT startTime,
endTime,
datediff(endTime,startTime) AS dayInterval1,
datediff(endDate,startDate) AS dayInterval2,
months_between(endTime,startTime) AS monthInterval1,
months_between(endDate,startDate) AS monthInterval2
FROM TEMP
"""
// spark.sql(sql).printSchema
spark.sql(sql).show()
```


```
+-------------------+-------------------+------------+------------+--------------+--------------+
|          startTime|            endTime|dayInterval1|dayInterval2|monthInterval1|monthInterval2|
+-------------------+-------------------+------------+------------+--------------+--------------+
|2019-04-01 11:45:50|2019-04-02 11:45:49|           1|           1|    0.03225769|    0.03225806|
|2019-05-02 11:56:50|2019-05-02 11:56:51|           0|           0|           0.0|           0.0|
|2019-07-21 12:45:50|2019-08-21 12:45:50|          31|          31|           1.0|           1.0|
|2019-08-01 12:40:50|2020-08-03 12:40:50|         368|         368|   12.06451613|   12.06451613|
|2019-01-06 10:00:50|2019-01-05 10:00:50|          -1|          -1|   -0.03225806|   -0.03225806|
+-------------------+-------------------+------------+------------+--------------+--------------+

sql: String =
"
SELECT startTime,
endTime,
datediff(endTime,startTime) AS dayInterval1,
datediff(endDate,startDate) AS dayInterval2,
months_between(endTime,startTime) AS monthInterval1,
months_between(endDate,startDate) AS monthInterval2
FROM TEMP
"
```

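To check both rules against the table, here is a plain-Scala re-implementation. It follows the behaviour documented for Spark's `datediff` and `months_between`. A pair of values on the same day of the month, or both on a month-end, gives a whole number. Any other remainder is measured in 31-day months and rounded to 8 decimal places. The sketch is not Spark's source code. It ignores time zones, and `IntervalSketch`, `dateDiff` and `monthsBetween` are hypothetical names.

```scala
import java.time.{LocalDate, LocalDateTime}
import java.time.format.DateTimeFormatter
import java.time.temporal.ChronoUnit

object IntervalSketch {
  private val layout = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")
  private val SecondsPerDay = 86400L
  private val SecondsPer31Days = 31 * SecondsPerDay // 2,678,400

  def parse(s: String): LocalDateTime = LocalDateTime.parse(s, layout)

  // datediff(end, start): whole calendar days; the time of day is ignored
  def dateDiff(end: LocalDateTime, start: LocalDateTime): Long =
    ChronoUnit.DAYS.between(start.toLocalDate, end.toLocalDate)

  private def isMonthEnd(d: LocalDate): Boolean = d.getDayOfMonth == d.lengthOfMonth

  // months_between(end, start) following the documented rule
  def monthsBetween(end: LocalDateTime, start: LocalDateTime): Double = {
    val monthDiff =
      (end.getYear * 12 + end.getMonthValue) - (start.getYear * 12 + start.getMonthValue)
    val (d1, d2) = (end.toLocalDate, start.toLocalDate)
    if (d1.getDayOfMonth == d2.getDayOfMonth || (isMonthEnd(d1) && isMonthEnd(d2)))
      monthDiff.toDouble // whole months, time of day ignored
    else {
      val secondsDiff = (d1.getDayOfMonth - d2.getDayOfMonth) * SecondsPerDay +
        end.toLocalTime.toSecondOfDay - start.toLocalTime.toSecondOfDay
      val diff = monthDiff + secondsDiff.toDouble / SecondsPer31Days
      math.round(diff * 1e8) / 1e8 // round to 8 decimal places
    }
  }

  def main(args: Array[String]): Unit = {
    val rows = Seq(
      ("2019-04-01 11:45:50", "2019-04-02 11:45:49"),
      ("2019-05-02 11:56:50", "2019-05-02 11:56:51"),
      ("2019-07-21 12:45:50", "2019-08-21 12:45:50"),
      ("2019-08-01 12:40:50", "2020-08-03 12:40:50"),
      ("2019-01-06 10:00:50", "2019-01-05 10:00:50"))
    for ((s, e) <- rows) {
      val (start, end) = (parse(s), parse(e))
      // day difference | months on timestamps | months on midnight-truncated dates
      val onDates = monthsBetween(end.toLocalDate.atStartOfDay, start.toLocalDate.atStartOfDay)
      println(s"${dateDiff(end, start)} | ${monthsBetween(end, start)} | $onDates")
    }
  }
}
```

Under these rules, row 1 gives 86,399 s / 2,678,400 s ≈ 0.03225769, one second short of 1/31. That is why `monthInterval1` and `monthInterval2` differ there while `dayInterval1` stays 1.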


2020-03-24, at Jiulong Lake, Jiangning District, Nanjing

边栏推荐

- LVS+Keepalived群集

- localstorage本地存储的方法

- DOM Clobbering的原理及应用

- 网页端IM即时通讯开发:短轮询、长轮询、SSE、WebSocket

- 企业即时通讯软件有哪些功能?对企业有什么帮助?

- 如何模拟后台API调用场景,很细!

- Introduction of three temperature measurement methods for PT100 platinum thermal resistance

- Regardless of whether you are a public, professional or non-major class, I have been sorting out the learning route for a long time here, and the learning route I have summarized is not yet rolled up

- 开发那些事儿:如何通过EasyCVR平台获取监控现场的人流量统计数据?

- 2019 Haidian District Youth Programming Challenge Activity Elementary Group Rematch Test Questions Detailed Answers

猜你喜欢

随机推荐

DHCP&OSPF combined experimental demonstration (Huawei routing and switching equipment configuration)

curl命令的那些事

数据集成:holo数据同步至redis。redis必须是集群模式?

Nintendo won't launch any new hardware until March 2023, report says

袋鼠云思枢:数驹DTengine,助力企业构建高效的流批一体数据湖计算平台

dotnet core 使用 CoreRT 将程序编译为 Native 程序

【web自动化测试】playwright安装失败怎么办

谁能解答?从mysql的binlog读取数据到kafka,但是数据类型有Insert,updata,

Codeforces积分系统介绍

八猴渲染器是什么?它能干什么?八猴软件的界面讲解

FE01_OneHot-Scala应用

单行、多行文本超出显示省略号

通配符SSL证书不支持多域名吗?

哈夫曼树(暑假每日一题 15)

DHCP&OSPF组合实验演示(Huawei路由交换设备配置)

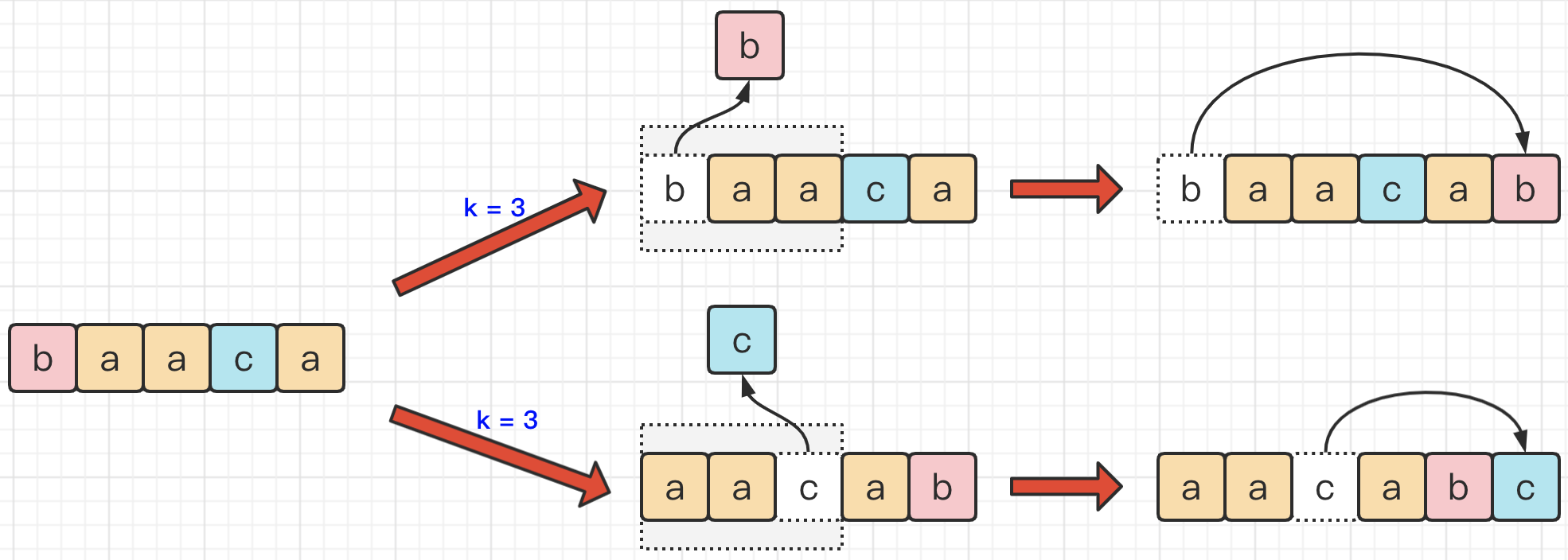

图解LeetCode——899. 有序队列(难度:困难)

EuROC 数据集格式及相关代码

合宙Cat1 4G模块Air724UG配置RNDIS网卡或PPP拨号,通过RNDIS网卡使开发板上网(以RV1126/1109开发板为例)

vantui 组件 van-field 路由切换时,字体样式混乱问题

使用bash语句,清空aaa文件夹下的所有文件