Run faster with Go: using Golang to serve machine learning

2022-07-05 14:37:00 【fifteen billion two hundred and thirty-one million one hundred 】

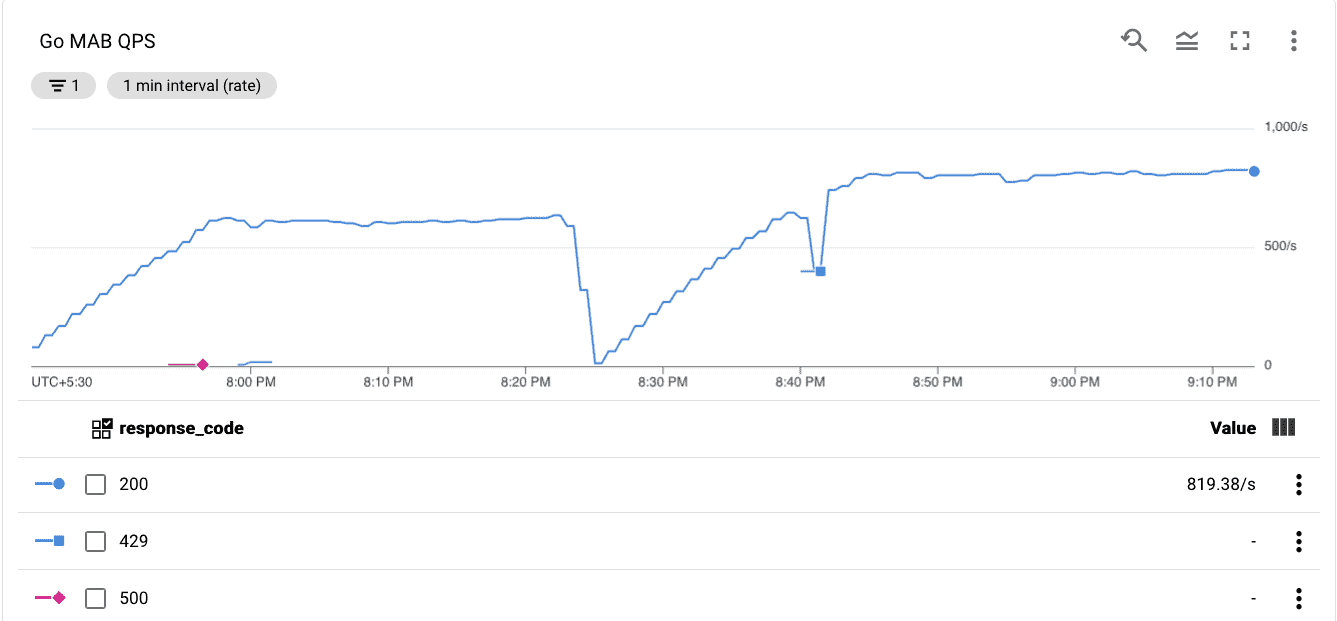

So, our requirement is to serve 3 million predictions per second with as few resources as possible. Thankfully, the model is a relatively simple kind of recommender system: a multi-armed bandit (MAB). Multi-armed bandits usually involve sampling from a distribution such as the Beta distribution, and that is also where most of the time goes. If we can run as many samples as possible concurrently, we make good use of our resources. Maximizing resource utilization is the key to reducing the total resources the model needs.

Our current prediction services are microservices written in Python, and they follow this general structure:

Request -> Feature fetch -> Predict -> Post-processing -> Return

A single request may require us to score thousands of user-content pairs. Getting acceptable performance out of Python, with its GIL and multiprocessing, took some work: we implemented batch sampling in Cython and C++ to get around the GIL, and we run many workers, sized to the number of cores, to handle requests concurrently.

Currently a single-node Python service can handle 192 RPS, with about 400 pairs per request. Average CPU utilization is only about 20%. The limiting factors are now the language, the serving framework, and the network calls to the feature store.

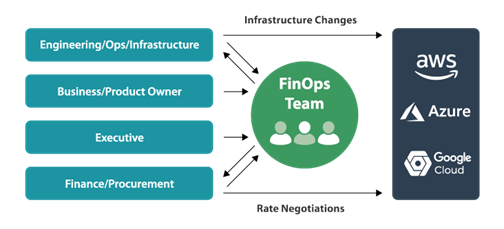

Why Golang?

Golang is a statically typed language with great tooling. This means errors are caught early and the code is easy to refactor. Concurrency is native to Golang, which matters both for machine learning algorithms that can run in parallel and for concurrent network calls to the Featurestore. It is one of the fastest serving languages in benchmarks. It is also a compiled language, so it can be optimized at compile time.

Porting the existing MAB to Golang

The basic idea was to split the system into 3 parts:

1. A basic REST API for predict and health, with stubs
2. Featurestore fetch, implemented as its own module
3. Lifting and shifting the C++ sampling code using cgo

The first part was easy. I chose the Fiber framework for the REST API. It seemed to be the most popular, it is well documented, and its API is similar to Express.js. It also performs quite well in benchmarks.

The initial code:

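    package main

    import (
        "context"
        "encoding/json"
        "fmt"

        "github.com/gofiber/fiber/v2"
        "github.com/gofiber/fiber/v2/middleware/recover"

        "example.com/mab/model" // hypothetical import path for the model package
    )

    func main() {
        // set up Fiber
        app := fiber.New()

        // recover from panics raised inside handlers
        app.Use(recover.New())

        // load the model struct
        ctx := context.Background()
        md, err := model.NewModel(ctx)
        if err != nil {
            fmt.Println(err)
        } else {
            // only close the model if it was actually created
            defer md.Close()
        }

        // health API: reports whether the model loaded
        app.Get("/health", func(c *fiber.Ctx) error {
            if err != nil {
                return fiber.NewError(
                    fiber.StatusServiceUnavailable,
                    fmt.Sprintf("Model couldn't load: %v", err))
            }
            return c.JSON(fiber.Map{
                "status": "ok",
            })
        })

        // predict API
        app.Post("/predict", func(c *fiber.Ctx) error {
            var request map[string]interface{}
            // use a separate variable so the model-load err is not shadowed
            if parseErr := json.Unmarshal(c.Body(), &request); parseErr != nil {
                return fiber.NewError(fiber.StatusBadRequest, parseErr.Error())
            }
            return c.JSON(md.Predict(request))
        })

        // start the server; the port is an assumption, the original snippet ends before this
        if listenErr := app.Listen(":8080"); listenErr != nil {
            fmt.Println(listenErr)
        }
    }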

That's it, the first task was done. It took less than an hour.

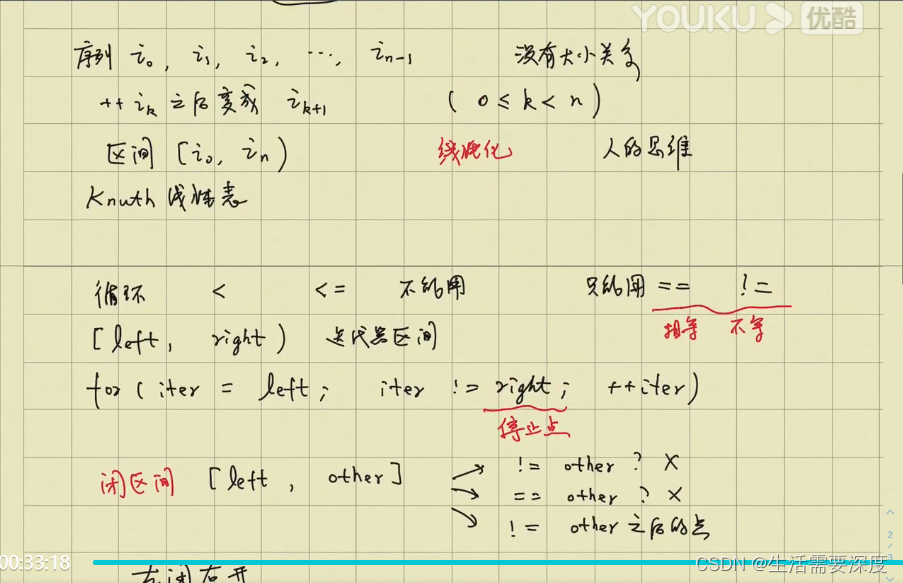

For the second part, I needed to learn a bit about writing structs with methods and about goroutines. One of the main differences from C++ and Python is that Golang does not support full object-oriented programming; mainly, it does not support inheritance. The way methods are defined on structs is also quite different from any other language I have come across.

The Featurestore we use has a Golang client, so all I had to do was write a wrapper around it to read large numbers of entities concurrently.

The basic structure I wanted was:

    import "context"

    type VertexFeatureStoreClient struct {
        // client: reference to GCP's client
    }

    func NewVertexFeatureStoreClient(ctx context.Context) (*VertexFeatureStoreClient, error) {
        // client creation code
    }

    func (vfs *VertexFeatureStoreClient) GetFeaturesByIdsChunk(ctx context.Context, featurestore, entityName string, entityIds []string, featureList []string) (map[string]map[string]interface{}, error) {
        // fetch code for 100 items
    }

    func (vfs *VertexFeatureStoreClient) GetFeaturesByIds(ctx context.Context, featurestore, entityName string, entityIds []string, featureList []string) (map[string]map[string]interface{}, error) {
        const chunkSize = 100 // limit from GCP

        // number of chunks, rounded up
        numChunks := (len(entityIds) + chunkSize - 1) / chunkSize

        // Buffered channels: a goroutine can always deliver its result,
        // even if we return early on the first error.
        featureChannel := make(chan map[string]map[string]interface{}, numChunks)
        errorChannel := make(chan error, numChunks)

        // run each chunk fetch concurrently
        for i := 0; i < len(entityIds); i += chunkSize {
            end := i + chunkSize
            if end > len(entityIds) {
                end = len(entityIds)
            }
            go func(ents []string) {
                features, err := vfs.GetFeaturesByIdsChunk(ctx, featurestore, entityName, ents, featureList)
                if err != nil {
                    errorChannel <- err
                    return
                }
                featureChannel <- features
            }(entityIds[i:end])
        }

        // collect exactly one message per chunk (zero iterations for empty input)
        results := make(map[string]map[string]interface{}, len(entityIds))
        for n := 0; n < numChunks; n++ {
            select {
            case err := <-errorChannel:
                return nil, err
            case res := <-featureChannel:
                for k, v := range res {
                    results[k] = v
                }
            }
        }
        return results, nil
    }

    func (vfs *VertexFeatureStoreClient) Close() error {
        // close code
    }

A tip about goroutines

Use channels as much as possible. Many tutorials use sync.WaitGroup with goroutines; that is a lower-level API that you won't need in most cases. Channels are an elegant way to run goroutines: even if you don't need to pass data, you can send flags over a channel to collect completions. Goroutines are cheap virtual threads, so you don't have to worry about creating too many of them or about running on multiple cores. Recent versions of Golang run them across cores for you.
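As a small illustration of sending flags over a channel, the sketch below waits for a batch of goroutines using only a channel of empty structs. The `runAll` function and its squaring workload are hypothetical; `sync.WaitGroup` would work here as well, and the channel version is shown only because it is the style this tip recommends.

```go
package main

import "fmt"

// runAll starts n goroutines, waits for all of them through a done channel,
// and returns the sum of their results.
func runAll(n int) int {
	done := make(chan struct{}, n) // one completion flag per goroutine
	results := make([]int, n)

	for i := 0; i < n; i++ {
		go func(i int) {
			results[i] = i * i // each goroutine writes only its own slot
			done <- struct{}{} // signal completion, no data needed
		}(i)
	}

	// Receiving n flags guarantees every write above has finished.
	for i := 0; i < n; i++ {
		<-done
	}

	sum := 0
	for _, r := range results {
		sum += r
	}
	return sum
}

func main() {
	fmt.Println(runAll(10)) // prints 285
}
```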

The third part was the hardest. It took about a day to debug. So if your use case does not need complex sampling and C++, I suggest using Gonum directly; you will save yourself a lot of time.

Coming from Cython, I didn't realize that I would have to compile the C++ file manually and load it through the cgo include flags.

The header file:

    #ifndef BETA_DIST_H
    #define BETA_DIST_H

    #ifdef __cplusplus
    extern "C"
    {
    #endif

    double beta_sample(double, double, long);

    #ifdef __cplusplus
    }
    #endif

    #endif

Note the extern "C": it is needed for C++ code to be usable from Go, because of name mangling; plain C does not need it. Another gotcha: I couldn't have any #include statements in the header file, because cgo then failed to link (I never found out why; a likely cause is that cgo compiles the header as C, so C++ headers cannot be parsed). So I moved those statements into the .cpp file.

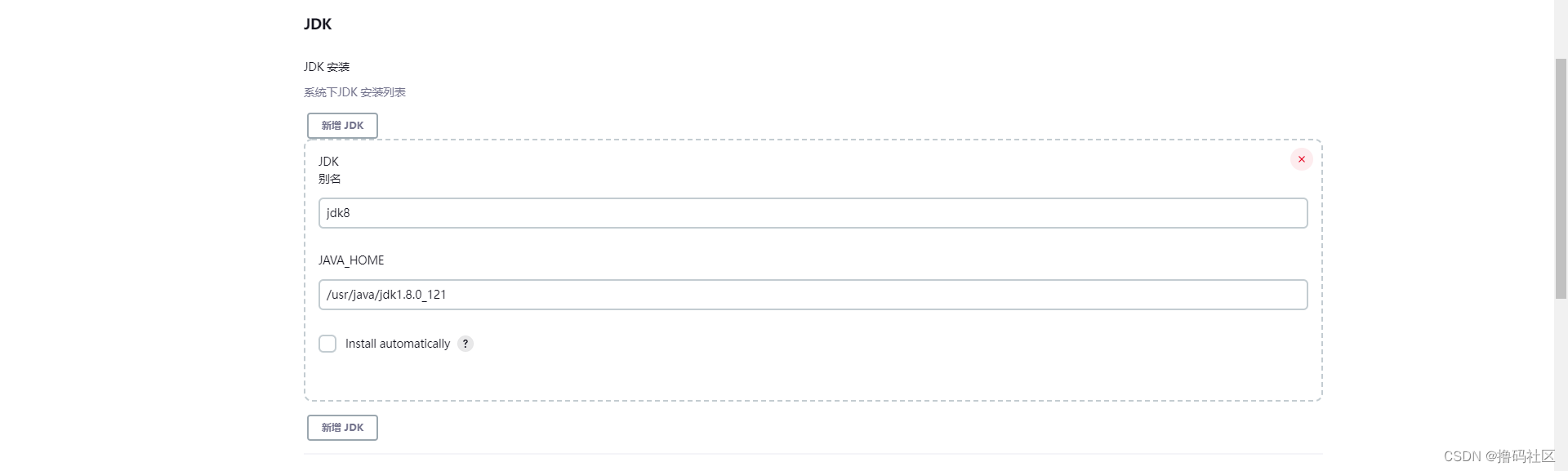

Compile it:

g++ -fPIC -I/usr/local/include -L/usr/local/lib betadist.cpp -shared -o libbetadist.so

Once it is compiled, you can use it from cgo.

The cgo wrapper file:

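    package betadist // package name is an assumption; use your own

    /*
    #cgo CPPFLAGS: -I${SRCDIR}/cbetadist
    #cgo CPPFLAGS: -I/usr/local/include
    #cgo LDFLAGS: -Wl,-rpath,${SRCDIR}/cbetadist
    #cgo LDFLAGS: -L${SRCDIR}/cbetadist
    #cgo LDFLAGS: -L/usr/local/lib
    #cgo LDFLAGS: -lstdc++
    #cgo LDFLAGS: -lbetadist
    #include <betadist.hpp>
    */
    import "C" // must directly follow the comment above, with no blank line in between

    // Betasample wraps the C++ beta_sample function exposed through the C header.
    func Betasample(alpha, beta float64, random int) float64 {
        return float64(C.beta_sample(C.double(alpha), C.double(beta), C.long(random)))
    }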

Note that -lbetadist in LDFLAGS is what links libbetadist.so. You must also run export DYLD_LIBRARY_PATH=/fullpath_to/folder_containing_so_file/ (this is the macOS variable; on Linux the equivalent is LD_LIBRARY_PATH). After that I could run go run . and it worked like any other Go project.

Integrating these pieces with a simple model struct and a predict method was straightforward and took much less time.

Results

| Metric | Python | Go |
|---|---|---|
| Max RPS | 192 | 819 |
| Max latency | 78 ms | 110 ms |
| Max CPU util. | ~20% | ~55% |

That is a 4.3x increase in RPS, which brings our minimum node count down from 80 to 19, a huge cost advantage. The maximum latency is slightly higher, but that is acceptable, because the Python service is already saturated at 192 RPS and degrades significantly if traffic goes beyond that.

Should I convert all my models to Golang?

Short answer: no.

Long answer: Go has great advantages for serving, but Python is still the king of experimentation. I would only recommend Go for base models that are simple and long-running, not for experiments. Go is still not very mature for complex ML use cases.

So, the elephant in the room: why not Rust?

Well, Schiff did it. Take a look. It is even faster than Go.

边栏推荐

猜你喜欢

随机推荐

mysql8.0JSON_ Instructions for using contains

Thymeleaf 常用函数

Webrtc learning (II)

实现一个博客系统----使用模板引擎技术

裁员下的上海

Microframe technology won the "cloud tripod Award" at the global Cloud Computing Conference!

外盘入金都不是对公转吗,那怎么保障安全?

不相交集

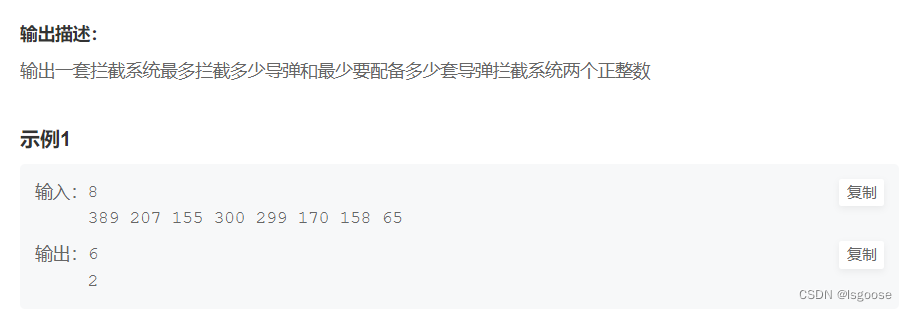

Niuke: intercepting missiles

Photoshop插件-动作相关概念-非加载执行动作文件中动作-PS插件开发

How can non-technical departments participate in Devops?

Implement a blog system -- using template engine technology

03_ Dataimport of Solr

ASP.NET大型外卖订餐系统源码 (PC版+手机版+商户版)

危机重重下的企业发展,数字化转型到底是不是企业未来救星

【華為機試真題詳解】歡樂的周末

Thymeleaf th:with局部变量的使用

【学习笔记】阶段测试1

最长公共子序列 - 动态规划

CPU设计相关笔记

![[detailed explanation of Huawei machine test] character statistics and rearrangement](/img/0f/972cde8c749e7b53159c9d9975c9f5.png)

![[learning notes] stage test 1](/img/22/ad16375d8d1510c2ec75c56403a8bf.png)