
Video player (II): video decoding

2022-06-30 07:19:00 【Wood fire zero】


1. FFmpeg Decoding process

2. Code

```cpp
extern "C" {
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
}
#include <cstdio>
#include <string>

std::string err2str(int err); // helper, defined below

// Decode the first video stream of 1.mp4 and append every frame to 1.yuv as raw YUV420P
void decode_to_yuv()
{
    std::string input_file = "1.mp4";
    std::string output_file = "1.yuv";

    FILE *out_fd = nullptr;
    AVFormatContext *fmt_ctx = nullptr;
    AVCodecContext *codec_ctx = nullptr;
    SwsContext *img_convert_ctx = nullptr;
    AVPacket *packet = nullptr;
    AVFrame *frame = nullptr;
    AVFrame *yuv_frame = nullptr;

    // Release whatever has been allocated so far (every free function below accepts null)
    auto cleanup = [&]()
    {
        if (out_fd)
            fclose(out_fd);
        av_frame_free(&yuv_frame);
        av_frame_free(&frame);
        av_packet_free(&packet);
        sws_freeContext(img_convert_ctx);
        avcodec_free_context(&codec_ctx);
        avformat_close_input(&fmt_ctx); // also frees the context, so no avformat_free_context()
    };

    // Create output file
    out_fd = fopen(output_file.c_str(), "wb");
    if (!out_fd)
    {
        printf("can't open output file\n");
        return;
    }

    // Open the input video file (avformat_open_input allocates fmt_ctx itself when it is null)
    int ret = avformat_open_input(&fmt_ctx, input_file.c_str(), nullptr, nullptr);
    if (ret < 0)
    {
        av_log(nullptr, AV_LOG_ERROR, "can not open input: %s\n", err2str(ret).c_str());
        cleanup();
        return;
    }

    // Read the stream information of the file
    ret = avformat_find_stream_info(fmt_ctx, nullptr);
    if (ret < 0)
    {
        av_log(nullptr, AV_LOG_ERROR, "avformat_find_stream_info failed: %s\n", err2str(ret).c_str());
        cleanup();
        return;
    }

    // Print stream information; the last argument is 0 for an input and 1 for an output context
    //av_dump_format(fmt_ctx, 0, input_file.c_str(), 0);

    // Find the index of the first video stream
    int video_index = -1;
    for (unsigned int i = 0; i < fmt_ctx->nb_streams; ++i)
    {
        if (fmt_ctx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO)
        {
            video_index = static_cast<int>(i);
            break;
        }
    }
    if (video_index == -1)
    {
        av_log(nullptr, AV_LOG_ERROR, "can not find video\n");
        cleanup();
        return;
    }

    // Find a decoder for the video stream and copy the stream parameters into its context
    const AVCodecParameters *par = fmt_ctx->streams[video_index]->codecpar;
    const AVCodec *video_codec = avcodec_find_decoder(par->codec_id);
    if (!video_codec)
    {
        av_log(nullptr, AV_LOG_ERROR, "can not find decoder\n");
        cleanup();
        return;
    }
    codec_ctx = avcodec_alloc_context3(video_codec);
    avcodec_parameters_to_context(codec_ctx, par);

    // Open the video decoder
    ret = avcodec_open2(codec_ctx, video_codec, nullptr);
    if (ret < 0)
    {
        av_log(nullptr, AV_LOG_ERROR, "avcodec_open2 failed: %s\n", err2str(ret).c_str());
        cleanup();
        return;
    }

    // Convert whatever pixel format the decoder outputs to YUV420P, keeping the same size
    img_convert_ctx = sws_getContext(codec_ctx->width, codec_ctx->height, codec_ctx->pix_fmt,
                                     codec_ctx->width, codec_ctx->height, AV_PIX_FMT_YUV420P,
                                     SWS_BICUBIC, nullptr, nullptr, nullptr);

    // packet: compressed data before decoding (replaces the deprecated av_init_packet)
    packet = av_packet_alloc();
    // frame: decoded data; the decoder allocates its buffers, so no av_frame_get_buffer() here
    frame = av_frame_alloc();
    // yuv_frame: converted YUV420P data, which needs buffers of its own
    yuv_frame = av_frame_alloc();
    if (!img_convert_ctx || !packet || !frame || !yuv_frame)
    {
        av_log(nullptr, AV_LOG_ERROR, "allocation failed\n");
        cleanup();
        return;
    }
    yuv_frame->width = codec_ctx->width;
    yuv_frame->height = codec_ctx->height;
    yuv_frame->format = AV_PIX_FMT_YUV420P;
    ret = av_frame_get_buffer(yuv_frame, 32);
    if (ret < 0)
    {
        av_log(nullptr, AV_LOG_ERROR, "av_frame_get_buffer failed: %s\n", err2str(ret).c_str());
        cleanup();
        return;
    }

    // Fetch every frame the decoder has ready, convert it and append it to the .yuv file
    auto receive_frames = [&]()
    {
        while (avcodec_receive_frame(codec_ctx, frame) >= 0)
        {
            sws_scale(img_convert_ctx, (const uint8_t **)frame->data, frame->linesize,
                      0, codec_ctx->height, yuv_frame->data, yuv_frame->linesize);
            // Write Y, U and V row by row: linesize can be larger than the visible width
            for (int p = 0; p < 3; ++p)
            {
                int w = (p == 0) ? codec_ctx->width : (codec_ctx->width + 1) / 2;
                int h = (p == 0) ? codec_ctx->height : (codec_ctx->height + 1) / 2;
                for (int y = 0; y < h; ++y)
                    fwrite(yuv_frame->data[p] + y * yuv_frame->linesize[p], 1, w, out_fd);
            }
        }
    };

    // Read one packet at a time and decode the ones that belong to the video stream
    while (av_read_frame(fmt_ctx, packet) >= 0)
    {
        if (packet->stream_index == video_index)
        {
            // Old API: avcodec_decode_video2 (deprecated)
            // New API: avcodec_send_packet and avcodec_receive_frame
            ret = avcodec_send_packet(codec_ctx, packet);
            if (ret < 0)
            {
                av_log(nullptr, AV_LOG_ERROR, "avcodec_send_packet failed: %s\n", err2str(ret).c_str());
                av_packet_unref(packet);
                break;
            }
            receive_frames();
        }
        av_packet_unref(packet);
    }

    // Drain: sending a null packet makes the decoder return the frames it still buffers
    avcodec_send_packet(codec_ctx, nullptr);
    receive_frames();

    cleanup();
}
```

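Here `err2str` is a small helper that turns an FFmpeg error code into readable text:

```cpp
extern "C" {
#include <libavutil/error.h>
}
#include <string>

// Convert an FFmpeg error code (a negative return value) into a readable message
std::string err2str(int err)
{
    char errStr[1024] = {0};
    av_strerror(err, errStr, sizeof(errStr));
    return errStr;
}
```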

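After the conversion to YUV, play the result with ffplay:

`ffplay -s 640x352 -pix_fmt yuv420p 1.yuv`

Here `-s 640x352` is the video width x height. A raw .yuv file has no header, so ffplay has to be told the frame size and the pixel format explicitly.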
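Because a raw .yuv file is just frames stored back to back, its size also tells you how many frames were written. A minimal sketch, assuming tightly packed YUV420P frames with no row padding (the helper names are hypothetical): each frame is one full-size Y plane plus U and V planes of half width and half height.

```cpp
#include <cassert>
#include <cstdint>

// Bytes in one tightly packed YUV420P frame: a full-size Y plane plus U and V planes
// subsampled by 2 horizontally and vertically (rounded up for odd sizes).
std::int64_t yuv420p_frame_size(int width, int height)
{
    assert(width > 0 && height > 0);
    const std::int64_t luma = static_cast<std::int64_t>(width) * height;
    const std::int64_t chroma = static_cast<std::int64_t>((width + 1) / 2) * ((height + 1) / 2);
    return luma + 2 * chroma;
}

// Number of complete frames contained in a raw YUV420P file of file_bytes bytes.
std::int64_t yuv420p_frame_count(std::int64_t file_bytes, int width, int height)
{
    return file_bytes / yuv420p_frame_size(width, height);
}
```

For a 640x352 video one frame takes 640 * 352 * 3 / 2 = 337920 bytes, so dividing the size of 1.yuv by that number gives the number of decoded frames.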

3. Explanation

3.1 sws_getContext

```cpp
struct SwsContext *sws_getContext(int srcW, int srcH, enum AVPixelFormat srcFormat,
                                  int dstW, int dstH, enum AVPixelFormat dstFormat,
                                  int flags, SwsFilter *srcFilter,
                                  SwsFilter *dstFilter, const double *param);
```

Parameters:

- `srcW`: width of the source video frame;

- `srcH`: height of the source video frame;

- `srcFormat`: pixel format of the source video frame;

- `dstW`: width of the converted video frame;

- `dstH`: height of the converted video frame;

- `dstFormat`: pixel format of the converted video frame;

- `flags`: the scaling algorithm to use, e.g. `SWS_BICUBIC`, `SWS_BILINEAR` or `SWS_POINT`;

- `srcFilter`, `dstFilter`: filters applied to the input / output image; pass NULL if no filtering is needed;

- `param`: extra parameters for scalers that can be tuned; NULL uses the defaults.

The function returns a pointer to a newly allocated `SwsContext` that holds the conversion settings, or NULL on error; release it with `sws_freeContext()`.
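The `flags` argument decides how each output pixel is computed from the input pixels. To show what scaling means at the pixel level, here is a hand-written nearest-neighbour resize of one 8-bit plane, conceptually the simplest choice (the one `SWS_POINT` selects). This is only an illustration: libswscale's own implementation and its exact sampling positions differ, and `resize_nearest` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Nearest-neighbour resize of one tightly packed 8-bit plane (src_w x src_h -> dst_w x dst_h).
// Each destination pixel copies the source pixel at index dst_index * src_size / dst_size.
std::vector<std::uint8_t> resize_nearest(const std::vector<std::uint8_t> &src,
                                         int src_w, int src_h, int dst_w, int dst_h)
{
    assert(src.size() == static_cast<std::size_t>(src_w) * src_h);
    std::vector<std::uint8_t> dst(static_cast<std::size_t>(dst_w) * dst_h);
    for (int y = 0; y < dst_h; ++y)
    {
        const int sy = y * src_h / dst_h; // source row for this output row
        for (int x = 0; x < dst_w; ++x)
        {
            const int sx = x * src_w / dst_w; // source column for this output column
            dst[static_cast<std::size_t>(y) * dst_w + x] =
                src[static_cast<std::size_t>(sy) * src_w + sx];
        }
    }
    return dst;
}
```

Halving both dimensions this way keeps one pixel out of every 2x2 block (the `w/2, h/2` case of `sws_getContext`), while doubling them repeats every pixel in a 2x2 block. Filters such as bilinear or bicubic instead blend neighbouring pixels, which gives smoother results.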

Examples (`YV12` and `NV12` stand for the corresponding pixel formats):

```cpp
sws_getContext(w, h, YV12, w, h, NV12, 0, NULL, NULL, NULL);     // YV12 -> NV12 pixel format conversion
sws_getContext(w, h, YV12, w/2, h/2, YV12, 0, NULL, NULL, NULL); // shrink a YV12 image to 1/4 of its original area
sws_getContext(w, h, YV12, 2*w, 2*h, NV12, 0, NULL, NULL, NULL); // enlarge a YV12 image to 4 times its area and convert it to NV12
```
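The YV12 to NV12 conversion changes only the layout of the samples, not their values or the image size. As an illustration (a hand-written sketch, not libswscale's code; `yv12_to_nv12` is a hypothetical helper, and even dimensions with no row padding are assumed): YV12 stores a Y plane followed by a V plane and a U plane, each chroma plane being (w/2) x (h/2), while NV12 keeps the Y plane and stores U and V interleaved in one plane.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Repack one tightly packed YV12 frame (Y, then V, then U) into NV12 (Y, then U/V pairs).
std::vector<std::uint8_t> yv12_to_nv12(const std::vector<std::uint8_t> &yv12, int w, int h)
{
    assert(w % 2 == 0 && h % 2 == 0);
    const std::size_t y_size = static_cast<std::size_t>(w) * h;
    const std::size_t c_size = y_size / 4; // one chroma plane: (w/2) x (h/2)
    assert(yv12.size() == y_size + 2 * c_size);

    std::vector<std::uint8_t> nv12(yv12.size());
    // The luma plane is identical in both layouts
    std::copy(yv12.begin(), yv12.begin() + static_cast<std::ptrdiff_t>(y_size), nv12.begin());

    const std::uint8_t *v = yv12.data() + y_size;          // YV12: V plane comes first
    const std::uint8_t *u = yv12.data() + y_size + c_size; // then the U plane
    std::uint8_t *uv = nv12.data() + y_size;
    for (std::size_t i = 0; i < c_size; ++i)
    {
        uv[2 * i] = u[i];     // NV12: U first
        uv[2 * i + 1] = v[i]; // followed by V
    }
    return nv12;
}
```

Both formats use the same number of bytes (w * h * 3 / 2); only the chroma part is rearranged.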

3.2 sws_scale

```cpp
int sws_scale(struct SwsContext *c, const uint8_t *const srcSlice[],
              const int srcStride[], int srcSliceY, int srcSliceH,
              uint8_t *const dst[], const int dstStride[]);
```

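Parameters:

- `c`: the context obtained from `sws_getContext`;

- `srcSlice[]`: pointers to the source planes (e.g. `frame->data`);

- `srcStride[]`: number of bytes per row of each source plane (e.g. `frame->linesize`), which can be larger than the actual width;

- `srcSliceY`: position in the source image of the first row of the slice to process; here the whole image is processed from the top, so it is 0;

- `srcSliceH`: height of the source slice, i.e. the number of rows to process; here the full frame height;

- `dst[]`: pointers to the destination planes;

- `dstStride[]`: number of bytes per row of each destination plane, like `srcStride[]`.

The function returns the height of the output slice.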

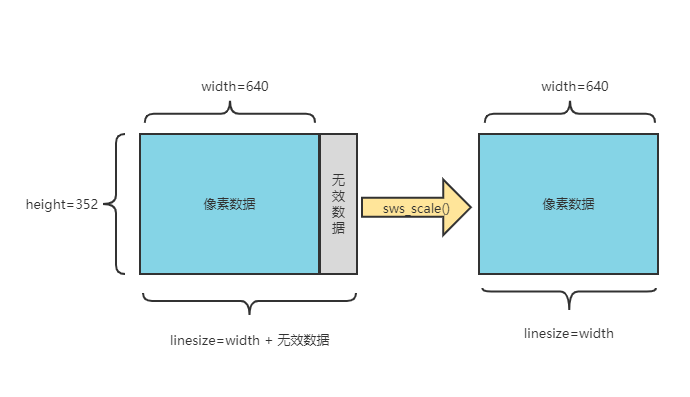

After decoding, the YUV pixel data is stored in `data[0]`, `data[1]` and `data[2]` of the `AVFrame`, but the rows are not stored back to back: each row of valid pixels may be followed by some invalid padding bytes.

Take the luma (Y) plane as an example: `data[0]` holds `linesize[0] * height` bytes. For alignment and performance reasons, `linesize[0]` is often not equal to the width but larger. `sws_scale()` reads the source rows using `srcStride` and writes the destination rows using `dstStride`, so its output is free of padding only if the destination buffer has `linesize` equal to the width. A destination frame allocated with `av_frame_get_buffer(frame, 32)` may still have padded rows when the width is not suitably aligned, so the safe way to save a plane is to copy `width` bytes from each row, stepping through the buffer by `linesize`.
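Dropping the padding amounts to copying `width` bytes out of every row and skipping the remaining `linesize - width` bytes. A minimal plain-C++ sketch (no FFmpeg needed; `pack_plane` is a hypothetical helper): pass `frame->data[i]` and `frame->linesize[i]` for plane `i`, together with that plane's width and height (for YUV420P the chroma planes are half size, rounded up).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copy one plane whose rows start `linesize` bytes apart into a tightly packed
// width x height buffer, dropping the padding at the end of each row.
std::vector<std::uint8_t> pack_plane(const std::uint8_t *data, int linesize, int width, int height)
{
    assert(linesize >= width);
    std::vector<std::uint8_t> packed(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; ++y)
    {
        std::memcpy(packed.data() + static_cast<std::size_t>(y) * width, // row y of the output
                    data + static_cast<std::size_t>(y) * linesize,       // row y of the input
                    static_cast<std::size_t>(width));                    // only the valid pixels
    }
    return packed;
}
```

Writing the packed Y, U and V planes one after another gives exactly the layout that `ffplay -pix_fmt yuv420p` expects.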

4. References

https://ffmpeg.org/doxygen/trunk/group__libsws.html#gae531c9754c9205d90ad6800015046d74

https://www.cnblogs.com/cyyljw/p/8676062.html

FFmpeg code: converting H.264 to YUV

Video course 《Making a video player based on FFmpeg + SDL》
