Swin Transformer code explanation

2022-06-12 16:45:00 【QT-Smile】

The initial downsampling factor is 4, so patch_size=4.

3.

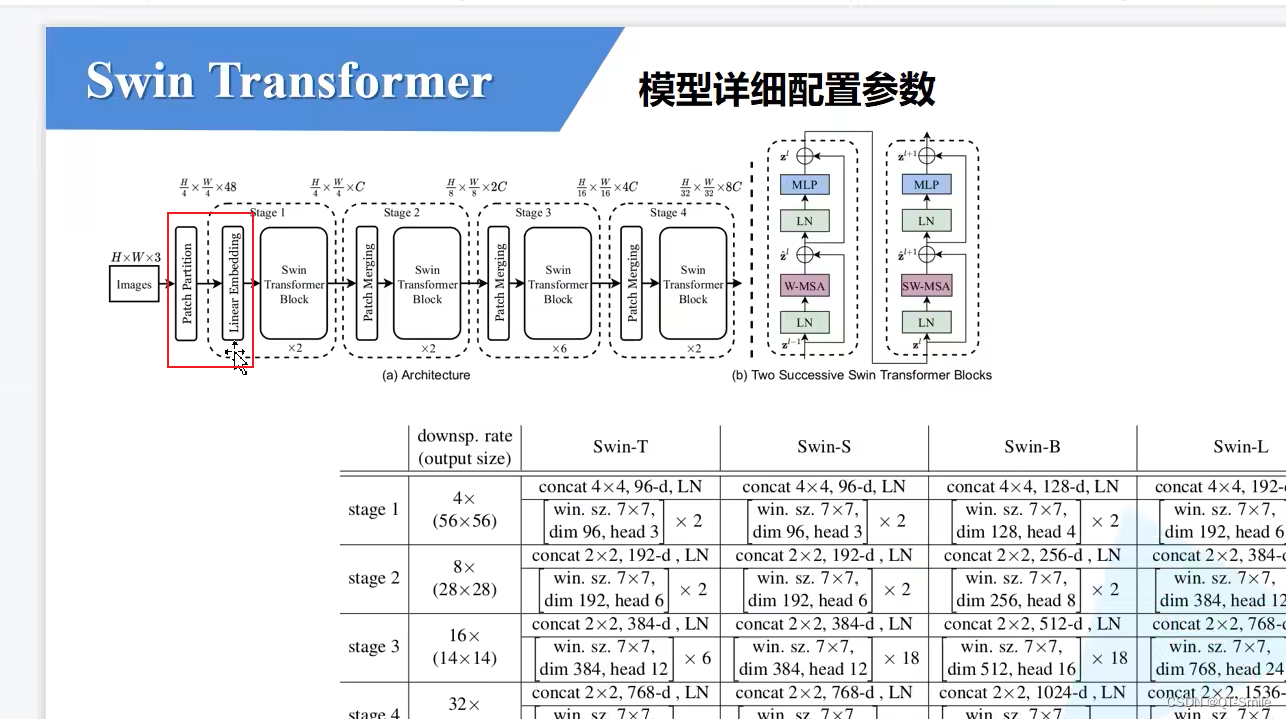

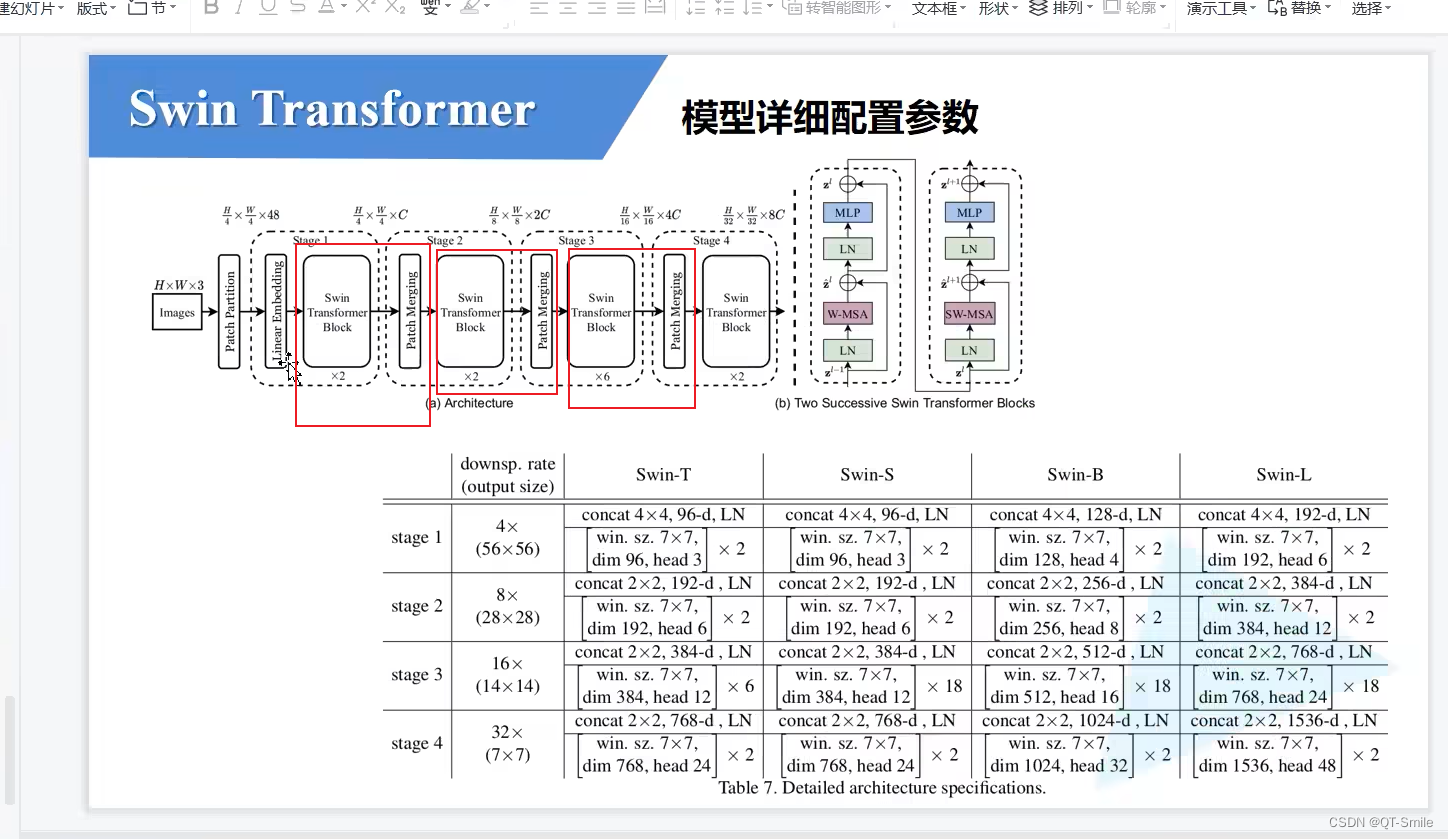

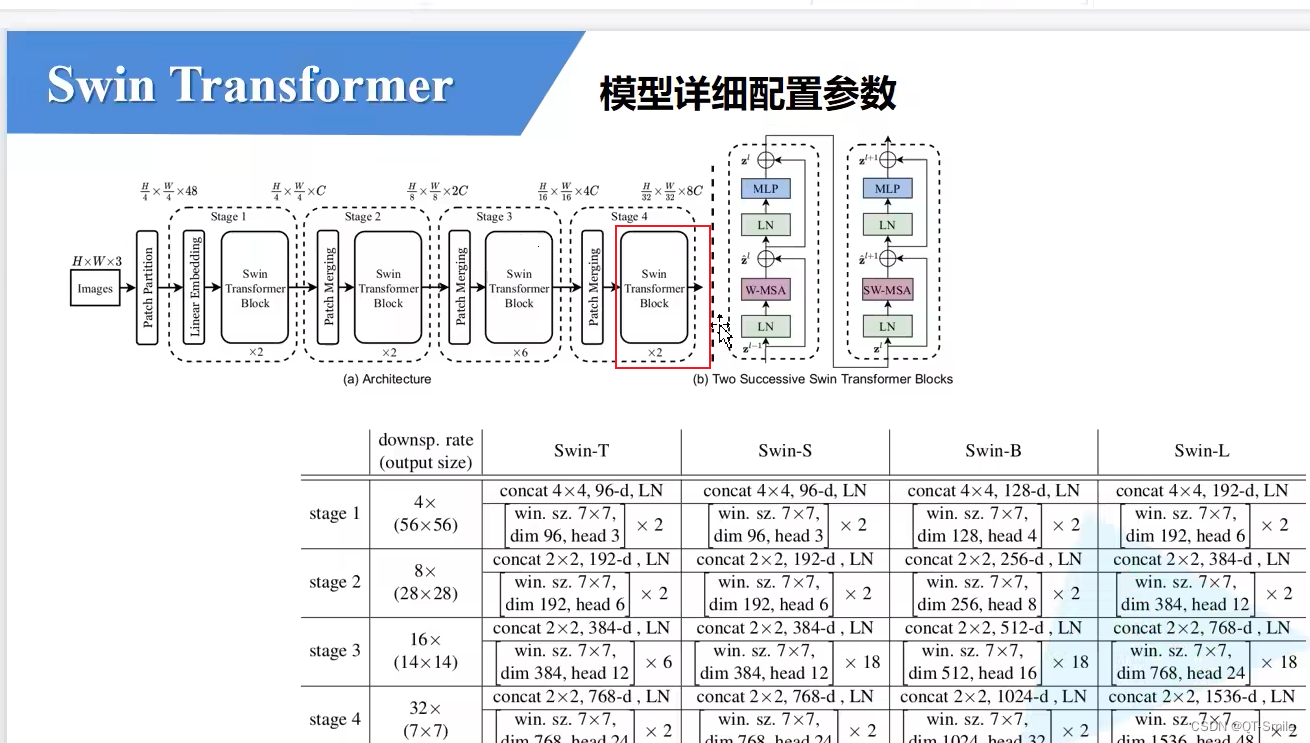

embed_dim=96 is the C in the figure: the number of channels after the first Linear Embedding layer.

4.

mlp_ratio is the factor by which the first fully connected layer of the MLP expands the number of channels (4 by default).

6.

qkv_bias: whether to use a bias term for the q, k, v projections in Multi-Head Attention. It is enabled by default.

7.

The first dropout rate (drop_rate) is applied right after PatchEmbed.

The second dropout rate (attn_drop_rate) is used inside Multi-Head Attention.

9.

The third rate (drop_path_rate) is used in every Swin Transformer Block. It grows gradually from 0 to 0.1 across the blocks.

10.

By default, patch_norm is applied right after PatchEmbed.

11.

use_checkpoint: not used by default. Enabling it only saves memory.

12.

This corresponds to the number of Swin Transformer Blocks in each stage.

13.

This corresponds to the number of output channels of stage 4.
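As a rough check of the channel counts, here is a minimal sketch. It assumes embed_dim=96, four stages, and a channel count that doubles with each Patch Merging step; the helper name `stage_channels` is made up for illustration.

```python
def stage_channels(embed_dim=96, num_layers=4):
    """Channel count at the output of each stage (hypothetical helper)."""
    # Every stage after the first doubles the channels of the previous one.
    return [embed_dim * 2 ** i for i in range(num_layers)]


channels = stage_channels()
num_features = channels[-1]  # output channels of stage 4
print(channels, num_features)
```

With these assumed defaults the last entry is 96 * 2^3.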

14.

PatchEmbed splits the image into non-overlapping patches.

15.

PatchEmbed corresponds to Patch Partition and Linear Embedding in the architecture diagram.

16.

Patch Partition is actually implemented with a convolution.

17.

Padding is added on the right along the width and at the bottom along the height.

18.

Flatten starting from dimension 2.
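The padding and flattening steps can be followed with plain shape arithmetic. The sketch below is not the model code: `patch_embed_shape` is a hypothetical helper, and it assumes the convolution uses kernel_size == stride == patch_size and that the flattened tensor is transposed into a (B, H*W, C) layout.

```python
def patch_embed_shape(batch, height, width, patch_size=4, embed_dim=96):
    """Return (padded_h, padded_w, token_shape) for a PatchEmbed-style layer."""
    # Pad on the right and at the bottom so both sides divide by patch_size.
    pad_w = (patch_size - width % patch_size) % patch_size
    pad_h = (patch_size - height % patch_size) % patch_size
    padded_h, padded_w = height + pad_h, width + pad_w

    # A convolution with kernel_size == stride == patch_size yields one
    # output position per non-overlapping patch.
    out_h, out_w = padded_h // patch_size, padded_w // patch_size

    # Flattening from dimension 2 turns (B, C, H, W) into (B, C, H*W);
    # swapping the last two dimensions gives the token layout (B, H*W, C).
    return padded_h, padded_w, (batch, out_h * out_w, embed_dim)


print(patch_embed_shape(1, 224, 224))
print(patch_embed_shape(1, 225, 222))
```

The second call shows an input that is not a multiple of 4 being padded before it is cut into patches.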

19.

This is the first drop_rate mentioned above, applied directly after PatchEmbed.

20.

Each Swin Transformer Block gets its own drop path rate, increasing from 0 all the way up to drop_path_rate.
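One way to picture this schedule is a linearly spaced list with one value per block. The sketch below assumes linear spacing and depths=(2, 2, 6, 2); `drop_path_schedule` is an illustrative name, not a function from the model code.

```python
def drop_path_schedule(depths=(2, 2, 6, 2), drop_path_rate=0.1):
    """Linearly spaced drop path rates, one per block (illustrative helper)."""
    total = sum(depths)
    if total == 1:
        return [0.0]
    step = drop_path_rate / (total - 1)
    # Block 0 gets 0.0; the last block gets (approximately) drop_path_rate.
    return [i * step for i in range(total)]


dpr = drop_path_schedule()
print([round(r, 4) for r in dpr])
```

Earlier blocks are dropped less often than later ones, so the shallow layers are regularized more gently.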

21.

Each stage is built in a loop. The stages in the code differ slightly from the stages in the paper.

Stage 4 has no Patch Merging, only Swin Transformer Blocks.

depth is the number of Swin Transformer Blocks to stack in this stage.

23.

drop_rate: applied directly after PatchEmbed

attn_drop: the rate used inside this stage

dpr: the rates used by the different Swin Transformer Blocks in this stage

24.

The first 3 stages are built with PatchMerging, but the last stage has none.

Here self.num_layers=4.
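The stage loop can be sketched without any model code. This is only an outline under assumptions: depths=(2, 2, 6, 2), linearly spaced drop path rates, and a hypothetical helper `plan_stages` that records what each stage would receive.

```python
def plan_stages(depths=(2, 2, 6, 2), drop_path_rate=0.1):
    """Describe each stage: block count, per-block drop path rates, and
    whether a Patch Merging layer follows (illustrative only)."""
    num_layers = len(depths)
    total = sum(depths)
    dpr = [drop_path_rate * i / max(total - 1, 1) for i in range(total)]

    stages = []
    for i_layer in range(num_layers):
        start = sum(depths[:i_layer])
        stop = sum(depths[:i_layer + 1])
        stages.append({
            "depth": depths[i_layer],
            "drop_path": dpr[start:stop],               # this stage's slice
            "patch_merging": i_layer < num_layers - 1,  # none in the last stage
        })
    return stages


for i, stage in enumerate(plan_stages(), start=1):
    print(i, stage["depth"], stage["patch_merging"])
```

Slicing the rate list by cumulative depth is what gives every block in every stage its own value.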

26.

The feature map passed into Patch Merging has shape x: (B, H*W, C).

28.

When the H and W of x are not multiples of 2, padding is needed: one column of zeros on the right and one row of zeros at the bottom.
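To make the padding and the 2x2 grouping concrete, here is a small pure-Python sketch on a single-channel grid. The function name and the order in which the four neighbours are concatenated are assumptions for illustration; the real layer works on tensors and follows the grouping with a linear layer.

```python
def patch_merge_grid(grid):
    """Group a 2D grid (list of rows) into 2x2 neighbourhoods.

    Returns a grid of half the height and width (rounded up) in which
    every cell holds the 4 merged values, i.e. 4x the channels before a
    linear layer would reduce them to 2x.
    """
    h, w = len(grid), len(grid[0])
    # Pad one column of zeros on the right and/or one row at the bottom
    # when the size is odd.
    padded = [row + [0] * (w % 2) for row in grid]
    if h % 2:
        padded.append([0] * (w + w % 2))

    merged = []
    for r in range(0, len(padded), 2):
        merged.append([
            # assumed order: top-left, bottom-left, top-right, bottom-right
            [padded[r][c], padded[r + 1][c],
             padded[r][c + 1], padded[r + 1][c + 1]]
            for c in range(0, len(padded[0]), 2)
        ])
    return merged


grid = [[1, 2, 3],
        [4, 5, 6],
        [7, 8, 9]]
for row in patch_merge_grid(grid):
    print(row)
```

The 3x3 input is padded to 4x4 and comes out as a 2x2 grid with four values per cell.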

31.

For a classification model, the following code needs to be added.

32.

Initialize the weights of the whole model.

33.

Perform 4x downsampling.

34.

L = H*W, the number of tokens.

35.

Apply dropout at the configured rate.

36.

Iterate through each stage.
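The shapes seen during the forward pass can be traced with simple arithmetic. The sketch below assumes a 224x224 input, patch_size=4, embed_dim=96, and the code's convention that each stage except the last ends with Patch Merging; `trace_shapes` is a hypothetical helper.

```python
def trace_shapes(img_size=224, patch_size=4, embed_dim=96, num_stages=4):
    """List (H, W, L, C) after patch embedding and after each stage."""
    h = w = img_size // patch_size   # 4x downsampling in PatchEmbed
    c = embed_dim
    shapes = [(h, w, h * w, c)]      # L = H * W tokens
    for i in range(num_stages):
        # Blocks keep the shape; Patch Merging (absent in the last stage)
        # halves H and W and doubles C.
        if i < num_stages - 1:
            h, w, c = (h + 1) // 2, (w + 1) // 2, c * 2
        shapes.append((h, w, h * w, c))
    return shapes


for shape in trace_shapes():
    print(shape)
```

The first tuple is the output of PatchEmbed and the remaining four are the stage outputs.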

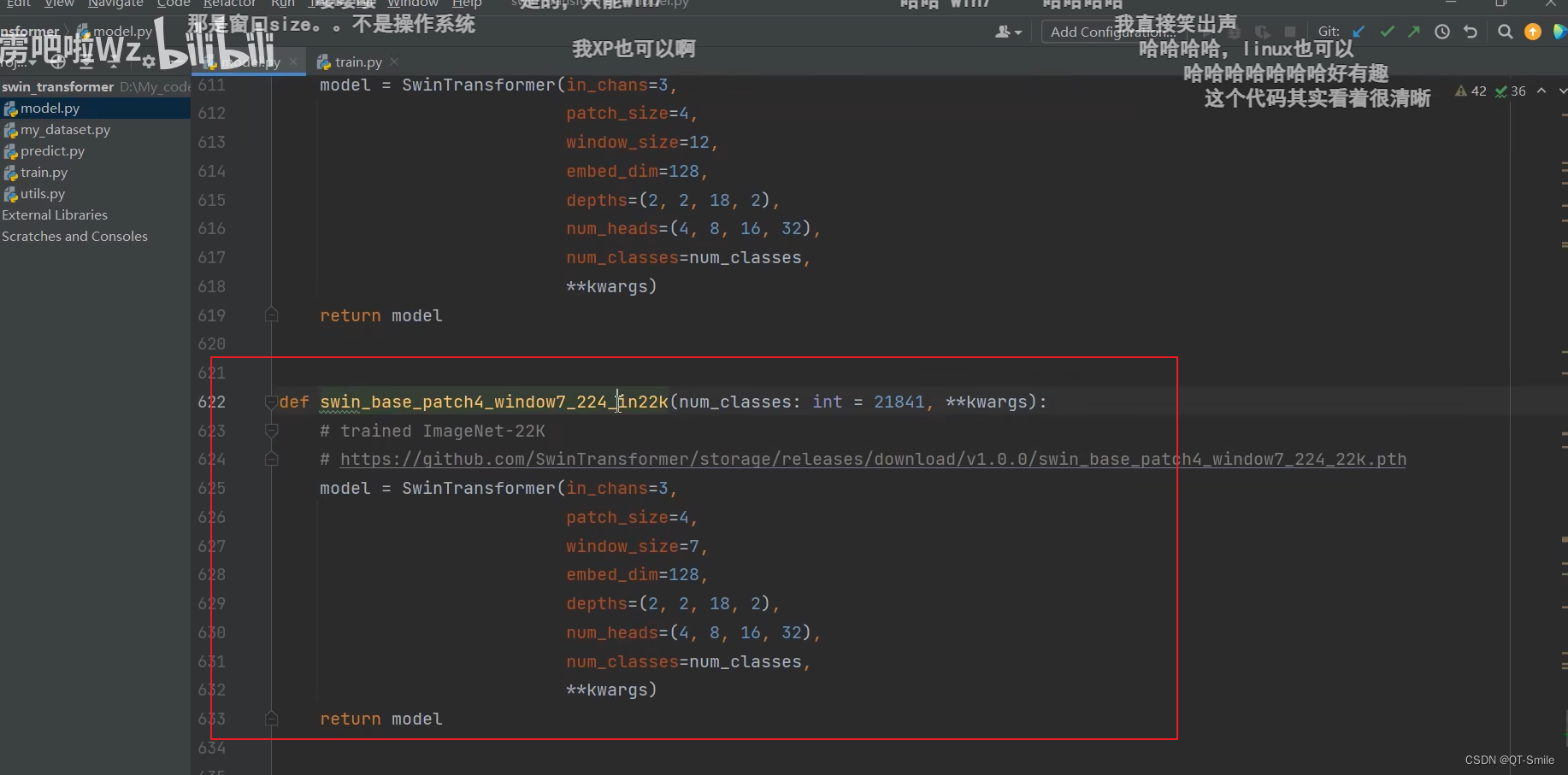

The following code builds the Swin-T model.

Pretrained weights for Swin_T on ImageNet-1K

39.

Swin_B(window7_224)

Swin_B(window12_384)

41.

The original feature map needs to be shifted to the right and down. The shift distance equals the window size divided by 2, rounded down.

self.shift_size is this shift distance.

42.

The number of Swin Transformer Blocks in one stage.

When shift_size=0: use W-MSA

When shift_size is not 0: use SW-MSA, with shift_size = self.shift_size
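A short sketch of how the shift size could be assigned per block. It assumes that even-indexed blocks use W-MSA and odd-indexed blocks use SW-MSA; `block_shift_sizes` is an illustrative helper, not part of the model.

```python
def block_shift_sizes(depth, window_size=7):
    """Shift size for each block of a stage (illustrative helper).

    Even-indexed blocks use W-MSA (shift 0); odd-indexed blocks use
    SW-MSA with a shift of window_size // 2.
    """
    shift = window_size // 2  # window size divided by 2, rounded down
    return [0 if i % 2 == 0 else shift for i in range(depth)]


for shift in block_shift_sizes(depth=6):
    kind = "W-MSA" if shift == 0 else "SW-MSA"
    print(shift, kind)
```

With window_size=7 the shifted blocks move by 3 positions.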

44.

A Swin Transformer Block uses either W-MSA or SW-MSA. A W-MSA block and an SW-MSA block together should not be regarded as a single Swin Transformer Block.

45.

depth is the number circled in the figure below.

46.

Downsampling here is implemented with Patch Merging.

47.

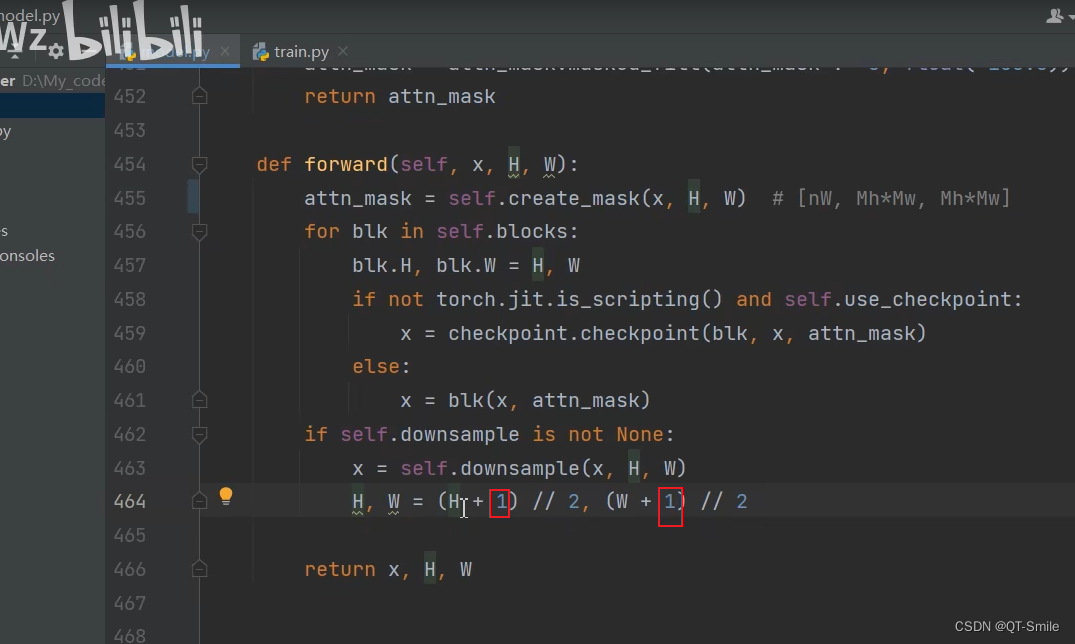

This is what SW-MSA actually uses.

48.

Swin Transformer Blocks do not change the height and width of the feature map, so every SW-MSA in a stage sees the same size. The size of attn_mask therefore never changes, and attn_mask only needs to be created once.
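Because the mask depends only on H, W, the window size, and the shift size, it can be computed once and reused by every shifted block. The following pure-Python sketch shows one way such a mask could be built: label nine regions, cut the label map into windows, and compare labels pairwise. The function name, the list-based layout, and the fill value of -100 are assumptions for illustration.

```python
import math


def create_attn_mask(height, width, window_size=7, shift_size=3):
    """Build one SW-MSA mask per window: 0 where two positions belong to
    the same region, -100 where they do not (a sketch, not library code)."""
    # Pad H and W up to multiples of the window size.
    hp = math.ceil(height / window_size) * window_size
    wp = math.ceil(width / window_size) * window_size

    # Label each position with a region id (9 regions in total).
    bounds = ((0, -window_size),
              (-window_size, -shift_size),
              (-shift_size, None))
    region = [[0] * wp for _ in range(hp)]
    count = 0
    for h_lo, h_hi in bounds:
        for w_lo, w_hi in bounds:
            for r in range(hp)[h_lo:h_hi]:
                for c in range(wp)[w_lo:w_hi]:
                    region[r][c] = count
            count += 1

    # Cut the label map into windows and compare labels pairwise.
    masks = []
    for r0 in range(0, hp, window_size):
        for c0 in range(0, wp, window_size):
            ids = [region[r][c]
                   for r in range(r0, r0 + window_size)
                   for c in range(c0, c0 + window_size)]
            masks.append([[0.0 if a == b else -100.0 for b in ids]
                          for a in ids])
    return masks


masks = create_attn_mask(8, 8, window_size=4, shift_size=2)
print(len(masks), len(masks[0]), len(masks[0][0]))
```

In this small case the top-left window lies in a single region, so its mask is all zeros, while the bottom-right window mixes four regions and contains -100 entries.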

50.

At this point, all Swin Transformer Blocks of one stage have been created.

51.

This is the Patch Merging downsampling. It halves the height and width.

52.

The +1 here handles the case where the incoming H and W are odd, which requires padding.
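The effect of the +1 is easy to check in isolation. A minimal sketch, with `merged_size` as a made-up helper name:

```python
def merged_size(h, w):
    """Height and width after Patch Merging, including odd-size padding."""
    # Adding 1 before the floor division rounds odd sizes up, which
    # accounts for the extra padded row or column.
    return (h + 1) // 2, (w + 1) // 2


print(merged_size(56, 56))
print(merged_size(7, 7))
```

Even sizes are simply halved, while an odd size such as 7 becomes 4 rather than 3.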

59.

The following two x have the same shape.
