当前位置:网站首页>Usage of collect_list in Scala105-Spark.sql

Usage of collect_list in Scala105-Spark.sql

2022-08-04 18:33:00 【51CTO】

SQL的基础逻辑,按照id分组,组内按照startTimeStr排序,拼接payamount组成array,array中元素排序,按照startTimeStr升序排列

2020-05-28 于南京市江宁区九龙湖

边栏推荐

猜你喜欢

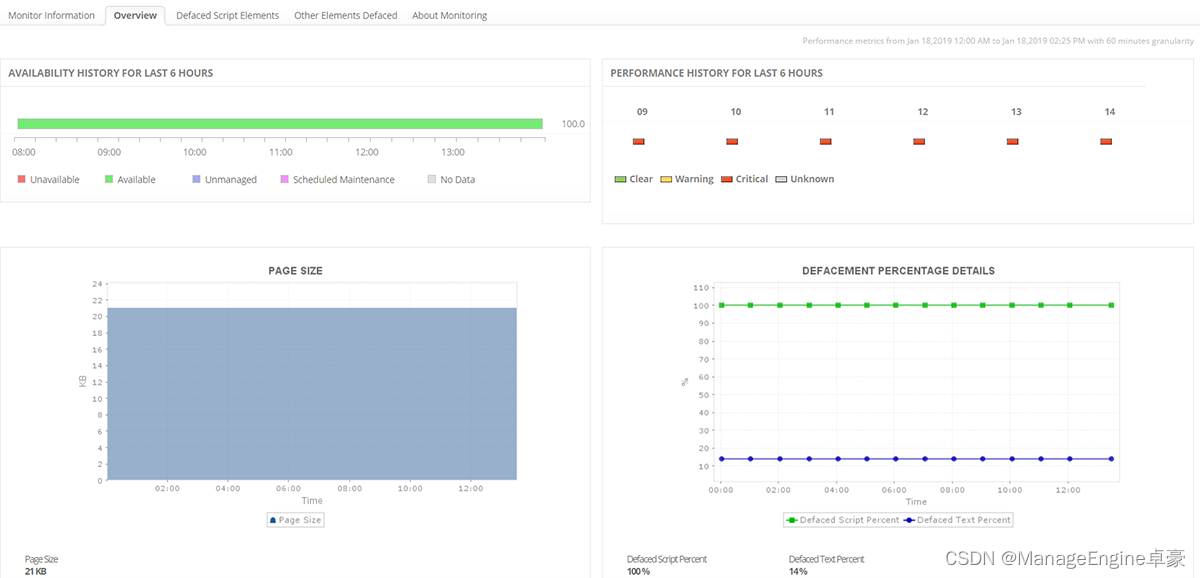

什么是网站监控,网站监控软件有什么用?

作业8.3 线程同步互斥机制条件变量

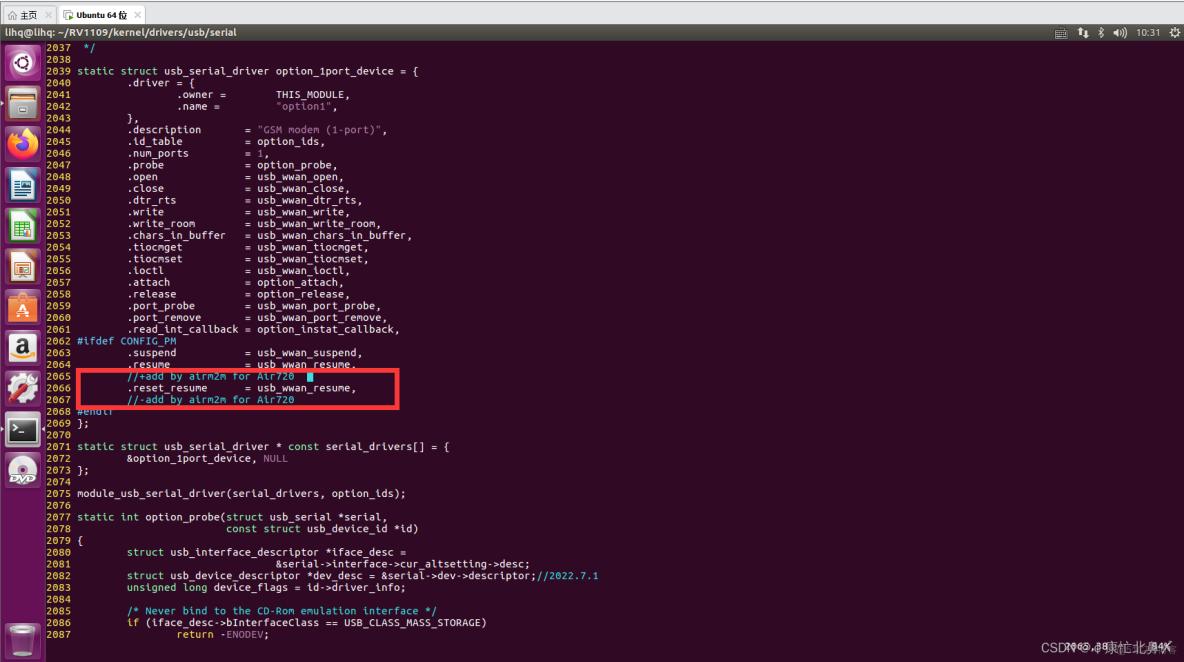

合宙Cat1 4G模块Air724UG配置RNDIS网卡或PPP拨号,通过RNDIS网卡使开发板上网(以RV1126/1109开发板为例)

网页端IM即时通讯开发:短轮询、长轮询、SSE、WebSocket

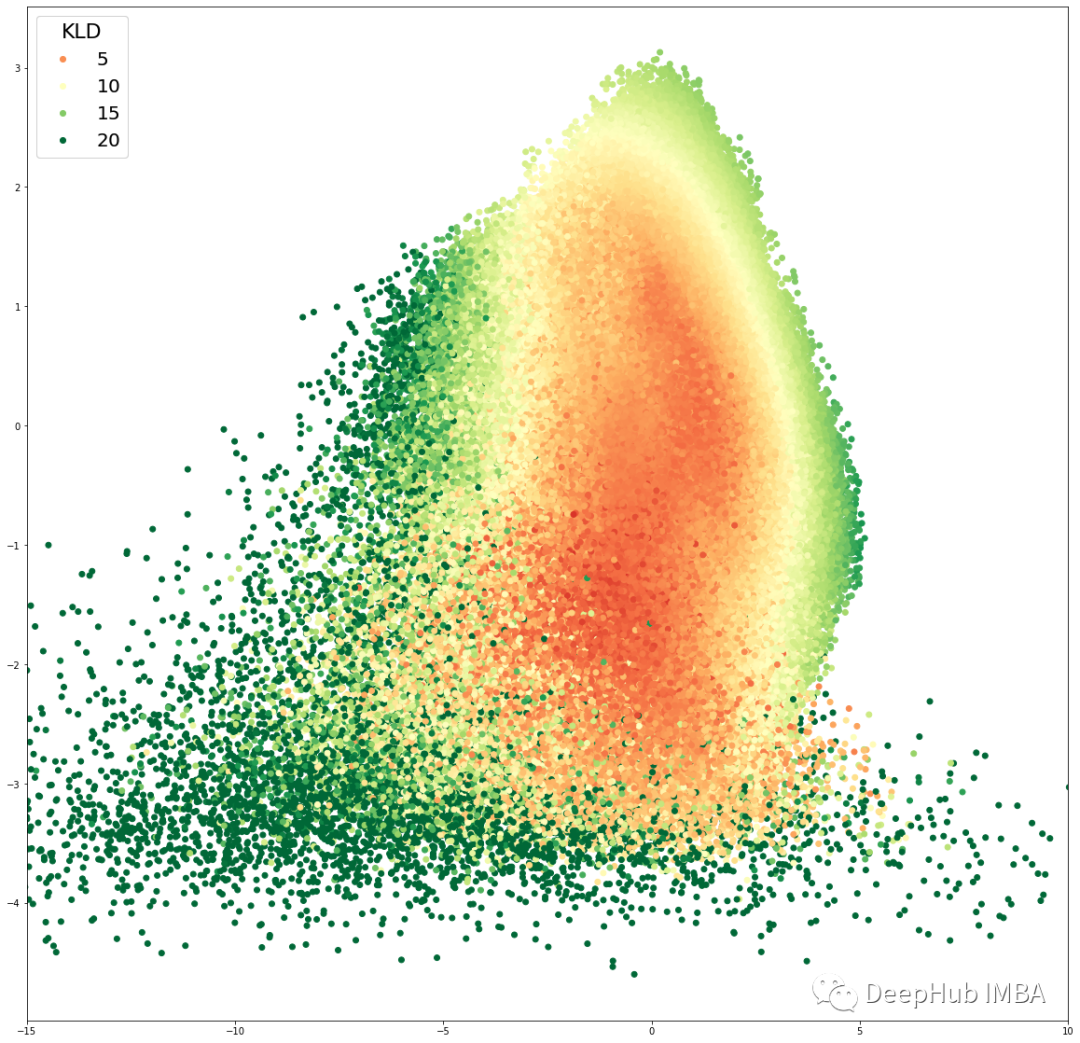

在表格数据集上训练变分自编码器 (VAE)示例

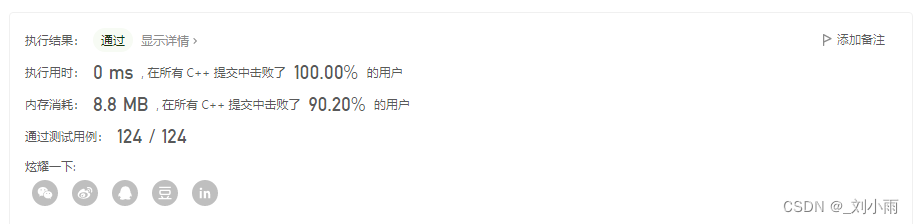

leetcode 14. 最长公共前缀

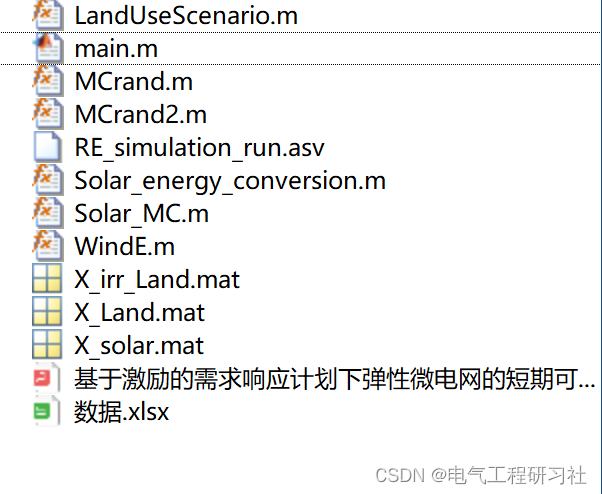

基于激励的需求响应计划下弹性微电网的短期可靠性和经济性评估(Matlab代码实现)

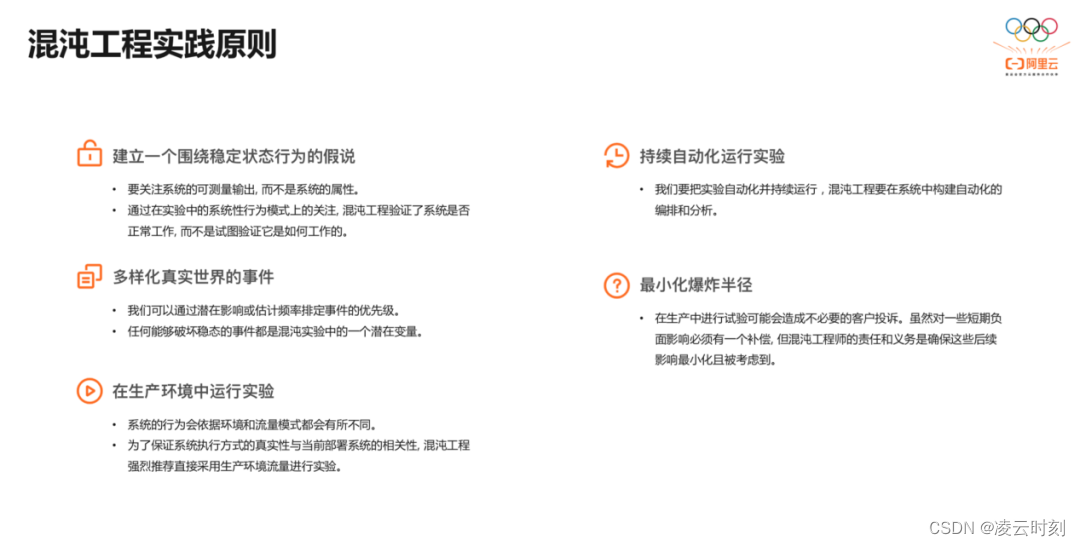

阿里云技术专家秦隆:云上如何进行混沌工程?

Flink/Scala - Storing data with RedisSink

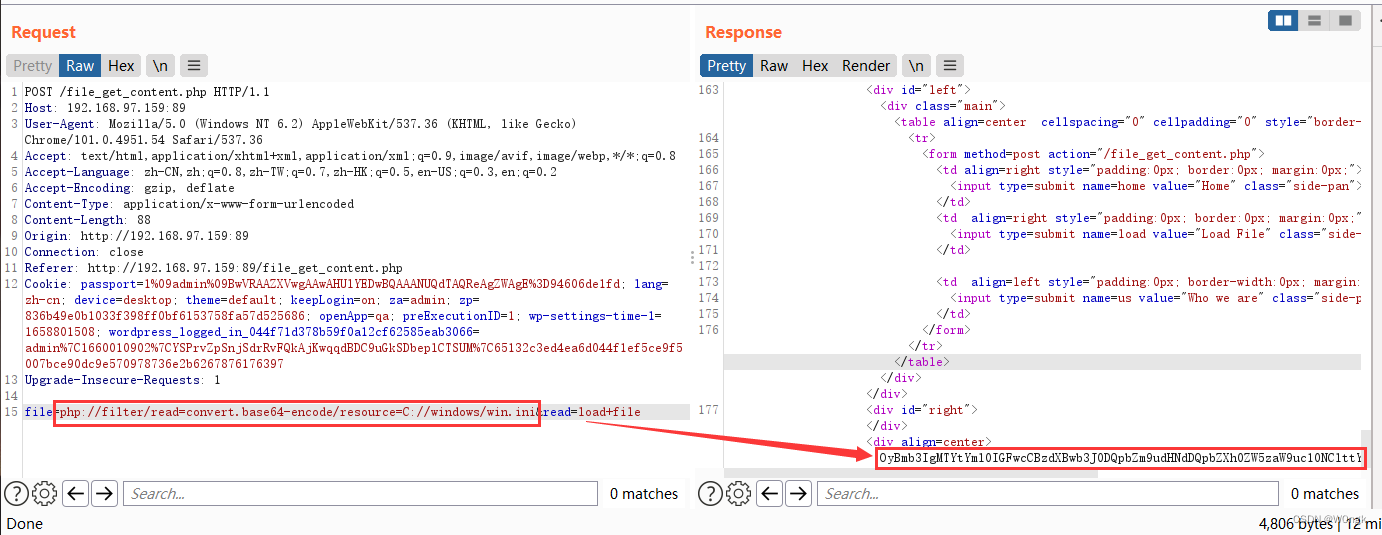

PHP代码审计8—SSRF 漏洞

随机推荐

DOM Clobbering的原理及应用

服务器

VPC2187/8 电流模式 PWM 控制器 4-100VIN 超宽压启动、高度集成电源控制芯片推荐

Global electronics demand slows: Samsung's Vietnam plant significantly reduces capacity

C#爬虫之通过Selenium获取浏览器请求响应结果

July 31, 2022 Summary of the third week of summer vacation

路由懒加载

LVS负载均衡群集之原理叙述

Hezhou Cat1 4G module Air724UG is configured with RNDIS network card or PPP dial-up, and the development board is connected to the Internet through the RNDIS network card (taking the RV1126/1109 devel

Kubernetes入门到精通- Operator 模式入门

开篇-开启全新的.NET现代应用开发体验

Enterprise survey correlation analysis case

LVS+NAT 负载均衡群集,NAT模式部署

容器化 | 在 NFS 备份恢复 RadonDB MySQL 集群数据

图解LeetCode——899. 有序队列(难度:困难)

股票开户广发证券,网上开户安全吗?

Flink/Scala - Storing data with RedisSink

自己经常使用的三种调试:Pycharm、Vscode、pdb调试

Thrift installation configuration

浅谈web网站架构演变过程