当前位置:网站首页>Example of embedding code for continuous features

Example of embedding code for continuous features

2022-08-03 07:04:00 【WGS.】

Why do continuous features tooemb?

● On the one hand continuous featuresembIt can then be more fully intersected with other features;

● On the other hand, it can make learning more fully,Avoid drastic changes in forecast results due to small changes in values.

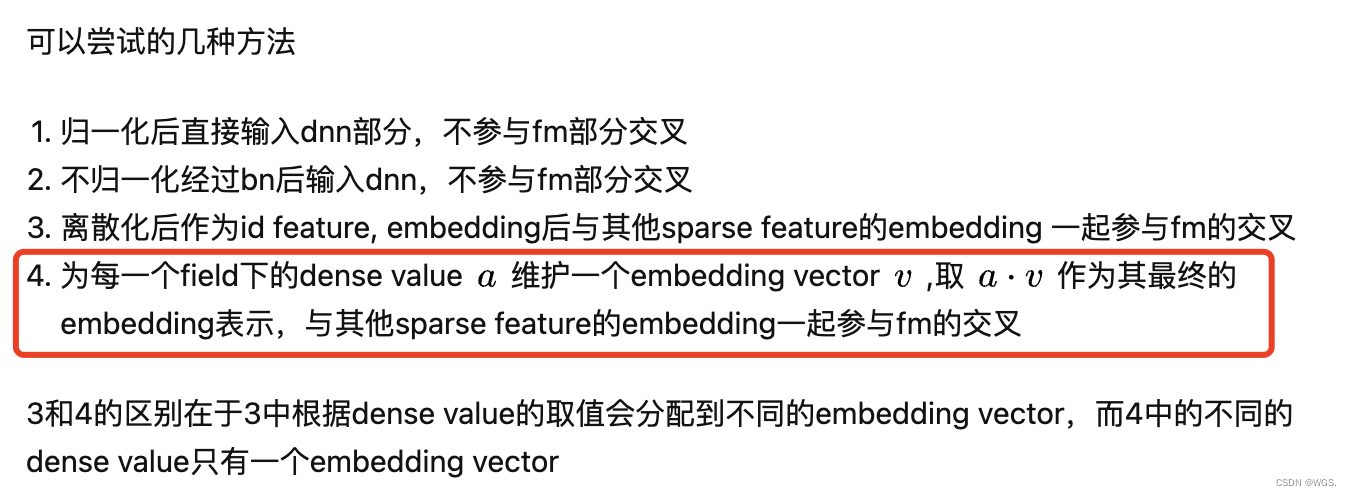

实现思路:

- Normalize continuous values;

- Then add a new column to do itlabel encoder;

- codedtensor做emb;

- Take out consecutive valuestensor,然后相乘;

ddd = pd.DataFrame({

'x1': [0.001, 0.002, 0.003], 'x2': [0.1, 0.1, 0.2]})

'''Spell back the encoded value'''

dense_cols = ['x1', 'x2']

dense_cols_enc = [c + '_enc' for c in dense_cols]

for i in range(len(dense_cols)):

enc = LabelEncoder()

ddd[dense_cols_enc[i]] = enc.fit_transform(ddd[dense_cols[i]].values).copy()

print(ddd)

'''计算fields'''

dense_fields = ddd[dense_cols_enc].max().values + 1

dense_fields = dense_fields.astype(np.int32)

offsets = np.array((0, *np.cumsum(dense_fields)[:-1]), dtype=np.longlong)

print(dense_fields, offsets)

'''Do it with the encoded oneemb'''

tensor = torch.tensor(ddd.values)

emb_tensor = tensor[:, -2:] + tensor.new_tensor(offsets).unsqueeze(0)

emb_tensor = emb_tensor.long()

embedding = nn.Embedding(sum(dense_fields) + 1, embedding_dim=4)

torch.nn.init.xavier_uniform_(embedding.weight.data)

dense_emb = embedding(emb_tensor)

print('---', dense_emb.shape)

print(dense_emb.data)

# print(embedding.weight.shape)

# print(embedding.weight.data)

# print(embedding.weight.data[1])

'''Take the original numerical features and increase the dimension for multiplication'''

dense_tensor = torch.unsqueeze(tensor[:, :2], dim=-1)

print('---', dense_tensor.shape)

print(dense_tensor)

dense_emb = dense_emb * dense_tensor

print(dense_emb)

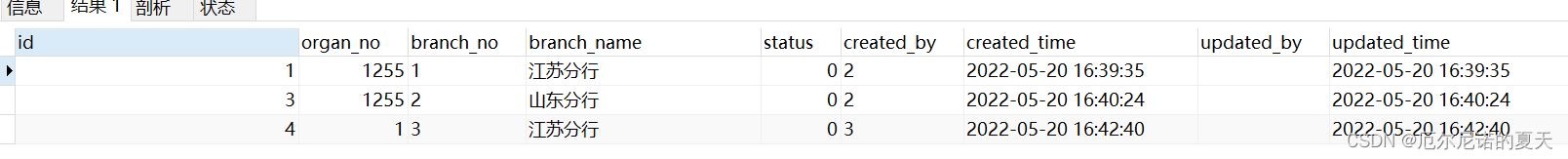

x1 x2 x1_enc x2_enc

0 0.001 0.1 0 0

1 0.002 0.1 1 0

2 0.003 0.2 2 1

[3 2] [0 3]

--- torch.Size([3, 2, 4])

tensor([[[-0.1498, -0.5054, 0.0211, -0.2746],

[ 0.0133, 0.3257, -0.2117, -0.0956]],

[[-0.1296, -0.4524, 0.5334, 0.0894],

[ 0.0133, 0.3257, -0.2117, -0.0956]],

[[ 0.5597, 0.3630, -0.7686, -0.1408],

[ 0.6840, -0.5328, 0.0422, -0.6365]]])

--- torch.Size([3, 2, 1])

tensor([[[0.0010],

[0.1000]],

[[0.0020],

[0.1000]],

[[0.0030],

[0.2000]]], dtype=torch.float64)

tensor([[[-1.4985e-04, -5.0542e-04, 2.1051e-05, -2.7457e-04],

[ 1.3284e-03, 3.2572e-02, -2.1174e-02, -9.5578e-03]],

[[-2.5924e-04, -9.0472e-04, 1.0668e-03, 1.7884e-04],

[ 1.3284e-03, 3.2572e-02, -2.1174e-02, -9.5578e-03]],

[[ 1.6790e-03, 1.0891e-03, -2.3059e-03, -4.2229e-04],

[ 1.3679e-01, -1.0656e-01, 8.4448e-03, -1.2731e-01]]],

dtype=torch.float64, grad_fn=<MulBackward0>)

reference:

https://www.zhihu.com/question/352399723/answer/869939360

有关offsets可以看:

https://blog.csdn.net/qq_42363032/article/details/125928623?spm=1001.2014.3001.5501

边栏推荐

猜你喜欢

随机推荐

你真的了解volatile关键字吗?

Embedding的两种实现方式torch代码

CISP-PTE真题演示

5G网络入门基础--5G网络的架构与基本原理

Shell脚本--信号发送与捕捉

El - tree to set focus on selected highlight highlighting, the selected node deepen background and change the font color, etc

C语言实现通讯录功能(400行代码实现)

Docker安装Mysql

Multi-Head-Attention原理及代码实现

链表之打基础--基本操作(必会)

Mysql去除重复数据

pyspark --- 空串替换为None

连续型特征做embedding代码示例

关于Attention的超详细讲解

Composer require 报错 Installation failed, reverting ./composer.json and ./composer.lock to their ...

Basic syntax of MySQL DDL and DML and DQL

ES6中 async 函数、await表达式 的基本用法

AutoInt网络详解及pytorch复现

【云原生 · Kubernetes】Kubernetes基础环境搭建

npx 有什么作用跟意义?为什么要有 npx?什么场景使用?