当前位置:网站首页>Safety reinforcement learning based on linear function approximation safe RL with linear function approximation translation 1

Safety reinforcement learning based on linear function approximation safe RL with linear function approximation translation 1

2022-07-04 10:05:00 【Baishui】

The paper :Safe Reinforcement Learning with Linear Function Approximation

Download address :http://proceedings.mlr.press/v139/amani21a/amani21a.pdf

meeting / year :PMLR / 2021

Word Version download address ( Hard work ):https://download.csdn.net/download/baishuiniyaonulia/85863332

The translation of this article belongs to semi manual , Please forgive me for any mistakes .

List of articles

- Abstract Abstract

- 1. Introduce Introduction

- 2. Safe linearity UCB Of Q、V Value iteration Safe Linear UCB Q/V Iteration

- 3. SLUCB-QVI It's a theoretical guarantee Theoretical guarantees of SLUCB-QVI

- 4、5、6

Abstract Abstract

In recent years , The security of reinforcement learning becomes more and more important . However , Existing solutions either cannot strictly avoid choosing unsafe actions , This may lead to catastrophic results for safety critical systems , Or it cannot provide a regrettable guarantee for the environment that needs to learn security constraints . In this paper , We solve these two problems by first modeling security as an unknown linear cost function of state and action , It must always be below a certain threshold . then , We propose an algorithm , be called SLUCB-QVI and RSLUCB-QVI, For finite level Markov decision processes with linear function approximation (MDP). We proved that SLUCB-QVI and RSLUCB-QVI Without violating security , Realized O ~ ( κ d 3 H 3 T ) \widetilde{\mathcal{O}}\left( \kappa \sqrt{ { {d}^{3}}{ {H}^{3}}T} \right) O(κd3H3T) regret , It almost matches the most advanced unsafe Algorithm , among H H H Is the duration of each round , d d d Is the dimension of feature mapping , κ κ κ Is a constant representing a security constraint , T T T Is the total number of actions . We further propose numerical simulations to confirm our theoretical findings .

1. Introduce Introduction

Reinforcement learning (RL) It's a kind of research , That is, a subject tries to maximize its expected cumulative rewards through interaction with the unknown environment . In most classic RL In the algorithm, , The goal of an agent is to maximize long-term gain by exploring all possible actions . However , In many real-world systems , Free exploration of all behaviors, even unsafe games, can also be harmful actions that can lead to disastrous results . therefore ,RL The security of algorithm has become a serious problem , Limit RL The applicability of the algorithm in many real systems . for example , In the automatic driving vehicle , Explore those that avoid collisions and damage to cars 、 The policy of people and property is crucial . Conversion cost restrictions in medical applications and legal restrictions in financial management are other examples of safety critical applications . All of the above safety critical environments introduce a new challenge of balancing the goal of maximizing rewards with the limits of taking safety actions .

In order to solve this main problem , The learning algorithm needs to ensure that it does not violate certain security constraints . From the perspective of robber optimization , A linear robber problem is studied , In each round , Both need high probability to satisfy the linear cost constraint . For this question , They proposed a no regret algorithm with high probability that does not violate constraints . When the environment passes through the more challenging and complex unknown MDP When setting up modeling , To solve RL There has been a surge in research activities to explore security issues in . Many existing algorithms constrain Markov decision processes (CMDP) Yes RL Security Modeling , The classic MDP Extend to a range of settings that have additional constraints on the total expected cost . In order to solve CMDPs Safety requirements in , Different approaches have been proposed , As original - Dual strategy optimization 、 Constraint policy optimization and incentive constraint policy optimization . These algorithms either have no theoretical guarantee in batch offline settings , Or there is no guarantee of asymptotic convergence . In another study of online settings CMDP An algorithm of violating the sublinear limit of the constrained quantity is proposed in the work of . Besides , The security constraint considered in the above paper is defined by the cumulative expected cost below a certain threshold .

In this paper , We propose an upper trust realm (UCB)- Based on Algorithms - It is called safe linearity UCB Q/V iteration (SLUCB-QVI)- Focus on deterministic strategy choices , Respect the concept of stricter safety requirements , An action must be performed with a high probability at each time step . We also propose random SLUCB-QVI(RSLUCB-QVI), This is a safe algorithm , Focus on random strategy selection , Without violating any constraints . For both of these algorithms , We assume that the bottom MDP It has a linear structure , And proved that a regretful circle is equivalent to the unsafe part .

Our main technical contribution enables us to guarantee the sublinear regret constraint without violating the security constraint , Include :1) Conservatively select actions from the subset of the correctly defined unknown security set ;2) Use careful algorithm design to ensure optimism in the face of security constraints , That is, the value function of our proposed algorithm is larger than the optimal value function . See the first 2、3、4 section .

Symbol Notation

We first introduce a set of symbols used throughout the paper . We use lowercase letters to represent scalars , Use lowercase bold letters to represent vectors , Use capital bold letters to represent the matrix .x The Euclidean norm of is written as ∥ x ∥ 2 \Vert x \Vert_2 ∥x∥2. We use it x ⊤ x^\top x⊤ Represents any column vector x x x The transpose . For any vector x x x and y y y, We use it < x , y > <x,y> <x,y> To express their inner product . set up x x x Positive definite d × d d\times d d×d Matrix and ν ∈ R d \mathcal{ν}∈\mathbb{R}^d ν∈Rd. ν \mathcal{ν} ν be relative to A \mathbf{A} A A weighted 2- The norm is defined as ∥ ν ∥ 2 = ν ⊤ A ν \Vert \mathcal{ν} \Vert_2=\sqrt{\mathcal{ν}^\top\mathbf{A} \mathcal{ν}} ∥ν∥2=ν⊤Aν. For positive integers n n n, [ n ] [n] [n] Express { 1 , 2 , . . . , n } \{1,2,...,n\} { 1,2,...,n}. We use it e i e_i ei To represent the i i i Two standard basis vectors . Last , We ignore the large logarithm factor O O O Symbols use standard O ~ \tilde{\mathcal{O}} O~ Symbol .

1.1. The problem is formulated Problem formulation

Finite horizon Markov decision process Finite-horizon Markov decision process

We consider a finite level Markov decision process (MDP), We consider a finite level Markov decision process (MDP), Expressed as M = ( S , A , H , P , r , c ) M=(\mathcal{S},\mathcal{A}, H, \mathbb{P}, r,c) M=(S,A,H,P,r,c), among , S \mathcal{S} S It's a state set , A \mathcal{A} A It's an action set , H H H Is the length of each round ( The transverse ), P = { P h } h = 1 H \mathbb{P}=\{\mathbb{P_h}\}_{h=1}^H P={ Ph}h=1H Is the transition probability , r = { r h } h = 1 H r=\{r_h\}_{h=1}^H r={ rh}h=1H It's a reward function , c = { c h } c = 1 H c=\{c_h\}_{c=1}^H c={ ch}c=1H It's a safe quantity . For each time step h ∈ [ H ] h\in [H] h∈[H], P h ( s ′ ∣ s , a ) \mathbb{P}_h(s'|s,a) Ph(s′∣s,a) In state s s s Make an action a a a Transfer to the State s ′ s' s′ Probability , also r h : S × A → [ 0 , 1 ] r_h:\mathcal{S}\times\mathcal{A}\to[0,1] rh:S×A→[0,1], also c h : S × A → [ 0 , 1 ] c_h:\mathcal{S}\times\mathcal{A}\to[0,1] ch:S×A→[0,1] Is a reward and constraint function . We consider the S S S and A A A Known learning problems , And the transition probability P h \mathbb{P}_h Ph、 Reward r h r_h rh And safety quantity c h c_h ch It is unknown. , Must study online . Agents interact with unknown environments , The environment is described as M M M In each round . In practice , In each round k k k And time steps h ∈ [ H ] h\in[H] h∈[H], The agent observes the State s h k s^k_h shk, Make an action a h k ∈ A a^k_h\in A ahk∈A, Then observe a reward r h k : = r h ( s h k , a h k ) r^k_h:=r_h(s_h^k,a_h^k) rhk:=rh(shk,ahk), And a safety measure disturbed by noise z h k = c h ( s h k , a h k ) + ϵ h k z_h^k=c_h(s_h^k,a_h^k)+\epsilon_h^k zhk=ch(shk,ahk)+ϵhk, among ϵ h k \epsilon_h^k ϵhk It's a random noise .

Security constraints Safety Constraint

We assume that the security of the underlying system is crucial , The learning environment is constrained by the side that restricts the choice of action , In each round k k k And time steps h ∈ [ H ] h\in[H] h∈[H], In state s h k s^k_h shk when , The agent must choose a safe operation program , So that :

c h ( s h k , a h k ) ≤ τ { {c}_{h}}\left( s_{h}^{k},a_{h}^{k} \right)\le \tau ch(shk,ahk)≤τ

among τ \tau τ Is a known constant . therefore , We define the unknown set of security actions as :

A h safe ( s ) : = { a ∈ A : c h ( s , a ) ≤ τ } , ∀ ( s , h ) ∈ S × [ H ] \mathcal{A}_{h}^{\text{safe}}(s):=\left\{ a\in \mathcal{A}:{ {c}_{h}}(s,a)\le \tau \right\},\quad \forall (s,h)\in \mathcal{S}\times [H] Ahsafe(s):={ a∈A:ch(s,a)≤τ},∀(s,h)∈S×[H]

therefore , After observing the k k k The state of the round s h k s^k_h shk And time steps h ∈ [ H ] h\in [H] h∈[H] after , The action selection of the agent must probably belong to A h s a f e ( s h k ) \mathcal{A}_h^{safe}(s^k_h) Ahsafe(shk). As an example of heuristics , Consider a autonomous vehicle . One side , agent ( vehicle ) Get the reward from the first point to the second point as soon as possible . On the other hand , Driving behavior must be limited to compliance with traffic safety standards .

The goal is Goal

A secure deterministic strategy is a function π : S × [ H ] → A \pi : \mathcal{S}\times[H]\to \mathcal{A} π:S×[H]→A. such , π ( s , h ) ∈ A h s a f e ( s ) \pi(s,h)\in \mathcal{A}_h^{safe}(s) π(s,h)∈Ahsafe(s) It's a strategy π \pi π It is suggested that the agent in the time step h ∈ [ H ] h\in [H] h∈[H] And status s ∈ S s\in \mathcal{S} s∈S Safety actions taken when . therefore , We go through :

Π safe : = { π : π ( s , h ) ∈ A h safe ( s ) , ∀ ( s , h ) ∈ S × [ H ] } { {\Pi }^{\text{safe}}}:=\left\{ \pi :\pi (s,h)\in \mathcal{A}_{h}^{\text{safe}}(s),\forall (s,h)\in \mathcal{S}\times [H] \right\} Πsafe:={ π:π(s,h)∈Ahsafe(s),∀(s,h)∈S×[H]}

For each h ∈ [ H ] h\in [H] h∈[H], In a security policy π ∈ ∏ s a f e \pi \in \prod^{safe} π∈∏safe The cumulative expected reward obtained under , Called value function V h π : S → R V^\pi_h:\mathcal{S}\to \mathbb{R} Vhπ:S→R, Is defined as :

V h π ( s ) : = E [ ∑ h ′ = h H r h ′ ( s h ′ , π ( s h ′ , h ′ ) ) ∣ s h = s ] V_{h}^{\pi }(s):=\mathbb{E}\left[ \left. \sum\limits_{ {h}'=h}^{H}{ { {r}_{ { {h}'}}}}\left( { {s}_{ { {h}'}}},\pi \left( { {s}_{ { {h}'}}},{h}' \right) \right) \right|{ {s}_{h}}=s \right] Vhπ(s):=E[h′=h∑Hrh′(sh′,π(sh′,h′))∣∣∣∣∣sh=s]

Where expectations exceed the environment . We also define the state - Action value action Q h π : S × A h s a f e ( . ) → R Q^\pi_h:\mathcal{S}\times\mathcal{A}_h^{safe}(.)\to \mathbb{R} Qhπ:S×Ahsafe(.)→R For a security policy π ∈ ∏ s a f e \pi \in \prod^{safe} π∈∏safe In time step h ∈ [ H ] h\in [H] h∈[H] from :

Q h π ( s , a ) : = E [ ∑ h ′ = h + 1 H r h ′ ( s h ′ , π ( s h ′ , h ′ ) ) ∣ s h = s , a h = a ] Q_{h}^{\pi }(s,a):=\mathbb{E}\left[ \left. \sum\limits_{ {h}'=h+1}^{H}{ { {r}_{ { {h}'}}}}\left( { {s}_{ { {h}'}}},\pi \left( { {s}_{ { {h}'}}},{h}' \right) \right) \right|{ {s}_{h}}=s,{ {a}_{h}}=a \right] Qhπ(s,a):=E[h′=h+1∑Hrh′(sh′,π(sh′,h′))∣∣∣∣∣sh=s,ah=a]

To simplify symbols , For any function f, We mean [ P h f ] ( s , a ) : = E s ′ ∼ P h ( . ∣ s , a ) f ( s ′ ) [\mathbb{P}_hf](s,a):=\mathbb{E}_{s'\sim\mathbb{P_h}(.|s,a)}f(s') [Phf](s,a):=Es′∼Ph(.∣s,a)f(s′). Give Way π ∗ \pi_* π∗ Is the best security policy , such V h π ∗ ( s ) = s u p π ∈ ∏ s a f e V h π ( s ) V^{\pi_*}_h(s)=sup_{\pi\in\prod^{safe}}V^\pi_h(s) Vhπ∗(s)=supπ∈∏safeVhπ(s) For all ( s , h ) ∈ S × [ H ] (s,h)\in\mathcal{S}\times[H] (s,h)∈S×[H]. therefore , For all ( s , h ) ∈ S × [ H ] (s,h)\in\mathcal{S}\times[H] (s,h)∈S×[H] and a ∈ A h s a f e ( s ) a\in\mathcal{A}_h^{safe}(s) a∈Ahsafe(s), Any security policy π ∈ ∏ s a f e \pi \in \prod^{safe} π∈∏safe And optimal security policy Bellman The equation is :

Q h π ( s , a ) = r h ( s , a ) + [ P h V h + 1 π ] ( s , a ) , V h π ( s ) = Q h π ( s , π ( s , h ) ) , \begin{aligned} & Q_{h}^{\pi }(s,a)={ {r}_{h}}(s,a)+\left[ { {\mathbb{P}}_{h}}V_{h+1}^{\pi } \right](s,a), \\ & \quad V_{h}^{\pi }(s)=Q_{h}^{\pi }(s,\pi (s,h)), \\ \end{aligned} Qhπ(s,a)=rh(s,a)+[PhVh+1π](s,a),Vhπ(s)=Qhπ(s,π(s,h)), Q h ∗ ( s , a ) = r h ( s , a ) + [ P h V h + 1 ∗ ] ( s , a ) . V h ∗ ( s ) = max a ∈ A h safe ( s ) Q h ∗ ( s , a ) , \begin{aligned} & Q_{h}^{*}(s,a)={ {r}_{h}}(s,a)+\left[ { {\mathbb{P}}_{h}}V_{h+1}^{*} \right](s,a). \\ & \quad V_{h}^{*}(s)=\underset{a\in \mathcal{A}_{h}^{\text{safe }}(s)}{\mathop{\max }}\,Q_{h}^{*}(s,a), \\ \end{aligned} Qh∗(s,a)=rh(s,a)+[PhVh+1∗](s,a).Vh∗(s)=a∈Ahsafe (s)maxQh∗(s,a),

among V H + 1 π ( s ) = V ∗ H + 1 ( s ) = 0 V^\pi_{H+1}(s)=V^*{H+1}(s)=0 VH+1π(s)=V∗H+1(s)=0. Please note that , In the classic without security constraints RL in , Behrman optimality equation means that there is at least one deterministic optimal strategy . When considering solving the Behrman equation of the optimal strategy , The existence of security constraints is equivalent to that without constraints but with different constraints MDP The solution of ( s , h ) ∈ S × [ H ] (s,h)\in\mathcal{S}\times[H] (s,h)∈S×[H], namely A h s a f e ( s ) \mathcal{A}_h^{safe}(s) Ahsafe(s)

set up K K K Is the total number of events , s 1 k s^k_1 s1k For events k ∈ [ K ] k\in [K] k∈[K] Initial state at the beginning , π k \pi_k πk For agents in events k ∈ [ K ] k\in [K] k∈[K] High probability security strategy selected during . Then define cumulative pseudo regret :

R K : = ∑ k = 1 K V 1 ∗ ( s 1 k ) − V 1 π k ( s 1 k ) { {R}_{K}}:=\sum\limits_{k=1}^{K}{V_{1}^{*}}(s_{1}^{k})-V_{1}^{ { {\pi }_{k}}}(s_{1}^{k}) RK:=k=1∑KV1∗(s1k)−V1πk(s1k)

The goal of an agent is to maintain R K R_K RK As small as possible ( R K / K → 0 R_K/K\to0 RK/K→0 With K K K Bigger ), Instead of violating safety constraints in the process , namely , π k ∈ ∏ s a f e \pi_k \in \prod^{safe} πk∈∏safe For all k ∈ [ K ] k\in[K] k∈[K] There is a high probability .

Linear function approximation Linear Function Approximation

We are concerned with kernels with linear transfer 、 The reward and cost functions encapsulated in the following assumptions MDPs.

hypothesis 1 ( linear MDP) Assumption 1 (Linear MDP)

M = ( S , A , H , P , r , c ) M=(\mathcal{S},\mathcal{A},H,\mathbb{P},r,c) M=(S,A,H,P,r,c) It has feature mapping ϕ : S × Of Line sex M D P A → R d \phi :\mathcal{S}\times \mathcal{ Linearity of MDP A}\to { {\mathbb{R}}^{d}} ϕ:S× Of Line sex MDPA→Rd, If for any h ∈ [ H ] h\in [H] h∈[H], There are unknown measures μ h ∗ : = [ μ h ∗ ( 1 ) , … , μ h ∗ ( d ) ] ⊤ \mu _{h}^{*}:={ { \left[ \mu _{h}^{*(1)},\ldots ,\mu _{h}^{*(d)} \right]}^{\top }} μh∗:=[μh∗(1),…,μh∗(d)]⊤ exceed S \mathcal{S } S And unknown vectors θ h ∗ \theta _{h}^{*} θh∗, γ h ∗ ∈ R d \gamma _{h}^{*}\in { {\mathbb{R}}^{d}} γh∗∈Rd bring P h ( . ∣ s , a ) = * μ h ∗ ( . ) , ϕ ( s , a ) * , r h ( s , a ) = * θ h ∗ , ϕ ( s , a ) * { {\mathbb{P}}_{h}}(.|s,a)=\left\langle \mu _{h}^{*}(.),\phi (s,a) \right\rangle , { {r}_{h}}(s,a)=\left\langle \theta_{h}^{*},\phi (s,a)\right\rangle Ph(.∣s,a)=*μh∗(.),ϕ(s,a)*,rh(s,a)=*θh∗,ϕ(s,a)*, and c h ( s , a ) = * γ h ∗ , ϕ ( s , a ) * { {c}_ {h}}(s,a)=\left\langle \gamma _{h}^{*},\phi (s,a)\right\rangle ch(s,a)=*γh∗,ϕ(s,a)*.

This assumption emphasizes linearity MDP The definition of , Among them, Markov transition model 、 Reward function and cost function in feature mapping ϕ \phi ϕ Is linear .

1.2. Related work Related works

Safety reinforcement learning and randomization strategy Safe RL with randomized policies

This paper studies constrained Markov decision-making processes focusing on unknown dynamic and stochastic strategies (CMDP) Formulated safety RL problem . (Efroni et al., 2020; Turchetta et al., 2020; Garcelon et al., 2020; Zheng and Ratliff, 2020; Ding et al., 2020a; Qiu et al., 2020; Ding et al., 2020b; Xu et al., 2020; Kalagarla et al., 2020). In the above paper , The goal is to find the optimal random strategy , Make the reward value function V r π ( s ) V_r^\pi(s) Vrπ(s)( Expect total reward ) Maximize , At the same time, ensure the cost value function V c π ( s ) V_c^\pi(s) Vcπ(s)( Expected total cost ) Do not exceed a certain threshold . This safety requirement is defined in a range , According to the randomization of environment and policy , Therefore, it is not as strict as the safety requirements considered in this article , And the safety requirements must be met in each time step , Will play an action . In addition to different problem formulas , The theoretical guarantee of these works is fundamentally different from that provided in this paper . Recent closely related work (Ding et al., 2020a) Study constraints at finite levels MDP Linear structure is realized by primitive strategy optimization algorithm in our paper O ~ ( d H 2.5 T ) \tilde{\mathcal{O}}\bigl( dH^{2.5}\sqrt{T}\bigr) O~(dH2.5T) Regret and constraint violation , It can only be used to set limited action sets A \mathcal{A} A.(Efroni et al., 2020) The algorithm is optimized by linear programming and primal dual strategy , Get O ~ ( ∣ S ∣ H 2 ∣ S ∣ ∣ A ∣ T ) \tilde{\mathcal{O}}\bigl( |\mathcal{S}|H^2\sqrt{|\mathcal{S}||\mathcal{A}|T}\bigr) O~(∣S∣H2∣S∣∣A∣T) Regret and constraint violation . stay (Qiu et al., 2020) in , The author studied a case in O ~ ( ∣ S ∣ H ∣ A ∣ T ) \tilde{\mathcal{O}}\bigl( |\mathcal{S}|H\sqrt{|\mathcal{A}|T}\bigr) O~(∣S∣H∣A∣T) Regret and constraint violation constrained antagonistic stochastic shortest path problem .(Dingetal.,2020b) A primal dual algorithm for solving infinite horizon discount rate is proposed , The algorithm achieves the rate of optimality gap and constraint violation O ~ ( 1 / T ) \tilde{\mathcal{O}}\bigl( 1/\sqrt{T}\bigr) O~(1/T) Global convergence of . The above work can only guarantee the limit of the number of violations of constraints , Our algorithm has never violated security constraints in the learning process .

In addition to the primal dual method , stay (Chow et al., 2018) in , Lyapunov function is also used to deal with constraints .(Yu et al., 2019) This paper presents a constrained strategy gradient algorithm with convergence guarantee . The above two works focus on solving with the known transition model and constraint function CMDPs Model , Without warranty of regret .

Safety reinforcement learning and gp And deterministic transfer models and strategies Safe RL with GPs and deterministic transition model and policies

In another job ,(Turchetta et al., 2016; Berkenkamp et al., 2017; Wachi et al., 2018; Wachi and Sui, 2020) Use Gaussian process, use deterministic transformation and / Or value function modeling dynamics , In order to be able to estimate constraints and ensure safe learning . Although some of these algorithms are approximately secure , But analyzing its convergence is still challenging , And lack of regret analysis .

2. Safe linearity UCB Of Q、V Value iteration Safe Linear UCB Q/V Iteration

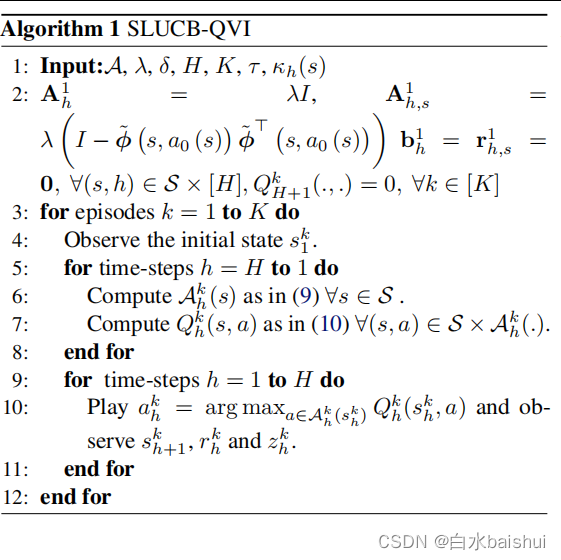

In this section , We will introduce the algorithm 1 The secure linear upper confidence bound summarized in Q/V iteration (SLUCB-QVI), Then in the first 2 Its performance is described at a high level in section . First , We will describe the algorithm in the next chapter 1 And the following necessary assumptions and symbol sets used in its analysis .

hypothesis 2 ( Non empty safety set ) Assumption 2 (Non-empty safe sets)

For all s ∈ S s\in \mathcal{S} s∈S, There is a known safety action a 0 ( s ) { {a}_{0}}(s) a0(s) bring a 0 ( s ) ∈ A h safe ( s ) { {a}_{0}}(s)\in \mathcal{A}_{h}^{\text{safe}}(s) a0(s)∈Ahsafe(s) Known safety measures τ h ( s ) : = * ϕ ( s , a 0 ( s ) ) , γ h ∗ * < τ { {\tau }_{h}}(s):=\left\langle \phi \left( s,{ {a}_{0}}(s) \right),\gamma _{h}^{*} \right\rangle <\tau τh(s):=*ϕ(s,a0(s)),γh∗*<τ For all h ∈ [ H ] h\in [H] h∈[H].

Understand safety actions a 0 ( s ) a_0(s) a0(s) For solving the problem of safety linearity in this paper MDP Settings are necessary , This requires that constraints be met from the first round (1). This assumption is also true in many practical examples , The known safety actions may be the actions recommended by the company's current strategy , Or cost neutral action , There is not necessarily a high return , But its cost is far from reaching the threshold . It can relax and understand safety actions τ h ( s ) \tau_h(s) τh(s) Cost assumptions . under these circumstances , The agent starts with the time step h h h Carry out a a 0 ( s ) a_0(s) a0(s) round , In order to construct the gap interval time interval τ − τ h ( s ) \tau-\tau_h(s) τ−τh(s) Conservative estimator . T h ( s ) T_h(s) Th(s) Choose in an adaptive way , In the appendix A.4 In, we show 16 log ( K ) ( τ − τ h ( s ) ) 2 ≤ T h ( s ) ≤ 64 log ( K ) ( τ − τ h ( s ) ) 2 \frac{16\log(K)}{(\tau-\tau_h(s))^2}\leq T_h(s)\leq \frac{64\log(K)}{(\tau-\tau_h(s))^2} (τ−τh(s))216log(K)≤Th(s)≤(τ−τh(s))264log(K), stay T h ( s ) T_h(s) Th(s) After wheel , The agent is calculating the estimated set of security policies ( later ) Depend on τ h ( s ) \tau _h(s) τh(s) Estimation ( Discussed later ).

Symbol Notation

For any vector x ∈ R d \mathbf{x}\in { {\mathbb{R}}^{d}} x∈Rd, Define normalized vector x ~ : = x ∥ x ∥ 2 \mathbf{\tilde{x}}:=\frac{\mathbf{x} }{\|\mathbf{x}{ {\|}_{2}}} x~:=∥x∥2x. We will feature safety ϕ ( s , a 0 ( s ) ) \phi \left( s,{ {a}_{0}}(s) \right) ϕ(s,a0(s)) The span of is defined as V s = span ( ϕ ( s , a 0 ( s ) ) ) : = { α ϕ ( s , a 0 ( s ) ) : α ∈ R } { {\mathcal{V}}_{s}}=\operatorname {span}\left( \phi \left( s,{ {a}_{0}}(s) \right) \right):=\left\{ \alpha \phi \left( s,{ {a} _{0}}(s) \right):\alpha \in \mathbb{R} \right\} Vs=span(ϕ(s,a0(s))):={ αϕ(s,a0(s)):α∈R} and V s { {\mathcal{V}}_{s}} Vs The orthogonal complement of is V s ⊥ : = { y ∈ R d : * y , x * = 0 , ∀ x ∈ V s } \mathcal {V}_{s}^{\bot }:=\left\{ \mathbf{y}\in { {\mathbb{R}}^{d}}:\langle \mathbf{y},\mathbf{ x}\rangle =0,\forall \mathbf{x}\in { {\mathcal{V}}_{s}} \right\} Vs⊥:={ y∈Rd:*y,x*=0,∀x∈Vs}. For any x ∈ R d \mathbf{x}\in { {\mathbb{R}}^{d}} x∈Rd, Write it down as Φ 0 ( s , x ) : = l e f t * x , ϕ ~ ( s , a 0 ( s ) ) * ϕ ~ ( s , a 0 ( s ) ) { {\Phi }_{0}}(s,\mathbf{x}):=\ left\langle \mathbf{x},\widetilde{\phi }\left( s,{ {a}_{0}}(s) \right) \rangle \widetilde{\phi }\left( s, { {a}_{0}}(s) \right) Φ0(s,x):= left*x,ϕ(s,a0(s))*ϕ(s,a0(s)) It's in V s { {\mathcal{V}}_{s}} Vs The projection on the , And through Φ 0 ⊥ ( s , x ) : = x − Φ 0 ( s , x ) \Phi _{0}^{\bot } (s,\mathbf{x}):=\mathbf{x}-{ {\Phi }_{0}}(s,\mathbf{x}) Φ0⊥(s,x):=x−Φ0(s,x) It is in the orthogonal subspace V \mathcal{V} V The projection on the s ⊥ {s}^{\bot } s⊥. Besides , For convenience of marking , Make ϕ h k : = ϕ ( s h k , a h k ) \phi _{h}^{k}:=\phi \left(s_{h}^{k},a_{h}^{k} \right) ϕhk:=ϕ(shk,ahk).

2.1. overview Overview

From a high-level Perspective , Our algorithm is (Jin et al., 2020) Proposed LSVI-UCB Security version of . especially , Each round consists of two cycles on all time steps . First cycle ( The first 5-8 That's ok ) Number of updates A h k \mathcal{A}_{h}^{k} Ahk, Estimated safety set sum Q h k Q_{h}^{k} Qhk, Action value function , That is, it is used to implement the upper confidence limit strategy a h k = arg max a ∈ A h k ( s h k ) Q h k ( s h k , a ) a_{h}^{k}=\arg { {\max }_{a\in \mathcal{A}_{h}^{k}(s_{h}^ {k})}}Q_{h}^{k}(s_{h}^{k},a) ahk=argmaxa∈Ahk(shk)Qhk(shk,a) In the second cycle ( The first 9-11 That's ok ). SLUCB-QVI and LSVI-UCB The main difference between them is the required action a h k a_{h}^{k} ahk Must always belong to an unknown security set A h safe ( s h k ) \mathcal{A}_{h}^{\text{safe }}(s_{h}^{k}) Ahsafe (shk). So , In every plot k ∈ [ K ] k\in [K] k∈[K] in , In the first cycle ( The first 6 That's ok ) In the additional steps of , Agent computes a set A h k ( s ) \mathcal{A}_{h}^{k}(s ) Ahk(s) For all s ∈ S s\in \mathcal{S} s∈S, We will prove that it is an unknown security set A h safe ( s ) \mathcal{A}_{h}^{\text{safe}}(s ) Ahsafe(s), therefore , From the second cycle ( The first 10 That's ok ) Choose the action a h k a_{h}^{k} ahk A good candidate for . A h k ( s ) \mathcal{A}_{h}^{k}(s) Ahk(s) The construction of depends on the unknown parameters used in the definition of security constraints γ h ∗ \gamma_{h}^{*} γh∗ ( See assumptions 1). Because the agent knows τ h ( s ) : = * ϕ ( s , a 0 ( s ) ) , g a m m a h ∗ * { {\tau }_{h}}(s):=\left\langle \phi \left(s,{ {a}_{0}}(s) \right),\ gamma _{h}^{*} \right\rangle τh(s):=*ϕ(s,a0(s)), gammah∗*( See assumptions 2), It can calculate z h , s k : = * Φ 0 ⊥ ( s , ϕ h k ) , Φ 0 ⊥ ( s , γ h ∗ ) * + ϵ h k = z h k − * ϕ h k , ϕ ~ ( s , a 0 ( s ) ) * 1 ∥ ϕ ( s , a 0 ( s ) ) ∥ 2 τ h ( s ) z_{h,s}^{k}:=\left\langle \Phi _{0}^{\bot }\left( s,\phi _{h}^{k} \right),\Phi _{0}^{\bot }\left(s,\gamma _{h}^{*} \right) \right\rangle +\epsilon _{h}^{k}=z_{h}^{k}-\frac{ { {\left\langle \phi _{h}^{k},\widetilde{\phi }\left( s,{ {a}_{0}}(s) \right) \right\rangle }_{1}}}{ { {\left\| \phi \left( s,{ {a}_{0}}(s) \right) \right\|}_{2}}}{ {\tau }_{h}}(s) zh,sk:=*Φ0⊥(s,ϕhk),Φ0⊥(s,γh∗)*+ϵhk=zhk−∥ϕ(s,a0(s))∥2*ϕhk,ϕ(s,a0(s))*1τh(s), namely a h k a_{h}^{k} ahk Along the subspace V s ⊥ \mathcal{V}_{s}^{\bot } Vs⊥ The cost incurred , It is associated with ϕ ( s , a 0 ( s ) ) \phi \left( s,{ {a} _{0}}(s)\right) ϕ(s,a0(s)). therefore , Agents do not need to follow the normalized safety feature vector ϕ ~ ( s , a 0 ( s ) ) \tilde{\phi }\left( s,{ {a}_{0 }}(s) \right) ϕ~(s,a0(s)). contrary , It is only in Φ 0 ⊥ ( s , γ h ∗ ) \Phi _{0}^{\bot }\left( s,\gamma _{h}^{*} \right) Φ0⊥(s,γh∗) Establish the following confidence set around , It follows The orthogonal direction of the wave sign ϕ ~ ( s , a 0 ( s ) ) \tilde{\phi }\left( s,{ {a}_{0}}(s) \right) ϕ~(s,a0(s)):

C h k ( s ) : = { ν ∈ R d : ∥ ν − γ h , s k ∥ A h , s k ≤ β } \mathcal{C}_{h}^{k}(s):=\left\{ \nu \in { {\mathbb{R}}^{d}}:{ {\left\| \nu -\gamma _{h,s}^{k} \right\|}_{\mathbf{A}_{h,s}^{k}}}\le \beta \right\} Chk(s):={ ν∈Rd:∥∥ν−γh,sk∥∥Ah,sk≤β}

among γ h , s k : = ( A h , s k ) − 1 r h , s k \gamma _{h,s}^{k}:={ {\left( \mathbf{A}_{h,s}^{k} \right)}^{-1}}\mathbf{r }_{h,s}^{k} γh,sk:=(Ah,sk)−1rh,sk yes Φ 0 ⊥ ( s , γ h ∗ ) \Phi_{0}^{\bot }\left( s,\gamma _{h}^{*} \right) Φ0⊥(s,γh∗) The regularized least squares estimator of is given by Gram Inverse calculation of matrix A h , s k : = λ ( I − ϕ ~ ( s , a 0 ( s ) ) ϕ ~ ⊤ ( s , a 0 ( s ) ) ) + ∑ j = 1 k − 1 Φ 0 ⊥ ( s , ϕ h j ) Φ 0 ⊥ , ⊤ ( s , ϕ h j ) \mathbf{A}_{h,s}^{k}:=\lambda \left( I-\tilde{\phi }\left( s,{ {a}_{ 0}}(s) \right){ { {\tilde{\phi }}}^{\top }}\left(s,{ {a}_{0}}(s) \right) \right)+ \sum\limits_{j=1}^{k-1}{\Phi_{0}^{\bot }}\left(s,\phi_{h}^{j} \right)\Phi_{ 0}^{\bot ,\top }\left( s,\phi _{h}^{j} \right) Ah,sk:=λ(I−ϕ~(s,a0(s))ϕ~⊤(s,a0(s)))+j=1∑k−1Φ0⊥(s,ϕhj)Φ0⊥,⊤(s,ϕhj) and r h , s k : = ∑ j = 1 k − 1 z h , s j Φ 0 ⊥ ( s , ϕ h j ) r_{h,s}^{k}:=\sum\limits_{j= 1}^{k-1}{z_{h,s}^{j}}\Phi_{0}^{\bot }\left(s,\phi_{h}^{j}\right) rh,sk:=j=1∑k−1zh,sjΦ0⊥(s,ϕhj) . Explore factors β Will soon be in theorem 1 In the definition of , To ensure the event :

E 1 : = { Φ 0 ⊥ ( s , γ h ∗ ) ∈ C h k ( s ) , ∀ ( s , h , k ) ∈ S × [ H ] × [ K ] } { {\mathcal{E}}_{1}}:=\left\{ \Phi _{0}^{\bot }\left( s,\gamma _{h}^{*} \right)\in \mathcal{C}_{h}^{k}(s),\forall (s,h,k)\in \mathcal{S}\times [H]\times [K] \right\} E1:={ Φ0⊥(s,γh∗)∈Chk(s),∀(s,h,k)∈S×[H]×[K]}

namely Φ 0 ⊥ ( s , γ h ∗ ) \Phi _{0}^{\bot }\left( s,\gamma _{h}^{*} \right) Φ0⊥(s,γh∗) It belongs to the confidence set C h k ( s ) \mathcal{C}_{h}^{ k}(s) Chk(s), It is likely to be established . In implementation , We will β It is regarded as adjusting parameters . With events E 1 { {\mathcal{E}}_{1}} E1 On condition that , The agent is ready to calculate the real unknown security set A h safe \mathcal{A}_{h}^{\text{safe}} Ahsafe The following internal approximation of all s ∈ S s\in \mathcal{S} s∈S The safety of the :

A h k ( s ) = { a ∈ A : * Φ 0 ( s , ϕ ( s , a ) ) , ϕ ~ ( s , a 0 ( s ) ) * ∥ ϕ ( s , a 0 ( s ) ) ∥ 2 τ h ( s ) + * γ h , s k , Φ 0 ⊥ ( s , ϕ ( s , a ) ) * + β ∥ Φ 0 ⊥ ( s , ϕ ( s , a ) ) ∥ ( A h , s k ) − 1 ≤ τ } \begin{aligned} & \mathcal{A}_{h}^{k}(s)=\left\{ a\in \mathcal{A}:\frac{\left\langle { {\Phi }_{0}}(s,\phi (s,a)),\widetilde{\phi }\left( s,{ {a}_{0}}(s) \right) \right\rangle }{ { {\left\| \phi \left( s,{ {a}_{0}}(s) \right) \right\|}_{2}}}{ {\tau }_{h}}(s) \right. \\ & \left. +\left\langle \gamma _{h,s}^{k},\Phi _{0}^{\bot }(s,\phi (s,a)) \right\rangle +\beta { {\left\| \Phi _{0}^{\bot }(s,\phi (s,a)) \right\|}_{ { {\left( \mathbf{A}_{h,s}^{k} \right)}^{-1}}}}\le \tau \right\} \\ \end{aligned} Ahk(s)=⎩⎨⎧a∈A:∥ϕ(s,a0(s))∥2*Φ0(s,ϕ(s,a)),ϕ(s,a0(s))*τh(s)+*γh,sk,Φ0⊥(s,ϕ(s,a))*+β∥∥Φ0⊥(s,ϕ(s,a))∥∥(Ah,sk)−1≤τ}

Be careful * Φ 0 ( s , ϕ ( s , a ) ) , ϕ ~ ( s , a 0 ( s ) ) * ∥ ϕ ( s , a 0 ( s ) ) ∥ 2 τ h ( s ) \frac{\left\langle { {\Phi }_{0}}(s,\phi (s,a)),\widetilde{\phi }\left( s,{ {a}_{0 }}(s) \right) \right\rangle }{ { {\left\| \phi \left( s,{ {a}_{0}}(s) \right) \right\|}_{2}}}{ {\tau }_{h}}(s) ∥ϕ(s,a0(s))∥2*Φ0(s,ϕ(s,a)),ϕ(s,a0(s))*τh(s) Is known in the state s Along the direction of ϕ ~ ( s , a 0 ( s ) ) \widetilde{\phi }\left( s,{ {a}_{0}}(s) \right) ϕ(s,a0(s)) and max ν ∈ C h k ( s ) * Φ 0 ⊥ ( s , ϕ ( s , a ) ) , ν * = * γ h , s k , Φ 0 ⊥ ( s , ϕ ( s , a ) ) * + β ∥ Φ 0 ⊥ ( s , ϕ ( s , a ) ) ∥ ( A h , s k ) − 1 \underset{\nu \in \mathcal{C}_{h}^{k}(s)}{\mathop{\max }}\,\left\langle \Phi _{0}^{\bot }(s,\phi (s,a)),\nu \right\rangle =\left\langle \gamma _{h,s}^{k},\Phi _{0}^{\bot }(s,\phi (s,a)) \right\rangle +\beta { {\left\| \Phi _{0}^{\bot }(s,\phi (s,a)) \right\|}_{ { {\left( \mathbf{A}_{h,s}^{k} \right)}^{-1}}}} ν∈Chk(s)max*Φ0⊥(s,ϕ(s,a)),ν*=*γh,sk,Φ0⊥(s,ϕ(s,a))*+β∥∥Φ0⊥(s,ϕ(s,a))∥∥(Ah,sk)−1 It is in orthogonal space V s ⊥ \mathcal{V}_{s}^{\bot } Vs⊥ The maximum possible cost of . therefore , * Φ 0 ( s , ϕ ( s , a ) ) , ϕ ~ ( s , a 0 ( s ) ) * ∥ ϕ ( s , a 0 ( s ) ) ∥ 2 τ h ( s ) + * γ h , s k , Φ 0 ⊥ ( s , ϕ ( s , a ) ) * + β ∥ Φ 0 ⊥ ( s , ϕ ( s , a ) ) ∥ ( A h , s k ) − 1 \frac{\left\langle { {\Phi }_{0}}(s,\phi (s,a)),\tilde{\phi }\left( s,{ {a}_{0}}(s) \right) \right\rangle }{ { {\left\| \phi \left( s,{ {a}_{0}}(s) \right) \right\|}_{2}}}{ {\tau }_{h}}(s)+\left\langle \gamma _{h,s}^{k},\Phi _{0}^{\bot }(s,\phi (s,a)) \right\rangle +\beta { {\left\| \Phi _{0}^{\bot }(s,\phi (s,a)) \right\|}_{ { {\left( \mathbf{A}_{h,s}^{k} \right)}^{-1}}}} ∥ϕ(s,a0(s))∥2*Φ0(s,ϕ(s,a)),ϕ~(s,a0(s))*τh(s)+*γh,sk,Φ0⊥(s,ϕ(s,a))*+β∥∥Φ0⊥(s,ϕ(s,a))∥∥(Ah,sk)−1 Is the real unknown cost * ϕ ( s , a ) , γ h ∗ * Of high General rate On limit \left\langle \phi (s,a),\gamma _{h}^{*} \right\rangle The upper limit of high probability *ϕ(s,a),γh∗* Of high General rate On limit , It means A h k ( s ) ⊂ A h safe ( s ) \mathcal{A}_{h}^{k}(s)\subset \mathcal{A}_{h}^{\text{safe}}(s) Ahk(s)⊂Ahsafe(s).

proposal 1 Proposition 1

With (8) Medium E 1 { {\mathcal{E}}_{1}} E1 On condition that , For all ( s , h , k ) ∈ S × [ H ] × [ K ] (s,h,k)\in \mathcal{S}\times [H]\times [K] (s,h,k)∈S×[H]×[K] , It thinks * ϕ ( s , a ) , γ h ∗ * ≤ τ , ∀ a ∈ A h k ( s ) \left\langle \phi (s,a),\mathbf{\gamma }_{h}^{*} \right\rangle \le \tau ,\forall a\in \mathcal{A} _{h}^{k}(s) *ϕ(s,a),γh∗*≤τ,∀a∈Ahk(s).

therefore , With E 1 { {\mathcal{E}}_{1}} E1 On condition that , Decision making rules a h k : = arg max a ∈ A h k ( s h k ) Q h k ( s h k , a ) a_{h}^{k}:=\arg { {\max }_{a\in \mathcal{A }_{h}^{k}\left(s_{h}^{k}\right)}}Q_{h}^{k}\left(s_{h}^{k},a \right) ahk:=argmaxa∈Ahk(shk)Qhk(shk,a) Algorithm 1 Of the 10 Line indication a h k a_{h}^{k} ahk Do not violate safety constraints . Be careful A h k ( s ) \mathcal{A}_{h}^{k}(s) Ahk(s) It's always non empty , Because as a hypothesis 2 Result , Safe action a 0 ( s ) { {a}_{0}}(s) a0(s) Always in . A h k ( s ) \mathcal{A}_{h}^{k}(s) Ahk(s) in .

Now that the estimated security set has been constructed A h k ( s ) \mathcal{A}_{h}^{k}(s) Ahk(s), We will describe how to calculate the action value function to be used Q h k Q_{h}^{k} Qhk stay UCB Decision rules , Select actions in the second cycle of the algorithm a h k a_{h}^{k} ahk. MDP The linear structure of allows us to pass through the linear form * w h ∗ , ϕ ( s , a ) * \left\langle \mathbf{w}_{h}^{*},\phi(s,a) \right\rangle *wh∗,ϕ(s,a)* A parameterized Q h ∗ ( s , a ) Q_{h}^{*}(s,a) Qh∗(s,a) , among w h ∗ : = θ h ∗ + ∫ S V h + 1 ∗ ( s ′ ) d μ ( s ′ ) \mathbf{w}_{h}^{*}:=\theta _{h}^{*}+\int_{\mathcal{S}}{V_ {h+1}^{*}}({s}')d\mu ({s}') wh∗:=θh∗+∫SVh+1∗(s′)dμ(s′). therefore , It is estimated that Q h ∗ ( s , a ) Q_{h}^{*}(s,a) Qh∗(s,a) A natural idea of is to solve w h ∗ \mathbf{w}_{h}^{*} wh∗ The least squares problem . in fact , For all ( s , a ) ∈ S × A h k ( . ) (s,a)\in \mathcal{S}\times \mathcal{A}_{h}^{k}(.) (s,a)∈S×Ahk(.), Agent computing Q h k ( s , a ) Q_{h}^{k} (s,a) Qhk(s,a) Defined as

Q h k ( s , a ) = min { * w h k , ϕ ( s , a ) * + κ h ( s ) β ∥ ϕ ( s , a ) ∥ ( A h k ) − 1 , H } Q_{h}^{k}(s,a)=\min \left\{ \left\langle \mathbf{w}_{h}^{k},\phi (s,a) \right\rangle +{ {\kappa }_{h}}(s)\beta { {\left\| \phi (s,a) \right\|}_{ { {\left( \mathbf{A}_{h}^{k} \right)}^{-1}}}},H \right\} Qhk(s,a)=min{ *whk,ϕ(s,a)*+κh(s)β∥ϕ(s,a)∥(Ahk)−1,H}

among w h k : = ( A h k ) − 1 b h k \mathbf{w}_{h}^{k}:={ {\left( \mathbf{A}_{h}^{k} \right)}^{-1}}\mathbf{b} _{h}^{k} whk:=(Ahk)−1bhk yes w h ∗ \mathbf{w}_{h}^{*} wh∗ Regularized least squares estimator of , from Gram matrix Inverse calculation of A h k : = λ I + ∑ j = 1 k − 1 ϕ h j ϕ h j ⊤ \mathbf{A}_{h}^{k}:=\lambda I+\sum\limits_{j=1}^{k-1}{\phi _{h}^{j}}\phi _{h}^{j\top } Ahk:=λI+j=1∑k−1ϕhjϕhj⊤ and b h k : = ∑ j = 1 k − 1 ϕ h j [ r h j + max a ∈ A h + 1 k ( s h + 1 j ) Q h + 1 k ( s h + 1 j , a ) ] \mathbf{b}_{h}^{k}:=\sum\limits_{j=1}^{k-1}{\phi _{h}^{j}}\left[ r_{h}^{j}+{ {\max }_{a\in \mathcal{A}_{h+1}^{k}\left( s_{h+1}^{j} \right)}}Q_{h+1}^{k}\left( s_{h+1}^{j},a \right) \right] bhk:=j=1∑k−1ϕhj[rhj+maxa∈Ah+1k(sh+1j)Qh+1k(sh+1j,a)]. here , κ h ( s ) β ∥ ϕ ( s , a ) ∥ ( A h k ) − 1 { {\kappa }_{h}}(s)\beta { {\left\| \phi (s,a) \right\|}_{ { {\left( \mathbf{A}_{h}^{k} \right)}^{-1}}}} κh(s)β∥ϕ(s,a)∥(Ahk)−1 Is the exploration reward, which is characterized by : 1) β Encourage the right r and P \mathbb{P} P To explore enough about the uncertainty of ; 2) κ h ( s ) > 1 { {\kappa }_{h}}(s)>1 κh(s)>1 Encourage the right c To explore enough about the uncertainty of . Although we use unsafe bandits and MDP (Abbasi-Yadkori et al., 2011) and (Jin et al., 2020) Standard analysis to define β, But quantify appropriately κ h ( s ) { {\kappa }_{h}}(s ) κh(s) Is not safe LSVI-UCB comparison , The existence of security constraints gives SLUCB-QVI The main challenges of analysis , This is lemma 1 It says .

3. SLUCB-QVI It's a theoretical guarantee Theoretical guarantees of SLUCB-QVI

In this section , We will discuss the technical challenges that the existence of security constraints brings to our analysis , And for SLUCB-QVI Provide a regret boundary . Before that , We make the remaining necessary assumptions , Our proposed algorithm runs under these assumptions and achieves good regret bounds .

hypothesis 3 ( Sub Gaussian noise ) Assumption 3 (Subgaussian Noise)

For all ( h , k ) ∈ [ H ] × [ K ] (h,k)\in [H]\times [K] (h,k)∈[H]×[K], ϵ h k \epsilon _{h}^{k} ϵhk Is a zero mean σ- Sub Gaussian noise A random variable .

hypothesis 4 ( bounded ) Assumption 4 (Boundedness)

No loss of generality , ∥ ϕ ( s , a ) ∥ 2 ≤ 1 { {\left\| \phi (s,a) \right\|}_{2}}\le 1 ∥ϕ(s,a)∥2≤1 For all ( s , a ) ∈ S × A (s,a)\in \mathcal{S}\times \mathcal{A} (s,a)∈S×A and max ( ∥ μ h ∗ ( S ) ∥ 2 , ∥ θ h ∗ ∥ 2 , ∥ γ h ∗ ∥ 2 ) ≤ d \max \left( { {\left\| \mu _{h}^{*}(\mathcal{S}) \right\|}_{2}},{ {\left\| \theta _{h}^{*} \right\|}_{2}},{ {\left\| \gamma _{h}^{*} \right\|}_{2}} \right)\le \sqrt{d} max(∥μh∗(S)∥2,∥θh∗∥2,∥γh∗∥2)≤d For all h ∈ [ H ] h\in [H] h∈[H].

hypothesis 5 ( Star convex set ) Assumption 5 (Star convex sets)

For all s ∈ S s\in \mathcal{S} s∈S, aggregate D ( s ) : = { ϕ ( s , a ) : a ∈ A } \mathcal{D}(s):=\{\phi (s,a):a\in \mathcal{A}\} D(s):={ ϕ(s,a):a∈A} It's a star Focus on safety features ϕ ( s , a 0 ( s ) ) \phi \left( s,{ {a}_{0}}(s) \right) ϕ(s,a0(s)) Convex set of , For all x ∈ D ( s ) \mathbf{x}\in \mathcal{D}(s ) x∈D(s) and α ∈ [ 0 , 1 ] \alpha \in [0,1] α∈[0,1], α x + ( 1 − α ) ϕ ( s , a 0 ( s ) ) ∈ D ( s ) \alpha \mathbf{x}+(1-\alpha )\phi \left( s,{ {a}_{0}}(s) \right)\in \mathcal{D}(s) αx+(1−α)ϕ(s,a0(s))∈D(s).

hypothesis 3 and 4 It's linear MDP And the standards in the slot machine literature (Jin wait forsomeone ,2020;Pacchiano wait forsomeone ,2020;Amani wait forsomeone ,2019). hypothesis 5 be necessary , To ensure that agents have the opportunity to explore a given security eigenvector ϕ ( s , a 0 ( s ) ) \phi\left(s,{ {a}_{0}}(s)\right) ϕ(s,a0(s)) Surrounding feature space . for example , Consider a simple setup , among S = { s 1 } , A = { a 1 , a 2 } , H = 1 , μ ∗ ( s 1 ) = ( 1 , 1 ) , θ ∗ = ( 0 , 1 ) \mathcal{S}=\{ { {s}_{1}}\},\mathcal{A}=\{ { {a}_{1}},{ {a }_{2}}\},H=1,{ {\mu }^{*}}({ {s}_{1}})=(1,1),{ {\theta }^{*} }=(0,1) S={ s1},A={ a1,a2},H=1,μ∗(s1)=(1,1),θ∗=(0,1), γ ∗ = ( 0 , 1 ) , τ = 2 , a 0 ( s 1 ) = a 2 { {\gamma }^{*}}=(0,1),\tau =2,{ {a}_{0}}({ {s}_{1} })={ {a}_{2}} γ∗=(0,1),τ=2,a0(s1)=a2, and D ( s 1 ) = { ϕ ( s 1 , a 1 ) , ϕ ( s 1 , a 2 ) } = { ( 0 , 1 ) , ( 1 , 0 ) } \mathcal{D}({ {s}_{1}})=\{\phi ({ {s}_{1}},{ {a}_{1}}),\phi ({ {s}_{1}},{ {a}_{2}})\}=\{(0,1),(1,0)\} D(s1)={ ϕ(s1,a1),ϕ(s1,a2)}={ (0,1),(1,0)}, It is not a convex set . ad locum , a 1 { {a}_{1}} a1 and a 2 { {a}_{2}} a2 Both actions are safe . The optimal security policy always uses a 1 { {a}_{1}} a1, It gives the highest reward . however , If D ( s 1 ) \mathcal{D}({ {s}_{1}}) D(s1) Do not include connections ( 1 , 0 ) (1,0) (1,0) and ( 0 , 1 ) (0,1) (0,1) Entire line of , Then the intelligent experience will continue to play a 2 { {a}_{2}} a2 And will not be able to explore other security operations and determine the optimal strategy will always choose a1. Besides , It is worth mentioning that , aggregate D ( s ) \mathcal{D}(s) D(s) Star convexity is safer than existing algorithms (Amani et al., 2019; Moradipari et al., 2019) The convexity assumption considered in the more moderate assumption ).

Theorem 1 (SLUCB-QVI Regret value ) Theorem 1 (Regret of SLUCB-QVI)

Assuming 1、2、3、4 and 5 Next , There is an absolute constant c β > 0 { {c}_{\beta }}>0 cβ>0 So that for any fixed δ ∈ ( 0 , 0 , 5 ) \delta \in (0,0,5) δ∈(0,0,5) , If we set β : = max ( σ d log ( 2 + 2 T λ δ ) + λ d , c β d H log ( d T δ ) ) \beta :=\max \left( \sigma \sqrt{d\log \left( \frac{2+\frac{2T}{\lambda }}{\delta } \right)}+\sqrt{\lambda d},{ {c}_{\beta }}dH\sqrt{\log \left( \frac{dT}{\delta } \right)} \right) β:=max(σdlog(δ2+λ2T)+λd,cβdHlog(δdT)) and κ h ( s ) : = 2 H τ − τ h ( s ) + 1 { {\kappa }_{h}}(s):=\frac{2H}{\tau -{ {\tau }_{h}}(s)}+1 κh(s):=τ−τh(s)2H+1, Then the probability is at least 1 − 2 δ 1-2\delta 1−2δ, It thinks R K ≤ 2 H T log ( d T δ ) + ( 1 + κ ) β 2 d H T log ( 1 + K d λ ) { {R}_{K}}\le 2H\sqrt{T\log \left( \frac{dT}{\delta } \right)}+(1+\kappa )\beta \sqrt{ 2dHT\log \left( 1+\frac{K}{d\lambda } \right)} RK≤2HTlog(δdT)+(1+κ)β2dHTlog(1+dλK), among κ : = max ( s , h ) ∈ S × [ H ] κ h ( s ) \kappa :={ {\max }_{(s,h)\in \mathcal{S}\times [H]}}{ {\kappa }_{h}}(s) κ:=max(s,h)∈S×[H]κh(s)

here , T = K H T=KH T=KH Is the total number of actions played . We observe that the regret bound is the same order of magnitude as the most advanced unsafe Algorithm , for example LSVI-UCB (Jin et al., 2020), There is only one additional factor in the second term κ. The complete certificate is in the appendix A.3 Middle Report . In the next section , We give a sketch of the proof .

3.1. Theorem 1 Proof outline of

First , We state from (Abbasi-Yadkori et al., 2011; Jin et al., 2020) The following theorem borrowed .

Theorem 2 Theorem 2 (Thm. 2 in (Abbasi-Yadkori et al., 2011) and Lemma B.4 in (Jin et al., 2020)).

For any fixed strategy π \pi π, Definition V h k ( s ) : = max a ∈ A h k ( s , a ) Q h k ( s , a ) V_{h}^{k}(s):={ {\max }_{a\in \mathcal{A}_{h}^{k}(s,a)} }Q_{h}^{k}(s,a) Vhk(s):=maxa∈Ahk(s,a)Qhk(s,a) And events

E 2 : = { ∣ * w h k , ϕ ( s , a ) * − Q h π ( s , a ) + [ P h ( V h + 1 π − V h + 1 k ) ] ( s , a ) ∣ ≤ β ∥ ϕ ( s , a ) ∥ ( A h k ) − 1 , ∀ ( a , s , h , k ) ∈ A × S × [ H ] × [ K ] } \begin{aligned} & { {\mathcal{E}}_{2}}:=\left\{ \left| \left\langle \mathbf{w}_{h}^{k},\phi (s,a) \right\rangle -Q_{h}^{\pi }(s,a)+\left[ { {\mathbb{P}}_{h}}\left( V_{h+1}^{\pi }-V_{h+1}^{k} \right) \right](s,a) \right| \right. \\ & \quad \ \left. \le \beta \|\phi (s,a){ {\|}_{ { {\left( \mathbf{A}_{h}^{k} \right)}^{-1}}}},\forall (a,s,h,k)\in \mathcal{A}\times \mathcal{S}\times [H]\times [K] \right\} \\ \end{aligned} E2:={ ∣∣*whk,ϕ(s,a)*−Qhπ(s,a)+[Ph(Vh+1π−Vh+1k)](s,a)∣∣ ≤β∥ϕ(s,a)∥(Ahk)−1,∀(a,s,h,k)∈A×S×[H]×[K]}

And recall (8) in E1 The definition of . that , Assuming 1、2、3、4 And Theorem 1 in β Under the definition of , There is an absolute constant c β > 0 { {c}_{\beta }}>0 cβ>0, So that for any fixed δ stay ( 0 , 0 , 5 ) \delta \ stay (0,0,5) δ stay (0,0,5) in , The probability is at least 1 − δ 1-\delta 1−δ, event E : = E 2 ⋂ E 1 \mathcal{E}:={ {\mathcal{E}}_{2}}\bigcap { {\mathcal{ E}}_{1}} E:=E2⋂E1 Retain .

As our main technical contribution , In lemma 1 in , We proved that when κ h ( s ) : = 2 H τ − τ h ( s ) + 1 { {\kappa }_{h}}(s):=\frac{2H}{\tau -{ {\tau }_{h}}( s)}+1 κh(s):=τ−τh(s)2H+1, Keep optimistic in the face of safety constraints , Guarantee Q h ∗ ( s , a ) ≤ Q h k ( s , a ) Q_{h}^{*}(s,a)\le Q_{h}^{k}(s,a) Qh∗(s,a)≤Qhk(s,a) . Intuitively speaking , This is necessary , Because the algorithm 1 The first 10 The maximization of row is not in the whole A h safe ( s h k ) \mathcal{A}_{h}^{\text{safe}}\left( s_{h}^{k} \right ) Ahsafe(shk), But it's just a subset . therefore , Need bigger κ h ( s ) { {\kappa }_{h}}(s) κh(s) value ( And unsafe algorithms LSVI-UCB Medium κ h ( s ) = 1 { {\kappa }_{h}}(s)=1 κh(s)=1 comparison ) Provide enough exploration for the algorithm , In order to A h k ( s h k ) \mathcal{A}_{h}^{k}\left(s_{h}^{k}\right) Ahk(shk) Selected actions in - Usually enough - optimistic , namely , Q h ∗ ( s , a ) ≤ Q h k ( s , a ) Q_{h}^{*}(s,a)\le Q_{h}^{k}(s,a) Qh∗(s,a)≤Qhk(s,a).

lemma 1 ( face SLUCB-QVI Optimistic attitude towards safety constraints ) Lemma 1 (Optimism in the face of safety constraint in SLUCB-QVI)

Make κ h ( s ) : = 2 H τ − τ h ( s ) + 1 { {\kappa }_{h}}(s):=\frac{2H}{\tau -{ {\tau }_{h}}(s)}+1 κh(s):=τ−τh(s)2H+1 And assumptions 1,2,3 ,4,5 keep . then , With E \mathcal{E} E On condition that , It thinks V h ∗ ( s ) ≤ V h k ( s ) , ∀ ( s , h , k ) ∈ S × [ H ] × [ K ] V_{h}^{*}(s)\le V_{h}^{k}(s),\forall (s,h,k) \in \mathcal{S}\times [H]\times [K] Vh∗(s)≤Vhk(s),∀(s,h,k)∈S×[H]×[K].

We're in the appendix A.2 Proof is reported in . As lemma 1 And Theorem 2 Events defined in E 2 { {\mathcal{E}}_{2}} E2 The direct conclusion of , We have :

Q h ∗ ( s , a ) ≤ * w h k , ϕ ( s , a ) * + β ∥ ϕ ( s , a ) ∥ ( A h k ) − 1 + [ P h V h + 1 ∗ − V h + 1 k ] ( s , a ) ≤ Q h k ( s , a ) Q_{h}^{*}(s,a)\le \left\langle \mathbf{w}_{h}^{k},\phi (s,a) \right\rangle +\beta { {\left\| \phi (s,a) \right\|}_{ { {\left( \mathbf{A}_{h}^{k} \right)}^{-1}}}}+\left[ { {\mathbb{P}}_{h}}V_{h+1}^{*}-V_{h+1}^{k} \right](s,a)\le Q_{h}^{k}(s,a) Qh∗(s,a)≤*whk,ϕ(s,a)*+β∥ϕ(s,a)∥(Ahk)−1+[PhVh+1∗−Vh+1k](s,a)≤Qhk(s,a) This is encapsulated in the following corollary .

inference 1 (UCB) Corollary 1 (UCB)

Give Way κ h ( s ) : = 2 H τ − τ h ( s ) + 1 { {\kappa }_{h}}(s):=\frac{2H}{\tau -{ {\tau }_{h}}(s)}+1 κh(s):=τ−τh(s)2H+1 And let assumptions 1,2, 3,4,5 keep . then , With E \mathcal{E} E On condition that , It thinks Q h ∗ ( s , a ) ≤ Q h k ( s , a ) , ∀ ( a , s , h , k ) ∈ A × S × [ H ] × [ K ] Q_{h}^{*}(s,a)\le Q_{h}^{k}(s,a),\forall (a, s,h,k)\in \mathcal{A}\times \mathcal{S}\times [H]\times [K] Qh∗(s,a)≤Qhk(s,a),∀(a,s,h,k)∈A×S×[H]×[K].

Using lemma 1 Proved SLUCB-QVI Of UCB After the nature , We are going to use classic unsafe LSVI-UCB (Jin et al., 2020) To complete the analysis and establish SLUCB-QVI The ultimate regret world .

4、5、6

Safety reinforcement learning based on linear function approximation Safe RL with Linear Function Approximation translate 2 —— https://blog.csdn.net/baishuiniyaonulia/article/details/125572881

边栏推荐

- MySQL develops small mall management system

- Vanishing numbers

- Lavel document reading notes -how to use @auth and @guest directives in lavel

- Web端自动化测试失败原因汇总

- Exercise 9-1 time conversion (15 points)

- AUTOSAR from getting started to mastering 100 lectures (106) - SOA in domain controllers

- Kubernetes CNI 插件之Fabric

- Laravel文档阅读笔记-How to use @auth and @guest directives in Laravel

- 基于线性函数近似的安全强化学习 Safe RL with Linear Function Approximation 翻译 1

- Hands on deep learning (46) -- attention mechanism

猜你喜欢

Hands on deep learning (40) -- short and long term memory network (LSTM)

品牌连锁店5G/4G无线组网方案

智能网关助力提高工业数据采集和利用

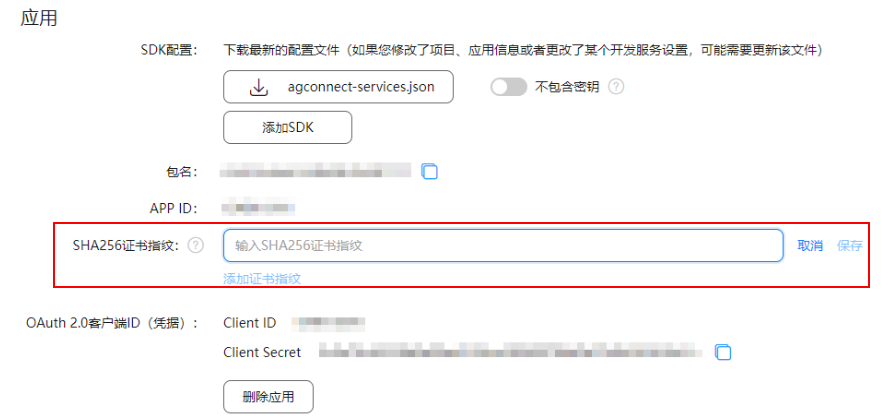

【FAQ】华为帐号服务报错 907135701的常见原因总结和解决方法

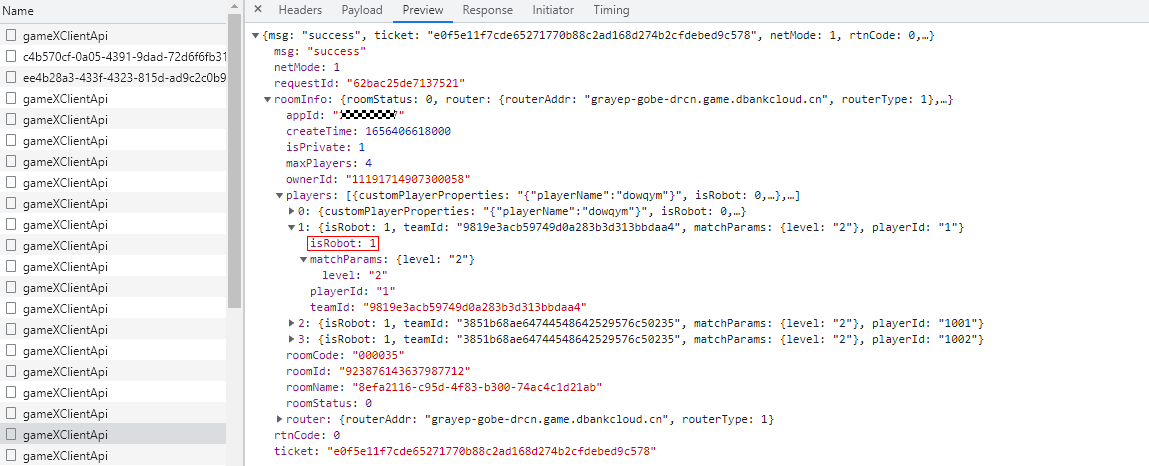

华为联机对战如何提升玩家匹配成功几率

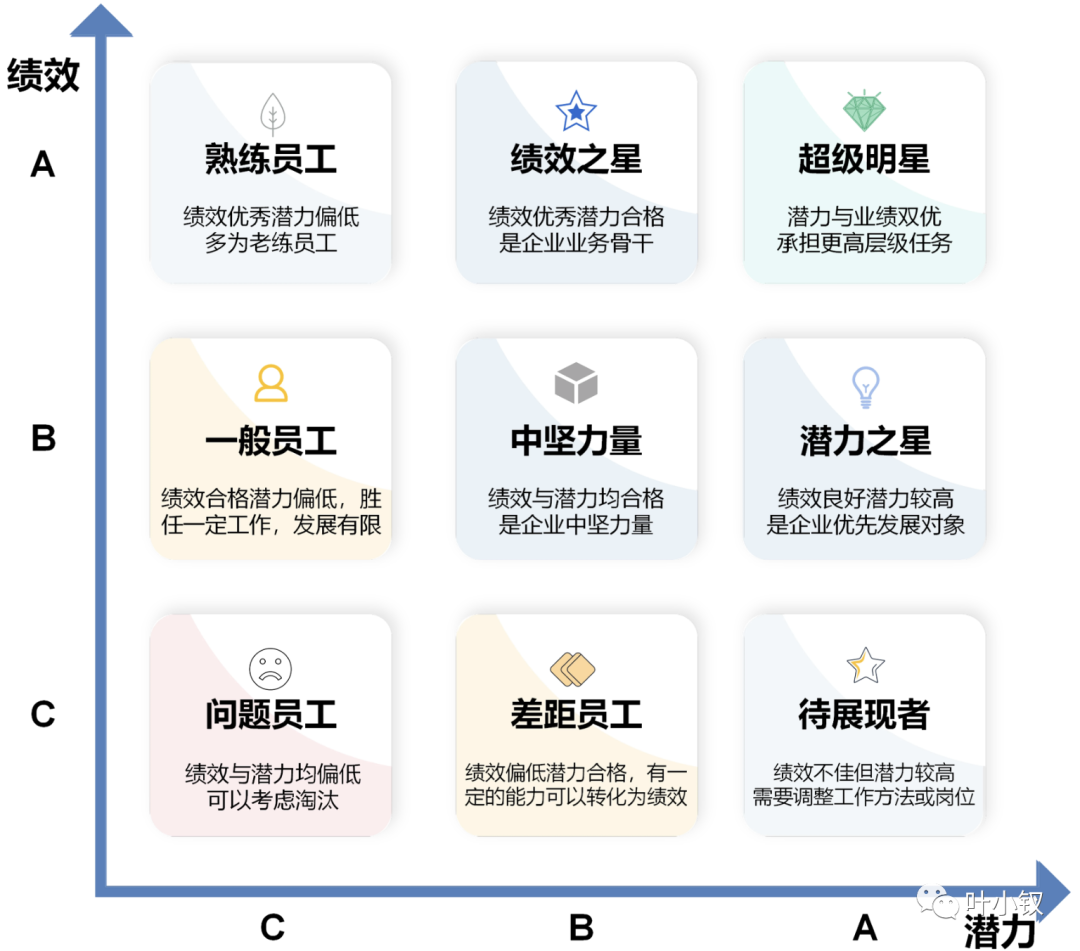

技术管理进阶——如何设计并跟进不同层级同学的绩效

![[200 opencv routines] 218 Multi line italic text watermark](/img/3e/537476405f02f0ebd6496067e81af1.png)

[200 opencv routines] 218 Multi line italic text watermark

C # use gdi+ to add text with center rotation (arbitrary angle)

Devop basic command

Web端自动化测试失败原因汇总

随机推荐

Work order management system OTRs

Kotlin 集合操作汇总

System. Currenttimemillis() and system Nanotime (), which is faster? Don't use it wrong!

mmclassification 标注文件生成

Custom type: structure, enumeration, union

【FAQ】华为帐号服务报错 907135701的常见原因总结和解决方法

System.currentTimeMillis() 和 System.nanoTime() 哪个更快?别用错了!

法向量点云旋转

什么是 DevSecOps?2022 年的定义、流程、框架和最佳实践

Launpad | Basics

今日睡眠质量记录78分

PHP book borrowing management system, with complete functions, supports user foreground management and background management, and supports the latest version of PHP 7 x. Database mysql

Web端自动化测试失败原因汇总

H5 audio tag custom style modification and adding playback control events

用数据告诉你高考最难的省份是哪里!

Hands on deep learning (39) -- gating cycle unit Gru

Pcl:: fromrosmsg alarm failed to find match for field 'intensity'

Hands on deep learning (45) -- bundle search

【Day1】 deep-learning-basics

Daughter love in lunch box