[AMEX] LGBM Optuna American Express Credit Card Fraud Contest kaggle

2022-08-01 00:03:00 【Artificial Intelligence Zeng Xiaojian】

Competition description:

Whether dining out at a restaurant or buying concert tickets, modern life relies on the convenience of a credit card for everyday purchases. It saves us from carrying large amounts of cash, and it can advance the full cost of a purchase to be paid back over time. How do card issuers know we will pay back what we charge? This is a complex problem with many existing solutions, and even more potential improvements, to be explored in this competition.

Credit default prediction is central to managing risk in a consumer lending business. It allows lenders to optimize lending decisions, which leads to a better customer experience and sound business economics. Current models already help manage risk, but it is possible to build better models that outperform those currently in use.

American Express is a globally integrated payments company. As the largest payment card issuer in the world, it provides customers with access to products, insights, and experiences that enrich lives and build business success.

In this competition, you will apply your machine learning skills to predict credit default. Specifically, you will leverage an industrial-scale dataset to build a machine learning model that challenges the current model in production. The training, validation, and testing datasets include time-series behavioral data and anonymized customer profile information. You are free to explore any technique to create the most powerful model, from creating features to using the data in a more organic way within a model.

If successful, you will help create a better customer experience for cardholders by making it easier to be approved for a credit card. Top solutions could challenge the credit default prediction model used by the world's largest payment card issuer, earning you cash prizes, the opportunity to interview with American Express, and potentially a rewarding new career.

Data description:

The objective of this competition is to predict the probability that a customer will not pay back their credit card balance in the future, based on their monthly customer profile. The binary target is calculated by observing an 18-month performance window after the latest credit card statement: if the customer does not pay the amount due within 120 days of their latest statement date, it is considered a default event.

The dataset contains aggregated profile features for each customer at each statement date. Features are anonymized and normalized, and fall into the following general categories:

D_* = Delinquency variables

S_* = Spend variables

P_* = Payment variables

B_* = Balance variables

R_* = Risk variables

The following features are categorical:

['B_30', 'B_38', 'D_114', 'D_116', 'D_117', 'D_120', 'D_126', 'D_63', 'D_64', 'D_66', 'D_68']

Your task is to predict, for each customer_ID, the probability of a future payment default (target = 1).

Note that the negative class in this dataset has been subsampled to 5%, and therefore receives a 20x weighting in the scoring metric.
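To make the 20x weighting concrete, here is a small sketch in plain Python. The label list is invented for illustration; the weight expression mirrors the one used in the metric code later in this post (`20.0 - target * 19.0`).

```python
# Hypothetical labels: 1 = default (positive), 0 = no default (negative)
target = [1, 0, 0, 1, 0]

# Negatives were subsampled to 5%, so each remaining negative row
# stands in for 20 rows; positives keep a weight of 1.
weight = [20.0 - 19.0 * y for y in target]

total = sum(weight)
share = [w / total for w in weight]  # fraction of total weight per row

print(weight)
print(share)
```

With these made-up labels the three negatives carry almost all of the total weight, which is why the "top 4%" cutoff in the metric is taken over cumulative weight rather than over raw row counts.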

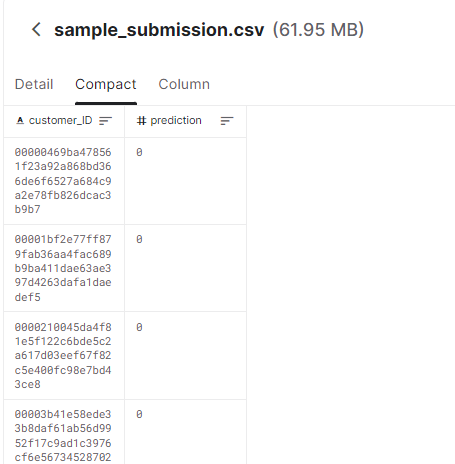

Submission format:

LGBM Optuna Starter

This notebook shows how to use Optuna with LightGBM (LGBM) models to automate the hyperparameter search.

References

(1) AMEX data - integer dtypes - parquet format | Kaggle

(2) XGBoost Starter - [0.793] | Kaggle

(3) American Express - Default Prediction | Kaggle

    # LOAD LIBRARIES
    import pandas as pd, numpy as np # CPU libraries
    import cupy, cudf # GPU libraries
    import matplotlib.pyplot as plt, gc, os

    print('RAPIDS version', cudf.__version__)

    # VERSION NAME FOR SAVED MODEL FILES
    VER = 1
    # TRAIN RANDOM SEED
    SEED = 42
    # FILL NAN VALUE
    NAN_VALUE = -127 # will fit in int8
    # FOLDS PER MODEL
    FOLDS = 5

Process and feature engineer training data

I will reuse the functions and data preprocessing methods from the XGBoost Starter notebook (2).

We load the integer-dtype parquet dataset listed as reference (1), then engineer the aggregate features suggested in the notebook cited in the code comment below. We use RAPIDS and the GPU to create the new features quickly.
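The loading code in this section shrinks each long hexadecimal `customer_ID` string to an `int64` by keeping only its last 16 hex digits. As a rough CPU-only illustration of that idea (not the cuDF call itself), the sketch below does the same in plain Python. The helper name `compress_customer_id` and the sample ID are made up for this example, and the wrap into the signed 64-bit range is an assumption about how the values end up stored as `int64`.

```python
def compress_customer_id(customer_id: str) -> int:
    """Map the last 16 hex digits of an ID string to a signed 64-bit integer."""
    value = int(customer_id[-16:], 16)  # unsigned value in [0, 2**64)
    if value >= 2**63:                  # assumed two's-complement wrap into int64
        value -= 2**64
    return value

# Hypothetical 64-character ID, only for illustration
sample_id = "ab12" * 16
compressed = compress_customer_id(sample_id)
print(compressed)
```

Sixteen hex digits carry 64 bits, so the result fits in one integer column, which is far cheaper to group and join on than the full string.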

    def read_file(path = '', usecols = None):
        # LOAD DATAFRAME
        if usecols is not None: df = cudf.read_parquet(path, columns=usecols)
        else: df = cudf.read_parquet(path)
        # REDUCE DTYPE FOR CUSTOMER AND DATE
        df['customer_ID'] = df['customer_ID'].str[-16:].str.hex_to_int().astype('int64')
        df.S_2 = cudf.to_datetime( df.S_2 )
        # SORT BY CUSTOMER AND DATE (so agg('last') works correctly)
        #df = df.sort_values(['customer_ID','S_2'])
        #df = df.reset_index(drop=True)
        # FILL NAN
        df = df.fillna(NAN_VALUE)
        print('shape of data:', df.shape)
        return df

    def process_and_feature_engineer(df):
        # FEATURE ENGINEERING FROM
        # https://www.kaggle.com/code/huseyincot/amex-agg-data-how-it-created
        all_cols = [c for c in list(df.columns) if c not in ['customer_ID','S_2']]
        cat_features = ["B_30","B_38","D_114","D_116","D_117","D_120","D_126","D_63","D_64","D_66","D_68"]
        num_features = [col for col in all_cols if col not in cat_features]

        # numeric features: per-customer summary statistics
        test_num_agg = df.groupby("customer_ID")[num_features].agg(['mean', 'std', 'min', 'max', 'last'])
        test_num_agg.columns = ['_'.join(x) for x in test_num_agg.columns]

        # categorical features: per-customer counts and last value
        test_cat_agg = df.groupby("customer_ID")[cat_features].agg(['count', 'last', 'nunique'])
        test_cat_agg.columns = ['_'.join(x) for x in test_cat_agg.columns]

        df = cudf.concat([test_num_agg, test_cat_agg], axis=1)
        del test_num_agg, test_cat_agg
        print('shape after engineering', df.shape )
        return df

    print('Reading train data...')
    TRAIN_PATH = '../input/amex-data-integer-dtypes-parquet-format/train.parquet'
    train = read_file(path = TRAIN_PATH)
    train = process_and_feature_engineer(train)

    targets = cudf.read_csv('../input/amex-default-prediction/train_labels.csv')
    targets['customer_ID'] = targets['customer_ID'].str[-16:].str.hex_to_int().astype('int64')
    targets.index = targets['customer_ID'].sort_index()
    targets = targets.drop('customer_ID', axis=1)
    train = train.join(targets,on =['customer_ID'] ).sort_index()
    del targets
    gc.collect()

    # NEEDED TO MAKE CV DETERMINISTIC (cudf merge above randomly shuffles rows)
    train = train.sort_index().reset_index()

    # FEATURES
    FEATURES = train.columns[1:-1]

Faster metric Implementation
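For orientation, the function in this section evaluates the competition metric, which averages two quantities: the normalized weighted Gini coefficient G and the default rate captured at 4%, D. The notation below is a summary written for this walkthrough, with symbols chosen here rather than taken from the notebook. Rows are assumed sorted by descending prediction, y_i is the label, and w_i mirrors the `20.0 - target * 19.0` weights in the code.

```latex
M = \tfrac{1}{2}\,(G + D), \qquad
w_i = 20 - 19\,y_i, \quad y_i \in \{0, 1\}

D = \frac{\sum_{i \in T} y_i}{\sum_{i} y_i}, \qquad
T = \Bigl\{\, i : \frac{\sum_{j \le i} w_j}{\sum_{j} w_j} \le 0.04 \Bigr\}

G = \frac{\mathrm{gini}}{\mathrm{gini}_{\max}}
```

Here T is the set of rows that fall inside the top 4% of cumulative weight, and gini and gini_max correspond to the variables of the same name in the code.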

    def amex_metric(y_true: np.array, y_pred: np.array) -> float:
        # count of positives and negatives
        n_pos = y_true.sum()
        n_neg = y_true.shape[0] - n_pos
        # sorting by descending prediction values
        indices = np.argsort(y_pred)[::-1]
        preds, target = y_pred[indices], y_true[indices]
        # filter the top 4% by cumulative row weights
        weight = 20.0 - target * 19.0
        cum_norm_weight = (weight / weight.sum()).cumsum()
        four_pct_filter = cum_norm_weight <= 0.04
        # default rate captured at 4%
        d = target[four_pct_filter].sum() / n_pos
        # weighted gini coefficient
        lorentz = (target / n_pos).cumsum()
        gini = ((lorentz - cum_norm_weight) * weight).sum()
        # max weighted gini coefficient
        gini_max = 10 * n_neg * (1 - 19 / (n_pos + 20 * n_neg))
        # normalized weighted gini coefficient
        g = gini / gini_max
        return 0.5 * (g + d)

    def lgb_amex_metric(y_true, y_pred):
        # (name, value, is_higher_better)
        return ('Score',
                amex_metric(y_true, y_pred),
                True)

    import datetime
    import warnings
    import gc
    import pickle
    import sklearn
    from sklearn.model_selection import StratifiedKFold, train_test_split
    import lightgbm as lgb

Hyperparameter tuning with Optuna

    import optuna

    train_pd = train.to_pandas()
    del train
    gc.collect()

    train_df, test_df = train_test_split(train_pd, test_size=0.25, stratify=train_pd['target'])
    del train_pd
    gc.collect()

    X_train = train_df.drop(['customer_ID', 'target'], axis=1)
    X_test = test_df.drop(['customer_ID', 'target'], axis=1)
    y_train = train_df['target']
    y_test = test_df['target']
    del train_df, test_df
    gc.collect()

    # 1. Define an objective function to be maximized.
    def objective(trial):
        dtrain = lgb.Dataset( X_train, label=y_train)
        # 2. Suggest values of the hyperparameters using a trial object.
        param = {
            'objective': 'binary',
            'metric': 'binary_logloss',
            'seed' : 42,
            'lambda_l1': trial.suggest_float('lambda_l1', 1e-8, 10.0, log=True),
            'lambda_l2': trial.suggest_float('lambda_l2', 1e-8, 10.0, log=True),
            'num_leaves': trial.suggest_int('num_leaves', 2, 256),
            'feature_fraction': trial.suggest_float('feature_fraction', 0.1, 1.0),
            'bagging_fraction': trial.suggest_float('bagging_fraction', 0.1, 1.0),
            'bagging_freq': trial.suggest_int('bagging_freq', 1, 7),
            'min_data_in_leaf': trial.suggest_int('min_child_samples', 5, 100),
            'learning_rate': trial.suggest_float('learning_rate', 0.001, 0.05, step=0.001),
            'device' : 'gpu',
            "verbosity": -1,
        }
        gbm = lgb.train(param, dtrain)
        preds = gbm.predict(X_test)
        pred_labels = np.rint(preds)
        accuracy = sklearn.metrics.accuracy_score(y_test, pred_labels)
        return accuracy

- SVN server construction + SVN client + TeamCity integrated environment construction + VS2019 development

- /etc/resolv.conf的作用

- How to import a Golang external package and use it?

- SQL injection Less47 (error injection) and Less49 (time blind injection)

- SQL injection Less46 (injection after order by + rand() Boolean blind injection)

- thymeleaf迭代map集合

- Basic use of vim - bottom line mode

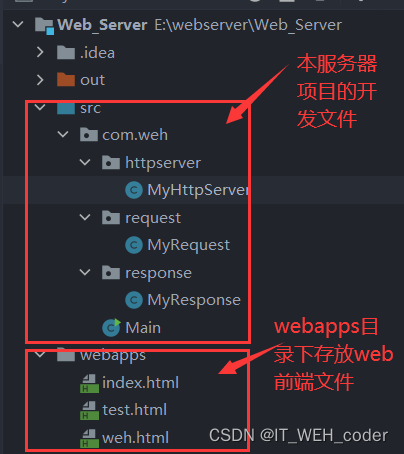

- 手写一个简单的web服务器(B/S架构)

- 简单的vim配置

- 清华大学陈建宇教授团队 | 基于接触丰富机器人操作的接触安全强化学习框架

猜你喜欢

随机推荐

Xinao Learning Plan The Road to Informatics Competition (2022.07.31)

Advanced Algebra _ Proof _ Any matrix is similar to an upper triangular matrix

助力数字政府建设,中科三方构建域名安全保障体系

NIO programming

UOS统信系统 - WindTerm使用

Thinking and Implementation of Object Cache Service

南方科技大学:Xiaoying Tang | AADG:视网膜图像分割领域泛化的自动增强

编译型语言和解释型语言的区别

IPD流程专业术语

/usr/local/bin和/usr/bin的区别

SQL注入 Less46(order by后的注入+rand()布尔盲注)

SVN server construction + SVN client + TeamCity integrated environment construction + VS2019 development

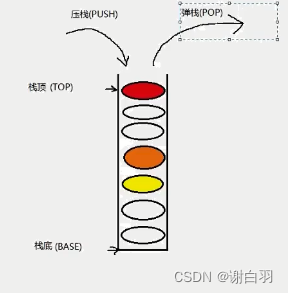

程序进程和线程(线程的并发与并行)以及线程的基本创建和使用

Design of Fire and Anti-theft System Based on Single Chip GSM

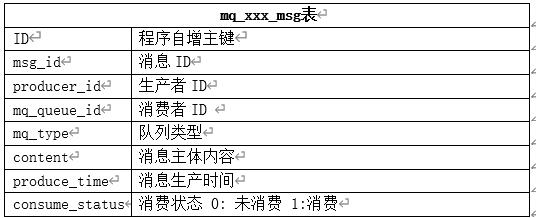

基于mysql的消息队列设计

Drawing process of hand-drawn map of scenic spots

Automated machine learning pycaret: PyCaret Basic Auto Classification LightGBM

对象缓存服务的思考和实现

/etc/sysconfig/network-scripts 配置网卡

Difference between first and take(1) operators in NgRx