当前位置:网站首页>MapReduce实现矩阵乘法–实现代码

MapReduce实现矩阵乘法–实现代码

2022-07-03 12:39:00 【星哥玩云】

之前写了一篇分析MapReduce实现矩阵乘法算法的文章:Mapreduce实现矩阵乘法的算法思路 http://www.linuxidc.com/Linux/2014-09/106646.htm

为了让大家更直观的了解程序执行,今天编写了实现代码供大家参考。

编程环境:

- java version "1.7.0_40"

- Eclipse Kepler

- Windows7 x64

- Ubuntu 12.04 LTS

- Hadoop2.2.0

- Vmware 9.0.0 build-812388

输入数据:

A矩阵存放地址:hdfs://singlehadoop:8020/wordspace/dataguru/hadoopdev/week09/matrixmultiply/matrixA/matrixa

A矩阵内容: 3 4 6 4 0 8

B矩阵存放地址:hdfs://singlehadoop:8020/wordspace/dataguru/hadoopdev/week09/matrixmultiply/matrixB/matrixb

B矩阵内容: 2 3 3 0 4 1

实现代码:

一共三个类:

- 驱动类MMDriver

- Map类MMMapper

- Reduce类MMReducer

大家可根据个人习惯合并成一个类使用。

MMDriver.java

package dataguru.matrixmultiply;

import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.FileSystem; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class MMDriver { public static void main(String[] args) throws Exception { // set configuration Configuration conf = new Configuration();

// create job Job job = new Job(conf,"MatrixMultiply"); job.setJarByClass(dataguru.matrixmultiply.MMDriver.class); // specify Mapper & Reducer job.setMapperClass(dataguru.matrixmultiply.MMMapper.class); job.setReducerClass(dataguru.matrixmultiply.MMReducer.class); // specify output types of mapper and reducer job.setOutputKeyClass(Text.class); job.setOutputValueClass(Text.class); job.setMapOutputKeyClass(Text.class); job.setMapOutputValueClass(Text.class); // specify input and output DIRECTORIES Path inPathA = new Path("hdfs://singlehadoop:8020/wordspace/dataguru/hadoopdev/week09/matrixmultiply/matrixA"); Path inPathB = new Path("hdfs://singlehadoop:8020/wordspace/dataguru/hadoopdev/week09/matrixmultiply/matrixB"); Path outPath = new Path("hdfs://singlehadoop:8020/wordspace/dataguru/hadoopdev/week09/matrixmultiply/matrixC"); FileInputFormat.addInputPath(job, inPathA); FileInputFormat.addInputPath(job, inPathB); FileOutputFormat.setOutputPath(job,outPath);

// delete output directory try{ FileSystem hdfs = outPath.getFileSystem(conf); if(hdfs.exists(outPath)) hdfs.delete(outPath); hdfs.close(); } catch (Exception e){ e.printStackTrace(); return ; } // run the job System.exit(job.waitForCompletion(true) ? 0 : 1); } }

MMMapper.java

package dataguru.matrixmultiply;

import java.io.IOException; import java.util.StringTokenizer;

import org.apache.hadoop.io.IntWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Mapper; import org.apache.hadoop.mapreduce.lib.input.FileSplit;

public class MMMapper extends Mapper<Object, Text, Text, Text> { private String tag; //current matrix private int crow = 2;// 矩阵A的行数 private int ccol = 2;// 矩阵B的列数 private static int arow = 0; //current arow private static int brow = 0; //current brow @Override protected void setup(Context context) throws IOException, InterruptedException { // TODO get inputpath of input data, set to tag FileSplit fs = (FileSplit)context.getInputSplit(); tag = fs.getPath().getParent().getName(); }

/** * input data include two matrix files */ public void map(Object key, Text value, Context context) throws IOException, InterruptedException { StringTokenizer str = new StringTokenizer(value.toString()); if ("matrixA".equals(tag)) { //left matrix,output key:x,y int col = 0; while (str.hasMoreTokens()) { String item = str.nextToken(); //current x,y = line,col for (int i = 0; i < ccol; i++) { Text outkey = new Text(arow+","+i); Text outvalue = new Text("a,"+col+","+item); context.write(outkey, outvalue); System.out.println(outkey+" | "+outvalue); } col++; } arow++; }else if ("matrixB".equals(tag)) { int col = 0; while (str.hasMoreTokens()) { String item = str.nextToken(); //current x,y = line,col for (int i = 0; i < crow; i++) { Text outkey = new Text(i+","+col); Text outvalue = new Text("b,"+brow+","+item); context.write(outkey, outvalue); System.out.println(outkey+" | "+outvalue); } col++; } brow++; } } }

MMReducer.java

package dataguru.matrixmultiply;

import java.io.IOException; import java.util.HashMap; import java.util.Iterator; import java.util.Map; import java.util.StringTokenizer;

import org.apache.hadoop.io.IntWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Reducer; import org.apache.hadoop.mapreduce.Reducer.Context;

public class MMReducer extends Reducer<Text, Text, Text, Text> {

public void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {

Map<String,String> matrixa = new HashMap<String,String>(); Map<String,String> matrixb = new HashMap<String,String>(); for (Text val : values) { //values example : b,0,2 or a,0,4 StringTokenizer str = new StringTokenizer(val.toString(),","); String sourceMatrix = str.nextToken(); if ("a".equals(sourceMatrix)) { matrixa.put(str.nextToken(), str.nextToken()); //(0,4) } if ("b".equals(sourceMatrix)) { matrixb.put(str.nextToken(), str.nextToken()); //(0,2) } } int result = 0; Iterator<String> iter = matrixa.keySet().iterator(); while (iter.hasNext()) { String mapkey = iter.next(); result += Integer.parseInt(matrixa.get(mapkey)) * Integer.parseInt(matrixb.get(mapkey)); }

context.write(key, new Text(String.valueOf(result))); } }

边栏推荐

- Task6: using transformer for emotion analysis

- 剑指 Offer 15. 二进制中1的个数

- [Exercice 5] [principe de la base de données]

- mysqlbetween实现选取介于两个值之间的数据范围

- Logback log framework

- [Database Principle and Application Tutorial (4th Edition | wechat Edition) Chen Zhibo] [Chapter 6 exercises]

- Flink SQL knows why (16): dlink, a powerful tool for developing enterprises with Flink SQL

- C graphical tutorial (Fourth Edition)_ Chapter 20 asynchronous programming: examples - using asynchronous

- 有限状态机FSM

- [combinatorics] permutation and combination (multiple set permutation | multiple set full permutation | multiple set incomplete permutation all elements have a repetition greater than the permutation

猜你喜欢

DQL basic query

MySQL_ JDBC

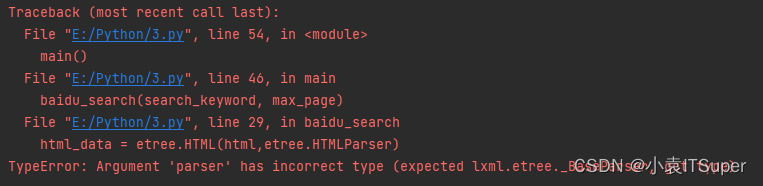

已解决TypeError: Argument ‘parser‘ has incorrect type (expected lxml.etree._BaseParser, got type)

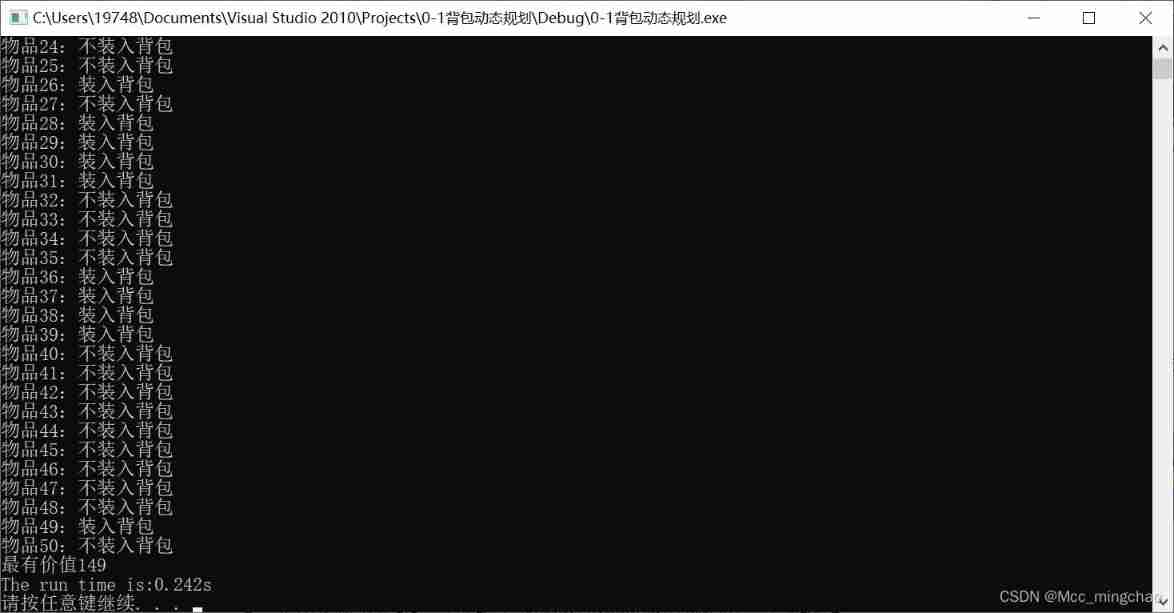

01 three solutions to knapsack problem (greedy dynamic programming branch gauge)

人身变声器的原理

PowerPoint tutorial, how to save a presentation as a video in PowerPoint?

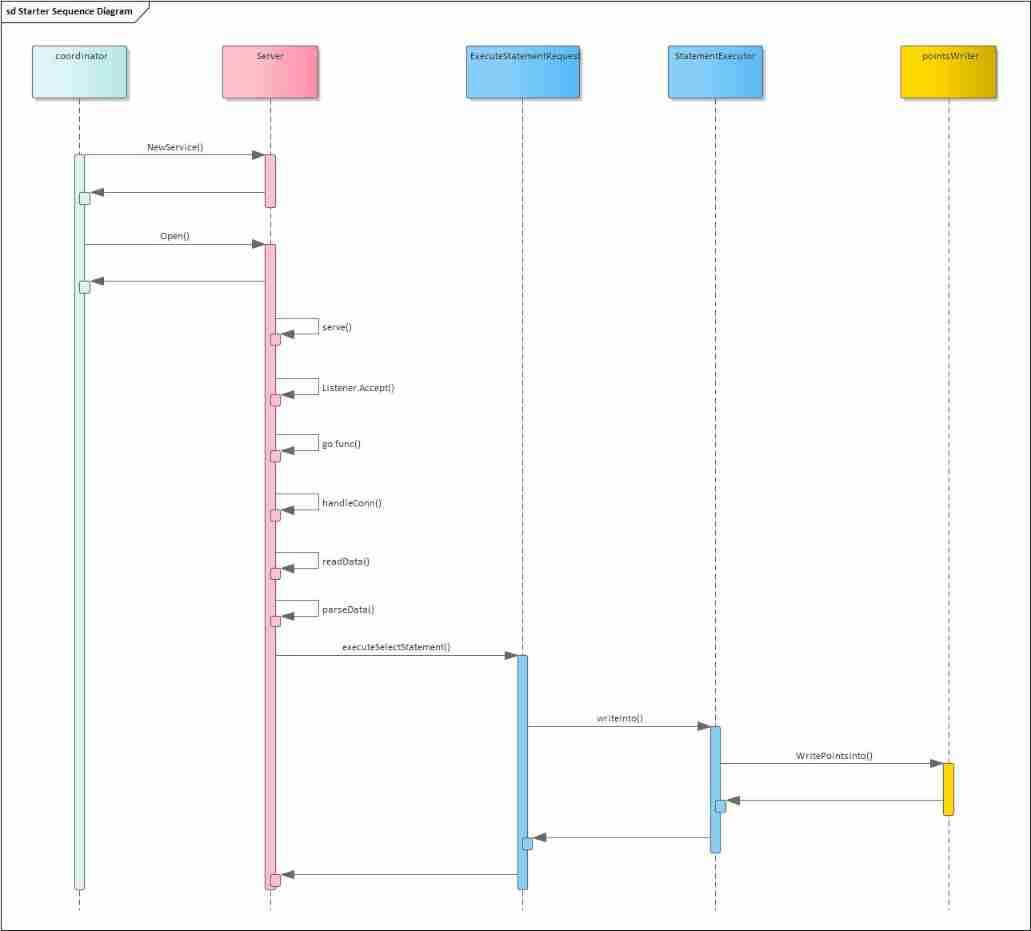

2022-02-14 analysis of the startup and request processing process of the incluxdb cluster Coordinator

MySQL

剑指 Offer 14- II. 剪绳子 II

106. 如何提高 SAP UI5 应用路由 url 的可读性

随机推荐

Logback 日志框架

Sword finger offer14 the easiest way to cut rope

php:&nbsp; The document cannot be displayed in Chinese

C graphical tutorial (Fourth Edition)_ Chapter 15 interface: interfacesamplep268

SLF4J 日志门面

2022-01-27 research on the minimum number of redis partitions

Today's sleep quality record 77 points

[Exercice 5] [principe de la base de données]

已解决(机器学习中查看数据信息报错)AttributeError: target_names

106. 如何提高 SAP UI5 应用路由 url 的可读性

Seven habits of highly effective people

JSP and filter

mysql更新时条件为一查询

[data mining review questions]

Flink SQL knows why (12): is it difficult to join streams? (top)

用户和组命令练习

Anan's doubts

2022-02-11 practice of using freetsdb to build an influxdb cluster

【Colab】【使用外部数据的7种方法】

Two solutions of leetcode101 symmetric binary tree (recursion and iteration)