
KubeSphere Cluster: Configuring an NFS Storage Solution (Bookmark Edition)

2022-06-27 05:53:00 · shenhonglei

Quickly Set Up an NFS Server and Verify It

Using NFS storage in a production environment is not recommended (especially on Kubernetes 1.20 or later):

- selfLink was populated in Kubernetes clusters before v1.20 and is no longer populated from v1.20 onward, which makes older NFS provisioners fail with "selfLink was empty".

- NFS may also cause errors such as "failed to obtain lock" and "input/output error", which can leave Pods in CrashLoopBackOff. In addition, some applications, such as Prometheus, are not compatible with NFS.

Install the NFS server

# Install the NFS server

sudo apt-get update   # Refresh the package index so the latest packages are installed

sudo apt-get install nfs-kernel-server

# Install the NFS client

sudo apt-get install nfs-common

# yum

yum install -y nfs-utils
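The install commands above differ only by package manager. The sketch below assumes a Debian/Ubuntu host (apt-get) or a CentOS/RHEL host (yum). nfs_package and install_nfs are hypothetical helper names and are not part of any tool:

```shell
#!/usr/bin/env bash
# Map a package manager and an NFS role to the package this article installs.
# nfs_package and install_nfs are illustrative helpers, not part of any tool.
nfs_package() {
  case "$1:$2" in
    apt:server) echo "nfs-kernel-server" ;;
    apt:client) echo "nfs-common" ;;
    # On yum-based systems the article installs nfs-utils for both roles
    yum:server | yum:client) echo "nfs-utils" ;;
    *) echo "unsupported combination: $1 $2" >&2; return 1 ;;
  esac
}

# Install the server or client package with whichever package manager is present.
install_nfs() {
  local role="$1" pkg
  if command -v apt-get >/dev/null 2>&1; then
    pkg="$(nfs_package apt "$role")" || return 1
    sudo apt-get update && sudo apt-get install -y "$pkg"
  elif command -v yum >/dev/null 2>&1; then
    pkg="$(nfs_package yum "$role")" || return 1
    sudo yum install -y "$pkg"
  else
    echo "neither apt-get nor yum found" >&2
    return 1
  fi
}
```

Run install_nfs server on the NFS server. Run install_nfs client on every machine that will mount the share, including each KubeSphere node.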

Create the shared directories

First, look at the configuration file /etc/exports:

$ cat /etc/exports
# /etc/exports: the access control list for filesystems which may be exported
#               to NFS clients.  See exports(5).
#
# Example for NFSv2 and NFSv3:
# /srv/homes       hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)
#
# Example for NFSv4:
# /srv/nfs4        gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)
# /srv/nfs4/homes  gss/krb5i(rw,sync,no_subtree_check)

Create the shared directories and set their permissions:

# Directory ksha3.2
mkdir -p /mount/ksha3.2
chmod 777 /mount/ksha3.2
# Directory demo
mkdir -p /mount/demo
chmod 777 /mount/demo
# Directory /home/ubuntu/nfs/ks3.1
mkdir -p /home/ubuntu/nfs/ks3.1
chmod 777 /home/ubuntu/nfs/ks3.1

Add the entries to the configuration file /etc/exports:

vi /etc/exports
....
/home/ubuntu/nfs/ks3.1 *(rw,sync,no_subtree_check)
/mount/ksha3.2 *(rw,sync,no_subtree_check)
/mount/demo *(rw,sync,no_subtree_check)
#/mnt/ks3.2 139.198.186.39(insecure,rw,sync,anonuid=500,anongid=500)
#/mnt/demo 139.198.167.103(rw,sync,no_subtree_check)
# Format of each line: <exported directory> [client1(options: access rights, user mapping, other)] [client2(options: access rights, user mapping, other)]

Note: if a shared directory was not created correctly or is missing, clients will get the following error when mounting it:

mount.nfs: access denied by server while mounting 139.198.168.114:/mnt/demo1
# Symptom: the client prints "mount.nfs: access denied by server while mounting" when mounting the NFS share.
# Cause: the shared directory on the server does not allow others to access it, or the client's mount directory lacks permissions.
# Fix: correct the permissions of the shared directory on the server; the mount then succeeds.
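A little scripting can catch both of these problems: an exported directory that does not exist, and an entry that was added twice. The sketch below assumes bash and the article's rw,sync,no_subtree_check options. EXPORTS_FILE, add_export and missing_export_dirs are hypothetical names. You can point EXPORTS_FILE at a scratch file for a dry run. Paths that contain spaces are not handled:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the exports file. EXPORTS_FILE defaults to
# /etc/exports and can be pointed at a scratch file for a dry run.
EXPORTS_FILE="${EXPORTS_FILE:-/etc/exports}"
EXPORT_OPTS="rw,sync,no_subtree_check"

# add_export DIR [CLIENT]: create DIR and append "DIR CLIENT(opts)" only once.
add_export() {
  local dir="$1" client="${2:-*}"
  local line="${dir} ${client}(${EXPORT_OPTS})"
  mkdir -p "$dir"
  chmod 777 "$dir"  # same permissive mode as the article; tighten for production
  # -x: whole-line match, -F: fixed string (so "*" and "()" are not regex syntax)
  if ! grep -qxF -- "$line" "$EXPORTS_FILE" 2>/dev/null; then
    echo "$line" >> "$EXPORTS_FILE"
  fi
}

# missing_export_dirs: print exported paths that do not exist as directories,
# the situation the note above associates with "access denied by server".
missing_export_dirs() {
  local dir
  # Drop comments and blank lines, then take the first field (the exported path)
  grep -vE '^[[:space:]]*(#|$)' "$EXPORTS_FILE" | awk '{ print $1 }' |
    while read -r dir; do
      [ -d "$dir" ] || echo "$dir"
    done
}
```

Typical use on the server: add_export /mount/ksha3.2, then add_export /mount/demo, then missing_export_dirs (no output means every exported directory exists), then exportfs -rv.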

Verification

# Reload the /etc/exports configuration:
exportfs -rv

# On the NFS server, list the directories this machine exports:
showmount -e 127.0.0.1
showmount -e localhost

$ showmount -e 127.0.0.1
Export list for 127.0.0.1:
/mount/demo *
/mount/ksha3.2 *
/home/ubuntu/nfs/ks3.1 *
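The same check can be scripted. For example, a provisioning script could fail when an export did not take effect. The sketch below parses the showmount -e output format shown above. is_exported is a hypothetical helper:

```shell
#!/usr/bin/env bash
# is_exported PATH: read `showmount -e` output on stdin and succeed if PATH is listed.
# The first line ("Export list for ...") is a header and is skipped.
is_exported() {
  awk -v want="$1" 'NR > 1 && $1 == want { found = 1 } END { exit !found }'
}
```

Usage: showmount -e 127.0.0.1 | is_exported /mount/ksha3.2 && echo "exported"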

Usage

Use the NFS shared directory from other machines on the network.

Install the client

If the client is not installed, mounting fails with: bad option; for several filesystems (e.g. nfs, cifs) you might need a /sbin/mount.<type> helper program.

# apt-get
apt-get install nfs-common
# yum
yum install nfs-utils

Test on the client machine:

# Create the mount directory on this machine
mkdir /root/aa
chmod 777 /root/aa
# On the NFS client, mount the shared directory /mount/ksha3.2 of the remote server 139.198.168.114 at /root/aa
mount -t nfs 139.198.168.114:/mount/ksha3.2 /root/aa

Check the mount:

# Check with df -h
$ df -h | grep aa
139.198.168.114:/mount/ksha3.2  146G  5.3G  140G   4% /root/aa

Unmount

umount /root/aa
# If unmounting reports "umount: /mnt: device is busy", leave the mount directory first, or check whether the NFS server is down.
# To force the unmount (lazy): umount -lf /mnt
# This also works but is not recommended, because it kills every process using the mount: fuser -km /mnt
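The mount and unmount steps can be wrapped so that running them repeatedly is harmless. The sketch below assumes util-linux's mountpoint and psmisc's fuser are installed. nfs_mount, nfs_umount and the DRY_RUN switch are hypothetical. Unlike fuser -km, nfs_umount never kills processes: it lists them and then falls back to a lazy unmount:

```shell
#!/usr/bin/env bash
# Hypothetical helpers around the mount/umount commands above.
# Set DRY_RUN=1 to print the mount commands instead of executing them.

# nfs_mount SERVER EXPORT TARGET: mount SERVER:EXPORT on TARGET unless TARGET
# is already a mount point.
nfs_mount() {
  local server="$1" export_path="$2" target="$3" run=""
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  if mountpoint -q "$target" 2>/dev/null; then
    echo "already mounted: $target"
    return 0
  fi
  $run mkdir -p "$target"
  $run mount -t nfs "${server}:${export_path}" "$target"
}

# nfs_umount TARGET: try a normal unmount; if the mount is busy, show which
# processes hold it and fall back to a lazy unmount instead of killing them.
nfs_umount() {
  local target="$1"
  if ! mountpoint -q "$target" 2>/dev/null; then
    echo "not mounted: $target"
    return 0
  fi
  umount "$target" && return 0
  fuser -vm "$target" || true   # list processes still using the mount
  umount -l "$target"
}
```

Example: nfs_mount 139.198.168.114 /mount/ksha3.2 /root/aa, and later nfs_umount /root/aa.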

Connecting KubeSphere to NFS with a dynamic provisioner

KubeSphere can install an NFS client program, the NFS dynamic provisioner, so that users can connect to an NFS server easily. It provisions storage volumes dynamically, keeps allocating and reclaiming volumes simple, and can connect to one or more NFS servers.

You can also connect to an NFS server using the official Kubernetes method. That method allocates storage volumes statically, which makes allocating and reclaiming volumes more complex. It can also connect to multiple NFS servers.

Prerequisites

Before connecting to the NFS server, make sure every KubeSphere node has permission to mount the NFS server's shared folder.

Steps

In the examples below, the NFS server IP is 139.198.168.114 and the NFS shared folder is /mount/ksha3.2.

Install the NFS dynamic provisioner

Official nfs provisioner: ServiceAccount and RBAC

SA / RBAC

Download rbac.yaml here.

Or apply it directly:

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-incubator/external-storage/master/nfs-client/deploy/rbac.yaml
serviceaccount/nfs-client-provisioner created
clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

Official nfs provisioner Deployment

Download path for the deployment.yaml configuration

Several values in the deployment file must be changed to match your environment:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: nfs/provisioner-229
            - name: NFS_SERVER
              value: 139.198.168.114
            - name: NFS_PATH
              value: /mount/ksha3.2
      volumes:
        - name: nfs-client-root
          nfs:
            server: 139.198.168.114
            path: /mount/ksha3.2

Create it:

$ kubectl apply -f deployment.yaml
deployment.apps/nfs-client-provisioner created

Create the StorageClass

Download address for the official class.yaml

Create the file storageclass.yaml, making sure its values match the deployment:

# Set provisioner to the PROVISIONER_NAME used in the deployment above; if you left it unchanged there, leave it unchanged here.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  annotations:
    "storageclass.kubernetes.io/is-default-class": "false"
provisioner: nfs/provisioner-229 # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false"

Create it: kubectl apply -f storageclass.yaml
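Three values must agree across the two manifests. NFS_SERVER and NFS_PATH must match the nfs volume in the deployment, and the StorageClass provisioner must match PROVISIONER_NAME. The sketch below is line-oriented and assumes the exact layout of the manifests above. It is not a YAML parser, and all helper names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical consistency check for deployment.yaml and storageclass.yaml.
# Relies on the layout shown in this article; not a general YAML parser.

# env_value FILE NAME: value of the container env entry NAME (the "value:" line after "name: NAME")
env_value() {
  awk -v n="$2" '$0 ~ ("name: " n "$") { getline; sub(/.*value:[[:space:]]*/, ""); print; exit }' "$1"
}

# nfs_volume_field FILE KEY: "server" or "path" of the first nfs volume
nfs_volume_field() {
  awk -v k="$2:" '/^[[:space:]]*nfs:[[:space:]]*$/ { f = 1; next } f && $1 == k { print $2; exit }' "$1"
}

# sc_provisioner FILE: the StorageClass "provisioner" field
sc_provisioner() {
  awk '/^provisioner:/ { print $2; exit }' "$1"
}

# check_nfs_manifests DEPLOYMENT STORAGECLASS: report every mismatch, fail if any
check_nfs_manifests() {
  local dep="$1" sc="$2" rc=0 server path provisioner
  server="$(env_value "$dep" NFS_SERVER)"
  path="$(env_value "$dep" NFS_PATH)"
  provisioner="$(env_value "$dep" PROVISIONER_NAME)"
  if [ -z "$server" ] || [ "$server" != "$(nfs_volume_field "$dep" server)" ]; then
    echo "NFS_SERVER env does not match volumes.nfs.server"; rc=1
  fi
  if [ -z "$path" ] || [ "$path" != "$(nfs_volume_field "$dep" path)" ]; then
    echo "NFS_PATH env does not match volumes.nfs.path"; rc=1
  fi
  if [ -z "$provisioner" ] || [ "$provisioner" != "$(sc_provisioner "$sc")" ]; then
    echo "StorageClass provisioner does not match PROVISIONER_NAME"; rc=1
  fi
  return "$rc"
}
```

Usage: check_nfs_manifests deployment.yaml storageclass.yaml, run before either kubectl apply.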

To make this NFS provisioner the default, add:

annotations:
  "storageclass.kubernetes.io/is-default-class": "true"

Alternatively, patch an existing StorageClass. To mark a StorageClass as the default, add or set the annotation storageclass.kubernetes.io/is-default-class=true:

kubectl patch storageclass <your-class-name> -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

# Check whether the cluster already has a default StorageClass
$ kubectl get sc
NAME                PROVISIONER               AGE
glusterfs (default) kubernetes.io/glusterfs   3d4h
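Before setting the annotation, it helps to confirm which class is currently the default. The sketch below assumes the column layout of the kubectl get sc output shown above, where "(default)" follows the class name. default_classes is a hypothetical helper:

```shell
#!/usr/bin/env bash
# default_classes: read `kubectl get sc` output on stdin and print the name of
# every StorageClass marked "(default)", skipping the header line.
default_classes() {
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}
```

Usage: kubectl get sc | default_classes. If this prints glusterfs and you then mark managed-nfs-storage as the default, two classes carry the annotation. Setting it back to "false" on the old class avoids ambiguity for PVCs that omit storageClassName.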

Verify the installation

Run the following command to check whether the NFS dynamic provisioner Pod is running normally:

$ kubectl get po -A | grep nfs-client
default   nfs-client-provisioner-7d69b9f45f-ks94m   1/1   Running   0   9m3s

Check the NFS StorageClass:

$ kubectl get sc managed-nfs-storage
NAME                  PROVISIONER           RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
managed-nfs-storage   nfs/provisioner-229   Delete          Immediate           false                  6m28s
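To script the Pod check above, the sketch below reads the full kubectl get po -A output without the grep, so the header line is still present. It succeeds only if at least one matching Pod exists and every matching Pod is Running and fully ready. pods_ready is a hypothetical helper:

```shell
#!/usr/bin/env bash
# pods_ready PATTERN: read `kubectl get po -A` output on stdin (header included)
# and succeed only if at least one pod whose name contains PATTERN is listed
# and all such pods are Running with every container ready.
pods_ready() {
  awk -v p="$1" '
    NR > 1 && index($2, p) {
      seen = 1
      split($3, r, "/")                       # READY column, e.g. "1/1"
      if ($4 != "Running" || r[1] != r[2]) bad = 1
    }
    END { exit !(seen && !bad) }'
}
```

Usage: kubectl get po -A | pods_ready nfs-client && echo "provisioner ready". On a live cluster there is a built-in alternative that uses the label from the deployment: kubectl wait --for=condition=Ready pod -l app=nfs-client-provisioner -n default --timeout=120s.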

Create and mount an NFS storage volume

You can now create NFS storage volumes dynamically and mount them in workloads, for example with this PersistentVolumeClaim:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: demo4nfs
  namespace: ddddd
  annotations:
    kubesphere.io/creator: admin
    volume.beta.kubernetes.io/storage-provisioner: nfs/provisioner-229
  finalizers:
    - kubernetes.io/pvc-protection
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: managed-nfs-storage
  volumeMode: Filesystem
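After you apply the claim, a short polling loop makes a stuck PVC obvious. The sketch below assumes kubectl is configured for the cluster. wait_pvc_bound and its 2-second polling interval are hypothetical choices:

```shell
#!/usr/bin/env bash
# wait_pvc_bound NAME NAMESPACE [TIMEOUT_SECONDS]: poll the PVC phase with kubectl
# until it is Bound, or give up after the timeout (default 60 s).
wait_pvc_bound() {
  local name="$1" ns="$2" timeout="${3:-60}" waited=0 phase
  while [ "$waited" -lt "$timeout" ]; do
    phase="$(kubectl get pvc "$name" -n "$ns" -o jsonpath='{.status.phase}' 2>/dev/null)"
    if [ "$phase" = "Bound" ]; then
      echo "pvc/$name is Bound"
      return 0
    fi
    sleep 2
    waited=$((waited + 2))
  done
  echo "pvc/$name still ${phase:-unknown} after ${timeout}s; check the provisioner logs" >&2
  return 1
}
```

Usage: wait_pvc_bound demo4nfs ddddd 120. If it times out with the phase Pending, continue with the troubleshooting below.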

unexpected error getting claim reference: selfLink was empty, can’t make reference

Symptom

When NFS is used to create a PV, the PVC stays in the Pending state.

Check the PVC:

$ kubectl get pvc -n ddddd
NAME       STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
demo4nfs   Bound    pvc-a561ce85-fc0d-42af-948e-6894ac000264   10Gi       RWO            managed-nfs-storage   32m

View the details:

# View the PVC's current status: it is waiting for the volume to be created
$ kubectl get pvc -n ddddd

The nfs-client-provisioner logs show the "selfLink was empty" problem. selfLink was populated in Kubernetes clusters before v1.20 and is no longer populated from v1.20 onward, so a parameter must be added to /etc/kubernetes/manifests/kube-apiserver.yaml:

$ kubectl get pod -n default
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-7d69b9f45f-ks94m   1/1     Running   0          27m

$ kubectl logs -f nfs-client-provisioner-7d69b9f45f-ks94m

I0622 09:41:33.606000 1 leaderelection.go:185] attempting to acquire leader lease default/nfs-provisioner-229...

E0622 09:41:33.612745 1 event.go:259] Could not construct reference to: '&v1.Endpoints{TypeMeta:v1.TypeMeta{Kind:"", APIVersion:""}, ObjectMeta:v1.ObjectMeta{Name:"nfs-provisioner-229", GenerateName:"", Namespace:"default", SelfLink:"", UID:"e8f19e28-f17f-4b22-9bb8-d4cbe20c796b", ResourceVersion:"23803580", Generation:0, CreationTimestamp:v1.Time{Time:time.Time{wall:0x0, ext:63791487693, loc:(*time.Location)(0x1956800)}}, DeletionTimestamp:(*v1.Time)(nil), DeletionGracePeriodSeconds:(*int64)(nil), Labels:map[string]string(nil), Annotations:map[string]string{"control-plane.alpha.kubernetes.io/leader":"{\"holderIdentity\":\"nfs-client-provisioner-7d69b9f45f-ks94m_8081b417-f20f-11ec-bff3-f64a9f402fda\",\"leaseDurationSeconds\":15,\"acquireTime\":\"2022-06-22T09:41:33Z\",\"renewTime\":\"2022-06-22T09:41:33Z\",\"leaderTransitions\":0}"}, OwnerReferences:[]v1.OwnerReference(nil), Initializers:(*v1.Initializers)(nil), Finalizers:[]string(nil), ClusterName:""}, Subsets:[]v1.EndpointSubset(nil)}' due to: 'selfLink was empty, can't make reference'. Will not report event: 'Normal' 'LeaderElection' 'nfs-client-provisioner-7d69b9f45f-ks94m_8081b417-f20f-11ec-bff3-f64a9f402fda became leader'
I0622 09:41:33.612829 1 leaderelection.go:194] successfully acquired lease default/nfs-provisioner-229
I0622 09:41:33.612973 1 controller.go:631] Starting provisioner controller nfs/provisioner-229_nfs-client-provisioner-7d69b9f45f-ks94m_8081b417-f20f-11ec-bff3-f64a9f402fda!
I0622 09:41:33.713170 1 controller.go:680] Started provisioner controller nfs/provisioner-229_nfs-client-provisioner-7d69b9f45f-ks94m_8081b417-f20f-11ec-bff3-f64a9f402fda!
I0622 09:53:33.461902 1 controller.go:987] provision "ddddd/demo4nfs" class "managed-nfs-storage": started
E0622 09:53:33.464213 1 controller.go:1004] provision "ddddd/demo4nfs" class "managed-nfs-storage": unexpected error getting claim reference: selfLink was empty, can't make reference

I0622 09:56:33.623717 1 controller.go:987] provision "ddddd/demo4nfs" class "managed-nfs-storage": started

E0622 09:56:33.625852 1 controller.go:1004] provision "ddddd/demo4nfs" class "managed-nfs-storage": unexpected error getting claim reference: selfLink was empty, can't make reference
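The feature-gate workaround in the solution depends on the cluster version. The sketch below is based on our understanding of the RemoveSelfLink gate timeline: the gate is enabled by default from v1.20 and locked on from v1.24, so setting it to false is rejected from v1.24 onward. Check these boundaries against the release notes for your version. selflink_fix is a hypothetical helper and assumes a 1.x version string:

```shell
#!/usr/bin/env bash
# selflink_fix VERSION: given a Kubernetes server version such as "1.22",
# "1.22+" or "v1.22.4", print which remedy applies to "selfLink was empty".
# Boundaries reflect our understanding of the RemoveSelfLink gate; verify them.
selflink_fix() {
  local v="${1#v}" minor
  minor="${v#*.}"            # drop the major version: "22.4" or "22+"
  minor="${minor%%[!0-9]*}"  # keep only the leading digits: "22"
  if [ "$minor" -lt 20 ]; then
    echo "not-affected"
  elif [ "$minor" -le 23 ]; then
    echo "feature-gate"         # --feature-gates=RemoveSelfLink=false is still accepted
  else
    echo "replace-provisioner"  # the gate can no longer be disabled
  fi
}
```

Pass it the server version reported by kubectl version, e.g. selflink_fix v1.22.4. On v1.24 and later, the usual fix is a provisioner that does not depend on selfLink. One example is the kubernetes-sigs nfs-subdir-external-provisioner, which to our knowledge is the maintained successor of the nfs-client provisioner used here.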

Solution

# Add - --feature-gates=RemoveSelfLink=false to kube-apiserver.yaml

# Find the location of kube-apiserver.yaml:
find / -name kube-apiserver.yaml

/data/kubernetes/manifests/kube-apiserver.yaml

# Add - --feature-gates=RemoveSelfLink=false to the file, as shown below:

$ cat /data/kubernetes/manifests/kube-apiserver.yaml

apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 192.168.100.25:6443
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --feature-gates=RemoveSelfLink=false
    - --advertise-address=0.0.0.0
    - --allow-privileged=true
    - --authorization-mode=Node,RBAC
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --enable-admission-plugins=NodeRestriction

# After the change, a kubeadm-deployed cluster reloads the Pod automatically.

# The kube-apiserver installed by kubeadm is a static Pod, so changes to its manifest take effect immediately.

# The kubelet watches the file: after you modify /etc/kubernetes/manifests/kube-apiserver.yaml, it automatically terminates the existing kube-apiserver-{nodename} Pod and creates a replacement Pod with the new parameters.

# If you have more than one Kubernetes master node, modify the file on every master node and keep the parameters consistent across nodes.

# Note: if the api-server fails to start, run the following again:

kubectl apply -f /etc/kubernetes/manifests/kube-apiserver.yaml
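Editing the static Pod manifest by hand on several masters is error-prone. The sketch below assumes the manifest layout shown above, where a "- kube-apiserver" line is followed by its flags. add_selflink_gate is a hypothetical helper. Back up the manifest to a directory outside the manifests folder first, because the kubelet tries to load files placed there as static Pods:

```shell
#!/usr/bin/env bash
# add_selflink_gate MANIFEST: insert "- --feature-gates=RemoveSelfLink=false" right
# after the "- kube-apiserver" line, keeping its indentation. Does nothing if the
# flag is already present; refuses if another --feature-gates flag exists.
add_selflink_gate() {
  local manifest="$1" flag="--feature-gates=RemoveSelfLink=false" tmp
  grep -qF -- "$flag" "$manifest" && return 0
  if grep -qF -- "--feature-gates=" "$manifest"; then
    echo "existing --feature-gates flag found; merge RemoveSelfLink=false manually" >&2
    return 1
  fi
  # Build the new copy outside the manifests directory so the kubelet never sees it
  tmp="$(mktemp)"
  awk -v flag="$flag" '
    { print }
    /^[[:space:]]*- kube-apiserver[[:space:]]*$/ {
      match($0, /^[[:space:]]*/)              # reuse the existing indentation
      print substr($0, 1, RLENGTH) "- " flag
    }' "$manifest" > "$tmp"
  cat "$tmp" > "$manifest"   # overwrite in place, keeping the file's owner and mode
  rm -f "$tmp"
}
```

Usage: add_selflink_gate /data/kubernetes/manifests/kube-apiserver.yaml, repeated on each master node.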

Official GitHub issue

边栏推荐

- Codeforces Round #802 (Div. 2)

- [collection] Introduction to basic knowledge of point cloud and functions of point cloud catalyst software

- 【Cocos Creator 3.5.1】event. Use of getbutton()

- Win 10 如何打开环境变量窗口

- DAST black box vulnerability scanner part 6: operation (final)

- 多线程基础部分Part3

- Assembly language - Wang Shuang Chapter 3 notes and experiments

- STM32 reads IO high and low level status

- Two position relay xjls-8g/220

- Unity中跨平臺獲取系統音量

猜你喜欢

Basic concepts of neo4j graph database

Reading graph augmentations to learn graph representations (lg2ar)

QT using Valgrind to analyze memory leaks

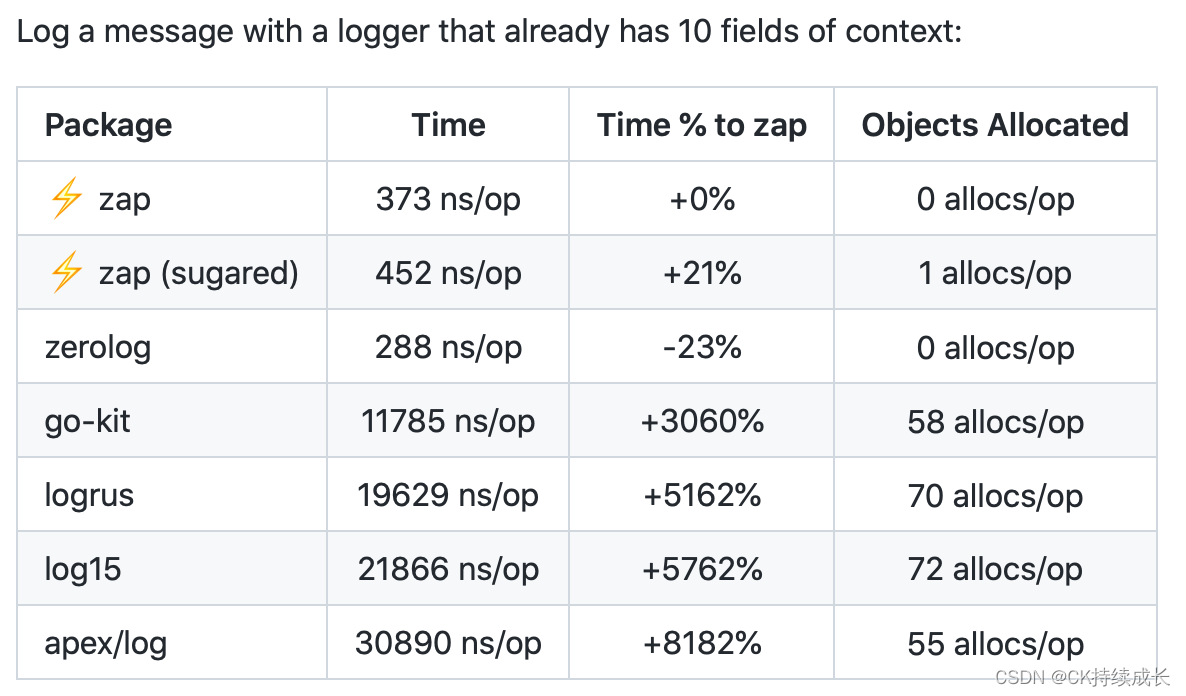

Go日志-Uber开源库zap使用

![[FPGA] realize the data output of checkerboard horizontal and vertical gray scale diagram based on bt1120 timing design](/img/80/c258817abd35887c0872a3286a821f.png)

[FPGA] realize the data output of checkerboard horizontal and vertical gray scale diagram based on bt1120 timing design

30个单片机常见问题及解决办法!

cpu-z中如何查看内存的频率和内存插槽的个数?

认知篇----2022高考志愿该如何填报

多线程基础部分Part 1

![[622. design cycle queue]](/img/f2/d499ac9ddc50b73f8c83e8b6af2483.png)

[622. design cycle queue]

随机推荐

Niuke practice 101-c reasoning clown - bit operation + thinking

Machunmei, the first edition of principles and applications of database... Compiled final review notes

Go log -uber open source library zap use

Logu p4683 [ioi2008] type printer problem solving

表单校验 v-model 绑定的变量,校验失效的解决方案

Obtenir le volume du système à travers les plateformes de l'unit é

leetcode298周赛记录

Formation and release of function stack frame

Terminal in pychar cannot enter the venv environment

QT using Valgrind to analyze memory leaks

Opencv implements object tracking

KubeSphere 集群配置 NFS 存储解决方案-收藏版

Senior [Software Test Engineer] learning route and necessary knowledge points

面试:Selenium 中有哪几种定位方式?你最常用的是哪一种?

leetcode299周赛记录

Interview: what are the positioning methods in selenium? Which one do you use most?

Discussion on streaming media protocol (MPEG2-TS, RTSP, RTP, RTCP, SDP, RTMP, HLS, HDS, HSS, mpeg-dash)

Zener diode zener diode sod123 package positive and negative distinction

网易云音乐params和encSecKey参数生成代码

Spark's projection