Introduction to memory wall

2022-07-27 09:26:00 【Dusk vending machine】

Memory wall

The following is excerpted from the First Class Technology website.

- In recent years, the amount of compute needed in CV, NLP, and speech recognition has grown 15x every two years, driving the development of AI hardware.

- Communication bandwidth bottleneck: communication within a chip, between chips, and between AI hardware devices has become the bottleneck for many AI applications.

- The average model size has grown 240x every two years, whereas the memory capacity of AI hardware has only grown 2x every two years.

- Training an AI model generally requires several times more memory than the model's parameters alone.
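To make the training-memory point concrete, here is a rough sketch of the model-state memory needed for mixed-precision training with the Adam optimizer. The per-parameter byte counts follow the accounting used in the ZeRO paper (fp16 weights and gradients plus fp32 optimizer state); the function names and the 1-billion-parameter model are hypothetical, and activation memory is ignored, so treat the result as a lower bound rather than a measurement.

```python
# Rough per-parameter memory for mixed-precision training with Adam.
# Activations, temporary buffers, and fragmentation are ignored.
BYTES_FP16_WEIGHTS = 2      # fp16 copy of the weights
BYTES_FP16_GRADS = 2        # fp16 gradients
BYTES_OPTIMIZER_STATE = 12  # fp32 weights + Adam momentum + Adam variance


def inference_bytes(num_params: int) -> int:
    """Bytes needed just to hold the fp16 weights."""
    return num_params * BYTES_FP16_WEIGHTS


def training_bytes(num_params: int) -> int:
    """Bytes of model state needed to train: weights, gradients, optimizer state."""
    per_param = BYTES_FP16_WEIGHTS + BYTES_FP16_GRADS + BYTES_OPTIMIZER_STATE
    return num_params * per_param


if __name__ == "__main__":
    n = 1_000_000_000  # hypothetical 1-billion-parameter model
    print(f"weights only: {inference_bytes(n) / 1e9:.0f} GB")
    print(f"training:     {training_bytes(n) / 1e9:.0f} GB")
```

Under these assumptions the training footprint is 8x the size of the fp16 weights, before any activations are counted.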

The memory wall concerns not only memory capacity but also memory transfer bandwidth.

- It involves data transfer across multiple levels of the memory hierarchy:

For example, between compute logic units and on-chip memory, between compute logic units and main memory, or across different processors in different sockets.

In all of these cases, capacity and data transfer speed lag far behind the hardware's compute capability.

- A distributed strategy scales training out across multiple AI hardware devices to get around the memory capacity and bandwidth limits of a single device. However, the devices then run into a communication bottleneck, because communication between devices is slower than moving data on-chip.

- Scaling out with a distributed strategy is only a suitable solution for compute-intensive problems that involve little communication and data transfer.
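The trade-off between scaling out and communication cost can be sketched with a back-of-the-envelope model. The code below compares ideal per-step compute time with the time to synchronize gradients using a ring all-reduce, in which each device sends and receives roughly 2(N-1)/N times the gradient size. All hardware numbers are hypothetical placeholders, latency is ignored, and compute and communication are assumed not to overlap.

```python
def ring_allreduce_seconds(grad_bytes: float, num_devices: int,
                           link_bytes_per_s: float) -> float:
    """Bandwidth-only time for a ring all-reduce of the gradients."""
    if num_devices < 2:
        return 0.0
    traffic = 2 * (num_devices - 1) / num_devices * grad_bytes
    return traffic / link_bytes_per_s


def compute_seconds(flops_per_step: float, num_devices: int,
                    device_flops_per_s: float) -> float:
    """Ideal compute time when the work is split evenly across devices."""
    return flops_per_step / (num_devices * device_flops_per_s)


if __name__ == "__main__":
    # Hypothetical workload and hardware, for illustration only.
    grad_bytes = 2e9            # 1e9 parameters with fp16 gradients
    flops_per_step = 6e15       # assumed work per training step
    device_flops_per_s = 100e12
    link_bytes_per_s = 50e9

    for n in (1, 8, 64, 512):
        t_comp = compute_seconds(flops_per_step, n, device_flops_per_s)
        t_comm = ring_allreduce_seconds(grad_bytes, n, link_bytes_per_s)
        share = t_comm / (t_comp + t_comm)
        print(f"{n:4d} devices: compute {t_comp:.3f}s, "
              f"comm {t_comm:.3f}s, comm share {share:.0%}")
```

In this model the compute time shrinks as devices are added while the communication time stays roughly constant, so communication takes up a growing share of each step. That is why scaling out pays off mainly when the problem is compute-intensive relative to its traffic.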

Promising solutions to break memory walls

In order to keep innovating and “break the memory wall”, we need to rethink the design of AI models. Here are a few key points:

- At present, the methods used to design AI models are mostly ad hoc, or rely only on very simple scaling rules. (I don't fully understand this point.)

- More data-efficient methods are needed to train AI models. Current network training is very inefficient: learning requires a lot of data and hundreds of thousands of iterations.

- Existing optimization and training methods need a lot of hyperparameter tuning. Hundreds of trial-and-error runs are often needed before a setting is found with which training succeeds.

- SOTA networks are very large, and deploying them is extremely challenging in its own right. AI hardware design has mainly focused on improving compute, with less focus on improving memory.

Training algorithm

- Difficulty: brute-force, exploratory hyperparameter tuning.

- Microsoft's ZeRO paper presents a promising line of work: by removing or sharding redundant optimizer state parameters [21, 3], a model 8 times larger can be trained with the same memory consumption. If the overhead introduced by these higher-order methods can be addressed, the total cost of training large models could be reduced significantly.

- Improve the locality of optimization algorithms and reduce their memory footprint, at the cost of more computation.

- Design optimization algorithms that are robust enough to be used with low-precision training.
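The effect of sharding optimizer state can be sketched with the per-device memory accounting described in the ZeRO paper for mixed-precision Adam: 2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and 12 for fp32 optimizer state. The function name and the `stage` numbering convention below are illustrative assumptions, not the DeepSpeed API, and activations are ignored.

```python
def zero_bytes_per_device(num_params: int, num_devices: int, stage: int) -> float:
    """Model-state bytes held by each device under ZeRO-style sharding.

    stage 0: nothing sharded (plain data parallelism)
    stage 1: optimizer state sharded across devices
    stage 2: optimizer state and gradients sharded
    stage 3: optimizer state, gradients, and weights sharded
    """
    weights = 2.0 * num_params   # fp16 weights
    grads = 2.0 * num_params     # fp16 gradients
    optim = 12.0 * num_params    # fp32 weights, momentum, variance
    if stage >= 1:
        optim /= num_devices
    if stage >= 2:
        grads /= num_devices
    if stage >= 3:
        weights /= num_devices
    return weights + grads + optim


if __name__ == "__main__":
    n, devices = 1_000_000_000, 64  # hypothetical model and cluster size
    baseline = zero_bytes_per_device(n, devices, stage=0)
    for stage in range(4):
        used = zero_bytes_per_device(n, devices, stage)
        print(f"stage {stage}: {used / 1e9:6.2f} GB per device "
              f"({baseline / used:.1f}x reduction)")
```

With optimizer state and gradients both sharded, the per-device footprint tends toward the 2 bytes per parameter of the fp16 weights as the device count grows, which is an 8x reduction from the unsharded 16 bytes per parameter.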

Efficient deployment

- Pruning: remove redundant parameters from the model.

- Quantization: reduce numerical precision.
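As a minimal illustration of these two ideas, the sketch below applies magnitude pruning and symmetric per-tensor int8 quantization to a flat list of weights. These are simple textbook variants chosen for clarity; the source does not say which pruning or quantization schemes it has in mind, and real deployments work on tensors with a framework rather than Python lists.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization: w is approximated by scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


if __name__ == "__main__":
    w = [0.5, -0.1, 0.05, -2.0, 1.3, 0.02]
    pruned = prune_by_magnitude(w, sparsity=0.5)
    q, scale = quantize_int8(pruned)
    print("pruned:     ", pruned)
    print("int8 codes: ", q)
    print("dequantized:", dequantize(q, scale))
```

Each int8 code takes 1 byte instead of the 4 bytes of an fp32 weight, and the zeros left by pruning can be stored in a sparse format, so both steps reduce the memory that has to be held and moved at inference time.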

Conclusion

At present, the compute required by SOTA Transformer models in NLP is growing 750x every two years, and the number of model parameters is growing 240x every two years. By comparison, peak hardware compute grows 3.1x every two years, while DRAM and hardware interconnect bandwidth grow only 1.4x every two years and have gradually been left behind by demand. To put these numbers in perspective: over the past 20 years, peak hardware compute has increased 90,000x, but DRAM and hardware interconnect bandwidth have increased only 30x. If this trend continues, data transfer, especially within and between chips, will quickly become the bottleneck for training large-scale AI models. We therefore need to rethink AI model training, deployment, and the models themselves, and consider how to design AI hardware in the face of this increasingly challenging memory wall.
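The 20-year figures are consistent with compounding the two-year growth rates, which a short calculation can check. The function name is illustrative; the rates are the ones quoted above.

```python
def growth_over(years: float, factor_per_two_years: float) -> float:
    """Total growth after `years`, compounding a fixed factor every two years."""
    return factor_per_two_years ** (years / 2)


if __name__ == "__main__":
    compute = growth_over(20, 3.1)    # peak hardware compute
    bandwidth = growth_over(20, 1.4)  # DRAM / interconnect bandwidth
    print(f"compute growth over 20 years:   {compute:,.0f}x")
    print(f"bandwidth growth over 20 years: {bandwidth:,.1f}x")
    print(f"gap between the two:            {compute / bandwidth:,.0f}x")
```

Compounding 3.1x over ten two-year periods gives a little over 80,000x, and compounding 1.4x gives just under 30x, in line with the quoted 90,000x and 30x. The gap between compute and bandwidth therefore widens by a factor in the thousands over the same period.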

边栏推荐

- 1640. Can you connect to form an array -c language implementation

- [C language _ study _ exam _ review lesson 3] overview of ASCII code and C language

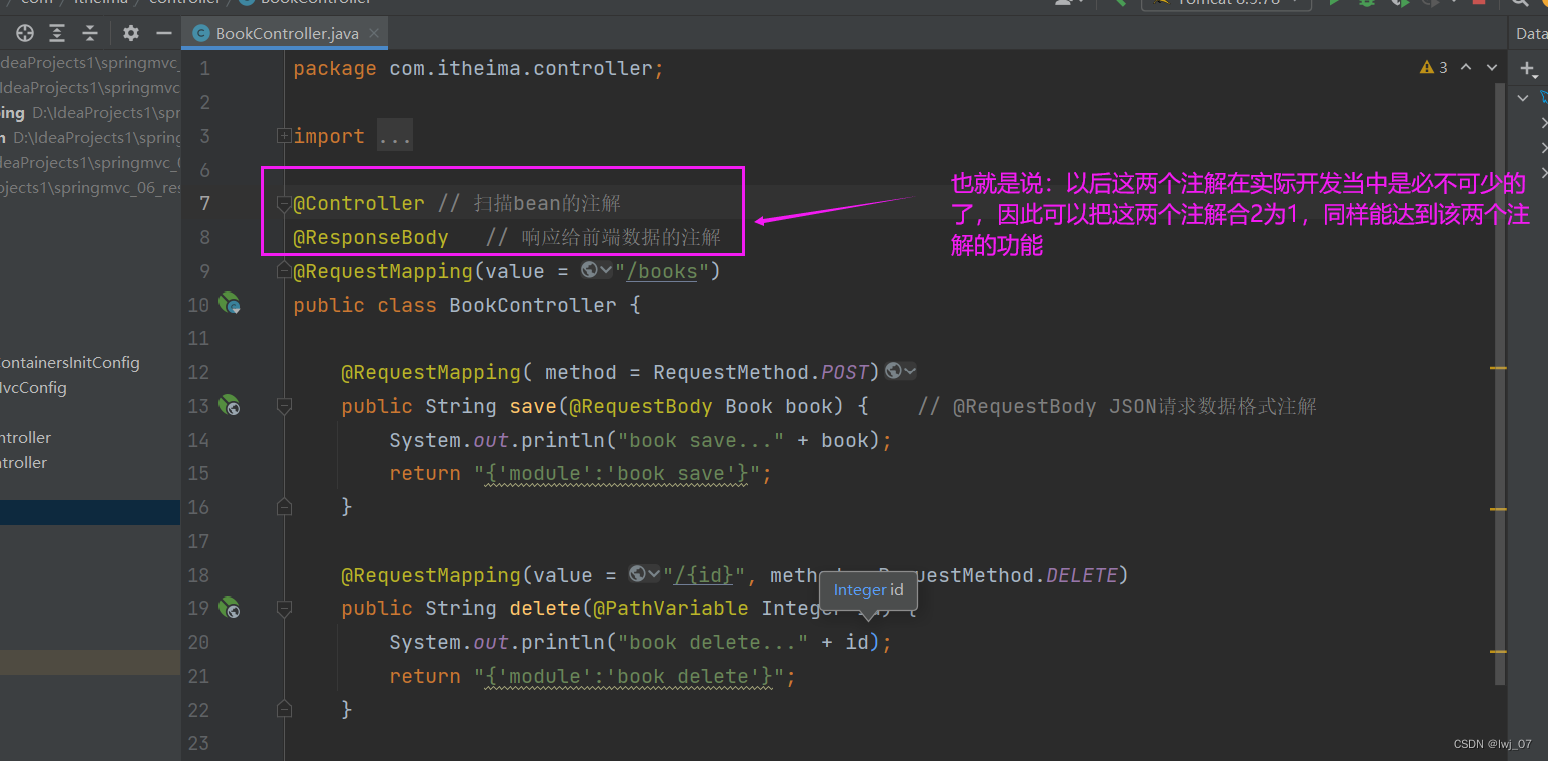

- Restful

- Read the paper learning to measure changes: full revolutionary Siamese metric networks for scene change detect

- [C language _ review _ learn Lesson 2] what is base system? How to convert between hexadecimals

- IDL calls 6S atmospheric correction

- NPM and yarn update dependent packages

- Replace restricts the text box to regular expressions of numbers, numbers, letters, etc

- NCCL (NVIDIA Collective Communications Library)

- 6S parameters

猜你喜欢

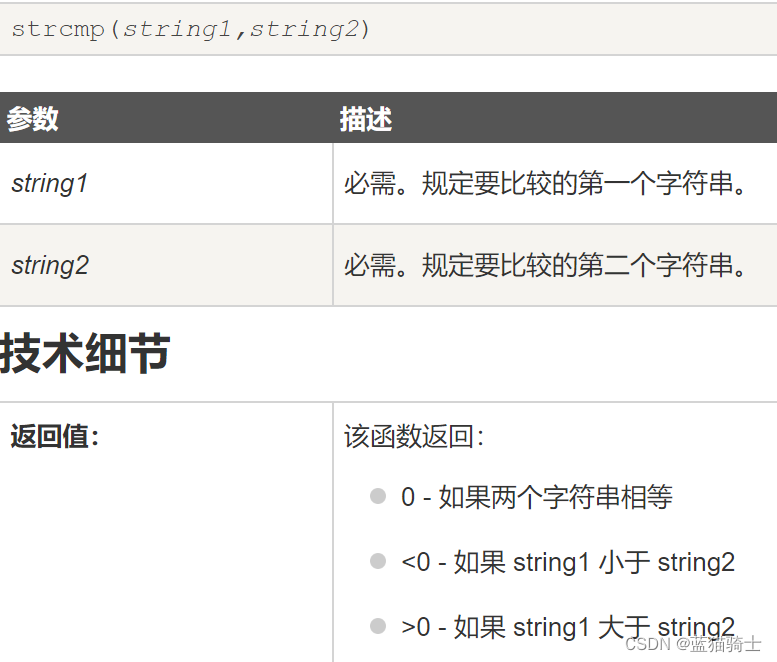

js call和apply

Antdesign a-modal自定义指令实现拖拽放大缩小

Restful

ESP8266-Arduino编程实例-中断

ESP8266-Arduino编程实例-ADC

Special exercises for beginners of C language to learn code for the first time

Analog library function

Wechat applet 5 - foundation strengthening (not finished)

快应用JS自定义月相变化效果

C language takes you to tear up the address book

随机推荐

Pymongo fuzzy query

ES6 new - Operator extension

2068. Check whether the two strings are almost equal

645. Wrong set

【云原生之kubernetes实战】在kubernetes集群下部署Rainbond平台

七月集训(第08天) —— 前缀和

Size limit display of pictures

Two tips in arkui framework

Antdesign a-modal user-defined instruction realizes dragging and zooming in and out

Qdoublevalidator does not take effect solution

IDL calls 6S atmospheric correction

Data interaction based on restful pages

js call和apply

面试官:什么是脚手架?为什么需要脚手架?常用的脚手架有哪些?

【云驻共创】华为云:全栈技术创新,深耕数字化,引领云原生

【微信小程序】农历公历互相转换

Explanation of common basic controls for C # form application (suitable for Mengxin)

七月集训(第24天) —— 线段树

七月集训(第03天) —— 排序

网易笔试之解救小易——曼哈顿距离的典型应用