当前位置:网站首页>GFS distributed file system

GFS distributed file system

2022-07-05 23:35:00 【Oranges are delicious】

List of articles

- One 、GlusterFS brief introduction

- Two 、GlusterFS The type of volume

- 3、 ... and 、 Deploy GlusterFS to cluster around

- Experiment preparation

- 1、 Change node name

- 2、 Node for disk mounting , Install local source

- 3、 Add nodes to create clusters

- 4、 Create distributed volumes

- 5、 Create a striped roll

- 6、 Create replication volume

- 7、 Create distributed striped volumes

- 8、 Deploy gluster client

- 9、 View file distribution

- 1、 View file distribution

- 2、 Destructive testing

- Four 、 Other maintenance commands

One 、GlusterFS brief introduction

- GFS It's an extensible distributed file system

- By the storage server 、 Client and NFS/Samba Storage gateway ( Optional , Choose to use as needed ) form .

- No metadata server component , This helps to improve the performance of the whole system 、 Reliability and stability .

MFS

Traditional distributed file systems mostly store metadata through meta servers , Metadata contains directory information on the storage node 、 Directory structure, etc . This design has a high efficiency in browsing the directory , But there are also some defects , For example, single point of failure . Once the metadata server fails , Even if the node has high redundancy , The entire storage system will also crash . and GlusterFS Distributed file system is based on the design of no meta server , Strong horizontal data expansion ability , High reliability and storage efficiency .

GclusterFs It's also Scale-out( Horizontal scaling ) Storage solutions Gluster At the heart of , It has strong horizontal expansion ability in storing data , It can support numbers through extension PB Storage capacity and handling of thousands of clients .

GlusterFS Support with the help of TCP/IP or InfiniBandRDMA The Internet ( A technology that supports multiple concurrent links , With high bandwidth 、 Low latency 、 Features of high scalability ) Bring together physically dispersed storage resources , Provide unified storage services , And use a unified global namespace to manage data .

1 、GlusterFs characteristic

Scalability and high performance

GlusterFs Leverage dual features to provide high-capacity storage solutions .

(1)Scale-Out The architecture allows to improve storage capacity and performance by simply adding storage nodes ( disk 、 The calculation and I/o Resources can be increased independently ), Support 10GbE and InfiniBand High speed internet connection .

(2)Cluster Elastic Hash (ElasticHash) It's solved GlusterFS Dependency on metadata server , Improved single point of failure and performance bottlenecks , The parallel data access is realized .GlusterFS The elastic hash algorithm can intelligently locate any data fragment in the storage pool ( Store the data in pieces on different nodes ), There is no need to view the index or query the metadata server .High availability

GlusterFS You can copy files automatically , Such as mirror image or multiple copies , To ensure that data is always accessible , Even in case of hardware failure, it can be accessed normally .

When the data is inconsistent , The self-healing function can restore the data to the correct state , Data repair is performed in the background in an incremental manner , Almost no performance load is generated .

GlusterFS Can support all storage , Because it did not design its own private data file format , Instead, it uses the mainstream standard disk file system in the operating system ( Such as EXT3、XFS etc. ) To store files , Therefore, data can be accessed in the traditional way of accessing disk .Global unified namespace

Distributed storage , Integrate the namespaces of all nodes into a unified namespace , Form the storage capacity of all nodes of the whole system into a large virtual storage pool , The front-end host can access these nodes to complete data reading and writing operations .Elastic volume management

GlusterFs By storing data in logical volumes , The logical volume is obtained by independent logical partition from the logical storage pool .

Logical storage pools can be added and removed online , No business interruption . Logical volumes can grow and shrink online as needed , And load balancing can be realized in multiple nodes .

File system configuration can also be changed and applied online in real time , It can adapt to changes in workload conditions or online performance tuning .Based on standard protocol

GlusterFS Storage services support NFS、CIFS、HPTP、FTP、SMB And Gluster Native protocol , Completely with POSIX standard ( Portable operating system interface ) compatible .

Existing applications can be modified without any modification Gluster To access the data in , You can also use dedicated API Visit .

2、GlusterFS The term

- Brick( Memory block ) :

It refers to the private partition provided by the host for physical storage in the trusted host pool , yes GlusterFS Basic storage unit in , It's also

The storage directory provided externally on the server in the trusted storage pool .

The format of the storage directory consists of the absolute path of the server and directory , The expression is SERVER:EXPORT, Such as 20.0.0.12:

/ data/ mydir/. - volume( Logic volume ):

A logical volume is a set of Brick Set . A volume is a logical device for data storage , Be similar to LVM Logical volumes in . Most of the Gluster Management operations are performed on volumes . - FUSE:

It's a kernel module , Allow users to create their own file systems , There is no need to modify the kernel code . - VFS:

The interface provided by kernel space to user space to access disk . - Glusterd ( Background management process ) :

Run on each node in the storage cluster .

3、 Modular stack architecture

- GlusterFS Use modularity 、 Dimensional trestle Architecture .

- Through various combinations of modules , To achieve complex functions . for example Replicate The module can implement RATD1,Stripe The module can implement RAID0, Through the combination of the two, we can realize RAID10 and RAID01, At the same time, higher performance and reliability .

4、Glusterfs workflow

- (1) Client or application through Glusterfs The mount point of access data .

- (2)linux The system kernel passes through VFSAPI Receive requests and process .

- (3)VFS Submit the data to FUSE Kernel file system , And register an actual file system with the system FSE, and FUS File systems pass data through ldev/fuse The equipment documents were submitted to GlusterFs client End . Can be FUSE The file system is understood as a proxy .

- (4)GlusterFs client After receiving the data ,client Process the data according to the configuration of the configuration file .

- (5) after GlusterFS client After processing , Transfer data over the network to the remote GlusterFS server, And write the data to the server storage device .

5、 elastic HASH Algorithm

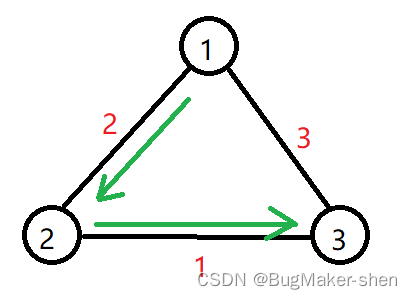

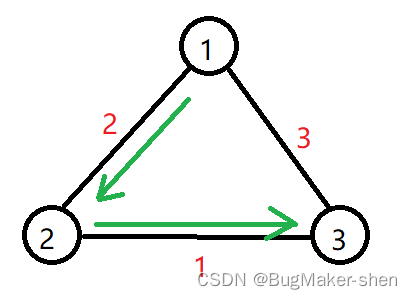

elastic HASH The algorithm is Davies-Meyer The specific implementation of the algorithm , adopt HASH The algorithm can get a 32 Bit integer range hash value , Suppose there are... In the logical volume N Units of storage Brick, be 32 The integer range of bits will be divided into N A continuous subspace , Each space corresponds to a Brick.

When a user or application accesses a namespace , By evaluating the namespace HASH value , According to the HASH Value corresponding to 32 Bit integer where the spatial positioning data is located Brick.

elastic HASH The advantages of the algorithm :

- Make sure that the data is evenly distributed in each Brick in .

- Solved the dependency on metadata server , And then solve the single point of failure and access bottlenecks .

Two 、GlusterFS The type of volume

GlusterFS Seven volumes are supported , Distributed volumes 、 Strip roll 、 Copy volume 、 Distributed striped volume 、 Distributed replication volumes 、 Striped replication volumes and distributed striped replication volumes .

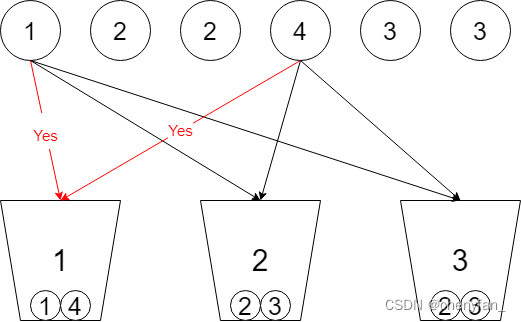

Distributed volumes ( Default ): File by HASH The algorithm is distributed to all Brick Server On , This kind of roll is GFS The basis of ; In document units according to HASH The algorithm hashes to different Brick, In fact, it just expands the disk space , Not fault tolerant , It belongs to file level RAID 0

Strip roll ( Default ): similar RAID 0, The files are divided into databases and distributed to multiple servers in a polling manner Brick Server On , File storage is in blocks , Support large file storage , The bigger the file , The more efficient the read is

Copy volume (Replica volume): Synchronize files to multiple Brick On , Make it have multiple copies of files , It belongs to file level RAID 1, Fault tolerance . Because the data is scattered in multiple Brick in , So the read performance has been greatly improved , But write performance drops

Distributed striped volume (Distribute Stripe volume):Brick Server The number is the number of bands ( Block distribution Brick Number ) Multiple , It has the characteristics of distributed volume and strip

Distributed replication volumes (Distribute Replica volume):Brick Server The number is the number of mirrors ( Copy of data Number ) Multiple , Features of both distributed and replicated volumes

Strip copy volume (Stripe Replca volume): similar RAID 10, It has the characteristics of striped volume and replicated volume at the same time

Distributed striped replication volumes (Distribute Stripe Replicavolume): The composite volume of three basic volumes is usually used for class Map Reduce application .

3、 ... and 、 Deploy GlusterFS to cluster around

Experiment preparation

node1 The server :20.0.0.10

node2 The server :20.0.0.5

node3 The server :20.0.0.6

node4 The server :20.0.0.7

Client node :20.0.0.12

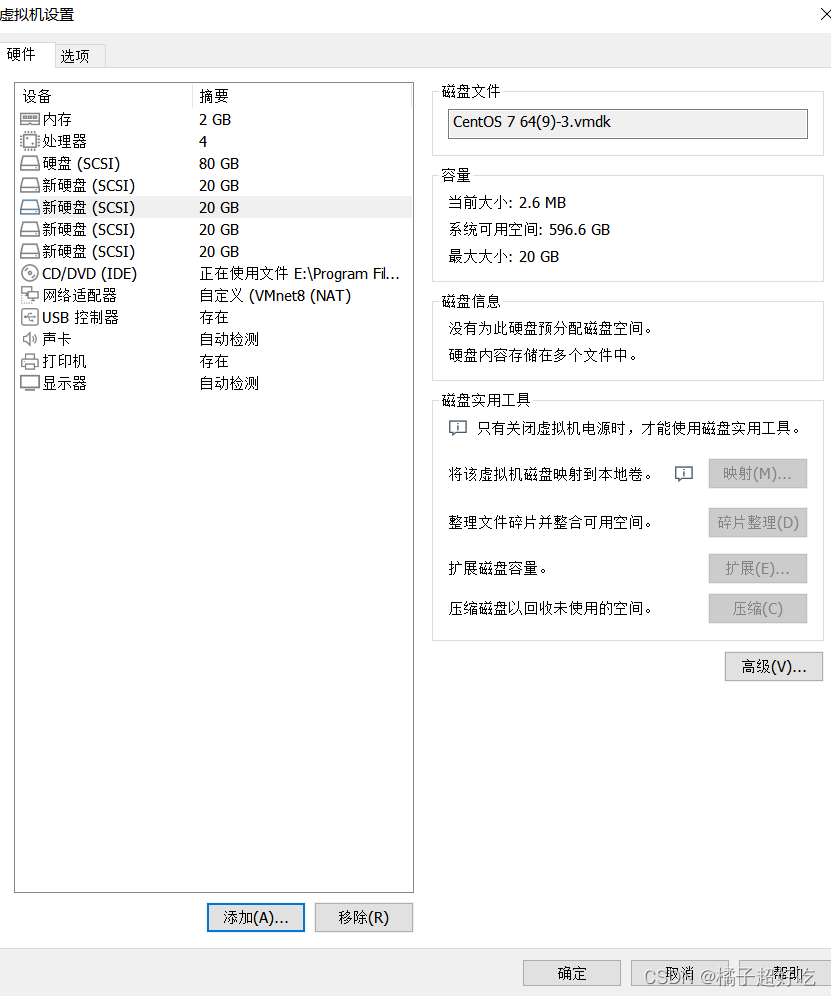

Four virtual machines added 4 Block NIC , Just experiment , It doesn't need to be too big

1、 Change node name

## Modify hostname

hostname node1

su

hostname node2

su

hostname node3

su

hostname node4

su

2、 Node for disk mounting , Install local source

cd /opt

vim /fdisk.sh

#!/bin/bash

NEWDEV=`ls /dev/sd* | grep -o 'sd[b-z]' | uniq`

for VAR in $NEWDEV

do

echo -e "n\np\n\n\n\nw\n" | fdisk /dev/$VAR &> /dev/null

mkfs.xfs /dev/${

VAR}"1" &> /dev/null

mkdir -p /data/${

VAR}"1" &> /dev/null

echo "/dev/${VAR}"1" /data/${VAR}"1" xfs defaults 0 0" >> /etc/fstab

done

mount -a &> /dev/null

chmod +x /opt/fdisk.sh

cd /opt/

./fdisk.sh

[[email protected] /opt] # echo "20.0.0.10 node1" >> /etc/hosts

[[email protected] /opt] # echo "20.0.0.5 node2" >> /etc/hosts

[[email protected] /opt] # echo "20.0.0.6 node3" >> /etc/hosts

[[email protected] /opt] # echo "20.0.0.7 node4" >> /etc/hosts

[[email protected] /opt] # ls

fdisk.sh rh

[[email protected] /opt] # rz -E

rz waiting to receive.

[[email protected] /opt] # ls

fdisk.sh gfsrepo.zip rh

[[email protected] /opt] # unzip gfsrepo.zip

[[email protected] /opt] # cd /etc/yum.repos.d/

[[email protected] /etc/yum.repos.d] # ls

local.repo repos.bak

[[email protected] /etc/yum.repos.d] # mv * repos.bak/

mv: Unable to change directory "repos.bak" Move to your own subdirectory "repos.bak/repos.bak" Next

[[email protected] /etc/yum.repos.d] # ls

repos.bak

[[email protected] /etc/yum.repos.d] # vim glfs.repo

[glfs]

name=glfs

baseurl=file:///opt/gfsrepo

gpgcheck=0

enabled=1

[[email protected] /etc/yum.repos.d] # yum clean all && yum makecache

Loaded plug-in :fastestmirror, langpacks

Cleaning up software source : glfs

Cleaning up everything

Maybe you want: rm -rf /var/cache/yum, to also free up space taken by orphaned data from disabled or removed repos

Loaded plug-in :fastestmirror, langpacks

glfs | 2.9 kB 00:00:00

(1/3): glfs/filelists_db | 62 kB 00:00:00

(2/3): glfs/other_db | 46 kB 00:00:00

(3/3): glfs/primary_db | 92 kB 00:00:00

Determining fastest mirrors

Metadata cache established

[[email protected] /etc/yum.repos.d] # yum -y install glusterfs glusterfs-server glusterfs-fuse glusterfs-rdma

[[email protected] /etc/yum.repos.d] # systemctl start glusterd.service

[[email protected] /etc/yum.repos.d] # systemctl enable glusterd.service

Created symlink from /etc/systemd/system/multi-user.target.wants/glusterd.service to /usr/lib/systemd/system/glusterd.service.

[[email protected] /etc/yum.repos.d] # systemctl status glusterd.service

3、 Add nodes to create clusters

Add nodes to the storage trust pool

[[email protected] ~] # gluster peer probe node1

peer probe: success. Probe on localhost not needed

[[email protected] ~] # gluster peer probe node2

peer probe: success.

[[email protected] ~] # gluster peer probe node3

peer probe: success.

[[email protected] ~] # gluster peer probe node4

peer probe: success.

[[email protected] ~] # gluster peer status

Number of Peers: 3

Hostname: node2

Uuid: 2ee63a35-6e83-4a35-8f54-c9c0137bc345

State: Peer in Cluster (Connected)

Hostname: node3

Uuid: e63256a9-6700-466f-9279-3e3efa3617ec

State: Peer in Cluster (Connected)

Hostname: node4

Uuid: 9931effa-92a6-40c7-ad54-7361549dd96d

State: Peer in Cluster (Connected)

4、 Create distributed volumes

# Create distributed volumes , No type specified , Distributed volumes are created by default

[[email protected] ~] # gluster volume create dis-volume node1:/data/sdb1 node2:/data/sdb1 force

volume create: dis-volume: success: please start the volume to access data

[[email protected] ~] # gluster volume list

dis-volume

[[email protected] ~] # gluster volume start dis-volume

volume start: dis-volume: success

[[email protected] ~] # gluster volume info dis-volume

Volume Name: dis-volume

Type: Distribute

Volume ID: 8f948537-5ac9-4091-97eb-0bdcf142f4aa

Status: Started

Snapshot Count: 0

Number of Bricks: 2

Transport-type: tcp

Bricks:

Brick1: node1:/data/sdb1

Brick2: node2:/data/sdb1

Options Reconfigured:

transport.address-family: inet

nfs.disable: on

5、 Create a striped roll

# The specified type is stripe, Values for 2, And followed by 2 individual Brick Server, So you're creating a striped volume

[[email protected] ~] # gluster volume create stripe-volume stripe 2 node1:/data/sdc1 node2:/data/sdc1 force

volume create: stripe-volume: success: please start the volume to access data

[[email protected] ~] # gluster volume start stripe-volume

volume start: stripe-volume: success

[[email protected] ~] # gluster volume info stripe-volume

Volume Name: stripe-volume

Type: Stripe

Volume ID: b1185b78-d396-483f-898e-3519d3ef8e37

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 2 = 2

Transport-type: tcp

Bricks:

Brick1: node1:/data/sdc1

Brick2: node2:/data/sdc1

Options Reconfigured:

transport.address-family: inet

nfs.disable: on

6、 Create replication volume

# The specified type is replica, Values for 2, And followed by 2 individual Brick Server, So what is created is a copy volume

[[email protected] ~] # gluster volume create rep-volume replica 2 node3:/data/sdb1 node4:/data/sdb1 force

volume create: rep-volume: success: please start the volume to access data

[[email protected] ~] # gluster volume start rep-volume

volume start: rep-volume: success

[[email protected] ~] # gluster volume info rep-volume

Volume Name: rep-volume

Type: Replicate

Volume ID: 9d39a2a6-b71a-44a5-8ea5-5259d8aef518

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 2 = 2

Transport-type: tcp

Bricks:

Brick1: node3:/data/sdb1

Brick2: node4:/data/sdb1

Options Reconfigured:

transport.address-family: inet

nfs.disable: on

7、 Create distributed striped volumes

# The specified type is stripe, Values for 2, And followed by 4 individual Brick Server, yes 2 Twice as many , So you create a distributed striped volume

[[email protected] ~] # gluster volume create dis-stripe stripe 2 node1:/data/sdd1 node2:/data/sdd1 node3:/data/sdd1 node4:/data/sdd1 force

volume create: dis-stripe: success: please start the volume to access data

[[email protected] ~] # gluster volume start dis-stripe

volume start: dis-stripe: success

[[email protected] ~] # gluster volume info dis-stripe

Volume Name: dis-stripe

Type: Distributed-Stripe

Volume ID: beb7aa78-78d1-435f-8d29-c163878c73f0

Status: Started

Snapshot Count: 0

Number of Bricks: 2 x 2 = 4

Transport-type: tcp

Bricks:

Brick1: node1:/data/sdd1

Brick2: node2:/data/sdd1

Brick3: node3:/data/sdd1

Brick4: node4:/data/sdd1

Options Reconfigured:

transport.address-family: inet

nfs.disable: on

8、 Deploy gluster client

[[email protected] ~]#systemctl stop firewalld

[[email protected] ~]#setenforce 0

[[email protected] ~]#cd /opt

[[email protected] opt]#ls

rh

[[email protected] opt]#rz -E

rz waiting to receive.

[[email protected] opt]#ls

gfsrepo.zip rh

[[email protected] opt]#unzip gfsrepo.zip

[[email protected] opt]#cd /etc/yum.repos.d/

[[email protected] yum.repos.d]#ls

local.repo repos.bak

[[email protected] yum.repos.d]#mv * repos.bak/

mv: Unable to change directory "repos.bak" Move to your own subdirectory "repos.bak/repos.bak" Next

[[email protected] yum.repos.d]#ls

repos.bak

[[email protected] yum.repos.d]#vim glfs.repo

[glfs]

name=glfs

baseurl=file:///opt/gfsrepo

gpgcheck=0

enabled=1

[[email protected] yum.repos.d]#yum clean all && yum makecache

[[email protected] yum.repos.d]#yum -y install glusterfs glusterfs-fuse

[[email protected] yum.repos.d]#mkdir -p /test/{

dis,stripe,rep,dis_stripe,dis_rep}

[[email protected] yum.repos.d]#cd /test/

[[email protected] test]#ls

dis dis_rep dis_stripe rep stripe

[[email protected] test]#

[[email protected] test]#echo "20.0.0.10 node1" >> /etc/hosts

[[email protected] test]#echo "20.0.0.5 node2" >> /etc/hosts

[[email protected] test]#echo "20.0.0.6 node3" >> /etc/hosts

[[email protected] test]#echo "20.0.0.7 node4" >> /etc/hosts

[[email protected] test]#mount.glusterfs node1:dis-volume /test/dis

[[email protected] test]#mount.glusterfs node1:stripe-volume /test/stripe

[[email protected] test]#mount.glusterfs node1:rep-volume /test/rep

[[email protected] test]#mount.glusterfs node1:dis-stripe /test/dis_stripe

[[email protected] test]#mount.glusterfs node1:dis-rep /test/dis_rep

[[email protected] test]#

[[email protected] test]#df -h

file system Capacity Already used You can use Already used % Mount point

/dev/sda2 16G 3.5G 13G 22% /

devtmpfs 898M 0 898M 0% /dev

tmpfs 912M 0 912M 0% /dev/shm

tmpfs 912M 18M 894M 2% /run

tmpfs 912M 0 912M 0% /sys/fs/cgroup

/dev/sda5 10G 37M 10G 1% /home

/dev/sda1 10G 174M 9.9G 2% /boot

tmpfs 183M 4.0K 183M 1% /run/user/42

tmpfs 183M 40K 183M 1% /run/user/0

/dev/sr0 4.3G 4.3G 0 100% /mnt

node1:dis-volume 6.0G 65M 6.0G 2% /test/dis

node1:stripe-volume 8.0G 65M 8.0G 1% /test/stripe

node1:rep-volume 3.0G 33M 3.0G 2% /test/rep

node1:dis-stripe 21G 130M 21G 1% /test/dis_stripe

node1:dis-rep 11G 65M 11G 1% /test/dis_rep

[[email protected] test]#cd /opt

[[email protected] opt]#dd if=/dev/zero of=/opt/demo1.log bs=1M count=40

Recorded 40+0 Read in of

Recorded 40+0 Write

41943040 byte (42 MB) Copied ,0.0311576 second ,1.3 GB/ second

[[email protected] opt]#dd if=/dev/zero of=/opt/demo2.log bs=1M count=40

Recorded 40+0 Read in of

Recorded 40+0 Write

41943040 byte (42 MB) Copied ,0.182058 second ,230 MB/ second

[[email protected] opt]#dd if=/dev/zero of=/opt/demo3.log bs=1M count=40

Recorded 40+0 Read in of

Recorded 40+0 Write

41943040 byte (42 MB) Copied ,0.196193 second ,214 MB/ second

[[email protected] opt]#dd if=/dev/zero of=/opt/demo4.log bs=1M count=40

Recorded 40+0 Read in of

Recorded 40+0 Write

41943040 byte (42 MB) Copied ,0.169933 second ,247 MB/ second

[[email protected] opt]#dd if=/dev/zero of=/opt/demo5.log bs=1M count=40

Recorded 40+0 Read in of

Recorded 40+0 Write

41943040 byte (42 MB) Copied ,0.181712 second ,231 MB/ second

[[email protected] opt]#

[[email protected] opt]#ls -lh /opt

[[email protected] opt]#cp demo* /test/dis

[[email protected] opt]#cp demo* /test/stripe/

[[email protected] opt]#cp demo* /test/rep/

[[email protected] opt]#cp demo* /test/dis_stripe/

[[email protected] opt]#cp demo* /test/dis_rep/

[[email protected] opt]#cd /test/

[[email protected] test]#tree

.

├── dis

│ ├── demo1.log

│ ├── demo2.log

│ ├── demo3.log

│ ├── demo4.log

│ └── demo5.log

├── dis_rep

│ ├── demo1.log

│ ├── demo2.log

│ ├── demo3.log

│ ├── demo4.log

│ └── demo5.log

├── dis_stripe

│ ├── demo1.log

│ ├── demo2.log

│ ├── demo3.log

│ ├── demo4.log

│ └── demo5.log

├── rep

│ ├── demo1.log

│ ├── demo2.log

│ ├── demo3.log

│ ├── demo4.log

│ └── demo5.log

└── stripe

├── demo1.log

├── demo2.log

├── demo3.log

├── demo4.log

└── demo5.log

5 directories, 25 files

[[email protected] test]#

9、 View file distribution

1、 View file distribution

## View distributed file distribution

[[email protected] ~] # ls -lh /data/sdb1

Total usage 160M

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo1.log

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo2.log

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo3.log

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo4.log

[[email protected] ~]#ll -h /data/sdb1

Total usage 40M

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo5.log

## View the Striped volume file distribution

[[email protected] ~] # ls -lh /data/sdc1

Total usage 100M

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo1.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo2.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo3.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo4.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo5.log

[[email protected] ~]#ll -h /data/sdc1

Total usage 100M

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo1.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo2.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo3.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo4.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo5.log

## View the distribution of replicated volume files

[[email protected] ~]#ll -h /data/sdb1

Total usage 200M

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo1.log

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo2.log

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo3.log

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo4.log

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo5.log

[[email protected] ~]#ll -h /data/sdb1

Total usage 200M

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo1.log

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo2.log

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo3.log

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo4.log

-rw-r--r--. 2 root root 40M 7 month 4 20:47 demo5.log

## View the distributed striped volume distribution

[[email protected] ~] # ll -h /data/sdd1

Total usage 60M

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo1.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo2.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo3.log

[[email protected] ~]#ll -h /data/sdd1

Total usage 60M

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo1.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo2.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo3.log

[[email protected] ~]#ll -h /data/sdd1

Total usage 40M

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo4.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo5.log

[[email protected] ~]#ll -h /data/sdd1

Total usage 40M

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo4.log

-rw-r--r--. 2 root root 20M 7 month 4 20:47 demo5.log

2、 Destructive testing

#1、 Hang up node2 Node or close glusterd Service to simulate failure

[[email protected] ~]# systemctl stop glusterd.service

#2、 Check whether the file is normal on the client

# Distributed volume data viewing

[[email protected] test]# ll /test/dis/ # Found missing... On the client demo5.log file , This is in node2 Upper

Total usage 163840

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo1.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo2.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo3.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo4.log

# Strip roll

[[email protected] test]# cd /test/stripe/ # cannot access , Striped volumes are not redundant

[[email protected] stripe]# ll

Total usage 0

# Distributed striped volume

[[email protected] test]# ll /test/dis_stripe/ # cannot access , Distributed striped volumes are not redundant

Total usage 40960

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo5.log

# Distributed replication volumes

[[email protected] test]# ll /test/dis_rep/ # You can visit , Distributed replication volumes are redundant

Total usage 204800

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo1.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo2.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo3.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo4.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo5.log

# Hang up node2 and node4 node , Check whether the file is normal on the client

# Test whether the replicated volume is normal

[[email protected] rep]# ls -l /test/rep/ # Test normal on the client , The data are

Total usage 204800

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo1.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo2.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo3.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo4.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:48 demo5.log

# Test whether the distributed stripe volume is normal

[[email protected] dis_stripe]# ll /test/dis_stripe/ # There is no data to test on the client

Total usage 0

# Test whether the distributed replication volume is normal

[[email protected] dis_rep]# ll /test/dis_rep/ # Test normal on the client , There's data

Total usage 204800

-rw-r--r-- 1 root root 41943040 7 month 4 20:49 demo1.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:49 demo2.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:49 demo3.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:49 demo4.log

-rw-r--r-- 1 root root 41943040 7 month 4 20:49 demo5.log

Four 、 Other maintenance commands

1. see GlusterFS volume

gluster volume list

2. View information for all volumes

gluster volume info

3. View the status of all volumes

gluster volume status

4. Stop a volume

gluster volume stop dis-stripe

5. Delete a volume , Be careful : When deleting a volume , You need to stop the volume first , And no host in the trust pool is in the down state , Otherwise, the deletion will not succeed

gluster volume delete dis-stripe

6. Set the access control for the volume

## Just refuse

gluster volume set dis-rep auth.allow 20.0.0.20

## Only allowed

gluster volume set dis-rep auth.allow 20.0.0.* # Set up 20.0.0.0 All of the segments IP The address can be accessed dis-rep volume ( Distributed replication volumes )

边栏推荐

- 3D reconstruction of point cloud

- Neural structured learning 4 antagonistic learning for image classification

- How to enable relationship view in phpMyAdmin - how to enable relationship view in phpMyAdmin

- Comparison of parameters between TVs tube and zener diode

- TVS管 与 稳压二极管参数对比

- Multi camera stereo calibration

- Sum of two numbers, sum of three numbers (sort + double pointer)

- Hcip course notes-16 VLAN, three-tier architecture, MPLS virtual private line configuration

- 并查集实践

- Idea rundashboard window configuration

猜你喜欢

Practice of concurrent search

Spire Office 7.5.4 for NET

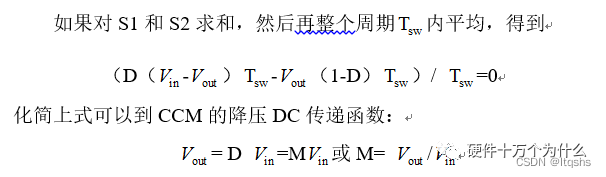

Switching power supply buck circuit CCM and DCM working mode

4点告诉你实时聊天与聊天机器人组合的优势

698. 划分为k个相等的子集 ●●

SpreadJS 15.1 CN 与 SpreadJS 15.1 EN

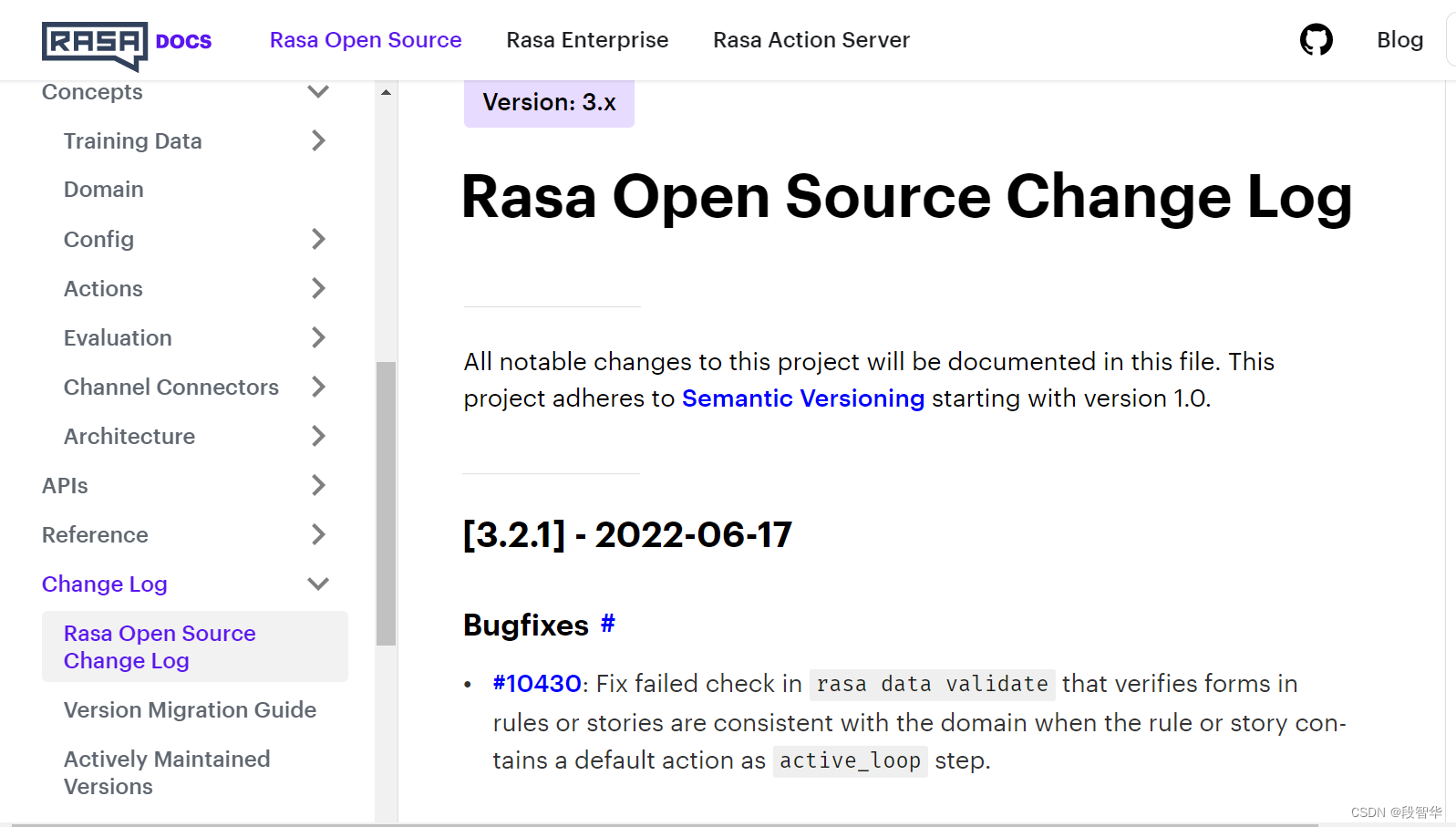

Rasa 3. X learning series -rasa 3.2.1 new release

Rasa 3. X learning series -rasa x Community Edition (Free Edition) changes

并查集实践

数学公式截图识别神器Mathpix无限使用教程

随机推荐

Basic knowledge of database (interview)

GFS分布式文件系统

Déterminer si un arbre binaire est un arbre binaire complet

Pyqt control part (I)

Spire Office 7.5.4 for NET

二叉树递归套路总结

11gR2 Database Services for "Policy" and "Administrator" Managed Databases (文件 I

【原创】程序员团队管理的核心是什么?

Go language implementation principle -- lock implementation principle

TVS管和ESD管的技術指標和選型指南-嘉立創推薦

Idea connects to MySQL, and it is convenient to paste the URL of the configuration file directly

When to use useImperativeHandle, useLayoutEffect, and useDebugValue

Krypton Factor-紫书第七章暴力求解

判斷二叉樹是否為完全二叉樹

帶外和帶內的區別

Attacking technology Er - Automation

TS type declaration

asp.net弹出层实例

MySQL delete uniqueness constraint unique

UVA – 11637 Garbage Remembering Exam (组合+可能性)