
DolphinScheduler 2.0.5 task test (Spark task) error: Container exited with a non-zero exit code 1

2022-06-23 05:03:00 【Zhun Xiaozhao】


Container exited with a non-zero exit code 1

Yesterday, during part three of my DolphinScheduler functional tests involving HDFS (the Spark task), I ran into a problem I could not solve. Looking at it again today, the cause was clear at a glance.

Configuring the local browser to reach the virtual machine by hostname

Every time I opened a page, I had to replace the VM hostname host1 in the URL with its actual IP address before the browser could load it, which was tedious.

Configuration method

- On the local machine, edit C:\Windows\System32\drivers\etc\hosts so that its entries match the virtual machine's /etc/hosts.
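The snippet below is a small aid for keeping the two files in sync. It parses hosts-file text and lists the hostnames whose IP on the local machine differs from the VM's. This is a minimal Python sketch, and the function names are hypothetical. The sample mapping of host1 to 192.168.56.10 is an assumption, based on the NameNode address that appears later in the logs. Loopback entries such as localhost are skipped because they are machine-specific.

```python
from __future__ import annotations


def parse_hosts(text: str) -> dict[str, str]:
    """Map every hostname in hosts-file text to its IP address."""
    mapping: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping[name] = ip
    return mapping


def hosts_mismatches(local_text: str, vm_text: str) -> dict[str, tuple]:
    """Return {hostname: (local_ip_or_None, vm_ip)} for VM entries the local file lacks or contradicts."""
    local, vm = parse_hosts(local_text), parse_hosts(vm_text)
    return {
        name: (local.get(name), ip)
        for name, ip in vm.items()
        # Loopback entries (assumed machine-specific) are not expected to match.
        if not (ip.startswith("127.") or ip == "::1") and local.get(name) != ip
    }
```

Pass it the contents of both files. An empty result means the local machine should resolve every non-loopback VM hostname the same way the VM does.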

Verify the login

Check the logs

Check the container output logs

stderr (no useful information)

stdout (this finally revealed the cause)

The full log:


    /home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/pyspark.zip/pyspark/sql/context.py:77: FutureWarning: Deprecated in 3.0.0. Use SparkSession.builder.getOrCreate() instead.
    /home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/pyspark.zip/pyspark/sql/dataframe.py:138: FutureWarning: Deprecated in 2.0, use createOrReplaceTempView instead.
    Traceback (most recent call last):
      File "/home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/sparktasktest.py", line 42, in <module>
        df_result.coalesce(1).write.json(sys.argv[2])
      File "/home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/pyspark.zip/pyspark/sql/readwriter.py", line 846, in json
      File "/home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/py4j-0.10.9.3-src.zip/py4j/java_gateway.py", line 1321, in __call__
      File "/home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/pyspark.zip/pyspark/sql/utils.py", line 111, in deco
      File "/home/dolphinscheduler/app/hadoop-2.7.3/data/tmp/nm-local-dir/usercache/dolphinscheduler/appcache/application_1655121288928_0003/container_1655121288928_0003_01_000001/py4j-0.10.9.3-src.zip/py4j/protocol.py", line 326, in get_return_value
    py4j.protocol.Py4JJavaError: An error occurred while calling o56.json.
    : java.io.IOException: Incomplete HDFS URI, no host: hdfs:///test/softresult
        at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:143)
        at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2669)
        at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:94)
        at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2703)
        at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2685)
        at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:373)
        at org.apache.hadoop.fs.Path.getFileSystem(Path.java:295)
        at org.apache.spark.sql.execution.datasources.DataSource.planForWritingFileFormat(DataSource.scala:461)
        at org.apache.spark.sql.execution.datasources.DataSource.planForWriting(DataSource.scala:556)
        at org.apache.spark.sql.DataFrameWriter.saveToV1Source(DataFrameWriter.scala:382)
        at org.apache.spark.sql.DataFrameWriter.saveInternal(DataFrameWriter.scala:355)
        at org.apache.spark.sql.DataFrameWriter.save(DataFrameWriter.scala:239)
        at org.apache.spark.sql.DataFrameWriter.json(DataFrameWriter.scala:763)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)
        at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)
        at py4j.Gateway.invoke(Gateway.java:282)
        at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)
        at py4j.commands.CallCommand.execute(CallCommand.java:79)
        at py4j.ClientServerConnection.waitForCommands(ClientServerConnection.java:182)
        at py4j.ClientServerConnection.run(ClientServerConnection.java:106)
        at java.lang.Thread.run(Thread.java:748)

Environment problem

ModuleNotFoundError: No module named 'py4j'

The script that ran successfully last night now fails when run on its own, reporting that the module does not exist.

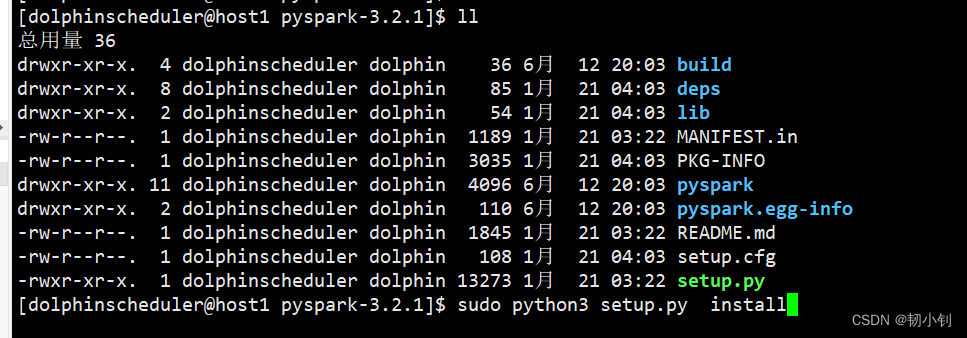

Reinstall pyspark

This time install it online. Last night it was installed offline, and the downloaded package may have been faulty.

sudo /usr/local/python3/bin/pip3 install pyspark

- After reinstalling, running the script again no longer reports a missing-module error.

- For reference, the manual (offline) pyspark installation steps: unpack the installation package, then run sudo python3 setup.py install in the unpacked directory.
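Before resubmitting, confirm that the interpreter the Spark task actually uses can import both packages. pip may have installed them into a different Python. Here is a minimal sketch; the helper name is hypothetical. Run it with that same python3:

```python
import importlib.util
import sys


def missing_modules(names):
    """Return the top-level modules from `names` that this interpreter cannot import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(["pyspark", "py4j"])
    # Print the interpreter path too, so it can be compared with the one Spark is configured to use.
    print(sys.executable, "missing:", missing or "none")
```

`find_spec` only looks the module up without importing it, so the check is cheap and has no side effects.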

Verify again with spark-submit

The error remains:

Error: Incomplete HDFS URI, no host: hdfs:///test/softresult

Posts online suggested that the Hadoop configuration files might not be getting picked up. Checking confirmed there really was a configuration problem (related to the HADOOP_HOME path).
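Here is why a missing Hadoop configuration produces this particular error, as I understand Hadoop's `FileSystem.get`:

- A URI such as hdfs:///test/softresult has a scheme but no host, so Hadoop tries to borrow the host and port from fs.defaultFS in core-site.xml.
- If no Hadoop configuration is loaded, fs.defaultFS falls back to its built-in default of file:///. The schemes then do not match.
- The URI therefore reaches `DistributedFileSystem.initialize` without a host, and that call rejects it.

The Python function below is a simplified illustration of that rule, not Hadoop's actual code. The NameNode address in the example is taken from the later error message.

```python
from urllib.parse import urlparse, urlunparse


def resolve_hdfs_path(path: str, default_fs: str) -> str:
    """Simplified model of how a host-less hdfs:/// path borrows its host from fs.defaultFS."""
    uri = urlparse(path)
    if uri.scheme != "hdfs" or uri.netloc:
        # Not HDFS, or already fully qualified: use as-is.
        return path
    default = urlparse(default_fs)
    if default.scheme == uri.scheme and default.netloc:
        # Borrow the NameNode host:port from fs.defaultFS.
        return urlunparse(uri._replace(netloc=default.netloc))
    # Mirrors the failure in the log when fs.defaultFS is still file:///.
    raise ValueError(f"Incomplete HDFS URI, no host: {path}")
```

A fully qualified path such as hdfs://192.168.56.10:8020/test/softresult would sidestep the lookup entirely, at the cost of hard-coding the NameNode address into the task.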

- So I configured HADOOP_HOME explicitly, together with HADOOP_CONF_DIR, in conf/spark-env.sh:

    export JAVA_HOME=/usr/local/java/jdk1.8.0_151
    export HADOOP_HOME=/home/dolphinscheduler/app/hadoop-2.7.3
    export HADOOP_CONF_DIR=/home/dolphinscheduler/app/hadoop-2.7.3/etc/hadoop
    export PYSPARK_PYTHON=/usr/local/bin/python3
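To double-check that the directory in HADOOP_CONF_DIR actually provides fs.defaultFS, a small sketch like the following reads the value out of core-site.xml. It assumes the standard Hadoop 2.x property name fs.defaultFS. The function names are hypothetical.

```python
from __future__ import annotations

import os
import xml.etree.ElementTree as ET


def parse_default_fs(xml_text: str | bytes) -> str | None:
    """Return the fs.defaultFS value from core-site.xml content, or None if it is not set."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == "fs.defaultFS":
            return (prop.findtext("value") or "").strip()
    return None


def read_default_fs(conf_dir: str | None = None) -> str | None:
    """Look up fs.defaultFS in $HADOOP_CONF_DIR/core-site.xml (or an explicit directory)."""
    conf_dir = conf_dir or os.environ.get("HADOOP_CONF_DIR")
    if not conf_dir:
        return None  # no Hadoop config directory configured at all
    path = os.path.join(conf_dir, "core-site.xml")
    if not os.path.isfile(path):
        return None
    with open(path, "rb") as f:  # bytes let the XML declaration pick the encoding
        return parse_default_fs(f.read())
```

`read_default_fs()` may return None under the environment the Spark task runs with. In that case, scheme-only paths like hdfs:///... will keep failing in the same way.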

Error: path hdfs://192.168.56.10:8020/test/softresult already exists.

That means the original problem is solved. Earlier, when the job failed with no host: hdfs:///test/softresult, I suspected the directory did not exist and created it manually. That is why the job now reports that the directory already exists. So I specified a new output directory and checked again.
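A Spark write fails by default when the target directory already exists. One option for repeated test runs is to give each run its own output directory. Here is a minimal sketch, assuming a timestamp suffix is acceptable in the output path; the helper is hypothetical:

```python
from __future__ import annotations

from datetime import datetime


def fresh_output_path(base: str, now: datetime | None = None) -> str:
    """Append a run timestamp so every submission writes to a new directory."""
    now = now or datetime.now()
    return f"{base.rstrip('/')}_{now:%Y%m%d%H%M%S}"
```

For example, `fresh_output_path("hdfs:///test/softresult", datetime(2022, 6, 23, 5, 3, 0))` gives `hdfs:///test/softresult_20220623050300`.

Alternatively, PySpark's `DataFrameWriter` supports `mode("overwrite")`, as in `df_result.coalesce(1).write.mode("overwrite").json(sys.argv[2])`. That replaces the existing directory instead. It is convenient for tests but discards the previous results.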

SUCCEEDED

- It succeeded.

- The verification result is still not quite right, though: the data was not written out, so the problem must be in the program itself. (Time to study Python next? The more I learn, the more I realize how little I know.)

边栏推荐

- 磁阻 磁饱和

- Current relay hdl-a/1-110vdc-1

- ICer技能03Design Compile

- free( )的一个理解(《C Primer Plus》的一个错误)

- PCB placing components at any angle and distance

- McKinsey: in 2021, the investment in quantum computing market grew strongly and the talent gap expanded

- 【OFDM通信】基于matlab OFDM多用户资源分配仿真【含Matlab源码 1902期】

- Const understanding II

- How to make social media the driving force of cross-border e-commerce? This independent station tool cannot be missed!

- Can bus Basics

猜你喜欢

ApiPost接口测试的用法之------Post

Abnova 荧光染料 555-C3 马来酰亚胺方案

Icer Skill 02makefile script Running VCS Simulation

dolphinscheduler 2.0.5 spark 任务测试总结(源码优化)

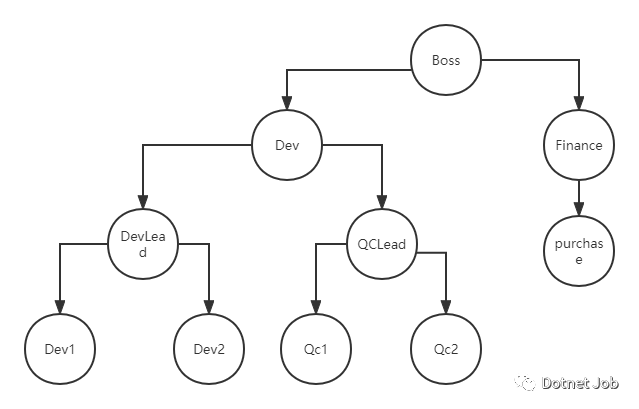

Talk about the composite pattern in C #

Current relay jdl-1002a

Mini Homer——几百块钱也能搞到一台远距离图数传链路?

ICer技能02makefile脚本自跑vcs仿真

Abnova blood total nucleic acid purification kit protocol

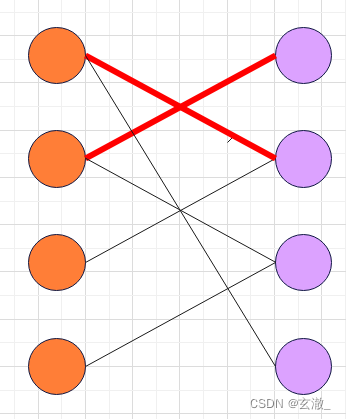

【图论】—— 二分图

随机推荐

With the arrival of intelligent voice era, who is defining AI in the new era?

What are the types of independent station chat robots? How to quickly create your own free chat robot? It only takes 3 seconds!

Abnova LiquidCell-负富集细胞分离和回收系统

Gson typeadapter adapter

Openwrt directory structure

项目总结1(头文件,switch,&&,位变量)

独立站聊天机器人有哪些类型?如何快速创建属于自己的免费聊天机器人?只需3秒钟就能搞定!

DPR-34V/V双位置继电器

Install and run mongodb under win10

ApiPost接口测试的用法之------Post

2022 simulated examination question bank and answers for safety management personnel of metal and nonmetal mines (open pit mines)

Abnova PSMA磁珠解决方案

Current relay jdl-1002a

PCB -- bridge between theory and reality

如何更好地组织最小 WEB API 代码结构

【论文阅读】Semi-Supervised Learning with Ladder Networks

Abnova liquidcell negative enrichment cell separation and recovery system

Laravel 通过服务提供者来自定义分页样式

Actual combat | multiple intranet penetration through Viper

聊聊 C# 中的 Composite 模式