[OpenVINO + Paddle] Exporting PaddleDetection / OCR / Seg models with Paddle2ONNX

2022-07-04 05:50:00 【Yida gum】

I have run all the code in this article, and I provide the full source code, datasets, and related files. My environment is BML CodeLab, whose home path is /home/work/ rather than AI Studio's /home/aistudio/, so watch the paths when you adapt the code. If anything is unclear or you find a mistake, search for the account "Yida gum" on CSDN and message me.
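Since the two platforms differ only in their home directory, the path conversion can be sketched in a few lines. This is a minimal illustration, not part of the original project; the helper name `to_bml_path` is hypothetical.

```python
from pathlib import PurePosixPath

AISTUDIO_HOME = PurePosixPath("/home/aistudio")  # AI Studio home directory
BML_HOME = PurePosixPath("/home/work")           # BML CodeLab home directory


def to_bml_path(path: str) -> str:
    """Rewrite an AI Studio path so that it points into the BML CodeLab home.

    Paths outside /home/aistudio are returned unchanged.
    """
    p = PurePosixPath(path)
    try:
        relative = p.relative_to(AISTUDIO_HOME)
    except ValueError:
        # The path does not live under the AI Studio home directory.
        return str(p)
    return str(BML_HOME / relative)


print(to_bml_path("/home/aistudio/PaddleOCR/requirements.txt"))
```

The same idea works in the other direction by swapping the two constants.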

I have also uploaded the code and data to AI Studio. If you want to compare results or try it yourself, you can run it online directly. Here is the link:

https://aistudio.baidu.com/aistudio/projectdetail/3459413

PaddlePaddle models are commonly deployed through ONNX, so a model trained with PaddlePaddle usually has to be exported before it can be deployed on a CPU or other hardware. In this project I first demonstrate the overall dynamic-graph and static-graph export flow, and then walk through exporting the common Detection, OCR, and Seg models for later deployment and use.

1、Introduction to ONNX

ONNX is an open-source file format designed for machine learning and used to store trained models. A model trained in a supported framework can be saved in this format. An ONNX model is a directed acyclic graph made of many nodes; each node has one or more inputs and one or more outputs, and these inputs and outputs connect the nodes into a complete graph. Each node is called an OP (operator).
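To make the "graph of nodes with inputs and outputs" idea concrete, here is a toy sketch in plain Python. It is only a stand-in for illustration: the `Node` and `Graph` classes are hypothetical and are not the real ONNX protobuf structures, and the tensor names are made up.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    op_type: str          # operator name, e.g. "Conv"
    inputs: List[str]     # names of the tensors this node consumes
    outputs: List[str]    # names of the tensors this node produces


@dataclass
class Graph:
    inputs: List[str]
    outputs: List[str]
    nodes: List[Node] = field(default_factory=list)

    def producers(self) -> Dict[str, Node]:
        """Map every tensor name to the node that produces it."""
        return {out: node for node in self.nodes for out in node.outputs}


# Three nodes chained through named tensors: image -> feat -> act -> prob
graph = Graph(
    inputs=["image"],
    outputs=["prob"],
    nodes=[
        Node("Conv", ["image", "conv_w"], ["feat"]),
        Node("Relu", ["feat"], ["act"]),
        Node("Sigmoid", ["act"], ["prob"]),
    ],
)

for node in graph.nodes:
    print(node.op_type, node.inputs, "->", node.outputs)
```

Because every edge is a named tensor produced by exactly one node, following `producers()` backwards from an output walks the directed acyclic graph.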

With ONNX, models built with different frameworks can be stored in the same file format.

In practice you may run into this situation: you find a good PyTorch model, but you cannot get its dataset, or you lack the hardware to train it. A practical solution is to migrate the model directly and then deploy it, using ONNX as the intermediate format. First, save the PyTorch model in ONNX format. The ONNX file can then be converted, for example with PaddleX (a Paddle toolkit), into a Paddle inference model, which you can then deploy.

This is very convenient for research and deployment. As the figure shows, ONNX is supported by many deep learning frameworks, such as TensorFlow, PyTorch, Caffe, and PaddlePaddle, and a converted model can be deployed to CPU, GPU, or FPGA.

2、Introduction to Paddle2ONNX

PaddlePaddle is the earliest open-source deep learning framework in China and has worked closely with ONNX. It open-sourced Paddle2ONNX as a core module of PaddlePaddle. Paddle2ONNX saves models trained with the PaddlePaddle framework directly in ONNX format, so developers can deploy Paddle models quickly, efficiently, and flexibly to mainstream platforms.

As the accompanying picture shows, we use PaddlePaddle as the deep learning framework and train a model on a large amount of data, and then we want to deploy it on some platform. What if that platform, for example something like TensorRT, does not support Paddle models directly? This is where Paddle2ONNX helps: after training, we export the Paddle model directly to ONNX format and deploy the ONNX model. In this way Paddle models can reach many different platforms and be deployed in many forms. In other words, ONNX is the bridge between Paddle models and the various deployment platforms.

3、 Dynamic-graph and static-graph export

Dynamic-graph export

A few simple lines are enough to export a Paddle model to ONNX. In the dynamic-graph example (on the left of the original figure), we first build the network with the Paddle API, then describe the input the network expects, and finally export the model with a single call to export. The call takes the model, the name of the ONNX file to write, and the input specification; it runs the related model logic and writes the model in ONNX format.

Static-graph export

The above covers networks built as dynamic graphs with Paddle 2.0 or later. If a model has already been exported as an inference model, use static-graph export instead. This is done directly from the command line: specify the directory of the model to convert, the names of its model file and parameter file, and the name of the ONNX file to write, and the conversion is done.
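The command-line conversion described above can also be assembled from Python. The sketch below only builds and prints the argument list; the flag names are the ones used by the paddle2onnx commands later in this article, the file names are the u2netp example values, and the helper `build_paddle2onnx_command` is a hypothetical name.

```python
import shlex


def build_paddle2onnx_command(model_dir, model_filename, params_filename,
                              save_file, opset_version=12):
    """Return the paddle2onnx command as a list of arguments."""
    return [
        "paddle2onnx",
        "--model_dir", model_dir,              # directory of the inference model
        "--model_filename", model_filename,    # model structure file
        "--params_filename", params_filename,  # model weights file
        "--save_file", save_file,              # ONNX file to write
        "--opset_version", str(opset_version),
    ]


cmd = build_paddle2onnx_command("u2netp", "__model__", "__params__",
                                "u2netp_static.onnx")
# Print the command in a form that can be pasted into a shell.
print(" ".join(shlex.quote(part) for part in cmd))
```

To actually run the conversion, the list can be passed to `subprocess.run(cmd, check=True)` in an environment where paddle2onnx is installed.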

PaddlePaddle now supports many excellent open-source algorithm suites, such as NLP, Seg, object detection, classification, and OCR. If you find a Paddle model that you want to use in your project, you can convert it to ONNX and deploy it to various platforms.

4、Models supported by Paddle2ONNX

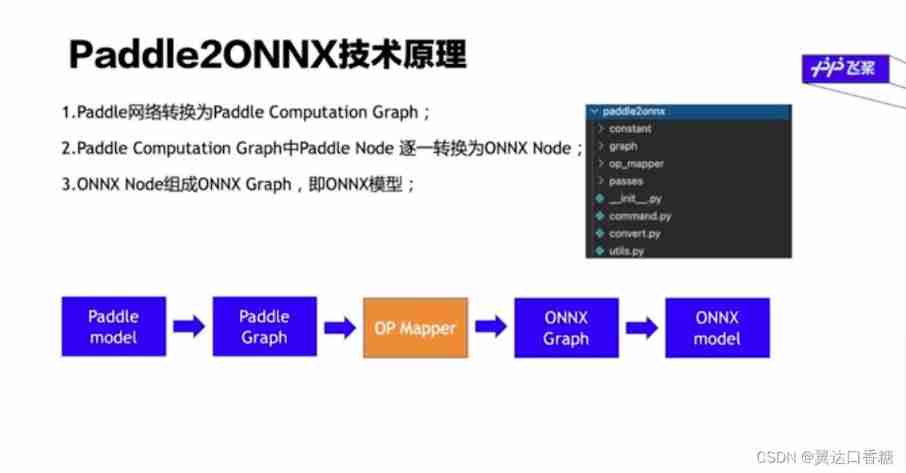

5、How Paddle2ONNX works

There are three main steps. In the first step, a network built with the Paddle 2.0+ API (made of convolution layers, fully connected layers, and so on) is converted into a Paddle computation graph. Both the Paddle and the ONNX computation graphs are represented by the graph implementation in the project's code.

The second and most important step is OP matching. As mentioned above, the Paddle computation graph is a directed acyclic graph with many nodes. In this step the OPs are converted one by one in topological order, from beginning to end, into ONNX nodes, and these nodes make up the ONNX model. Finally, before the model is exported, it is checked (for example, for useless nodes) to verify that it is reasonable. If it is, the nodes are spliced together and exported as the ONNX model. The most important part of the Paddle2ONNX project is the op mapper, which implements the conversion from a Paddle node to an ONNX node and is the core of the whole project.
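The OP matching step can be sketched as a lookup table applied in topological order. This is a simplified illustration under stated assumptions, not the Paddle2ONNX implementation: the `OP_MAPPER` table, the graph layout, and the function names are all hypothetical.

```python
from collections import deque

# Illustrative table: source op type -> target op type (not the real mapper).
OP_MAPPER = {
    "conv2d": "Conv",
    "relu": "Relu",
    "elementwise_add": "Add",
    "softmax": "Softmax",
}


def topological_order(nodes):
    """Order node names so that every node comes after the nodes it depends on.

    nodes maps a node name to (op_type, [names of upstream nodes]).
    """
    indegree = {name: 0 for name in nodes}
    consumers = {name: [] for name in nodes}
    for name, (_, deps) in nodes.items():
        for dep in deps:
            indegree[name] += 1
            consumers[dep].append(name)
    queue = deque(name for name, degree in indegree.items() if degree == 0)
    order = []
    while queue:
        current = queue.popleft()
        order.append(current)
        for nxt in consumers[current]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                queue.append(nxt)
    if len(order) != len(nodes):
        raise ValueError("the graph contains a cycle")
    return order


def convert(nodes):
    """Map every node to its target op type, reporting unsupported ops first."""
    unsupported = sorted({op for op, _ in nodes.values() if op not in OP_MAPPER})
    if unsupported:
        raise NotImplementedError(f"unsupported ops: {unsupported}")
    return [(name, OP_MAPPER[nodes[name][0]]) for name in topological_order(nodes)]


paddle_graph = {
    "add0": ("elementwise_add", ["relu0", "conv0"]),
    "conv0": ("conv2d", []),
    "relu0": ("relu", ["conv0"]),
}
print(convert(paddle_graph))
```

Checking for unsupported ops before converting mirrors the behaviour described in the next section, where the export reports which OP is missing.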

6、OP development process

At present not many Paddle models can be converted, and many good models cannot be deployed, so developers sometimes need to develop new OPs.

This is the core op mapper development: what to do when you hit an OP that is not supported. When a model built with the Paddle API is converted, the conversion actually works down at the code level, on the OPs in the computation graph behind each API. What is an API? It is the interface that users interact with; through it users call the OPs inside Paddle. One API may correspond to one or several OPs, and together they form the model network.

When converting to ONNX, if the model uses parts that are not yet officially supported, the export fails because some of these APIs contain unsupported OPs, and the exporter reports exactly which OP is not supported.

In that case we have to write code by hand to define the conversion. Note that the OPs of the two formats do not necessarily correspond one to one; sometimes several OPs have to be combined. This development is difficult and complicated enough to deserve a project of its own, so none of the examples below involve OP development; all of them can be converted directly.
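As a small illustration of one source OP becoming several target OPs, the sketch below decomposes swish(x) = x * sigmoid(x) into a Sigmoid node followed by a Mul node and checks the result numerically. Whether Paddle2ONNX maps swish exactly this way is an assumption; the function names and the tiny interpreter are hypothetical and exist only to show the idea.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def map_swish(input_name, output_name):
    """Expand one swish op into two target nodes: Sigmoid, then Mul."""
    hidden = output_name + "_sigmoid"  # intermediate tensor name
    return [
        ("Sigmoid", [input_name], [hidden]),
        ("Mul", [input_name, hidden], [output_name]),
    ]


# Scalar stand-ins for the two target operators.
KERNELS = {"Sigmoid": sigmoid, "Mul": lambda a, b: a * b}


def run(nodes, feeds):
    """Evaluate the nodes in order on scalar values."""
    values = dict(feeds)
    for op_type, inputs, outputs in nodes:
        values[outputs[0]] = KERNELS[op_type](*[values[name] for name in inputs])
    return values


nodes = map_swish("x", "y")
result = run(nodes, {"x": 1.5})["y"]
reference = 1.5 / (1.0 + math.exp(-1.5))  # swish computed directly
print(len(nodes), math.isclose(result, reference))
```

A real op mapper also has to carry over attributes, data types, and shapes, which is part of why the author calls this development complicated.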

While working on this I mainly referred to the following materials and videos. If something is unclear, read through them and think it over again.

https://aistudio.baidu.com/aistudio/projectdetail/3458732?forkThirdPart=1

https://aistudio.baidu.com/aistudio/projectdetail/1904306

https://aistudio.baidu.com/aistudio/projectdetail/2187665?channelType=0&channel=0

https://aistudio.baidu.com/aistudio/projectdetail/1637670?channelType=0&channel=0

https://aistudio.baidu.com/aistudio/projectdetail/2250546?channelType=0&channel=0

https://aistudio.baidu.com/aistudio/projectdetail/2254777?channelType=0&channel=0

https://www.bilibili.com/video/BV1aq4y1G7Ma?from=search&seid=14303610212269096609&spm_id_from=333.337.0.0

Paddle dynamic-graph and static-graph model ONNX export (using u2netp as an example)

1、 Environment setup

https://aistudio.baidu.com/aistudio/projectdetail/3459413

Install dependencies

First install pycocotools, paddle2onnx, and onnxruntime. pycocotools (Python API tools of COCO) is used to process COCO datasets, paddle2onnx needs no introduction, and onnxruntime is used later to test the exported model. You may wonder why onnx is installed in a separate second step rather than together with the others. Do not install them together: the onnx version must be pinned, and it must be no higher than 1.9.0. Many shared open-source projects install it without a pin; that worked only because newer versions had not been released at the time.

!pip install pycocotools paddle2onnx onnxruntime

!pip install onnx==1.9.0
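After the two install steps, it can help to confirm which onnx version actually ended up in the environment. A minimal sketch using only the standard library follows; the 1.9.0 ceiling comes from the text above, while the helper names are hypothetical.

```python
import re
from importlib import metadata

MAX_ONNX_VERSION = (1, 9, 0)  # ceiling stated in this article


def parse_version(text):
    """Turn a version string such as '1.9.0' into a tuple of three integers."""
    numbers = [int(part) for part in re.findall(r"\d+", text)[:3]]
    return tuple(numbers + [0] * (3 - len(numbers)))


def onnx_version_ok(installed, maximum=MAX_ONNX_VERSION):
    """Return True when the installed version does not exceed the ceiling."""
    return parse_version(installed) <= maximum


def installed_onnx_version():
    """Return the installed onnx version string, or None if it is missing."""
    try:
        return metadata.version("onnx")
    except metadata.PackageNotFoundError:
        return None


version = installed_onnx_version()
if version is None:
    print("onnx is not installed")
else:
    print(version, "ok" if onnx_version_ok(version) else "too new, reinstall onnx==1.9.0")
```

Running this at the top of the notebook after every restart catches the case where another package has silently upgraded onnx.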

Next, download Paddle2ONNX and install it from source. Because this is BML, the environment setup code must be rerun every time you shut down and log in again; otherwise you get errors from the missing environment.

!git clone https://github.com/paddlepaddle/paddle2onnx --depth 1

!cd ~/paddle2onnx && python setup.py install

Install the GPU build of PaddlePaddle in advance to avoid errors later; I will install it again further on.

!python -m pip install paddlepaddle-gpu==2.1.3.post101 -f https://www.paddlepaddle.org.cn/whl/linux/mkl/avx/stable.html

Extract the model-related files. Because this is the first project, I have already prepared the related files and environment. You only need to download the archive from Baidu Netdisk and extract it into the same directory, or upload it to work when running the project. Baidu Netdisk link: https://pan.baidu.com/s/1k7rRTGoGGyLRQdEU2SnYvg password: mp95. If the file has expired or there is any problem, message Yida gum on CSDN.

!unzip wenjiang.zip

2、 Exporting a dynamic-graph model to ONNX

Build a dynamic-graph model with Paddle 2.0 and call paddle.onnx.export to export it to ONNX quickly.

The general principle is dynamic graph -> static graph -> ONNX model. The code below demonstrates the export process.

import os
import time

import paddle

# Import the model class from the model code
from u2net import U2NETP

# Instantiate the model
model = U2NETP()

# Load the pretrained model parameters
model.set_dict(paddle.load('u2netp.pdparams'))

# Switch the model to evaluation mode
model.eval()

# Describe the input: a batch of 3 x 320 x 320 float32 images with a dynamic batch size
input_spec = paddle.static.InputSpec(shape=[None, 3, 320, 320], dtype='float32', name='image')

# Export the ONNX model (written to u2netp.onnx)
paddle.onnx.export(model, 'u2netp', input_spec=[input_spec], opset_version=12)

3、 Exporting a static-graph model to ONNX

Besides dynamic-graph models, static-graph inference models can also be converted to ONNX.

Run the following command from the command line to convert. For more on the command-line parameters, see the project's GitHub home page.

!paddle2onnx \
    --model_dir u2netp \
    --model_filename __model__ \
    --params_filename __params__ \
    --save_file u2netp_static.onnx \
    --opset_version 12

4、 Model visualization

The VisualDL tool makes it easy to visualize the model structure.

On the AI Studio platform, click Visualization on the left, choose Select model file, and pick the model file you just saved with the .onnx suffix.

Then start the service to view the model's computation graph and related information.

PaddleDetection model ONNX export (using YOLOv3 as an example)

1、 Environment setup

Clone the PaddleDetection code

!git clone https://gitee.com/PaddlePaddle/PaddleDetection.git -b develop

Install the dependencies and the GPU environment

! pip install -r PaddleDetection/requirements.txt

!python -m pip install paddlepaddle-gpu==2.1.3.post101 -f https://www.paddlepaddle.org.cn/whl/linux/mkl/avx/stable.html

2、 Export the model

The static-graph version of PaddleDetection is used for this demonstration.

The model is the pretrained YOLOv3 pedestrian detection model.

%cd ~/PaddleDetection/static/

!python tools/export_model.py -c contrib/PedestrianDetection/pedestrian_yolov3_darknet.yml --output_dir ~/inference_model

3、 Convert the model

Use Paddle2ONNX to convert the model.

%cd ~

!paddle2onnx \
    --model_dir inference_model/pedestrian_yolov3_darknet \
    --model_filename __model__ \
    --params_filename __params__ \
    --save_file inference_model/pedestrian_yolov3_darknet_onnx/inference.onnx \
    --opset_version 12 \
    --enable_onnx_checker True

!cp inference_model/pedestrian_yolov3_darknet/infer_cfg.yml inference_model/pedestrian_yolov3_darknet_onnx

4、 Test the model

Use ONNXRuntime to check that the model loads correctly.

import os

import onnxruntime


def load_onnx(model_dir):
    # Build the path to the exported ONNX file
    model_path = os.path.join(model_dir, 'inference.onnx')
    # Create the inference session
    session = onnxruntime.InferenceSession(model_path)
    # Collect the input and output names declared by the model
    input_names = [inp.name for inp in session.get_inputs()]
    output_names = [out.name for out in session.get_outputs()]
    return session, input_names, output_names


session, input_names, output_names = load_onnx('inference_model/pedestrian_yolov3_darknet_onnx')
print(input_names, output_names)
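Loading the model only proves that the file parses. To go one step further and feed it dummy data, the dynamic dimensions reported by the session (which show up as None or as symbolic names) first have to be replaced by concrete sizes. The helper below is a hypothetical sketch of that step and uses no third-party packages; the example shapes are illustrative, not read from the exported model.

```python
def concrete_shape(shape, default=1):
    """Replace dynamic dimensions (None, names, or non-positive values) by a fixed size."""
    return [dim if isinstance(dim, int) and dim > 0 else default for dim in shape]


# Illustrative shapes in the form that session.get_inputs()[i].shape reports them.
print(concrete_shape([None, 3, 320, 320]))
print(concrete_shape(["batch", 2]))
```

With numpy available, one could build a zero-filled array of shape `concrete_shape(inp.shape)` for every entry of `session.get_inputs()`, using the dtype that matches each input's declared type, and pass the resulting dictionary to `session.run(output_names, feeds)`.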

PaddleSeg model ONNX export

Introduction to PaddleSeg

PaddleSeg release 2.0 provides 50+ high-quality pretrained models, supports 15+ mainstream segmentation networks, and includes the industry SOTA model OCRNet, which improves the usability of the product.

PaddleSeg is an end-to-end image segmentation development kit built on PaddlePaddle. It covers a large number of high-quality segmentation models in different directions, such as high accuracy and lightweight. Through its modular design it offers two usage modes, configuration-driven and API calls, helping developers complete the whole image segmentation workflow from training to deployment more easily.

1、 Environment setup

Install PaddleSeg

! pip install paddleseg

Download the PaddleSeg code

! git clone https://gitee.com/paddlepaddle/PaddleSeg.git

2、 Train the model

! python PaddleSeg/train.py --config PaddleSeg/configs/quick_start/bisenet_optic_disc_512x512_1k.yml --do_eval \
    --use_vdl --save_interval 200 --save_dir output

3、 Evaluate the model

Once the model is saved, it can be evaluated with the script that PaddleSeg provides.

! python PaddleSeg/val.py \
    --config PaddleSeg/configs/quick_start/bisenet_optic_disc_512x512_1k.yml \
    --model_path output/iter_1000/model.pdparams

4、 Predict with the model

Once the model is saved, you can run prediction with the script that PaddleSeg provides and visualize the results to check the segmentation quality.

--model_path output/iter_1000/model.pdparams selects the model path. --image_path data/optic_disc_seg/JPEGImages/N0093.jpg selects the image to predict; if image_path contains several images, all of them are predicted. --save_dir output/result is where the prediction results are saved.

! python PaddleSeg/predict.py --config PaddleSeg/configs/quick_start/bisenet_optic_disc_512x512_1k.yml \
    --model_path output/iter_1000/model.pdparams \
    --image_path data/optic_disc_seg/JPEGImages/N0093.jpg \
    --save_dir output/result

5、 Export the model

PaddleSeg provides one-step dynamic-to-static conversion, which turns the trained dynamic-graph model file into static-graph form.

Result files

output
├── deploy.yaml            # deployment configuration file
├── model.pdiparams        # static-graph model parameters
├── model.pdiparams.info   # additional parameter information, usually can be ignored
└── model.pdmodel          # static-graph model file

! python PaddleSeg/export.py \
    --config PaddleSeg/configs/quick_start/bisenet_optic_disc_512x512_1k.yml \
    --model_path output/iter_1000/model.pdparams
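Before moving on to the ONNX conversion, it is worth confirming that the export produced the four files listed under "Result files". The check below is a small sketch using only the standard library; the file names come from that listing, and the helper name `missing_export_files` is hypothetical.

```python
from pathlib import Path

# File names taken from the "Result files" listing in this section.
EXPECTED_FILES = (
    "deploy.yaml",
    "model.pdiparams",
    "model.pdiparams.info",
    "model.pdmodel",
)


def missing_export_files(export_dir):
    """Return the expected export files that are absent from export_dir."""
    root = Path(export_dir)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]


missing = missing_export_files("output")
print("export looks complete" if not missing else f"missing files: {missing}")
```

If model.pdmodel or model.pdiparams is reported missing, the paddle2onnx command in the next step will fail, so it is cheaper to catch that here.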

6、 Use paddle2onnx to convert the Paddle model to ONNX format

! paddle2onnx --model_dir ./output/ \
    --model_filename model.pdmodel \
    --params_filename model.pdiparams \
    --save_file segonnx/model.onnx \
    --opset_version 11

7、ONNX model validation

import onnx

# onnx_file = save_path + '.onnx'

# onnx_file ='onnx-model/detectionmodel.onnx'

save_path = 'segonnx/'

onnx_file = save_path + 'model.onnx'

onnx_model = onnx.load(onnx_file)

onnx.checker.check_model(onnx_model)

print('The model is checked!')

Of course, you can also check the detection model exported above in the same way; it passes without problems.

import onnx

# onnx_file = save_path + '.onnx'

# onnx_file ='onnx-model/detectionmodel.onnx'

save_path = 'inference_model/pedestrian_yolov3_darknet_onnx/'

onnx_file = save_path + 'inference.onnx'

onnx_model = onnx.load(onnx_file)

onnx.checker.check_model(onnx_model)

print('The model is checked!')

PP-OCR model ONNX export

Introduction to PP-OCR

PP-OCR is the OCR model library released by Paddle, with high-quality pretrained models and accurate recognition. To make PP-OCR easier to deploy on different hardware platforms, this tutorial uses Paddle2ONNX to export the PP-OCR detection, direction classification, and text recognition models to ONNX.

The tutorial consists of the following steps: set up the environment, export the PP-OCR detection, direction classification, and text recognition inference models separately, and then convert the inference models to ONNX with Paddle2ONNX.

1、 Environment setup

Download the PaddleOCR code

!git clone https://gitee.com/PaddlePaddle/PaddleOCR.git

The commands below may look a little odd, since normally installing requirements.txt alone is enough. In my case that download would not finish because the versions did not match, so opencv-contrib-python is installed separately with a pinned version. If the install still fails, open requirements.txt in the PaddleOCR directory, delete the opencv-contrib-python entry, save and exit, and then run the third command.

%cd /home/work/PaddleOCR

!pip install -r requirements.txt

!pip install opencv-contrib-python==4.2.0.32

Initialize PaddleOCR and finish setting up the environment

!cd /home/work/PaddleOCR && python setup.py install

2、 Export the PP-OCR inference models

Export the detection (det), direction classification (cls), and text recognition (rec) models by running export_ocr.sh with the PaddleOCR path and the directory where the exported models should be saved. The contents of export_ocr.sh are given below: create a txt file on your computer, rename it with the .sh extension, paste the following into it, and upload it.

ppocr_path=$1

model_save_dir=$2

wget -P ~/ch_lite/ https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_mobile_v2.0_cls_train.tar && tar xf ~/ch_lite/ch_ppocr_mobile_v2.0_cls_train.tar -C ~/ch_lite/

wget -P ~/ch_lite/ https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_det_train.tar && tar xf ~/ch_lite/ch_ppocr_server_v2.0_det_train.tar -C ~/ch_lite/

wget -P ~/ch_lite/ https://paddleocr.bj.bcebos.com/dygraph_v2.0/ch/ch_ppocr_server_v2.0_rec_train.tar && tar xf ~/ch_lite/ch_ppocr_server_v2.0_rec_train.tar -C ~/ch_lite/

cd $ppocr_path

python3 tools/export_model.py -c configs/rec/ch_ppocr_v2.0/rec_chinese_common_train_v2.0.yml -o Global.pretrained_model=$HOME/ch_lite/ch_ppocr_server_v2.0_rec_train/best_accuracy Global.load_static_weights=False Global.save_inference_dir=$model_save_dir/rec_crnn/

python3 tools/export_model.py -c configs/det/ch_ppocr_v2.0/ch_det_res18_db_v2.0.yml -o Global.pretrained_model=$HOME/ch_lite/ch_ppocr_server_v2.0_det_train/best_accuracy Global.load_static_weights=False Global.save_inference_dir=$model_save_dir/det_db/

python3 tools/export_model.py -c configs/cls/cls_mv3.yml -o Global.pretrained_model=$HOME/ch_lite/ch_ppocr_mobile_v2.0_cls_train/best_accuracy Global.load_static_weights=False Global.save_inference_dir=$model_save_dir/cls/

rm -rf ~/ch_lite

Then switch to the correct directory and run the script

%cd /home/work/

!sh export_ocr.sh PaddleOCR/ /home/work/inference_model/paddle/

3、 Convert the inference models to ONNX

Use Paddle2ONNX to convert the inference models to ONNX format.

!paddle2onnx -m /home/work/inference_model/paddle/cls/ --model_filename inference.pdmodel --params_filename inference.pdiparams -s /home/work/inference_model/onnx/cls/model.onnx --opset_version 11

!paddle2onnx -m /home/work/inference_model/paddle/rec_crnn/ --model_filename inference.pdmodel --params_filename inference.pdiparams -s /home/work/inference_model/onnx/rec_crnn/model.onnx --opset_version 11
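The two commands above differ only in the model name, so they can be generated in a loop. The sketch below builds the argument lists without running them, using the same flags and file names as the commands above. Note that the original commands convert only cls and rec_crnn; adding det_db to the list is an assumption based on the directory that export_ocr.sh writes, and the helper name is hypothetical.

```python
from pathlib import PurePosixPath


def conversion_commands(paddle_root, onnx_root, model_names, opset_version=11):
    """Build one paddle2onnx argument list per exported inference model."""
    commands = []
    for name in model_names:
        commands.append([
            "paddle2onnx",
            "-m", str(PurePosixPath(paddle_root) / name),
            "--model_filename", "inference.pdmodel",
            "--params_filename", "inference.pdiparams",
            "-s", str(PurePosixPath(onnx_root) / name / "model.onnx"),
            "--opset_version", str(opset_version),
        ])
    return commands


commands = conversion_commands(
    "/home/work/inference_model/paddle",
    "/home/work/inference_model/onnx",
    ["cls", "rec_crnn", "det_db"],  # det_db is an assumption, see the note above
)
for cmd in commands:
    print(" ".join(cmd))
```

Each list can be handed to `subprocess.run(cmd, check=True)` once paddle2onnx is installed; whether the detection model converts cleanly with this opset has not been verified here.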

You have now learned how to convert models to ONNX and can convert the models you need across different frameworks. For OP conversion, refer to the official documentation.

边栏推荐

- 1.1 history of Statistics

- Online shrimp music will be closed in January next year. Netizens call No

- Zhanrui tankbang | jointly build, cooperate and win-win zhanrui core ecology

- 【雕爷学编程】Arduino动手做(105)---压电陶瓷振动模块

- 卸载Google Drive 硬盘-必须退出程序才能卸载

- LayoutManager布局管理器:FlowLayout、BorderLayout、GridLayout、GridBagLayout、CardLayout、BoxLayout

- MySQL的information_schema数据库

- Tf/pytorch/cafe-cv/nlp/ audio - practical demonstration of full ecosystem CPU deployment - Intel openvino tool suite course summary (Part 2)

- ansys命令

- 冲击继电器JC-7/11/DC110V

猜你喜欢

AWT常用组件、FileDialog文件选择框

![BUU-Crypto-[GUET-CTF2019]BabyRSA](/img/87/157066155e8d3a93e30a68eaf1781b.jpg)

BUU-Crypto-[GUET-CTF2019]BabyRSA

![[wechat applet] template and configuration (wxml, wxss, global and page configuration, network data request)](/img/78/63ab1a8bb1b6e256cc740f3febe711.jpg)

[wechat applet] template and configuration (wxml, wxss, global and page configuration, network data request)

The end of the Internet is rural revitalization

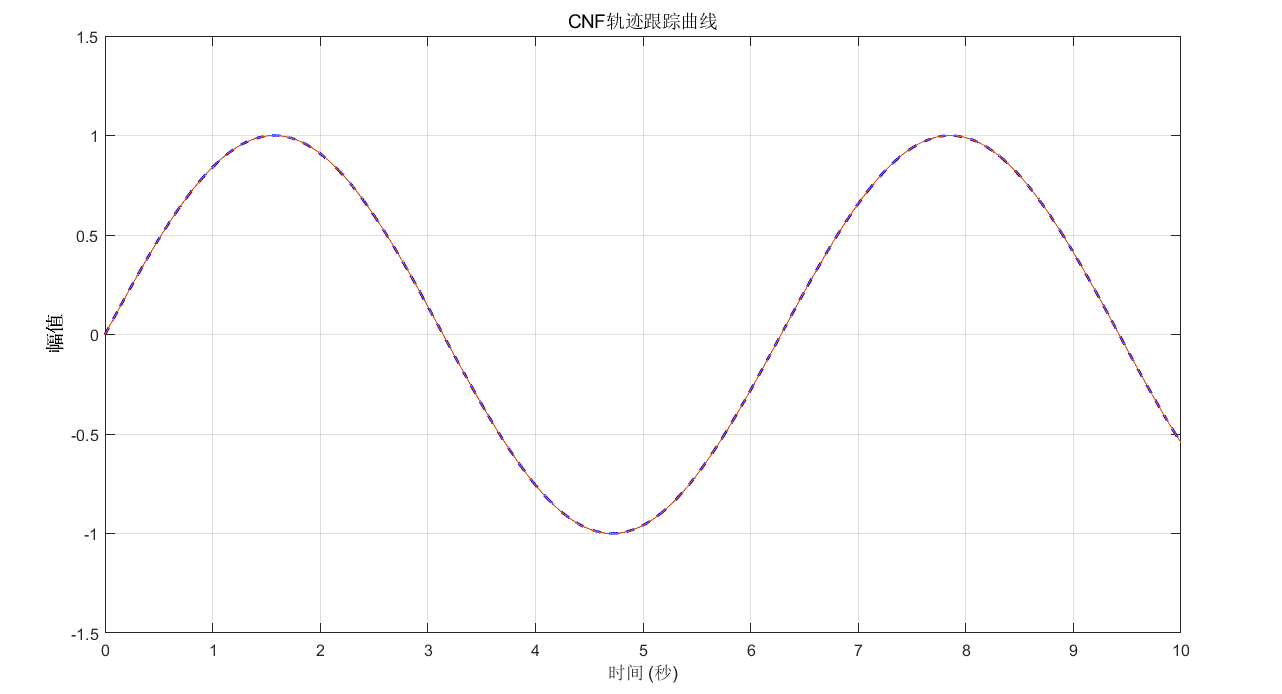

复合非线性反馈控制(二)

What is MQ?

Simulink and Arduino serial port communication

Steady! Huawei micro certification Huawei cloud computing service practice is stable!

体验碎周报第 102 期(2022.7.4)

VB. Net simple processing pictures, black and white (class library - 7)

随机推荐

Build an Internet of things infrared temperature measuring punch in machine with esp32 / rush to work after the Spring Festival? Baa, no matter how hard you work, you must take your temperature first

HMS v1.0 appointment. PHP editid parameter SQL injection vulnerability (cve-2022-25491)

Steady! Huawei micro certification Huawei cloud computing service practice is stable!

input显示当前选择的图片

js获取对象中嵌套的属性值

The difference between PX EM rem

Kubernets first meeting

How to get the parent node of all nodes in El tree

Thinkphp6.0 middleware with limited access frequency think throttle

Flask

BUU-Real-[PHP]XXE

HMS v1.0 appointment.php editid参数 SQL注入漏洞(CVE-2022-25491)

C # character similarity comparison general class

Input displays the currently selected picture

2022 a special equipment related management (elevator) examination questions simulation examination platform operation

Review | categories and mechanisms of action of covid-19 neutralizing antibodies and small molecule drugs

509. 斐波那契数、爬楼梯所有路径、爬楼梯最小花费

APScheduler如何设置任务不并发(即第一个任务执行完再执行下一个)?

How to configure static IP for Kali virtual machine

接地继电器DD-1/60