
Raki's paper reading notes: Learning Fast, Learning Slow: A General Continual Learning Method

2022-06-11 19:21:00 【Sleepy Raki】

Abstract & Introduction & Related Work

- Research task

Continual learning

- Existing methods and related work

- CLS theory holds that efficient learning requires two complementary learning systems: the hippocampus exhibits short-term adaptation and rapid learning of episodic information, which is then gradually consolidated into the neocortex for slow learning of structured information

- Many existing methods focus only on modeling the prefrontal cortex directly and have no fast-learning network, even though the fast-learning network plays a key role in enabling efficient CL in the brain

- Challenges

The main challenge in achieving CL in deep neural networks (DNNs) is that continually acquiring incremental information from non-stationary data distributions usually leads to catastrophic forgetting: when the model learns a new task, its performance on previously learned tasks drops sharply.

- Innovative ideas

The method does not use task boundaries or make any assumptions about the data distribution, which makes it versatile and suitable for "general continual learning".

- Experimental conclusions

Based on the theory of complementary learning systems in the brain, we propose a novel dual-memory experience replay method

In addition to a small episodic memory, our method builds long-term and short-term semantic memories that mimic the fast and slow adaptation of information. Because the network weights encode the learned representations of the tasks (Krishnan et al., 2019), the semantic memories are maintained as exponential moving averages of the working model's weights, consolidating task information over different time windows and at different frequencies. The semantic memories interact with the episodic memory to extract consolidated replay activation patterns, and a consistency loss is enforced on updates to the working model so that it acquires new knowledge while keeping its decision boundary aligned with the semantic memories. This maintains a balance between the plasticity and stability of the model for effective knowledge consolidation

METHOD

COMPLEMENTARY LEARNING SYSTEM THEORY

CLS theory holds that effective lifelong learning in the brain requires two complementary learning systems. The hippocampus rapidly encodes new information as short-term memory, which is then used to transfer and consolidate knowledge into the neocortex; the neocortex gradually acquires structured knowledge representations as long-term memory through experience replay. The interplay between hippocampal and neocortical function is crucial for simultaneously learning efficient representations (for better generalization) and the specifics of instance-based episodic memory

COMPLEMENTARY LEARNING SYSTEM BASED EXPERIENCE REPLAY

Inspired by CLS theory, we propose a dual-memory experience replay method, CLS-ER, which aims to mimic the interplay between fast and slow learning mechanisms in order to enable effective CL in DNNs. Our method maintains short-term and long-term semantic memories of the encountered tasks, which interact with an episodic memory to replay the associated neural activities. The working model is updated so that it acquires new knowledge while its decision boundary stays aligned with the semantic memories, which consolidates structured knowledge across tasks. Figure 1 highlights the parallels between CLS theory and our method

Semantic Memories

Because the knowledge of the learned tasks is encoded in the weights of a DNN, we aim to form our semantic memories by accumulating the knowledge encoded in the model's weights as it learns different tasks sequentially

Mean Teacher provides an effective way to aggregate model weights. It is a knowledge distillation approach that uses the exponential moving average (EMA) of the student's weights during training as the teacher for semi-supervised learning. It can also be viewed as a self-ensemble of intermediate model states, which leads to better internal representations. We build our semantic memories with the Mean Teacher approach because it is a computationally and memory-efficient way to accumulate task knowledge

Because CL involves learning tasks sequentially, the model weights at each training step can be viewed as a student model specialized for a particular task. Averaging the weights during training can therefore be viewed as forming an ensemble of task-specific student models, which effectively aggregates information across tasks and yields smoother decision boundaries. CLS-ER builds long-term (stable model) and short-term (plastic model) semantic memories by maintaining two EMA-weighted models over the working model's weights. The stable model is updated less frequently and has a larger window size, so it retains more information from earlier tasks; the plastic model is updated more frequently and has a smaller window size, so it adapts more quickly to information from new tasks (Figure 2). Section D further illustrates the benefits of using two semantic memories instead of one
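The view of the EMA as an ensemble of snapshots can be made explicit by unrolling the update. This is only a sketch under an assumed notation that does not appear in the notes: $\theta_W^{(t)}$ denotes the working model's weights at step $t$, $\theta_{EMA}^{(t)}$ stands for either semantic memory, $\alpha \in [0, 1)$ is the decay, and the memory is assumed to be updated at every step.

```latex
\theta_{EMA}^{(t)} = \alpha\,\theta_{EMA}^{(t-1)} + (1-\alpha)\,\theta_W^{(t)}
\quad\Longrightarrow\quad
\theta_{EMA}^{(t)} = \alpha^{t}\,\theta_{EMA}^{(0)} + (1-\alpha)\sum_{k=0}^{t-1}\alpha^{k}\,\theta_W^{(t-k)}
```

The snapshot from $k$ updates ago receives weight $(1-\alpha)\alpha^{k}$, so the averaging window is roughly $1/(1-\alpha)$ updates. A decay closer to 1 gives a longer window, and updating less often stretches the same window over more training steps.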

Episodic Memory

Replaying samples from previous tasks stored in a small episodic memory is a common approach in CL and has been shown to be effective in reducing catastrophic forgetting. Because we aim to position CLS-ER as a versatile general incremental learning method, we do not use task boundaries or make any strong assumptions about the distribution of tasks or samples. To maintain a fixed-size episodic memory buffer, we therefore use reservoir sampling (Vitter, 1985), which gives every sample in the stream an equal probability of being represented in the buffer and replaces existing memory samples at random (Algorithm 2). This is a global distribution-matching strategy: at any point in time, the distribution of samples in the buffer approximately matches the distribution of all samples seen so far
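Reservoir sampling as described above can be sketched in a few lines of Python. This is the textbook version of the algorithm, not the paper's Algorithm 2 verbatim; the function name `reservoir_add`, the capacity of 50, and the integer stream are illustrative assumptions.

```python
import random


def reservoir_add(buffer, capacity, num_seen, sample, rng=random):
    """Offer one stream sample to a fixed-size buffer (reservoir sampling).

    num_seen is the number of samples offered before this one.
    Returns the updated number of samples seen.
    """
    if len(buffer) < capacity:
        # Buffer not full yet: always keep the sample.
        buffer.append(sample)
    else:
        # Draw a slot uniformly from 0..num_seen (inclusive). The new sample
        # is kept with probability capacity / (num_seen + 1) and, if kept,
        # overwrites a uniformly chosen existing entry.
        j = rng.randint(0, num_seen)
        if j < capacity:
            buffer[j] = sample
    return num_seen + 1


# Hypothetical stream of 1000 integer "samples" and a buffer of 50 slots.
buffer, seen = [], 0
rng = random.Random(0)
for x in range(1000):
    seen = reservoir_add(buffer, 50, seen, x, rng)
```

No task identity is needed anywhere in the update, which is what makes the buffer usable when task boundaries are unknown.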

Consolidation of Information

The key challenge in CL is consolidating new information with previously acquired information, which requires an effective balance between the stability and plasticity of the model. In addition, the abrupt change in decision boundaries as new tasks are learned makes consolidating information across tasks more challenging. CLS-ER tackles these challenges with a novel dual-memory experience replay mechanism. The long-term and short-term semantic memories interact with the episodic memory to extract consolidated activations for the memory samples, and these activations are then used to constrain the updates of the working model so that it acquires new knowledge while its decision boundary stays aligned with the semantic memories. This prevents abrupt changes in parameter space when new tasks are learned. Keeping the working model's decision boundary aligned with the semantic memories serves two purposes:

- It helps retain and consolidate information

- It leads to a smoother adaptation of the decision boundary

At each training step, the working model receives a training batch $X_b$ from the data stream and retrieves a random batch of exemplars $X_m$ from the episodic memory. It then retrieves the optimal semantic information, that is, the structured knowledge encoded in the semantic memories, which reflects the consolidated feature space and the decision boundaries adapted to previous tasks. By design, the plastic model performs better on recent tasks, while the stable model prioritizes retaining information about older tasks. We would therefore prefer to use the logits of the stable model, $Z_S$, for older exemplars and the logits of the plastic model, $Z_P$, for recent exemplars. Because CLS-ER is a general incremental learning method, we do not use hard thresholds or task information; instead, we opt for a simple task-agnostic approach that uses the performance of the semantic memories on each exemplar as the selection criterion, which works well empirically. For each exemplar, the replay logits $Z$ are taken from whichever model has the higher softmax score for the ground-truth class (Algorithm 1, lines 5-6)
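The per-exemplar selection rule can be illustrated with a small pure-Python sketch. It assumes that the score being compared is the softmax probability of the ground-truth class and that ties go to the plastic model; the helper names `softmax` and `select_replay_logits` and the toy logits are made up for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def select_replay_logits(z_stable, z_plastic, labels):
    """For each exemplar, keep the logits of the semantic memory that is
    more confident on the ground-truth class (ties go to the plastic model,
    an arbitrary choice made for this sketch)."""
    selected = []
    for z_s, z_p, y in zip(z_stable, z_plastic, labels):
        p_s = softmax(z_s)[y]
        p_p = softmax(z_p)[y]
        selected.append(z_s if p_s > p_p else z_p)
    return selected


# Toy batch of two exemplars with three classes each.
z_s = [[2.0, 0.1, 0.1], [0.2, 0.3, 0.1]]  # stable model logits
z_p = [[0.5, 0.4, 0.3], [0.1, 3.0, 0.2]]  # plastic model logits
labels = [0, 1]
z = select_replay_logits(z_s, z_p, labels)
```

Here the stable model is more confident on the first exemplar and the plastic model on the second, so `z` mixes logits from both memories without any explicit notion of which task an exemplar came from.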

The replay logits selected from the semantic memories are then used to enforce a consistency loss on the working model so that it does not deviate from what has already been learned. The working model is thus updated with a combination of the cross-entropy loss on the union of the data stream and episodic memory samples, $X$, and the consistency loss on the exemplars $X_m$.
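The equation itself did not survive in these notes. Based only on the sentence above, the objective plausibly has the following form. The symbols are assumptions: $f(\cdot\,;\theta_W)$ is the working model producing logits, $\sigma$ is the softmax, $Y$ are the labels for $X$, $\lambda$ is a weighting factor, and the consistency term is assumed to be a mean squared error between logits.

```latex
\mathcal{L} = \mathcal{L}_{CE}\big(\sigma(f(X;\theta_W)),\, Y\big)
            + \lambda\,\mathcal{L}_{MSE}\big(f(X_m;\theta_W),\, Z\big),
\qquad X = X_b \cup X_m
```

The first term drives learning on both new and replayed samples, while the second pulls the working model's outputs on the exemplars toward the selected semantic-memory logits $Z$.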

After the working model's weights are updated, the plastic and stable memories are updated stochastically with rates $r_P$ and $r_S$. The plastic memory has a higher update rate than the stable memory and is therefore updated more often. Using stochastic rather than deterministic updates is more biologically plausible; it also reduces the overlap between the working model snapshots that are averaged, which makes the semantic memories more diverse. Each semantic memory is updated as an exponential moving average of the working model's weights (Tarvainen & Valpola, 2017), with decay parameters $\alpha_P$ and $\alpha_S$, respectively
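The stochastic EMA update can be sketched as follows. This is a minimal illustration, not the authors' implementation: weights are represented as a plain dict of floats, "updated with rate r" is interpreted as "updated when a uniform random draw falls below r", and the rates and decays in the demo are arbitrary values chosen only to make the effect visible.

```python
import random


def ema_update(memory, working, alpha):
    """In place: memory <- alpha * memory + (1 - alpha) * working."""
    for name, w in working.items():
        memory[name] = alpha * memory[name] + (1.0 - alpha) * w


def update_semantic_memories(working, plastic, stable,
                             r_p, r_s, alpha_p, alpha_s, rng=random):
    """Update each semantic memory with its own probability and decay."""
    if rng.random() < r_p:
        ema_update(plastic, working, alpha_p)
    if rng.random() < r_s:
        ema_update(stable, working, alpha_s)


# Demo: the working weight has just jumped from 0.0 to 1.0 (a "new task").
rng = random.Random(0)
working = {"w": 1.0}
plastic = {"w": 0.0}
stable = {"w": 0.0}
for _ in range(200):
    update_semantic_memories(working, plastic, stable,
                             r_p=0.5, r_s=0.1,
                             alpha_p=0.9, alpha_s=0.99, rng=rng)
```

After the loop, `plastic["w"]` has almost caught up with the working weight, while `stable["w"]` has moved only part of the way, which is the fast versus slow adaptation the two memories are meant to provide.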

EXPERIMENTAL SETUP

EMPIRICAL EVALUATION

MODEL CHARACTERISTICS

CONCLUSION

Drawing on the theory of complementary learning systems in the brain, we propose a novel dual-memory experience replay method. Our method maintains long-term and short-term semantic memories, which are used to effectively replay the neural activities of the episodic memory and to align the decision boundary of the working model for efficient knowledge consolidation. We demonstrate the effectiveness of our approach on benchmark datasets and on more challenging general incremental learning scenarios, achieving a new state of the art in the vast majority of continual learning settings. We further show that CLS-ER converges to flatter minima, mitigates the bias toward recent tasks, and provides a well-calibrated, high-performance model. Our strong empirical results motivate further study of how to mimic the complementary learning systems in the brain more faithfully in order to enable optimal continual learning in DNNs

Remark

My strongest impression after reading it: this paper is very good at telling a story. In practice it is just a heavily modified version of LwF: the fixed model is replaced by one that changes with a small learning rate, and a memory buffer is added. What a flowery way to put it… but fine

边栏推荐

- Experience of remote office communication under epidemic situation | community essay solicitation

- collect. stream(). Use of the collect() method

- KMP! You deserve it!!! Run directly!

- Use canvas to add text watermark to the page

- The 2023 MBA (Part-time) of Beijing University of Posts and telecommunications has been launched

- Neural network and deep learning-2-simple example of machine learning pytorch

- MongoDB 什么兴起的?应用场景有哪些?

- mysql 联合索引和BTree

- Undefined reference to 'g2o:: vertexe3:: vertexe3()'

- leetcode:926. Flip the string to monotonically increasing [prefix and + analog analysis]

猜你喜欢

cf:E. Price Maximization【排序 + 取mod + 双指针+ 配对】

更换目标检测的backbone(以Faster RCNN为例)

![[image denoising] image denoising based on Markov random field with matlab code](/img/ef/d28b89a47723b43705fca07261c958.png)

[image denoising] image denoising based on Markov random field with matlab code

![leetcode:剑指 Offer 59 - II. 队列的最大值[deque + sortedlist]](/img/6b/f2e04cd1f3aaa9fe057c292301894a.png)

leetcode:剑指 Offer 59 - II. 队列的最大值[deque + sortedlist]

【Multisim仿真】利用运算放大器产生方波、三角波发生器

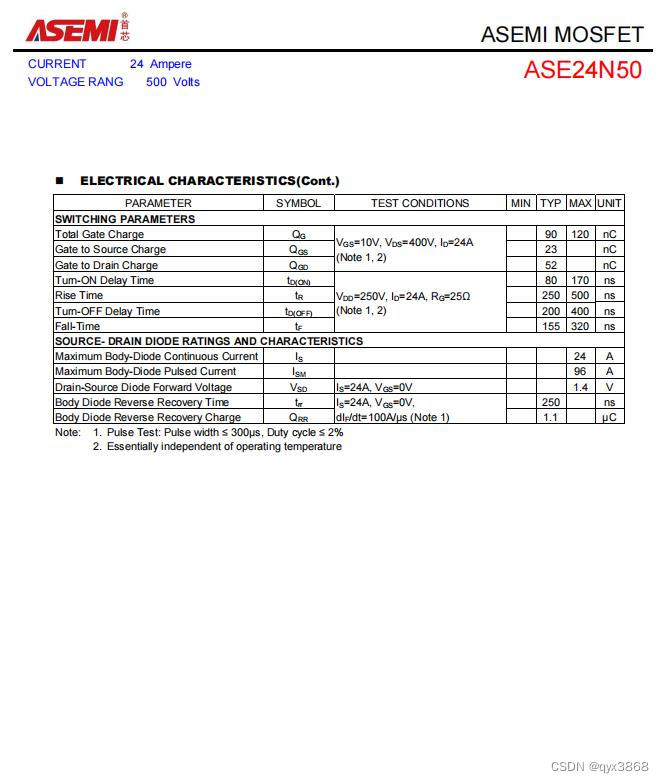

ASEMI的MOS管24N50参数,24N50封装,24N50尺寸

cf:D. Black and White Stripe【连续k个中最少的个数 + 滑动窗口】

![[image denoising] impulse noise image denoising based on absolute difference median filter, weighted median filter and improved weighted median filter with matlab code attached](/img/dc/6348cb17ca91afe39381a9b3eb54e6.png)

[image denoising] impulse noise image denoising based on absolute difference median filter, weighted median filter and improved weighted median filter with matlab code attached

Flash ckeditor rich text compiler can upload and echo images of articles and solve the problem of path errors

SISO decoder for repetition (supplementary Chapter 4)

随机推荐

7-3 组合问题(*)

Use Mysql to determine the day of the week

Pyramid test principle: 8 tips for writing unit tests

疫情下远程办公沟通心得|社区征文

对‘g2o::VertexSE3::VertexSE3()’未定义的引用

Hyper parameter optimization of deep neural networks using Bayesian Optimization

An adaptive chat site - anonymous online chat room PHP source code

Internet_ Business Analysis Overview

About my experience of "binary deployment kubernetes cluster"

PIL pilot image processing [1] - installation and creation

Visual slam lecture notes-10-2

E-commerce (njupt)

【视频去噪】基于SALT实现视频去噪附Matlab代码

Leetcode: sword finger offer 59 - ii Maximum value of queue [deque + sortedlist]

MongoDB 什么兴起的?应用场景有哪些?

Experience of remote office communication under epidemic situation | community essay solicitation

Pymysql uses cursor operation database method to encapsulate!!!

NR LDPC punched

视觉SLAM十四讲笔记-10-1

KMP! You deserve it!!! Run directly!