[pytorch] Kaggle image classification competition: ArcFace + bounding box code study

2022-06-13 02:11:00 【liyihao76】

The competition data contains images of more than 15,000 unique individual marine mammals from 30 different species (whales and dolphins), collected by 28 different research institutions. The task is to classify the individual ID of each image in the test set.

Kaggle competition details and dataset download: Happywhale - Whale and Dolphin Identification

Code link

ArcFace is one of the best-performing approaches in this competition. Code:

Background knowledge

[Theory] Metric learning

Metric learning is a method often used in machine learning. From a series of observations it constructs a metric function that learns the distance or difference between data points and so describes the similarity between samples. For highly similar observations the metric function returns a small distance; for observations that differ widely it returns a large distance. When the sample size is small, metric learning shows significant advantages in the accuracy and efficiency of classification tasks.

However, if the classification task is very complex, with many categories and few samples per category, deep metric learning (DML), which combines deep learning with metric learning, is the real king. Deep metric learning is also referred to as distance metric learning. Compared with classical metric learning, deep metric learning can apply a nonlinear mapping to the input features.

By training a CNN-based nonlinear feature extraction module (an encoder), deep metric learning maps the extracted image features (embeddings) so that similar samples end up close to each other, and then uses distance measures such as Euclidean distance or cosine distance to tell different image features apart.
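As a rough illustration of the two distance measures mentioned above, the sketch below compares hand-written embeddings in plain Python. The three-dimensional vectors and their names are made up for the example; real embeddings come from the trained encoder and have far more dimensions.

```python
import math


def euclidean_distance(a, b):
    """Straight-line (L2) distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings: two photos of individual A, one photo of individual B.
whale_a_photo1 = [0.9, 0.1, 0.0]
whale_a_photo2 = [0.8, 0.2, 0.1]
whale_b_photo1 = [0.0, 0.2, 0.9]

same_dist = euclidean_distance(whale_a_photo1, whale_a_photo2)
diff_dist = euclidean_distance(whale_a_photo1, whale_b_photo1)
same_cos = cosine_similarity(whale_a_photo1, whale_a_photo2)
diff_cos = cosine_similarity(whale_a_photo1, whale_b_photo1)

print(f"same individual:      distance={same_dist:.3f}, cosine={same_cos:.3f}")
print(f"different individual: distance={diff_dist:.3f}, cosine={diff_cos:.3f}")
```

A well-trained encoder should behave like these toy vectors: photos of the same individual give a small distance and a cosine similarity near 1, while photos of different individuals give a large distance and a low cosine similarity.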

Deep metric learning performs very well on extreme classification tasks in computer vision (many categories, too few samples per category). It is widely used in face recognition, pedestrian re-identification, image retrieval, object tracking, feature matching, and similar scenarios.

Reference links:

- Metric learning and using pytorch-metric-learning

- PyTorch deep metric learning's unbeatable buff: nine modules, call at will

- Introduction to metric learning / contrastive learning: paper reading notes on Deep Metric Learning: A Survey

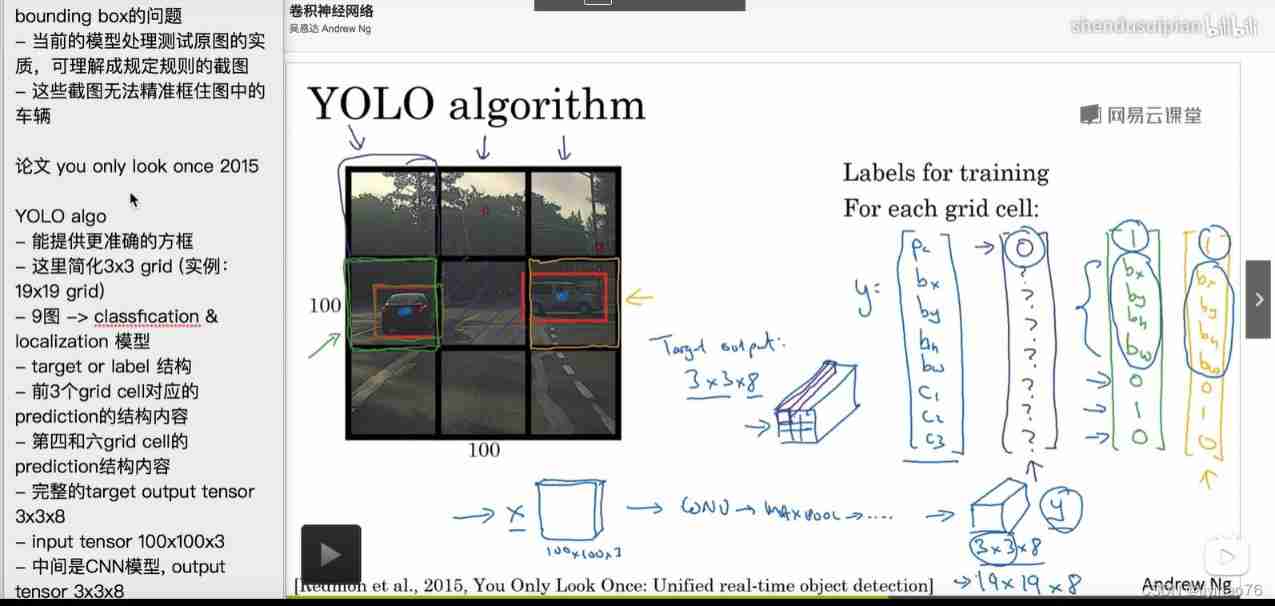

[Theory] Bounding boxes and object detection

In image classification, we assume that there is only one main object in the image and only care about identifying its category. Often, however, an image contains several objects of interest, and we want to know not only their categories but also their exact positions in the image. In computer vision, this kind of task is called object detection (or object recognition).

In object detection, we usually use a bounding box to describe the spatial location of an object. The bounding box is a rectangle determined by the x and y coordinates of its top-left and bottom-right corners. Another common representation uses the (x, y) coordinates of the box center together with the width and height of the box.
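The two representations carry the same information, so converting between them is simple arithmetic. The following is a minimal sketch in plain Python; the function names and the sample coordinates are illustrative and are not taken from the competition code.

```python
def corners_to_center(box):
    """(x1, y1, x2, y2) corner format -> (cx, cy, width, height) center format."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1)


def center_to_corners(box):
    """(cx, cy, width, height) center format -> (x1, y1, x2, y2) corner format."""
    cx, cy, w, h = box
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


# Hypothetical box in pixel coordinates (top-left and bottom-right corners).
corner_box = (60.0, 45.0, 378.0, 516.0)
center_box = corners_to_center(corner_box)

print(center_box)                     # (219.0, 280.5, 318.0, 471.0)
print(center_to_corners(center_box))  # (60.0, 45.0, 378.0, 516.0)
```

YOLO-style label files typically use the center format (normalized by image size), while cropping an image needs the corner format, so both directions come up in practice.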

Reference link :

1. CNN: bounding box prediction 01 problem

2. CNN: bounding box prediction - specify bounding box

3. CNN: bounding box prediction - YOLO algo

4. CNN: 3.9 YOLO Algorithm part1

5. CNN: 3.9 YOLO Algorithm part2

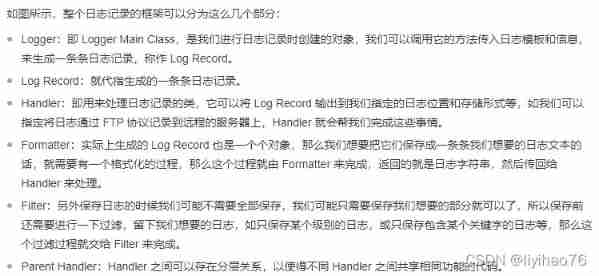

[python] logging module

So what counts as a standard logging workflow in Python? Many people use print statements to output runtime information and watch it on the console, or redirect the output stream to a file at run time. This is not good practice. Python has a standard logging module for exactly this purpose. It makes logging easier, supports log levels, and can record additional information such as the time and the module that emitted the message.

Let's take a look at the overall framework of the logging process .
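A minimal sketch of such a logging setup, using only the standard library. The logger name is hypothetical, and an in-memory stream stands in for a log file so that the example is self-contained.

```python
import io
import logging

log_stream = io.StringIO()  # stands in for a log file in this sketch
handler = logging.StreamHandler(log_stream)
handler.setFormatter(
    logging.Formatter("%(asctime)s %(name)s %(levelname)s: %(message)s")
)

logger = logging.getLogger("happywhale.demo")  # hypothetical logger name
logger.setLevel(logging.INFO)   # minimum level that will be recorded
logger.addHandler(handler)
logger.propagate = False        # keep the demo records out of the root logger

logger.debug("batch tensor shapes checked")        # below INFO, so it is dropped
logger.info("epoch %d finished", 1)
logger.warning("validation loss did not improve")

print(log_stream.getvalue())
```

Compared with print, each record carries a timestamp, the logger name, and a level, and the level threshold decides what gets written without touching the call sites.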

Reference link :

- It's time to abandon print and start experiencing how powerful logging is!

- Python log processing (logging module)

Data preprocessing

First, according to the statistics from our earlier post, [pytorch] Kaggle large image dataset: data analysis + visualization:

The image sizes vary greatly, and the position of the whale or dolphin we want to detect also varies widely from image to image.

Things to know before starting image preprocessing

Here you can see some extreme cases

Therefore, the first step in image processing is to locate the dolphin or whale in the picture. For this, we use bounding boxes generated by YOLOv5.

Happywhale: BoundingBox [YOLOv5]

In this code, we use YOLOv5 to generate bounding boxes. These guide the subsequent image cropping, so that dataset images of different sizes can be cropped consistently, which should ultimately give better classification results.

We use the Whale Fluke dataset (data from another Kaggle competition, on whale tail fluke localization) to train and test the bounding box model; it provides 1200 samples with bounding boxes. Afterwards, we use the model trained on Whale Fluke to predict boxes on our Whale and Dolphin dataset.

The bounding boxes in the Whale Fluke dataset are very large, whereas the Whale and Dolphin dataset has both small and large bounding boxes. To compensate, you can try changing the scale parameter in the hyp.yaml file. The default value is 0.5; you can try increasing it. You can also try enlarging the bbox, for example by 1.5x or 1.7x. This helps ensure that the crop does not cut off part of the whale or dolphin.

After determining the position of the bounding box, we crop the image to obtain the region we need for classification.
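The bbox enlargement mentioned above can be sketched as follows: scale the box about its center and clip it to the image so the crop stays valid. This is an illustration in plain Python, not code from the referenced notebook; the function name, the corner-format assumption, and the sample numbers are all hypothetical.

```python
def expand_box(box, factor, image_width, image_height):
    """Scale a corner-format box about its center and clip it to the image."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    half_w = (x2 - x1) * factor / 2
    half_h = (y2 - y1) * factor / 2
    return (
        max(0.0, cx - half_w),
        max(0.0, cy - half_h),
        min(float(image_width), cx + half_w),
        min(float(image_height), cy + half_h),
    )


# Hypothetical predicted box inside a 384x384 image, enlarged by 1.5x.
predicted = (100.0, 80.0, 300.0, 180.0)
expanded = expand_box(predicted, 1.5, image_width=384, image_height=384)
print(expanded)  # (50.0, 55.0, 350.0, 205.0)

# A box near the border is clipped instead of running outside the image.
clipped = expand_box((10.0, 10.0, 110.0, 110.0), 1.5, image_width=120, image_height=120)
print(clipped)   # (0.0, 0.0, 120.0, 120.0)
```

A larger factor keeps more of the animal at the cost of more background, so values such as 1.5 or 1.7 are a trade-off to tune rather than a fixed rule.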

Happywhale: Cropped Dataset [YOLOv5]

Finally, after resizing, we obtain a new image dataset.

Dataset: JPEG Happywhale 384x384

Code details

Configuration

!pip install timm

!pip install pytorch-metric-learning[with-hooks]

pytorch-metric-learning is an open-source metric learning library that integrates many commonly used metric learning methods. It is a very useful tool.

import os

import glob

import pandas as pd

import numpy as np

import logging

import timm

from tqdm.notebook import tqdm # Progress bar

import torch

import torch.nn as nn

import torch.optim as optim

from torch.utils.data import Dataset, DataLoader

from torchvision.io import ImageReadMode, read_image

from torchvision.transforms import Compose, Lambda, Normalize, AutoAugment, AutoAugmentPolicy

import pytorch_metric_learning

import pytorch_metric_learning.utils.logging_presets as LP

from pytorch_metric_learning.utils import common_functions

from pytorch_metric_learning import losses, miners, samplers, testers, trainers

from pytorch_metric_learning.utils.accuracy_calculator import AccuracyCalculator

from pytorch_metric_learning.utils.inference import InferenceModel

for handler in logging.root.handlers[:]:
    logging.root.removeHandler(handler)  # remove each preexisting handler object

logging.getLogger().setLevel(logging.INFO)  # get the root logger and set the minimum log level

logging.info("VERSION %s" % pytorch_metric_learning.__version__)  # print the library version

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

print(device) # cuda:0

print(torch.cuda.get_device_name(0)) # NVIDIA RTX A6000

Parameters

MODEL_NAME='tf_efficientnet_b4_ns'

N_CLASSES=15587 # Number of individuals

OUTPUT_SIZE = 1792

EMBEDDING_SIZE = 512

N_EPOCH=15

BATCH_SIZE=16

ACCUMULATION_STEPS = int(256 / BATCH_SIZE)

MODEL_LR = 1e-3

PCT_START=0.3

PATIENCE=5

N_WORKER=2

N_NEIGHBOURS = 750
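The post does not show how ACCUMULATION_STEPS is used. A likely reading, which is an assumption here, is gradient accumulation: 16 micro-batches of 16 images are processed before each optimizer step, giving an effective batch size of 256. The plain-Python sketch below uses a toy one-parameter model (not the real network) to show that averaging the micro-batch gradients reproduces the large-batch gradient.

```python
BATCH_SIZE = 16
ACCUMULATION_STEPS = int(256 / BATCH_SIZE)          # 16 micro-batches per update
EFFECTIVE_BATCH = BATCH_SIZE * ACCUMULATION_STEPS   # 256 samples per update


def mse_grad(w, xs, ys):
    """Gradient of mean((w * x - y) ** 2) with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


# Toy data for the model y = w * x (purely illustrative).
xs = [0.01 * i for i in range(EFFECTIVE_BATCH)]
ys = [3.0 * x for x in xs]
w = 0.0

# Gradient from one large batch of 256 samples.
full_grad = mse_grad(w, xs, ys)

# Same gradient built from 16 micro-batches, each scaled by 1 / ACCUMULATION_STEPS.
accumulated = 0.0
for step in range(ACCUMULATION_STEPS):
    lo, hi = step * BATCH_SIZE, (step + 1) * BATCH_SIZE
    accumulated += mse_grad(w, xs[lo:hi], ys[lo:hi]) / ACCUMULATION_STEPS

print(EFFECTIVE_BATCH, full_grad, accumulated)
```

In a PyTorch training loop the same idea usually appears as dividing the loss by the number of accumulation steps, calling backward() on every micro-batch, and calling the optimizer step only every ACCUMULATION_STEPS iterations.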

Read CSV data

df = pd.read_csv('./happy-whale-and-dolphin/train.csv')

df.head()

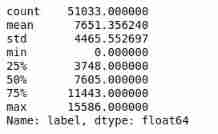

df['label'] = df.groupby('individual_id').ngroup()

df['label'].describe()

This converts each individual_id into an integer label.

df.groupby

groupby splits the original DataFrame into several sub-DataFrames according to the groupby field (here individual_id); there are as many sub-DataFrames as there are groups. See: Pandas tutorial | Super easy-to-use Groupby usage details.

GroupBy.ngroup(self, ascending: bool = True) returns the unique number of each group.
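To make the numbering concrete, the sketch below reproduces in plain Python what groupby('individual_id').ngroup() computes, assuming the default sort=True behaviour of groupby, where groups are numbered in sorted key order starting from 0. The ID strings are made up for the example.

```python
# Hypothetical individual IDs, one per image row.
individual_ids = ["whale_c", "whale_a", "whale_c", "whale_b"]

# Sorted unique IDs are numbered 0, 1, 2, ... in order.
id_to_label = {ind_id: n for n, ind_id in enumerate(sorted(set(individual_ids)))}

# Every row receives the number of the group it belongs to.
labels = [id_to_label[ind_id] for ind_id in individual_ids]

print(id_to_label)  # {'whale_a': 0, 'whale_b': 1, 'whale_c': 2}
print(labels)       # [2, 0, 2, 1]
```

Rows that share an individual_id share a label, and the labels run from 0 to the number of distinct individuals minus 1, which is the form a classification head with N_CLASSES outputs expects.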

Data summary df.describe()

df.describe() returns a statistics table covering all numeric columns. Each row is one statistic: count, mean, standard deviation, minimum, maximum, quartiles, and so on. It is very useful for a first look at the data. For datetime columns the statistics are time-related, such as the start and end times. Called on a single column, as with df['label'] here, it returns those statistics for that column.

Split the dataset

Training set and validation set
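For intuition about what those rows contain, the following sketch computes the same statistics for a small made-up list using only the standard library. It assumes describe() reports the sample standard deviation and linearly interpolated quartiles, which matches the "inclusive" method of statistics.quantiles.

```python
import statistics

# Hypothetical numeric column.
values = [0, 1, 1, 2, 3, 5, 8, 13]

q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
summary = {
    "count": len(values),
    "mean": statistics.mean(values),
    "std": statistics.stdev(values),  # sample standard deviation (divides by n - 1)
    "min": min(values),
    "25%": q1,
    "50%": median,
    "75%": q3,
    "max": max(values),
}

for name, value in summary.items():
    print(f"{name:>5}: {value}")
```

For the label column, count is the number of images and max is the number of distinct individuals minus 1, which makes describe() a quick sanity check on the label encoding.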

valid_proportion = 0.1

valid_df = df.sample(frac=valid_proportion, replace=False, random_state=1).copy()

train_df = df[~df['image'].isin(valid_df['image'])].copy()

print(train_df.shape) # (45930, 4)

print(valid_df.shape) # (5103, 4)

Reset the index on both DataFrames, since we want to use it for KNN lookups later:

train_df.reset_index(drop=True, inplace=True)

valid_df.reset_index(drop=True, inplace=True)
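The split logic above (sample a fixed fraction without replacement, then keep every row whose image name was not sampled) can be sketched without pandas as follows. The rows are hypothetical, and the random draw will not match the one pandas makes with random_state=1; only the structure of the split is the same.

```python
import random

# Hypothetical rows standing in for the DataFrame.
rows = [{"image": f"img_{i:03d}.jpg", "label": i % 7} for i in range(50)]

valid_proportion = 0.1
rng = random.Random(1)  # fixed seed so the split is reproducible
valid_rows = rng.sample(rows, k=round(len(rows) * valid_proportion))

# Everything not drawn for validation goes to training, matched by image name.
valid_images = {row["image"] for row in valid_rows}
train_rows = [row for row in rows if row["image"] not in valid_images]

print(len(train_rows), len(valid_rows))  # 45 5
```

Matching by image name relies on the names being unique. The split is also purely random, so an individual with very few photos may appear only in the validation part.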

Read image data

Create a dataset class for loading images.

class HappyWhaleDataset(Dataset):
    def __init__(
        self,
        df: pd.DataFrame,
        image_dir: str,
        return_labels=True,
    ):
        self.df = df
        self.images = self.df["image"]
        self.image_dir = image_dir
        self.image_transform = Compose(
            [
                AutoAugment(AutoAugmentPolicy.IMAGENET),
                Lambda(lambda x: x / 255),  # scale uint8 pixel values to [0, 1]
            ]
        )
        self.return_labels = return_labels

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        image_path = os.path.join(self.image_dir, self.images.iloc[idx])
        image = read_image(path=image_path)
        image = self.image_transform(image)

        if self.return_labels:
            label = self.df['label'].iloc[idx]  # iloc: select a row by integer position
            return image, label
        else:
            return image

# TRAIN_DIR is not defined in this post; it must point to the directory of training images.
train_dataset = HappyWhaleDataset(df=train_df, image_dir=TRAIN_DIR, return_labels=True)

len(train_dataset)  # 45930

valid_dataset = HappyWhaleDataset(df=valid_df, image_dir=TRAIN_DIR, return_labels=True)

len(valid_dataset)  # 5103

dataset_dict = {"train": train_dataset, "val": valid_dataset}

Look at the training set

To be continued …
