Copy-on-write in hugetlbfs

2022-06-29 08:25:00 【kaka__ fifty-five】

This analysis is based on Linux kernel 4.19.195.

Recently I thought of a question I had not considered before:

What happens when a hugetlbfs huge page hits copy-on-write (COW)?

My first guess was that, following the usual COW principle, the kernel allocates a new huge page for whichever process, parent or child, writes first. But is that really the case? And if so, what happens when the value written to /sys/kernel/mm/hugepages/hugepages-xxxkB/nr_hugepages is too small to supply the extra page?

Conclusions first, analysis afterwards:

- If the mapping was created with MAP_SHARED, there is no COW. The page table entries are copied at fork(), so the child does not take a page fault when it accesses this memory.

- If the mapping was not created with MAP_SHARED, the parent keeps using the huge pages it already has, and the child initially maps the same huge pages. As soon as the parent or the child writes, COW is triggered, just as for normal pages. If the page allocation succeeds, one process ends up with a new huge page and the other keeps the old one.
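The two conclusions can be observed from user space. The following is a minimal sketch, not taken from the original analysis: `child_write_visible()` and `DEMO_LEN` are hypothetical names, and the 2 MiB length assumes the x86_64 default huge page size. With `use_hugetlb` set to 0 it uses normal pages, which follow the same MAP_SHARED / MAP_PRIVATE semantics.

```c
#ifndef _GNU_SOURCE
#define _GNU_SOURCE
#endif
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Assumed huge page size (2 MiB, the x86_64 default); adjust for your system. */
#define DEMO_LEN (2UL * 1024 * 1024)

/*
 * Hypothetical helper: map DEMO_LEN bytes, let the parent write 'P',
 * fork, and let the child overwrite the byte with 'C'.
 * Returns  1 if the parent sees the child's write (no COW),
 *          0 if it does not (COW gave the child its own page),
 *         -1 on setup failure,
 *         -2 if the child was killed by a signal.
 */
int child_write_visible(int share_flag, int use_hugetlb)
{
    int flags = share_flag | MAP_ANONYMOUS | (use_hugetlb ? MAP_HUGETLB : 0);
    volatile char *p;
    int status, result;
    pid_t pid;

    assert(share_flag == MAP_SHARED || share_flag == MAP_PRIVATE);

    p = mmap(NULL, DEMO_LEN, PROT_READ | PROT_WRITE, flags, -1, 0);
    if (p == MAP_FAILED)
        return -1;

    p[0] = 'P';                 /* parent faults the page in before fork() */

    pid = fork();
    if (pid < 0) {
        munmap((void *)p, DEMO_LEN);
        return -1;
    }
    if (pid == 0) {
        p[0] = 'C';             /* for MAP_PRIVATE this is a COW write fault */
        _exit(0);
    }

    if (waitpid(pid, &status, 0) < 0)
        result = -1;
    else if (WIFSIGNALED(status))
        result = -2;
    else
        result = (p[0] == 'C');

    munmap((void *)p, DEMO_LEN);
    return result;
}
```

Calling `child_write_visible(MAP_SHARED, 0)` should return 1 and `child_write_visible(MAP_PRIVATE, 0)` should return 0. On a machine with huge pages reserved, passing `use_hugetlb = 1` exercises the hugetlb path; if the pool has no free page left for the child's copy, the MAP_PRIVATE call may return -2 because the child is killed.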

Let's start with case 1.

fork() goes through the following call chain:

_do_fork()->copy_process()->copy_mm()->dup_mm()->dup_mmap()->copy_page_range()->copy_hugetlb_page_range()

    int copy_hugetlb_page_range(struct mm_struct *dst, struct mm_struct *src,
                                struct vm_area_struct *vma)
    {
        pte_t *src_pte, *dst_pte, entry, dst_entry;
        struct page *ptepage;
        unsigned long addr;
        int cow;
        struct hstate *h = hstate_vma(vma);
        unsigned long sz = huge_page_size(h);
        unsigned long mmun_start;   /* For mmu_notifiers */
        unsigned long mmun_end;     /* For mmu_notifiers */
        int ret = 0;

        cow = (vma->vm_flags & (VM_SHARED | VM_MAYWRITE)) == VM_MAYWRITE;

        mmun_start = vma->vm_start;
        mmun_end = vma->vm_end;
        if (cow)
            mmu_notifier_invalidate_range_start(src, mmun_start, mmun_end);

        for (addr = vma->vm_start; addr < vma->vm_end; addr += sz) {
            spinlock_t *src_ptl, *dst_ptl;

            src_pte = huge_pte_offset(src, addr, sz);
            if (!src_pte)
                continue;
            dst_pte = huge_pte_alloc(dst, addr, sz);
            if (!dst_pte) {
                ret = -ENOMEM;
                break;
            }

            /*
             * If the pagetables are shared don't copy or take references.
             * dst_pte == src_pte is the common case of src/dest sharing.
             *
             * However, src could have 'unshared' and dst shares with
             * another vma. If dst_pte !none, this implies sharing.
             * Check here before taking page table lock, and once again
             * after taking the lock below.
             */
            dst_entry = huge_ptep_get(dst_pte);
            if ((dst_pte == src_pte) || !huge_pte_none(dst_entry))
                continue;

            dst_ptl = huge_pte_lock(h, dst, dst_pte);
            src_ptl = huge_pte_lockptr(h, src, src_pte);
            spin_lock_nested(src_ptl, SINGLE_DEPTH_NESTING);
            entry = huge_ptep_get(src_pte);
            dst_entry = huge_ptep_get(dst_pte);
            if (huge_pte_none(entry) || !huge_pte_none(dst_entry)) {
                /*
                 * Skip if src entry none. Also, skip in the
                 * unlikely case dst entry !none as this implies
                 * sharing with another vma.
                 */
                ;
            } else if (unlikely(is_hugetlb_entry_migration(entry) ||
                                is_hugetlb_entry_hwpoisoned(entry))) {
                swp_entry_t swp_entry = pte_to_swp_entry(entry);

                if (is_write_migration_entry(swp_entry) && cow) {
                    /*
                     * COW mappings require pages in both
                     * parent and child to be set to read.
                     */
                    make_migration_entry_read(&swp_entry);
                    entry = swp_entry_to_pte(swp_entry);
                    set_huge_swap_pte_at(src, addr, src_pte,
                                         entry, sz);
                }
                set_huge_swap_pte_at(dst, addr, dst_pte, entry, sz);
            } else {
                if (cow) {
                    /*
                     * No need to notify as we are downgrading page
                     * table protection not changing it to point
                     * to a new page.
                     *
                     * See Documentation/vm/mmu_notifier.rst
                     */
                    // Write-protect the parent's last-level page table entry that maps the huge page
                    huge_ptep_set_wrprotect(src, addr, src_pte);
                }
                entry = huge_ptep_get(src_pte);
                ptepage = pte_page(entry);
                get_page(ptepage);
                page_dup_rmap(ptepage, true);
                set_huge_pte_at(dst, addr, dst_pte, entry);
                hugetlb_count_add(pages_per_huge_page(h), dst);
            }
            spin_unlock(src_ptl);
            spin_unlock(dst_ptl);
        }

        if (cow)
            mmu_notifier_invalidate_range_end(src, mmun_start, mmun_end);

        return ret;
    }

As the code shows, fork() decides whether COW is needed from the VMA flags: the mapping must be private (VM_SHARED clear, i.e. not created with MAP_SHARED) and potentially writable (VM_MAYWRITE set). If COW is needed, huge_ptep_set_wrprotect() first write-protects the parent's page table entry, and the entry is then copied into the child's page table, so both parent and child end up with write-protected entries for this memory. If COW is not needed, the entry is copied unchanged, so both processes can read and write the memory through their page table entries. This gives conclusion 1.
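The `cow` test above is a plain bit-mask comparison, which can be tried outside the kernel. This is a small sketch under stated assumptions: the flag values are the ones from include/linux/mm.h in 4.19 as far as I know, and the function names `cow_needed()` and `cow_examples()` are made up for this illustration.

```c
#include <assert.h>

/* Flag values assumed to match include/linux/mm.h (Linux 4.19). */
#define VM_SHARED   0x00000008UL
#define VM_MAYWRITE 0x00000020UL

/* Same expression as in copy_hugetlb_page_range(); the name is hypothetical. */
int cow_needed(unsigned long vm_flags)
{
    return (vm_flags & (VM_SHARED | VM_MAYWRITE)) == VM_MAYWRITE;
}

/* Walks through the three interesting flag combinations. */
void cow_examples(void)
{
    /* Private mapping that may be written: COW is needed. */
    assert(cow_needed(VM_MAYWRITE));

    /* Shared mapping: writes go to the shared page, no COW. */
    assert(!cow_needed(VM_SHARED | VM_MAYWRITE));

    /* Mapping that can never be written: nothing to protect. */
    assert(!cow_needed(0));
}
```

Only the combination "VM_MAYWRITE set, VM_SHARED clear" makes the expression true, which is why a MAP_SHARED hugetlb mapping is copied to the child without write protection.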

Next, case 2.

From the analysis of case 1 we know that, without MAP_SHARED, the page table entries of both parent and child are write-protected, so a write triggers COW. The page fault handler then ends up in hugetlb_cow():

    /*
     * Hugetlb_cow() should be called with page lock of the original hugepage held.
     * Called with hugetlb_instantiation_mutex held and pte_page locked so we
     * cannot race with other handlers or page migration.
     * Keep the pte_same checks anyway to make transition from the mutex easier.
     */
    static vm_fault_t hugetlb_cow(struct mm_struct *mm, struct vm_area_struct *vma,
                                  unsigned long address, pte_t *ptep,
                                  struct page *pagecache_page, spinlock_t *ptl)
    {
        pte_t pte;
        struct hstate *h = hstate_vma(vma);
        struct page *old_page, *new_page;
        int outside_reserve = 0;
        vm_fault_t ret = 0;
        unsigned long mmun_start;   /* For mmu_notifiers */
        unsigned long mmun_end;     /* For mmu_notifiers */
        unsigned long haddr = address & huge_page_mask(h);

        pte = huge_ptep_get(ptep);
        old_page = pte_page(pte);

    retry_avoidcopy:
        /* If no-one else is actually using this page, avoid the copy
         * and just make the page writable */
        if (page_mapcount(old_page) == 1 && PageAnon(old_page)) {
            // Only one mapping and the page is anonymous: just make the page table entry writable
            page_move_anon_rmap(old_page, vma);
            set_huge_ptep_writable(vma, haddr, ptep);
            return 0;
        }

        /*
         * If the process that created a MAP_PRIVATE mapping is about to
         * perform a COW due to a shared page count, attempt to satisfy
         * the allocation without using the existing reserves. The pagecache
         * page is used to determine if the reserve at this address was
         * consumed or not. If reserves were used, a partial faulted mapping
         * at the time of fork() could consume its reserves on COW instead
         * of the full address range.
         */
        if (is_vma_resv_set(vma, HPAGE_RESV_OWNER) &&
                old_page != pagecache_page)
            outside_reserve = 1;

        get_page(old_page);

        /*
         * Drop page table lock as buddy allocator may be called. It will
         * be acquired again before returning to the caller, as expected.
         */
        spin_unlock(ptl);
        new_page = alloc_huge_page(vma, haddr, outside_reserve); // Allocate a new huge page

        if (IS_ERR(new_page)) {
            // The allocation failed
            /*
             * If a process owning a MAP_PRIVATE mapping fails to COW,
             * it is due to references held by a child and an insufficient
             * huge page pool. To guarantee the original mappers
             * reliability, unmap the page from child processes. The child
             * may get SIGKILLed if it later faults.
             */
            if (outside_reserve) {
                put_page(old_page);
                BUG_ON(huge_pte_none(pte));
                unmap_ref_private(mm, vma, old_page, haddr);
                BUG_ON(huge_pte_none(pte));
                spin_lock(ptl);
                ptep = huge_pte_offset(mm, haddr, huge_page_size(h));
                if (likely(ptep &&
                           pte_same(huge_ptep_get(ptep), pte)))
                    goto retry_avoidcopy;
                /*
                 * race occurs while re-acquiring page table
                 * lock, and our job is done.
                 */
                return 0;
            }

            ret = vmf_error(PTR_ERR(new_page));
            goto out_release_old;
        }

        /*
         * When the original hugepage is shared one, it does not have
         * anon_vma prepared.
         */
        if (unlikely(anon_vma_prepare(vma))) {
            ret = VM_FAULT_OOM;
            goto out_release_all;
        }

        // Copy the contents of the old huge page into the new one
        copy_user_huge_page(new_page, old_page, address, vma,
                            pages_per_huge_page(h));
        __SetPageUptodate(new_page);

        mmun_start = haddr;
        mmun_end = mmun_start + huge_page_size(h);
        mmu_notifier_invalidate_range_start(mm, mmun_start, mmun_end);

        /*
         * Retake the page table lock to check for racing updates
         * before the page tables are altered
         */
        spin_lock(ptl);
        ptep = huge_pte_offset(mm, haddr, huge_page_size(h));
        if (likely(ptep && pte_same(huge_ptep_get(ptep), pte))) {
            ClearPagePrivate(new_page);

            /* Break COW */
            huge_ptep_clear_flush(vma, haddr, ptep);
            mmu_notifier_invalidate_range(mm, mmun_start, mmun_end);
            // Point the page table entry at the new page
            set_huge_pte_at(mm, haddr, ptep,
                            make_huge_pte(vma, new_page, 1));
            page_remove_rmap(old_page, true);
            hugepage_add_new_anon_rmap(new_page, vma, haddr);
            set_page_huge_active(new_page);
            /* Make the old page be freed below */
            new_page = old_page;
        }
        spin_unlock(ptl);
        mmu_notifier_invalidate_range_end(mm, mmun_start, mmun_end);
    out_release_all:
        restore_reserve_on_error(h, vma, haddr, new_page);
        put_page(new_page);
    out_release_old:
        put_page(old_page);

        spin_lock(ptl); /* Caller expects lock to be held */
        return ret;
    }

After fork(), the first process that writes to this page takes a page fault. hugetlb_cow() allocates a new huge page; if the allocation succeeds, it calls copy_user_huge_page() to copy the contents, installs the new page in the faulting process's page table, updates the reverse mappings, and returns.

The second process that writes to this page also takes a page fault. Look at the code at the retry_avoidcopy label: this time the mapcount check applies. If the page is anonymous and mapped by only one process, it is enough to remove the write protection (that is, make the page table entry writable), and the function returns.
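The order of events for the two writers can be modelled with a toy state machine. This is only an illustrative sketch: `struct toy_page`, `toy_write_fault()` and the result names are invented here and stand in for the kernel's `struct page`, `page_mapcount()`, `PageAnon()` and the rmap calls; locking, reserves and allocation failure are left out.

```c
#include <assert.h>
#include <stddef.h>

/* Toy stand-in for struct page: only the two properties the check looks at. */
struct toy_page {
    int mapcount;   /* number of page table entries mapping this page */
    int anon;       /* nonzero if the page is anonymous (PageAnon) */
};

enum toy_cow_result { TOY_COW_REUSED, TOY_COW_COPIED };

/*
 * Model of a write fault on a write-protected huge page.
 * *mapping is the page the faulting process currently maps;
 * fresh is a newly allocated page to use if a copy is needed.
 */
enum toy_cow_result toy_write_fault(struct toy_page **mapping,
                                    struct toy_page *fresh)
{
    struct toy_page *old = *mapping;

    assert(old != NULL && fresh != NULL);

    /* Sole mapper of an anonymous page: just make the entry writable. */
    if (old->mapcount == 1 && old->anon)
        return TOY_COW_REUSED;

    /* Otherwise break COW: map the new page and drop one mapping of the old. */
    fresh->mapcount = 1;    /* models hugepage_add_new_anon_rmap() */
    fresh->anon = 1;
    old->mapcount--;        /* models page_remove_rmap() */
    *mapping = fresh;
    return TOY_COW_COPIED;
}
```

Starting from a page with `mapcount == 2` after fork(), the first call returns `TOY_COW_COPIED` and leaves the old page with `mapcount == 1`, so the second process's fault returns `TOY_COW_REUSED` without any allocation.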

If the huge page allocation fails, there are two cases:

If the faulting process is the one that created the private mapping (outside_reserve is set), unmap_ref_private() removes the page from every child process that maps it and sets the HPAGE_RESV_UNMAPPED flag in vm_private_data of the child's virtual memory area, so that the child is killed if it later faults on that page. The fault is then retried from retry_avoidcopy.

If the faulting process is not the one that created the private mapping, the fault returns an error obtained from vmf_error().
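The two failure cases can be condensed into a small decision function. This is a sketch, not kernel code: the enum and function names are hypothetical, and the mapping of `-ENOMEM` to an OOM result and any other error to a SIGBUS result reflects my understanding of `vmf_error()` in 4.19.

```c
#include <assert.h>
#include <errno.h>

enum toy_outcome {
    TOY_UNMAP_AND_RETRY,   /* unmap_ref_private(), then goto retry_avoidcopy */
    TOY_FAULT_OOM,         /* stands in for VM_FAULT_OOM */
    TOY_FAULT_SIGBUS       /* stands in for VM_FAULT_SIGBUS */
};

/*
 * Model of the IS_ERR(new_page) branch in hugetlb_cow().
 * is_resv_owner:         the VMA has HPAGE_RESV_OWNER set
 * old_is_pagecache_page: old_page == pagecache_page
 * err:                   negative errno from the failed allocation
 */
enum toy_outcome toy_alloc_failed(int is_resv_owner,
                                  int old_is_pagecache_page, int err)
{
    int outside_reserve = is_resv_owner && !old_is_pagecache_page;

    assert(err < 0);

    if (outside_reserve)
        return TOY_UNMAP_AND_RETRY;

    return err == -ENOMEM ? TOY_FAULT_OOM : TOY_FAULT_SIGBUS;
}
```

For example, `toy_alloc_failed(1, 0, -ENOSPC)` models the mapping's creator faulting with an empty pool, and `toy_alloc_failed(0, 0, -ENOSPC)` models a child in the same situation, which ends with an error instead of a retry.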

边栏推荐

- Audio and video development cases 99 lectures - Contents

- NP3 格式化输出(一)

- js:Array. Reduce cumulative calculation and array consolidation

- Codeforces Round #799 (Div. 4)

- Django - installing mysqlclient error: mysqlclient 1.4.0 or newer is required; you have 0.9.3

- PostgreSQL installation: the database cluster initialization failed, stack hbuilder installation

- 变形金刚Transformer详解

- SizeBalanceTree

- New spark in intelligent era: wireless irrigation with Lora wireless transmission technology

- 在colaboratory上云端使用GPU训练(以YOLOv5举例)

猜你喜欢

AI deep dive of Huawei cloud

蓝图基础

Solidity deploy and validate agent contracts

华为设备配置中型网络WLAN基本业务

Protobuf binary file learning and parsing

What are the organizational structure and job responsibilities of product managers in Internet companies?

What are the constraints in MySQL? (instance verification)

【6G】算力网络技术白皮书整理

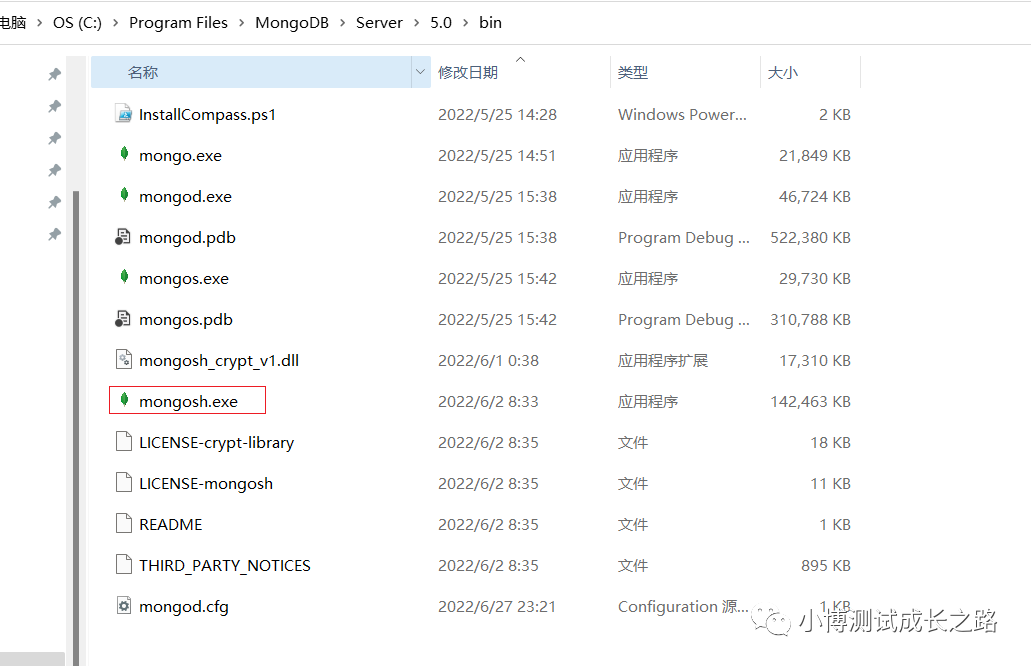

Mongodb- connect to the database using the mongo/mongosh command line

MySQL system keyword summary (official website)

随机推荐

笔记本电脑快速连接手机热点的方法

Voice annotation automatic segment alignment tool sppas usage notes

Taro 介绍

语音标注工具:Praat

Sed replace value with variable

Want to open a stock account, is it safe to open a stock account online-

A high-frequency problem, three kinds of model thinking to solve this risk control problem

Code:: blocks code formatting shortcuts

Hands on deep learning (I) -- linear neural network

[eye of depth wuenda machine learning homework class phase IV] summary of logistic regression

U盘内存卡数据丢失怎么恢复,这样操作也可以

语音标注自动音段对齐工具SPPAS使用笔记

What does Ali's 211 mean?

开户买基金,通过网上基金开户安全吗?-

实战回忆录从webshell开始突破边界

Basics - syntax standards (ANSI C, ISO C, GNU C)

Message Oriented Middleware: pulsar

Explain the garbage collection mechanism (GC) in JVM

[Kerberos] analysis of Kerberos authentication

Using method and de duplication of distinct() in laravel