Correlation (pre-/post-processors, regular expression extractor)

Supports IP spoofing

Does not support IP spoofing

Powerful test result analysis charts (data collection)

Relatively weak test result analysis charts; relies on extensions

Heavyweight

Lightweight

Complicated installation

Simple installation

Cross-platform

Generates a different number of concurrent users for different loads

Currently, a thread group can generate only one

Performance

Supports functional testing of the web front end

Broad support for industry-standard protocols; scripts can be developed on multiple platforms

Components

Getting to know Locust

Definition

Locust is an easy-to-use, distributed load testing tool. It is entirely event-based, so a single Locust node can support thousands of concurrent users in one process. It does not use callbacks; instead it relies on gevent, which runs each simulated user in its own lightweight greenlet (a coroutine, not an operating-system process).

Locust: open source, Python-based, built on coroutines rather than multithreading, and "test cases are code"; it has no recording tool.

A Python library that requires Python 3.6 or later

Can be used for performance testing

Event-based; performance tests run inside a single application process

Supports web UI, headless (no GUI), and distributed run modes

Why choose Locust

Coroutine-based, so it achieves high concurrency at low cost

More powerful scripting ("tests are code")

Uses requests to send HTTP requests

Supports distributed runs

Provides a web UI built on Flask

Extensible, with third-party plugins available

(Two) Basic Locust usage

Convention over configuration

1. Install Locust

pip install locust

locust -V

2. Write test cases

test_xxx (the usual naming convention in test frameworks) → unittest, pytest

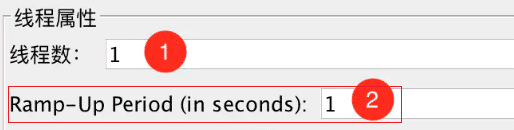

- Number of users: the number of simulated users
- Spawn rate: the number of users started per second (compare JMeter's Ramp-Up Period (in seconds))
- Host (e.g. http://www.example.com): whether it is needed depends on whether the script uses absolute addresses
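The relationship between Locust's spawn rate and JMeter's ramp-up period is simple arithmetic. The sketch below is only an illustration; the function names `ramp_up_seconds` and `spawn_rate_for` are made up for this example and are not part of either tool.

```python
def ramp_up_seconds(users: int, spawn_rate: float) -> float:
    """Seconds needed to start `users` simulated users at `spawn_rate` users per second."""
    if users < 0 or spawn_rate <= 0:
        raise ValueError("users must be >= 0 and spawn_rate must be > 0")
    return users / spawn_rate


def spawn_rate_for(users: int, ramp_up_period_s: float) -> float:
    """Spawn rate that reproduces a JMeter-style ramp-up period given in seconds."""
    if ramp_up_period_s <= 0:
        raise ValueError("ramp_up_period_s must be > 0")
    return users / ramp_up_period_s


# 100 users at 10 users per second are all running after 10 seconds.
print(ramp_up_seconds(100, 10))
# A 20-second ramp-up for 100 users corresponds to 5 users per second.
print(spawn_rate_for(100, 20))
```

When porting a JMeter plan, the second function gives a starting value for the Spawn rate field.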

The web UI modules:

New test: click this button to edit the total number of simulated virtual users and the number of virtual users started per second;

Statistics: similar to the Aggregate Report listener in JMeter;

Charts: curves showing how the test results change over time: requests completed per second (RPS), response time, and the number of virtual users at each moment;

Failures: shows the failed requests;

Exceptions: shows the requests that raised exceptions;

Download Data: the test data download module, offering three CSV downloads: Statistics, response time, and exceptions;

The framework is run with the locust command. Common parameters are:

-H: the host address to test (note: this overrides the host address specified in the User class)

-f: the path of the test script (note: the script must contain a class derived from User)

-u: the number of concurrent users; used together with --headless (called --no-web before Locust 1.0)

-r: the number of users started per second; used together with --headless

-t: the run time (default unit: seconds); used together with --headless

-L: the logging level; the default is INFO

Debug command: locust -f **.py --headless -u 1 -t 1

Run command: locust -f **.py
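As a rough illustration of how these flags combine, the sketch below assembles a headless command line as an argument list that could be handed to `subprocess.run`. The helper name `headless_command` and the file name `locustfile.py` are assumptions made for this example; the block only builds and prints the command, it does not start Locust.

```python
import shlex


def headless_command(locustfile, users, spawn_rate, run_time, host=None, loglevel="INFO"):
    """Build an argument list for a headless Locust run (hypothetical helper)."""
    argv = [
        "locust", "-f", locustfile, "--headless",
        "-u", str(users),        # number of concurrent users
        "-r", str(spawn_rate),   # users started per second
        "-t", run_time,          # run time, e.g. "1s" or "10m"
        "-L", loglevel,          # logging level
    ]
    if host is not None:
        argv += ["-H", host]     # overrides the host set in the User class
    return argv


cmd = headless_command("locustfile.py", users=1, spawn_rate=1, run_time="1s")
print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd)` avoids shell quoting problems when the host or file path contains spaces.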

4. Locust concepts

Is the parent class User?

A User represents an HTTP "user" that is generated to load test the system.

Performance testing simulates real users.

Each user is equivalent to a coroutine that interacts with the system under test.

Why must methods be wrapped as tasks?

A task is an operation the user will perform.

Visit the home page → log in → add, search → home page

TaskSet: a class that defines the set of tasks a user will perform. Once the test starts, each Locust user repeatedly picks a task at random from its TaskSet and executes it.

tasks = [task1, task2]

Specific content: the method code

    from locust import HttpUser, task, between

    class MyHttpUser(HttpUser):  # the user
        # wait_time = lambda self: random.expovariate(1) * 1000
        wait_time = between(1, 2)  # wait between tasks (think time)

        @task
        def index_page(self):  # an operation the user performs
            self.client.get("https://baidu.com/123")  # service error, network error
            self.client.get("https://baidu.com/456")  # assertion, business error

(Three) Load testing a custom protocol with Locust: WebSocket

Locust is not just an HTTP interface testing tool. HttpUser is simply a built-in example; Locust can test any protocol: WebSocket, socket, MQTT (web apps, hybrid apps, HTML5, desktop browsers), RPC

What is the WebSocket protocol?

WebSocket is a protocol for full-duplex communication over a single TCP connection. It makes data exchange between client and server simpler and allows the server to push data to the client on its own initiative. With the WebSocket API, the browser and the server need to complete only one handshake to establish a persistent connection and transfer data in both directions. A WebSocket connection is long-lived, which makes it fundamentally different from HTTP.

Protocol upgrade

The client initiates the upgrade, and the server may accept or refuse the switch to the new protocol. The client sends a request over a common protocol (such as HTTP/1.1) stating that it wants to switch to HTTP/2 or to WebSocket.


In one sentence: WebSocket is a full-duplex protocol that "uses HTTP for the handshake and then TCP for the conversation".
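To make the handshake concrete, here is a minimal sketch of the two pieces the client and server exchange, based on RFC 6455: the upgrade request headers and the `Sec-WebSocket-Accept` value the server derives from the client's key. The function names are invented for this example, and the host, path, and key are the sample values from the RFC; no network connection is opened.

```python
import base64
import hashlib

# Fixed GUID that RFC 6455 defines for the opening handshake.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def upgrade_request(host: str, path: str, key: str) -> str:
    """Text of the HTTP/1.1 request a client sends to ask for the protocol switch."""
    return (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Upgrade: websocket\r\n"
        "Connection: Upgrade\r\n"
        f"Sec-WebSocket-Key: {key}\r\n"
        "Sec-WebSocket-Version: 13\r\n"
        "\r\n"
    )


def accept_key(sec_websocket_key: str) -> str:
    """Value the server returns in Sec-WebSocket-Accept to prove it understood the upgrade."""
    digest = hashlib.sha1((sec_websocket_key + WEBSOCKET_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


sample_key = "dGhlIHNhbXBsZSBub25jZQ=="  # sample key from RFC 6455
request_text = upgrade_request("server.example.com", "/chat", sample_key)
print(accept_key(sample_key))  # s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```

After the server answers with status 101 and this accept value, both sides stop speaking HTTP and exchange WebSocket frames over the same TCP connection.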

Choosing a WebSocket client

PyPI currently offers two WebSocket libraries:

websockets: provides both a client and a server; uses async syntax

websocket_client: provides only a client; uses synchronous (non-async) syntax

Install: pip install websocket_client

Usage

    import asyncio
    import websockets

    async def hello():
        async with websockets.connect('ws://localhost:8765') as websocket:
            name = input("What's your name? ")

    # Classify each pushed event and compute its performance metrics separately
    try:
        recv_j = json.loads(recv)
        eventType_s = jsonpath.jsonpath(recv_j, expr='$.eventType')
        eventType_success(eventType_s[0], recv, total_time)
    except websocket.WebSocketConnectionClosedException as e:
        # Note: newer Locust releases replace request_failure with events.request.fire(...)
        events.request_failure.fire(request_type="[ERROR] WebSocketConnectionClosedException",
                                    name='Connection is already closed.',
                                    response_time=total_time,
                                    exception=e)
    except Exception:
        print(recv)
        # A normal OK response or a heartbeat response lands here;
        # count it as a success instead of treating it as an exception
        if 'ok' in recv:
            eventType_success('ok', 'ok', total_time)

**Summary:**

1. Create a dedicated client connection

2. Record the statistics

3. Define the conditions for success and failure
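The three steps can be sketched without any Locust dependency. In the example below, `timed_request` and the `report` callback are hypothetical names invented for illustration; the keyword names passed to `report` mirror those in the `events.request_failure.fire(...)` call in the listing above. In a real locustfile the callback would be one of Locust's request events, and `func` would send or receive on the dedicated WebSocket client.

```python
import time


def timed_request(request_type, name, func, report):
    """Run func(), measure it, and hand the outcome to report(**fields).

    An exception raised by func() marks the call as failed; a normal
    return marks it as successful.
    """
    start = time.perf_counter()
    response = None
    exception = None
    try:
        response = func()
    except Exception as exc:  # failure condition: any exception from the client
        exception = exc
    elapsed_ms = (time.perf_counter() - start) * 1000
    report(
        request_type=request_type,
        name=name,
        response_time=elapsed_ms,
        response_length=len(response) if response is not None else 0,
        exception=exception,
    )
    return response


records = []  # stands in for Locust's statistics


def collect(**fields):
    records.append(fields)


def closed_connection():
    raise ConnectionError("Connection is already closed.")


timed_request("WSS", "heartbeat", lambda: "ok", collect)
timed_request("WSS", "recv", closed_connection, collect)
print([(r["name"], r["exception"] is None) for r in records])
```

Keeping the timing and the success or failure decision in one wrapper means every protocol call is reported the same way.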

> pyfile: locustfile_websocket02.py

> pyfile: locustfile_websocket01.py

> | TimeoutError(10060, '[WinError 10060] A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond') |
> | ------------------------------------------------------------ |
> | 1. The target server's anti-crawling mechanism rejected the request (IP rate limiting) <br>→ page access verification |
> | 2. The number of HTTP connections exceeded the maximum limit.<br>The Connection header defaults to keep-alive, so earlier connections stay open and take up the slots that later requests need |
> | 3. The web server is underpowered → adjust the middleware configuration (SLB, [ApiGateway](https://blog.csdn.net/cwenao/article/details/54572648) circuit breaking, rate limiting) <br>→ the timeout setting |
> | ........... [9 strategies and 6 targets](https://juejin.cn/post/6982773753511936030#heading-1) |
> | ........... hardware (computer architecture), operating system (OS/JVM), file system, network communication, database system, middleware (transaction, message, app), the application itself |

Local fix: netsh winsock reset → restart

Preliminary results:

1. RPS: about xxxx
2. Maximum number of users: xxx

> Meaning: this is the maximum number of users that can currently send requests, although not every request gets a response

| Common problem | Possible causes |
| --- | --- |
| TPS fluctuates heavily | Network fluctuation, competition for resources from other services, and garbage collection |
| Many errors under high concurrency | Ports exhausted by short-lived connections, a thread pool whose maximum thread count is set too low, and timeouts that are too short |
| In a clustered system, the load on the service nodes is unbalanced | The SLB service has session persistence enabled |
| Concurrency keeps rising but TPS does not, and CPU usage is low | SQL without an index or with imprecise filter conditions; synchronized locks in the code that cause lock waits under high concurrency |
| connection reset, service restarts, timeouts, etc. | Parameter configuration, service policies, blocking, and various locks |

(Four) Locust core components

Core components: 2 classes, 4 items

User: in Locust, the User class represents a user. Locust creates one instance of the User class for each simulated user, and user behavior is defined in the User class.

HttpUser

WebSocketUser

Task: user behavior

SequentialTaskSet

TaskSet:

The tasks attribute nests multiple TaskSet subclasses together

Classes are nested inside one another

A TaskSet class defined inside the User class, as an inner class of User

Events: event hooks provided by Locust, used to perform operations at specific moments during a run.

Adaptive pacing: constant_pacing ensures that a task runs (at most) once every X seconds
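The idea behind adaptive pacing can be shown with one line of arithmetic: the wait after a task is whatever remains of the interval once the task's own run time is subtracted. This is a sketch of the concept only, not Locust's implementation, and `pacing_wait` is a name made up for the example.

```python
def pacing_wait(interval_s: float, task_runtime_s: float) -> float:
    """Seconds to wait so that consecutive task starts are about interval_s apart.

    If the task took longer than the interval, there is no wait at all,
    which is why the task runs at most once per interval.
    """
    return max(0.0, interval_s - task_runtime_s)


print(pacing_wait(5.0, 2.0))  # task took 2 s of a 5 s interval
print(pacing_wait(5.0, 7.0))  # task overran the interval
```

By contrast, a fixed wait such as `constant(5)` always adds 5 seconds on top of the task's run time.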

task: a task (user behavior)

tasks:

Decorate the user-behavior methods of the user class with @task

Use the tasks attribute to reference user-behavior methods defined elsewhere

weight

When a test contains several User classes, Locust by default creates the same number of instances of each. Setting the weight attribute controls how many instances Locust creates for each User class.

locustfile07.py

(Five) Locust extensions and enhancements

→ Python code

1. Recording test cases

Not supported → add via plugins

2. Data correlation

locustfile05.py

Pass values between requests through variables

3. Parameterization

locustfile09.py

Parameterization in Locust: use a queue. Push the parameters into the queue and take them out one by one during the test; once they have all been taken, the test stops. For cyclic load testing with the same parameters, push each parameter back onto the end of the queue after taking it out.

Variable

CSV

queue

..
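The queue approach to parameterization can be sketched with the standard library alone. `build_queue` and `next_param` are hypothetical helper names, and the sample account names are made up; in a locustfile the queue would typically be filled once at module level and read inside a task.

```python
import queue


def build_queue(rows):
    """Load every parameter row into a FIFO queue."""
    q = queue.Queue()
    for row in rows:
        q.put_nowait(row)
    return q


def next_param(q, recycle=False):
    """Take the next row; return None when the queue is exhausted.

    With recycle=True the row is pushed back onto the end of the queue,
    so the data can be reused for cyclic load testing.
    """
    try:
        row = q.get_nowait()
    except queue.Empty:
        return None
    if recycle:
        q.put_nowait(row)
    return row


one_shot = build_queue(["user1", "user2"])
print([next_param(one_shot) for _ in range(3)])

cyclic = build_queue(["user1", "user2"])
print([next_param(cyclic, recycle=True) for _ in range(3)])
```

A `None` result is the signal that the data has run out, at which point the task can stop the user or the whole test.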

4. Checkpoints

Locust applies default checkpoints: for example, when a request times out or the connection fails, it automatically marks the request as failed.

How it works:

Pass catch_response=True to a self.client request and use the ResponseContextManager that Locust returns to set checkpoints manually.

ResponseContextManager has two methods for declaring the outcome: success and failure. The failure method takes one argument, the reason for the failure.

locustfile10.py

    from requests import codes
    from locust import HttpUser, task, between

    # The class header is missing from the original listing;
    # the class name, host, and wait time here are assumed.
    class BaiduUser(HttpUser):
        host = "http://www.baidu.com"
        wait_time = between(1, 2)

        def on_start(self):
            # Pass catch_response=True to set the checkpoint manually
            with self.client.get('/', catch_response=True) as r:
                if r.status_code == codes.ok:
                    r.success()
                else:
                    r.failure("Failed to request the Baidu home page")

        @task
        def search_locust(self):
            with self.client.get('/s?ie=utf-8&wd=locust', catch_response=True) as r:
                if r.status_code == codes.ok:
                    r.success()
                else:
                    r.failure("Search for locust failed")

5. Think time

wait_time

between

constant

6. Weights

Option 1: specify the weight on the method

By default Locust executes the tasks in a TaskSet at random.

The weight is passed as the argument to @task. If hello:world:item is 1:3:2 in the code, then at run time the user's tasks are expanded into the list [hello, world, world, world, item, item], and tasks are drawn from that list at random.
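The expansion described above is easy to reproduce in plain Python. This sketch only imitates the idea; `expand_tasks` is a made-up helper, the task names are plain strings rather than real task functions, and the fixed random seed is there just to make the run repeatable.

```python
import random
from collections import Counter


def expand_tasks(weights):
    """Repeat each task name as many times as its weight."""
    expanded = []
    for name, weight in weights.items():
        expanded.extend([name] * weight)
    return expanded


tasks = expand_tasks({"hello": 1, "world": 3, "item": 2})
print(tasks)

# Picking uniformly from the expanded list yields roughly a 1:3:2 split.
rng = random.Random(42)
picks = Counter(rng.choice(tasks) for _ in range(6000))
print(picks.most_common())
```

Because selection is random, the 1:3:2 ratio holds only on average over many executions, not for any short sequence of tasks.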

    # (method of the QuickstartUser TaskSet referenced below)
    @tag("tag1", "tag2")
    @task(3)
    def view_items(self):
        for item_id in range(10):
            # self.client.request_name = "/item?id=[item_id]"  # group the requests
            # The 10 requests are grouped under a single statistics entry named /item
            self.client.get(f"/item?id={item_id}", name="/item")
            time.sleep(1)

    class MyUserGroup(HttpUser):
        """Define the user group (comparable to a thread group)"""
        tasks = [QuickstartUser]  # task list
        host = "http://www.baidu.com"

Option 2: specify the weight as an attribute

This applies when a file contains several user classes.

If no user class is specified on the command line, Locust creates the same number of each user class.

You can choose which user classes in a locustfile to use by passing them as command-line arguments: locust -f locustfile07.py QuickstartUser2

    # locustfile07.py
    import time
    from locust import HttpUser, task, between, TaskSet, tag, constant

7. Rendezvous point

A rendezvous point synchronizes virtual users so that they perform a task at exactly the same moment. A [test plan] may require the system to withstand 1000 people submitting data simultaneously. In that case, add a rendezvous point before the submit step: whenever a virtual user reaches it, the tool checks how many users have arrived. If fewer than 1000 have arrived, those users wait there; once 1000 users are waiting, all 1000 submit at the same time, which meets the requirement in the test plan.

web_concurrent_start(flag)

transaction() ;

web_concurrent_end(param)

Note: the framework does not wrap the rendezvous concept directly. It can be implemented indirectly through gevent's concurrency primitives, using a gevent lock.

A semaphore has a built-in counter: each call to acquire() decrements the counter by 1, and each call to release() increments it by 1. The counter can never drop below 0; when it is 0, acquire() blocks the caller until another caller invokes release().
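The counter behavior can be tried out with the standard library. Note the substitution: this sketch uses `threading.Semaphore` and OS threads as a stand-in for gevent's semaphore and greenlets, and the names `gate` and `virtual_user` are invented for the example. The semaphore starts at 0, so every simulated user blocks in `acquire()` until the main thread calls `release()`.

```python
import threading

gate = threading.Semaphore(0)  # counter starts at 0, so acquire() blocks
arrived = []


def virtual_user(user_id):
    gate.acquire()           # wait at the rendezvous point (counter 1 -> 0)
    arrived.append(user_id)
    gate.release()           # pass the permit on to the next waiting user (0 -> 1)


threads = [threading.Thread(target=virtual_user, args=(i,)) for i in range(5)]
for t in threads:
    t.start()

before = list(arrived)       # nobody can have passed the gate yet

gate.release()               # open the rendezvous point
for t in threads:
    t.join()

print(before, sorted(arrived))
```

In the Locust version, the `release()` call belongs in a hook that fires once all users have been spawned, so no user starts its tasks before the full load is in place.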

Two steps:

Create the hook function that releases the all_locusts_spawned semaphore

Attach the hook to Locust with events.spawning_complete.add_listener

The hook is triggered once all Locust user instances have been spawned

Sample code:

    # locustfile08.py
    import os

    from locust import HttpUser, TaskSet, task, between, events
    from gevent.lock import Semaphore

    # Reconstructed: the original listing uses these two names without defining them.
    all_locusts_spawned = Semaphore()
    all_locusts_spawned.acquire()  # counter drops to 0, so wait() below blocks


    def on_hatch_complete(**kwargs):
        all_locusts_spawned.release()  # open the rendezvous point


    # Attach the hook to Locust (fires once every user instance has been spawned)
    events.spawning_complete.add_listener(on_hatch_complete)

    n = 0


    class UserBehavior(TaskSet):

        def login(self):
            global n
            n += 1
            print("Virtual user %s started and logged in" % n)

        def logout(self):
            print("Log out")

        def on_start(self):
            self.login()
            all_locusts_spawned.wait()  # wait at the rendezvous point

        @task(4)
        def test1(self):
            url = '/list'
            param = {"limit": 8, "offset": 0}
            with self.client.get(url, params=param, headers={}, catch_response=True) as response:
                print("User browses the home page after login")

        @task(6)
        def test2(self):
            url = '/detail'
            param = {'id': 1}
            with self.client.get(url, params=param, headers={}, catch_response=True) as response:
                print("Users run the query at the same time")


    # Assumed user class: the original listing does not show one. Pass the target host with -H.
    class WebsiteUser(HttpUser):
        tasks = [UserBehavior]
        wait_time = between(1, 2)


    if __name__ == '__main__':
        os.system("locust -f locustfile08.py")

8. Distributed runs

Locust achieves high concurrency on a single machine through coroutines, but it makes poor use of multi-core CPUs. To use all cores, start several Locust instances on one machine (single-machine distributed mode). Likewise, when one computer cannot simulate the required number of users, Locust supports distributed load testing across multiple computers.

There are two setups: master and worker nodes on a single machine, or several machines with one set up as the master and the others as worker nodes.

Note: the master computer and every worker computer must each have a copy of the Locust test script.

Single-machine master/worker mode

The number of worker nodes must be less than or equal to the number of processors on the machine.

Steps, taking a single computer as the example (it acts as both the controller and the load generator):

Step 1 → start the Locust master node

locust -f locustfile07.py --master

Step 2 → start each worker node

locust -f locustfile07.py --worker
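The single-machine steps can be scripted. The sketch below only builds the command lists; `distributed_commands` is a hypothetical helper, and capping the worker count at `os.cpu_count()` encodes the rule stated above rather than anything Locust enforces.

```python
import os


def distributed_commands(locustfile, workers=None):
    """Return (master_command, worker_commands) for one machine.

    The worker count is capped at the number of processors; when no
    count is requested, one worker per processor is used.
    """
    cpu_count = os.cpu_count() or 1
    count = cpu_count if workers is None else min(workers, cpu_count)
    master = ["locust", "-f", locustfile, "--master"]
    worker = ["locust", "-f", locustfile, "--worker"]
    return master, [list(worker) for _ in range(count)]


master_cmd, worker_cmds = distributed_commands("locustfile07.py", workers=1)
print(" ".join(master_cmd))
for cmd in worker_cmds:
    print(" ".join(cmd))
```

Each list can be started with `subprocess.Popen`, master first, so that the workers find a master to connect to.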

Multi-machine master/worker mode

Pick one computer and start the master node on it. The master node cannot start nodes on other machines, so the Locust worker nodes must be started on those machines with the --worker parameter and --master-host (the IP address or host name of the master node).

locust -f locustfile07.py --master

On the other machines (whose environment must match the master's: each needs the Locust runtime and the scripts), start the worker nodes and set --master-host.

--master

Sets Locust to master mode. The web interface runs on this node.

--worker

Sets Locust to worker mode.

--master-host=X.X.X.X

Optional; used with --worker to set the host name or IP address of the master node (default 127.0.0.1).

--master-port

Optional; used with --worker to set the port of the master node (default 5557).

--master-bind-host=X.X.X.X

Optional; used with --master. Determines the network interface the master node binds to. The default is * (all available interfaces).

--master-bind-port=5557

Optional; used with --master. Determines the network port the master node listens on. The default is 5557.

--expect-workers=X

Used when starting the master node with --headless. The master node waits until X worker nodes have connected before starting the test.

Locust's default HTTP client is python-requests. If you plan to run tests at very high throughput and the hardware running Locust is limited, that client is sometimes not efficient enough. Locust also ships FastHttpUser, which uses geventhttpclient instead. It offers a very similar API and uses significantly less CPU time, sometimes increasing the maximum number of requests per second on a given machine by as much as 5x to 6x.

Under the same concurrency, FastHttpUser reduces the resource consumption of the load generator, which lets it send more HTTP requests.

The comparison results are as follows:

locustfile11.py

HttpUser:

Comparison: FastHttpUser

(Six) Side notes

0. The differences between processes, threads, and coroutines

Process: a process is one execution of a program with some independent function over a set of data; it is the independent unit the system uses for resource allocation and scheduling. Each process has its own memory space, and different processes communicate through inter-process communication (IPC). Because a process is heavyweight and owns its memory, context switches between processes (stack, registers, virtual memory, file handles, and so on) are relatively expensive, but processes are comparatively stable and safe.

Thread: a thread is an entity within a process and the basic unit of CPU scheduling and dispatch; it is a smaller independently running unit than a process. A thread owns almost no system resources of its own, only the few it needs to run (a program counter, a set of registers, and a stack), but it shares all the resources of its process with the other threads in that process. Threads communicate mainly through shared memory. Context switches are fast and the resource overhead is low, but threads are less stable than processes and more prone to data loss.

Coroutine: a coroutine is a lightweight user-mode thread whose scheduling is entirely controlled by the user program. It has its own register context and stack. When a coroutine is switched out, its register context and stack are saved elsewhere; when it is switched back in, they are restored. Because the switch manipulates the stack directly, it incurs essentially no kernel switching overhead, and global variables can be accessed without locks, so context switches are very fast.

Process vs. thread:

A process has its own independent address space; a thread belongs to a process (the process exists before its threads) and shares the process's address space

Global variables are not shared between processes; they are shared between threads

A thread is the basic unit of CPU scheduling; a process is the smallest unit of resource allocation by the operating system

Processes are independent of one another and can execute in parallel (when the number of cores is greater than the number of threads)

With multiple processes, the failure of one process does not affect the others; with multiple threads, if the process crashes, all the threads attached to it are destroyed

Thread vs. coroutine:

A thread can contain multiple coroutines, and so can a process

Threads and processes are synchronous mechanisms; coroutines are asynchronous

A coroutine keeps the state of its last invocation; each re-entry is equivalent to waking it up

Thread switching is scheduled by the operating system; coroutine switching is scheduled by the user program

Resource consumption: a thread's default stack size is 1 MB, while a coroutine is much lighter, close to 1 KB.

Thread: a lightweight process. Coroutine: a lightweight thread (user mode).
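A short experiment shows why coroutines are cheap enough to model thousands of users. Locust itself uses gevent greenlets; the sketch below swaps in Python's built-in asyncio coroutines to stay dependency-free, and `virtual_user` and `run_users` are names made up for the example. All 1000 simulated users run concurrently on a single OS thread.

```python
import asyncio
import threading


async def virtual_user(user_id, results):
    # The sleep stands in for waiting on a network response; while one
    # user waits, the event loop switches to another user.
    await asyncio.sleep(0.01)
    results.append((user_id, threading.get_ident()))


async def run_users(count):
    results = []
    await asyncio.gather(*(virtual_user(i, results) for i in range(count)))
    return results


results = asyncio.run(run_users(1000))
thread_ids = {thread_id for _, thread_id in results}
print(len(results), "users finished on", len(thread_ids), "thread")
```

Starting 1000 OS threads for the same job would reserve a separate stack for each one, which is the overhead the coroutine model avoids.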

If the Locust file is in a subdirectory or is not named locustfile.py, pass its path with -f:

$ locust -f locust_files/my_locust_file.py

To run Locust distributed across multiple processes, start the master process by specifying --master:

$ locust -f locust_files/my_locust_file.py --master

Then start any number of worker processes:

$ locust -f locust_files/my_locust_file.py --worker

To run Locust distributed across multiple machines, also specify the master host when starting the workers (this is not needed when running on a single machine, because the master host defaults to 127.0.0.1):

$ locust -f locust_files/my_locust_file.py --worker --master-host=192.168.0.100

Parameters can also be set in a configuration file (locust.conf or ~/.locust.conf) or in environment variables prefixed with LOCUST_

For example (this does the same thing as the previous command):

$ LOCUST_MASTER_HOST=192.168.0.100 locust

Note: to see all available options, type: locust --help