
The difference between bagging and boosting in machine learning

2022-07-04 04:05:00 【Xiaobai learns vision】


Bagging and Boosting both combine existing classification or regression algorithms in a particular way to form a more powerful classifier. More precisely, they are ways of assembling classification algorithms: methods for combining weak classifiers into a strong classifier.

First, a word on bootstrapping (the bootstrap method): it is a sampling method with replacement, so the same sample may be drawn more than once.
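A minimal sketch of bootstrap sampling in Python. The helper name `bootstrap_sample` is hypothetical; it simply draws `len(data)` items uniformly with replacement using the standard-library `random` module.

```python
import random


def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly *with replacement*.

    Hypothetical helper for illustration; rng is a random.Random instance.
    """
    n = len(data)
    # Each draw is independent, so the same index can come up several times.
    return [data[rng.randrange(n)] for _ in range(n)]


rng = random.Random(0)  # fixed seed so the draw is reproducible
data = list(range(10))
sample = bootstrap_sample(data, rng)
print("bootstrap sample:", sample)
print("never drawn:", sorted(set(data) - set(sample)))
```

Each item is missed by a single draw with probability 1 - 1/n, so it is left out of the whole sample with probability (1 - 1/n)^n, which approaches 1/e ≈ 0.368 for large n. Roughly 63% of the distinct samples therefore end up in each bootstrap training set.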

1. Bagging (bootstrap aggregating)

Bagging works as follows:

Draw training sets from the original sample set. In each round, use bootstrapping to draw n training samples from the original sample set (within one training set, some samples may be drawn several times while others are not drawn at all). Repeat for k rounds to obtain k training sets, which are independent of one another.

Train one model on each training set, so the k training sets yield k models in total. (Note: bagging does not prescribe a particular classification or regression method; choose whichever suits the problem, such as decision trees or perceptrons.)

For a classification problem, the final result is decided by a vote among the k models; for a regression problem, the final result is the mean of the k models' predictions. (All models carry equal weight.)
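The three steps above can be sketched in a few lines of Python. This is an illustration under assumptions, not a reference implementation: `fit_stump` is a hypothetical one-dimensional decision stump used as the base learner, labels are assumed to be -1/+1, and any other learner that "fits and returns a callable" could be plugged in instead.

```python
import random
from collections import Counter
from statistics import mean


def bootstrap_sample(data, rng):
    """Draw len(data) items uniformly with replacement."""
    n = len(data)
    return [data[rng.randrange(n)] for _ in range(n)]


def fit_stump(train):
    """Hypothetical base learner: a 1-D decision stump.

    train is a list of (x, y) pairs with numeric x and y in {-1, +1}. Every
    observed x is tried as a threshold in both orientations; the stump with
    the fewest training errors is kept.
    """
    best = None
    for t in sorted({x for x, _ in train}):
        for sign in (1, -1):
            errors = sum(1 for x, y in train if (sign if x >= t else -sign) != y)
            if best is None or errors < best[0]:
                best = (errors, t, sign)
    _, t, sign = best
    return lambda x: sign if x >= t else -sign


def bagging_fit(data, fit, k, seed=0):
    """Steps 1-2: k bootstrap training sets -> k independently trained models."""
    rng = random.Random(seed)
    return [fit(bootstrap_sample(data, rng)) for _ in range(k)]


def predict_vote(models, x):
    """Step 3, classification: unweighted majority vote."""
    return Counter(m(x) for m in models).most_common(1)[0][0]


def predict_mean(models, x):
    """Step 3, regression: plain average, every model weighted equally."""
    return mean(m(x) for m in models)


data = [(x, -1) for x in range(5)] + [(x, 1) for x in range(5, 10)]
models = bagging_fit(data, fit_stump, k=11)
print(predict_vote(models, 0), predict_vote(models, 9))
```

Because every model gets exactly one vote, `predict_vote` is an unweighted majority; with two classes, an odd k avoids ties.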

2. Boosting

The main idea is to assemble weak classifiers into a strong classifier. Within the PAC (probably approximately correct) learning framework, weak classifiers can be combined into a strong classifier.

Boosting has to answer two core questions:

2.1 How is the weight (or probability distribution) of the training data changed in each round?

Increase the weights of the samples that the previous round's weak classifier misclassified and decrease the weights of the samples it classified correctly, so that the next classifier focuses on the misclassified data.
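The reweighting rule in 2.1 is what AdaBoost does. The sketch below uses the standard discrete AdaBoost formulas (labels in {-1, +1}, weighted error e, classifier weight α = ½·ln((1 − e)/e)), which the article itself does not spell out; `update_weights` is a hypothetical name.

```python
import math


def update_weights(weights, y_true, y_pred):
    """One round of the discrete AdaBoost sample-weight update (sketch).

    weights: current sample weights, assumed to sum to 1
    y_true, y_pred: labels in {-1, +1}
    Returns (new_weights, alpha); alpha is the weak classifier's vote weight.
    """
    # Weighted error rate of this round's weak classifier.
    error = sum(w for w, y, p in zip(weights, y_true, y_pred) if y != p)
    error = min(max(error, 1e-10), 1 - 1e-10)  # guard against log(0) / division by 0
    alpha = 0.5 * math.log((1 - error) / error)
    # y * p = -1 for a misclassified sample, so its weight is multiplied by e^{+alpha};
    # a correctly classified sample is multiplied by e^{-alpha}.
    raw = [w * math.exp(-alpha * y * p) for w, y, p in zip(weights, y_true, y_pred)]
    z = sum(raw)  # normalizing constant, so the new weights again sum to 1
    return [r / z for r in raw], alpha


# Four equally weighted samples; the weak classifier gets only the last one wrong.
new_w, alpha = update_weights([0.25] * 4, [1, 1, 1, 1], [1, 1, 1, -1])
print([round(w, 4) for w in new_w])  # → [0.1667, 0.1667, 0.1667, 0.5]
```

The misclassified sample's weight rises from 0.25 to 0.5 while the others drop to 1/6. With this rule the misclassified samples always end up holding exactly half of the total weight, since e·e^α = (1 − e)·e^(−α) = √(e(1 − e)).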

2.2 How are the weak classifiers combined?

The weak classifiers are combined linearly through an additive model. AdaBoost, for example, uses a weighted majority vote: classifiers with a low error rate get larger weights, while classifiers with a high error rate get smaller weights.
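A sketch of the weighted majority vote, again assuming discrete AdaBoost with labels in {-1, +1}; the function names are hypothetical. The final classifier is sign(Σ α_m·h_m(x)), so one accurate classifier can outvote several weaker ones.

```python
import math


def classifier_weight(error):
    """AdaBoost vote weight alpha = 0.5 * ln((1 - e) / e), for 0 < e < 1.

    Small error -> large weight; e = 0.5 (random guessing) -> weight 0.
    """
    return 0.5 * math.log((1 - error) / error)


def weighted_vote(alphas, predictions):
    """Linear (additive) combination: sign(sum_m alpha_m * h_m(x)).

    predictions are the weak classifiers' outputs in {-1, +1}; a score of
    exactly 0 is resolved to +1 here, an arbitrary choice.
    """
    score = sum(a * p for a, p in zip(alphas, predictions))
    return 1 if score >= 0 else -1


alphas = [classifier_weight(e) for e in (0.1, 0.4, 0.45)]
print([round(a, 3) for a in alphas])        # → [1.099, 0.203, 0.1]
print(weighted_vote(alphas, [1, -1, -1]))   # → 1
```

Two of the three classifiers vote -1, but the accurate one (error 0.1) outweighs them, which is the "weighted majority" described above.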

A boosting tree fits the current residuals at each step, gradually reducing them; the final model is obtained by summing the models produced at every step.
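A minimal sketch of residual fitting for one-dimensional regression under squared loss. The base learner `fit_regression_stump` is a hypothetical single-split regression tree and the data points are made up for illustration; this shows the idea of the boosting tree, not a full GBDT implementation.

```python
def fit_regression_stump(xs, residuals):
    """Hypothetical base learner: one split on x, each side predicts its mean.

    Tries a split before every distinct x value except the smallest and keeps
    the one with the lowest sum of squared errors. Needs >= 2 distinct xs.
    """
    best = None
    for t in sorted(set(xs))[1:]:
        left = [r for x, r in zip(xs, residuals) if x < t]
        right = [r for x, r in zip(xs, residuals) if x >= t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda x: left_mean if x < t else right_mean


def boosting_tree(xs, ys, rounds):
    """Additive model f_M(x) = T_1(x) + ... + T_M(x), built one tree per round."""
    trees = []
    residuals = list(ys)  # with f_0 = 0, the first residuals are the targets
    for _ in range(rounds):
        tree = fit_regression_stump(xs, residuals)  # fit the current residuals
        trees.append(tree)
        # new residual r_i = y_i - f_m(x_i) = old residual - T_m(x_i)
        residuals = [r - tree(x) for x, r in zip(xs, residuals)]
    return lambda x: sum(tree(x) for tree in trees)


def squared_error(model, xs, ys):
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys))


xs = list(range(1, 11))
ys = [5.56, 5.70, 5.91, 6.40, 6.80, 7.05, 8.90, 8.70, 9.00, 9.05]
for m in (1, 2, 6):
    err = squared_error(boosting_tree(xs, ys, m), xs, ys)
    print(m, "rounds, training squared error:", round(err, 4))
```

Each stump predicts per-side means of the residuals, which can only lower (never raise) the training squared error, so the printed error shrinks as rounds are added.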

3. Differences between Bagging and Boosting

Bagging and Boosting differ in the following respects:

1) Sample selection:

Bagging: each training set is drawn from the original set with replacement, and the training sets drawn in different rounds are independent of one another.

Boosting: every round uses the same training set; only the weight of each sample changes, and those weights are adjusted according to the previous round's classification results.

2) Sample weights:

Bagging: uses uniform sampling, so every sample has equal weight.

Boosting: sample weights are adjusted according to the classification errors; samples that were misclassified receive larger weights.

3) Prediction functions:

Bagging: all prediction functions have equal weight.

Boosting: each weak classifier has its own weight, and classifiers with a smaller classification error receive larger weights.

4) Parallel computation:

Bagging: the prediction functions can be generated in parallel.

Boosting: the prediction functions can only be generated sequentially, because each model's parameters depend on the results of the previous model.
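Point 4 can be seen directly in code. In the sketch below (hypothetical helper names, with a constant "predict the mean" model standing in for a real base learner), the k bagging fits are mapped over a `concurrent.futures.ThreadPoolExecutor` because they do not depend on each other, whereas the boosting loop must run round by round because each round reads the residuals left by the previous one.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def bootstrap_sample(data, seed):
    """Bootstrap sample driven by its own seed, so rounds share no state."""
    rng = random.Random(seed)
    n = len(data)
    return [data[rng.randrange(n)] for _ in range(n)]


def fit_mean(sample):
    """Hypothetical base learner: a constant model that predicts the mean."""
    return sum(sample) / len(sample)


def bagging_parallel(data, k, workers=4):
    # The k fits are independent, so they can be mapped over a pool;
    # pool.map still returns results in seed order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda s: fit_mean(bootstrap_sample(data, s)), range(k)))


def boosting_sequential(data, rounds, shrink=0.5):
    # Round m fits the residuals left by rounds 1..m-1, so it cannot start
    # before the previous round has finished.
    models, residuals = [], list(data)
    for _ in range(rounds):
        m = shrink * fit_mean(residuals)
        models.append(m)
        residuals = [r - m for r in residuals]
    return models


data = [2.0, 4.0, 6.0, 8.0]
print(bagging_parallel(data, k=5))
print(sum(boosting_sequential(data, rounds=10)))  # approaches mean(data) = 5.0
```

In CPython, threads do not speed up pure-Python, CPU-bound work because of the global interpreter lock; for a real speedup you would use `ProcessPoolExecutor`, which needs a picklable top-level function instead of a lambda. The `shrink` factor is an illustrative assumption that keeps later boosting rounds non-trivial.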

4. Summary

Both methods combine several classifiers into one; they differ only in how the combination is done, which leads to different results. Plugging a classification algorithm into either framework generally improves on the original single classifier to some extent, at the cost of more computation.

Combining decision trees with these frameworks yields the following algorithms:

Bagging + decision tree = random forest

AdaBoost + decision tree = boosting tree

Gradient Boosting + decision tree = GBDT

download 1:OpenCV-Contrib Chinese version of extension module

stay 「 Xiaobai studies vision 」 Official account back office reply : Extension module Chinese course , You can download the first copy of the whole network OpenCV Extension module tutorial Chinese version , cover Expansion module installation 、SFM Algorithm 、 Stereo vision 、 Target tracking 、 Biological vision 、 Super resolution processing And more than 20 chapters .

download 2:Python Visual combat project 52 speak

stay 「 Xiaobai studies vision 」 Official account back office reply :Python Visual combat project , You can download the Image segmentation 、 Mask detection 、 Lane line detection 、 Vehicle count 、 Add Eyeliner 、 License plate recognition 、 Character recognition 、 Emotional tests 、 Text content extraction 、 face recognition etc. 31 A visual combat project , Help fast school computer vision .

download 3:OpenCV Actual project 20 speak

stay 「 Xiaobai studies vision 」 Official account back office reply :OpenCV Actual project 20 speak , You can download the 20 Based on OpenCV Realization 20 individual Actual project , Realization OpenCV Learn advanced .

Communication group

Welcome to join the official account reader group to communicate with your colleagues , There are SLAM、 3 d visual 、 sensor 、 Autopilot 、 Computational photography 、 testing 、 Division 、 distinguish 、 Medical imaging 、GAN、 Wechat groups such as algorithm competition ( It will be subdivided gradually in the future ), Please scan the following micro signal clustering , remarks :” nickname + School / company + Research direction “, for example :” Zhang San + Shanghai Jiaotong University + Vision SLAM“. Please note... According to the format , Otherwise, it will not pass . After successful addition, they will be invited to relevant wechat groups according to the research direction . Do not Send ads within the group , Or you'll be invited out , Thanks for your understanding ~

边栏推荐

- Zigzag scan

- [book club issue 13] packaging format of video files

- Which product is better for 2022 annual gold insurance?

- 【罗技】m720

- Epidemic strikes -- Thinking about telecommuting | community essay solicitation

- super_ Subclass object memory structure_ Inheritance tree traceability

- '2'>' 10'==true? How does JS perform implicit type conversion?

- The three-year revenue is 3.531 billion, and this Jiangxi old watch is going to IPO

- 拼夕夕二面:说说布隆过滤器与布谷鸟过滤器?应用场景?我懵了。。

- Deep thinking on investment

猜你喜欢

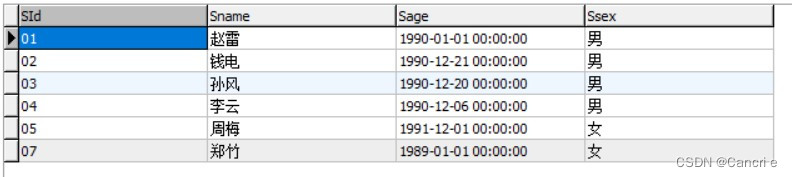

SQL statement strengthening exercise (MySQL 8.0 as an example)

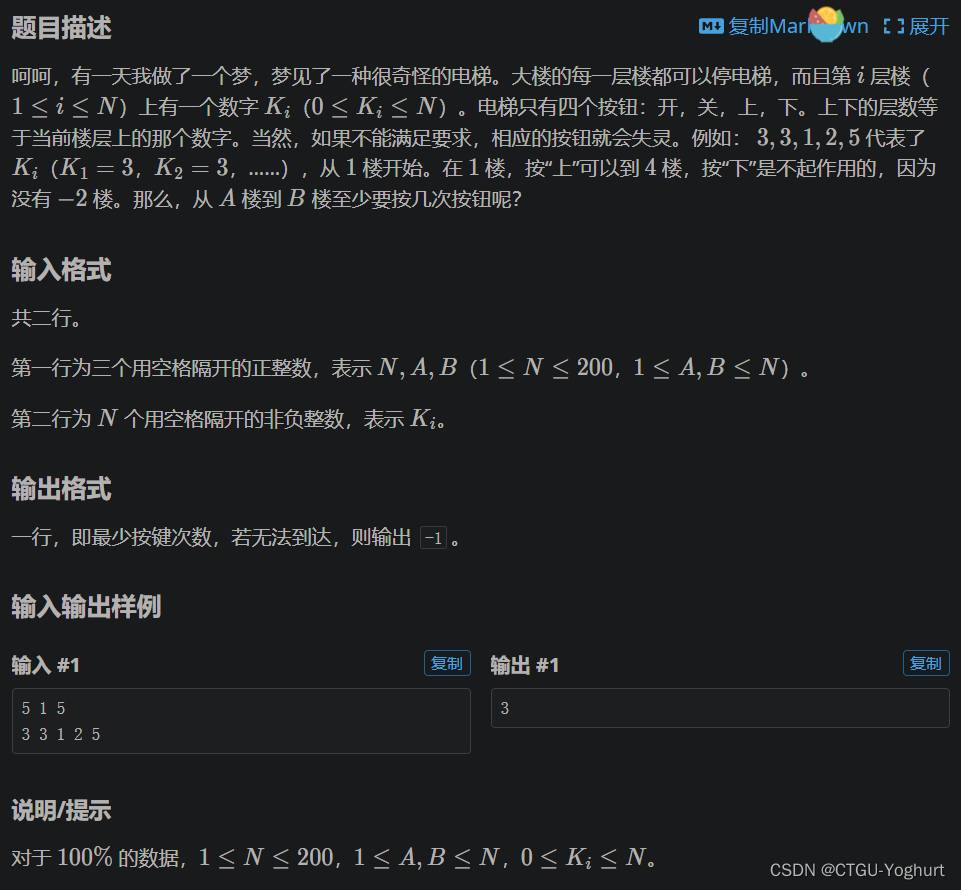

2022-07-03: there are 0 and 1 in the array. Be sure to flip an interval. Flip: 0 becomes 1, 1 becomes 0. What is the maximum number of 1 after turning? From little red book. 3.13 written examination.

'2'>' 10'==true? How does JS perform implicit type conversion?

mysql数据库的存储

Sales management system of lightweight enterprises based on PHP

Brief explanation of depth first search (with basic questions)

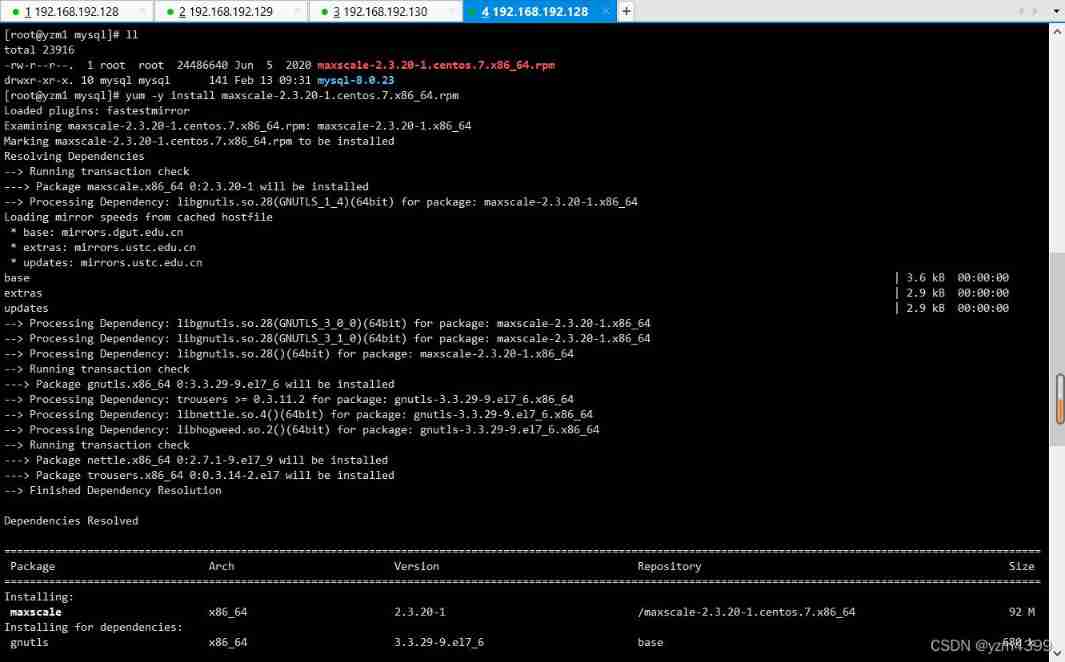

MySQL maxscale realizes read-write separation

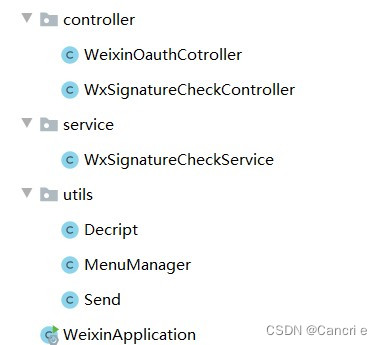

微信公众号网页授权

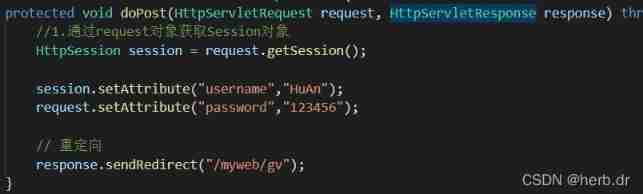

Session learning diary 1

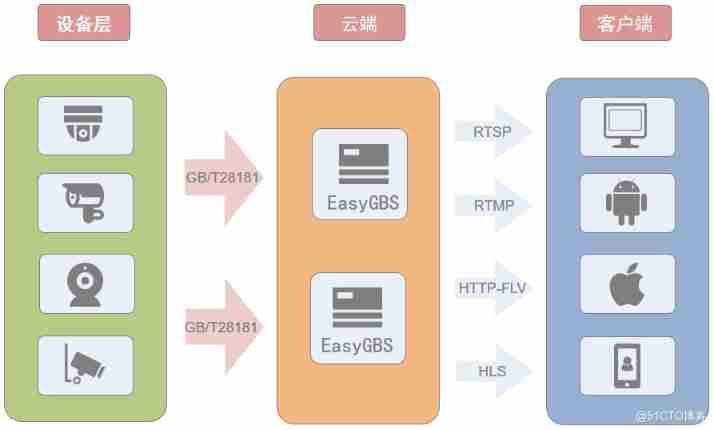

National standard gb28181 protocol platform easygbs fails to start after replacing MySQL database. How to deal with it?

随机推荐

JDBC advanced

Formulaire day05

'2'>' 10'==true? How does JS perform implicit type conversion?

CSP drawing

Zigzag scan

Msgraphmailbag - search only driveitems of file types

Which product is better if you want to go abroad to insure Xinguan?

Spa in SDP

Typical applications of minimum spanning tree

Brief explanation of depth first search (with basic questions)

Go 语言入门很简单:Go 实现凯撒密码

Getting started with the go language is simple: go implements the Caesar password

Recursive structure

vue多级路由嵌套怎么动态缓存组件

潘多拉 IOT 开发板学习(HAL 库)—— 实验6 独立看门狗实验(学习笔记)

STM32外接DHT11显示温湿度

Nbear introduction and use diagram

How about the ratings of 2022 Spring Festival Gala in all provinces? Map analysis helps you show clearly!

Katalon framework tests web (XXI) to obtain element attribute assertions

【愚公系列】2022年7月 Go教学课程 002-Go语言环境安装