
Mmdetection dataloader construction

2022-06-10 22:41:00 【Wu lele~】


Preface

This article explains how mmdetection builds its dataloader. The dataloader controls how the dataset is read iteratively, and building one requires a Dataset class first. For how the Dataset class is implemented, see my earlier post on mmdetection's Dataset class construction.

1、 Overall process

In PyTorch, constructing a DataLoader instance involves the following important parameters (excerpted from the DataLoader source):

```
Arguments:
    dataset (Dataset): dataset from which to load the data.
    batch_size (int, optional): how many samples per batch to load
        (default: ``1``).
    shuffle (bool, optional): set to ``True`` to have the data reshuffled
        at every epoch (default: ``False``).
    sampler (Sampler or Iterable, optional): defines the strategy to draw
        samples from the dataset. Can be any ``Iterable`` with ``__len__``
        implemented. If specified, :attr:`shuffle` must not be specified.
    batch_sampler (Sampler or Iterable, optional): like :attr:`sampler`, but
        returns a batch of indices at a time. Mutually exclusive with
        :attr:`batch_size`, :attr:`shuffle`, :attr:`sampler`,
        and :attr:`drop_last`.
    num_workers (int, optional): how many subprocesses to use for data
        loading. ``0`` means that the data will be loaded in the main process.
        (default: ``0``)
    collate_fn (callable, optional): merges a list of samples to form a
        mini-batch of Tensor(s). Used when using batched loading from a
        map-style dataset.
```
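To see how these parameters interact, the toy example below (assuming a recent PyTorch; `ToyDataset` is a hypothetical class made up for illustration) builds the same loader two ways: once with `batch_size` + `sampler`, and once with an explicit `batch_sampler`. It also checks that combining `batch_sampler` with `batch_size` is rejected, as the docstring says.

```python
import torch
from torch.utils.data import BatchSampler, DataLoader, Dataset, SequentialSampler


class ToyDataset(Dataset):
    """Hypothetical map-style dataset: sample i is the scalar tensor i."""

    def __len__(self):
        return 7

    def __getitem__(self, idx):
        return torch.tensor(idx)


ds = ToyDataset()

# Variant 1: DataLoader builds a BatchSampler internally from sampler + batch_size.
loader_a = DataLoader(ds, batch_size=3, sampler=SequentialSampler(ds))

# Variant 2: pass the BatchSampler explicitly; batch_size stays at its default of 1.
loader_b = DataLoader(
    ds, batch_sampler=BatchSampler(SequentialSampler(ds), batch_size=3, drop_last=False))

batches_a = [b.tolist() for b in loader_a]
batches_b = [b.tolist() for b in loader_b]
print(batches_a)  # [[0, 1, 2], [3, 4, 5], [6]]

# batch_sampler is mutually exclusive with batch_size, shuffle, sampler and drop_last.
rejected = False
try:
    DataLoader(ds, batch_size=3,
               batch_sampler=BatchSampler(SequentialSampler(ds), batch_size=3, drop_last=False))
except ValueError as err:
    rejected = True
    print("rejected:", err)
```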

A brief explanation of each parameter:

dataset: an instance of a class that inherits from Dataset;

batch_size: the batch size;

shuffle: if True, the data is reshuffled at the start of every epoch;

sampler: an iterable that yields indices into the dataset (either shuffled or in order);

batch_sampler: takes indices from sampler batch_size at a time; each list of indices is then used to fetch batch_size samples from dataset;

collate_fn: merges the samples of one batch into a single mini-batch, adjusting image widths and heights so they match.
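The "adjust width and height" step of collate_fn can be illustrated with a minimal padding collate. `pad_collate` below is a hypothetical function, not mmcv's actual `collate` (which operates on mmcv's DataContainer objects); it only shows the idea of zero-padding images of different sizes to the largest height and width in the batch so they can be stacked into one tensor.

```python
import torch
import torch.nn.functional as F


def pad_collate(samples):
    """Hypothetical collate_fn: pad (C, H, W) images to the batch's max H and W, then stack."""
    max_h = max(img.shape[1] for img in samples)
    max_w = max(img.shape[2] for img in samples)
    padded = []
    for img in samples:
        # F.pad takes (left, right, top, bottom) for the last two dims;
        # here zeros are added only on the right and bottom.
        padded.append(F.pad(img, (0, max_w - img.shape[2], 0, max_h - img.shape[1])))
    return torch.stack(padded)


batch = pad_collate([torch.ones(3, 4, 6), torch.ones(3, 5, 2)])
print(batch.shape)  # torch.Size([2, 3, 5, 6])
```

It could be plugged in as `DataLoader(dataset, batch_size=2, collate_fn=pad_collate)` for a dataset whose samples are such image tensors.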

The definitions above may feel a bit abstract. That's fine: just remember that sampler, batch_sampler and dataloader are all iterables, and dataset can be indexed by an integer subscript. Informally, all of them are things you loop over with `for … in …:`.
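A quick way to see this is to loop over each level directly. The five-sample tensor dataset below is an assumption for illustration; `TensorDataset`, `RandomSampler`, `BatchSampler` and `DataLoader` are standard `torch.utils.data` classes.

```python
import torch
from torch.utils.data import BatchSampler, DataLoader, RandomSampler, TensorDataset

# Toy dataset of 5 samples: sample i is (feature_i, label_i).
dataset = TensorDataset(torch.arange(5).float(), torch.arange(5))

# sampler: yields one dataset index at a time (shuffled here).
sampler = RandomSampler(dataset)
indices = list(sampler)

# batch_sampler: groups the sampler's indices into lists of batch_size.
batch_sampler = BatchSampler(sampler, batch_size=2, drop_last=False)
index_batches = list(batch_sampler)

# dataloader: turns each list of indices into a collated batch of tensors.
loader = DataLoader(dataset, batch_sampler=batch_sampler)
for features, labels in loader:
    print(features, labels)
```

Each pass over a `RandomSampler` draws a fresh permutation, so `indices`, `index_batches` and the loader's batches are generally in different orders.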

Now that we know the main DataLoader parameters, let's look at how mmdetection implements build_dataloader. I'll explain it in two parts:

(1) How a dataloader object is instantiated. As shown in the figure below, mmdetection mainly configures four of these parameters. GroupSampler (used as the sampler) inherits from PyTorch's Sampler class; shuffle is True in most cases; and for the batch_sampler parameter, mmdetection uses the BatchSampler class implemented in PyTorch.

(2) The data flow when reading one batch.
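For part (1), a heavily simplified, single-GPU version of the construction might look like the sketch below. `build_dataloader_sketch` and its `sampler_factory` argument are hypothetical names; mmdetection's real `build_dataloader` also handles distributed training, worker seeding and its own `collate`, and plugs in `GroupSampler` where this sketch defaults to PyTorch's `RandomSampler` as a stand-in.

```python
from torch.utils.data import DataLoader, RandomSampler


def build_dataloader_sketch(dataset, samples_per_gpu, workers_per_gpu, shuffle=True,
                            sampler_factory=None):
    """Hypothetical single-GPU sketch of how the DataLoader arguments are assembled."""
    if shuffle:
        # mmdetection would use GroupSampler(dataset, samples_per_gpu) here;
        # RandomSampler is only a stand-in so the sketch runs on its own.
        make_sampler = sampler_factory or (lambda ds, n: RandomSampler(ds))
        sampler = make_sampler(dataset, samples_per_gpu)
    else:
        sampler = None  # DataLoader then falls back to a SequentialSampler
    # A custom sampler and shuffle=True are mutually exclusive, so shuffle stays False;
    # DataLoader wraps sampler + batch_size in a BatchSampler internally.
    return DataLoader(dataset, batch_size=samples_per_gpu, sampler=sampler,
                      num_workers=workers_per_gpu, shuffle=False)


loader = build_dataloader_sketch(list(range(10)), samples_per_gpu=4, workers_per_gpu=0)
print(len(loader))  # 3
```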

2、 Instantiating the dataloader

2.1. Implementation of the GroupSampler class

For the dataset, see my earlier post on Dataset class construction. Here is the GroupSampler source code:

```python
import numpy as np
from torch.utils.data import Sampler


class GroupSampler(Sampler):

    def __init__(self, dataset, samples_per_gpu=1):
        # The dataset must provide a `flag` array: the aspect-ratio group of each image.
        assert hasattr(dataset, 'flag')
        self.dataset = dataset
        self.samples_per_gpu = samples_per_gpu
        self.flag = dataset.flag.astype(np.int64)
        # np.bincount counts how often each flag value (0 and 1) occurs,
        # i.e. the number of images in each aspect-ratio group.
        self.group_sizes = np.bincount(self.flag)
        self.num_samples = 0
        for i, size in enumerate(self.group_sizes):
            # Each group is padded up to a multiple of samples_per_gpu.
            self.num_samples += int(np.ceil(
                size / self.samples_per_gpu)) * self.samples_per_gpu

    def __iter__(self):
        indices = []
        # e.g. self.group_sizes = [942, 4096]; 942 is the number of images
        # with aspect ratio < 1.
        for i, size in enumerate(self.group_sizes):
            if size == 0:
                continue
            # Indices of the images whose flag equals i. self.flag stores the
            # aspect-ratio group of every training image, in dataset order.
            indice = np.where(self.flag == i)[0]
            assert len(indice) == size
            np.random.shuffle(indice)  # shuffle the indices within this group
            # Pad the group with randomly repeated indices so that its length
            # is a multiple of samples_per_gpu.
            num_extra = int(np.ceil(size / self.samples_per_gpu)
                            ) * self.samples_per_gpu - len(indice)
            indice = np.concatenate(
                [indice, np.random.choice(indice, num_extra)])
            indices.append(indice)
        # Merge all groups into one flat array (length 5011 in the author's example).
        indices = np.concatenate(indices)
        # Split into chunks of samples_per_gpu and shuffle the order of the chunks:
        # with samples_per_gpu=1 this gives 5011 one-element arrays.
        indices = [
            indices[i * self.samples_per_gpu:(i + 1) * self.samples_per_gpu]
            for i in np.random.permutation(
                range(len(indices) // self.samples_per_gpu))
        ]
        # Flatten the chunks back into a single list of indices.
        indices = np.concatenate(indices)
        indices = indices.astype(np.int64).tolist()
        assert len(indices) == self.num_samples
        return iter(indices)

    def __len__(self):
        return self.num_samples
```

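The key part is the __iter__ method, which makes the sampler iterable. The general idea: suppose the dataset has 5000 images, indexed 0 to 4999. The indices are first split into groups by their aspect-ratio flag, np.random.shuffle shuffles the indices inside each group, and each group is padded with a few repeated indices so its size is a multiple of samples_per_gpu. If samples_per_gpu is 2, the indices then form 2500 pairs, and every pair comes from a single aspect-ratio group. The order of these 2500 pairs is shuffled, the pairs are flattened back into the single list indices, and iter(indices) returns an iterator over it that yields one index at a time.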
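To make the grouping step concrete, the sketch below replays the steps of `GroupSampler.__iter__` on a tiny, made-up `flag` array (0 for one aspect-ratio group, 1 for the other). The function name `group_sampler_indices` and the seeded `np.random.default_rng` generator are assumptions added so the example is self-contained and reproducible; otherwise the logic follows the source above. It checks the property the class exists for: every consecutive chunk of `samples_per_gpu` indices comes from a single group.

```python
import numpy as np


def group_sampler_indices(flag, samples_per_gpu, seed=0):
    """Hypothetical re-run of GroupSampler.__iter__ with a seeded generator."""
    rng = np.random.default_rng(seed)
    flag = np.asarray(flag, dtype=np.int64)
    indices = []
    for group_id, size in enumerate(np.bincount(flag)):
        if size == 0:
            continue
        group = np.where(flag == group_id)[0]
        rng.shuffle(group)  # shuffle inside the group
        # pad the group with random repeats up to a multiple of samples_per_gpu
        num_extra = int(np.ceil(size / samples_per_gpu)) * samples_per_gpu - int(size)
        group = np.concatenate([group, rng.choice(group, num_extra)])
        indices.append(group)
    indices = np.concatenate(indices)
    # split into chunks of samples_per_gpu and shuffle the order of the chunks
    num_chunks = len(indices) // samples_per_gpu
    chunks = [indices[i * samples_per_gpu:(i + 1) * samples_per_gpu]
              for i in rng.permutation(num_chunks)]
    return np.concatenate(chunks).tolist()


flag = [0, 1, 1, 0, 1, 1, 1]  # 2 images in group 0, 5 images in group 1
order = group_sampler_indices(flag, samples_per_gpu=2)
print(order)
```

Group 1 has 5 images, so one index is repeated to reach 6; the result therefore has 8 entries, and each consecutive pair shares the same flag.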

2.2. BatchSampler class

For this part mmdetection uses PyTorch's own implementation. Here is the source code:

```python
from typing import List

from torch.utils.data import Sampler


class BatchSampler(Sampler[List[int]]):

    def __init__(self, sampler: Sampler[int], batch_size: int, drop_last: bool) -> None:
        # (The argument checks in the PyTorch source are omitted in this excerpt.)
        self.sampler = sampler
        self.batch_size = batch_size
        self.drop_last = drop_last

    def __iter__(self):
        batch = []
        for idx in self.sampler:
            batch.append(idx)
            if len(batch) == self.batch_size:
                yield batch
                batch = []
        if len(batch) > 0 and not self.drop_last:
            yield batch

    def __len__(self):
        # Can only be called if self.sampler has __len__ implemented
        # We cannot enforce this condition, so we turn off typechecking for the
        # implementation below.
        # Somewhat related: see NOTE [ Lack of Default `__len__` in Python Abstract Base Classes ]
        if self.drop_last:
            return len(self.sampler) // self.batch_size  # type: ignore
        else:
            return (len(self.sampler) + self.batch_size - 1) // self.batch_size  # type: ignore
```

As the source shows, BatchSampler is initialized with a sampler. Its __iter__ method collects indices from the sampler until it has a full batch, then uses a generator (yield batch) to return one batch of indices at a time. If drop_last is False, a final incomplete batch is yielded as well.
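A quick check of `drop_last` and the two `__len__` formulas, using PyTorch's own `BatchSampler` on ten sequential indices:

```python
from torch.utils.data import BatchSampler, SequentialSampler

sampler = SequentialSampler(range(10))
keep_last = BatchSampler(sampler, batch_size=3, drop_last=False)
dropping = BatchSampler(sampler, batch_size=3, drop_last=True)

# drop_last=False: ceil(10 / 3) = 4 batches, the last one is incomplete
print(list(keep_last), len(keep_last))  # [[0, 1, 2], [3, 4, 5], [6, 7, 8], [9]] 4
# drop_last=True: 10 // 3 = 3 batches, index 9 is dropped
print(list(dropping), len(dropping))  # [[0, 1, 2], [3, 4, 5], [6, 7, 8]] 3
```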

3、 Data flow when reading a batch

This part is hard to describe in words, so I'll illustrate it with a figure:
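The same data flow can be sketched in code for the single-process case (`num_workers=0`): the batch sampler hands out a list of indices, each index is looked up in the dataset via `__getitem__`, and `collate_fn` merges the samples into one batch. `iterate_batches` is a hypothetical, simplified model of what `DataLoader` does internally, not its actual code, and the toy dataset is an assumption.

```python
import torch
from torch.utils.data import BatchSampler, DataLoader, SequentialSampler, TensorDataset
from torch.utils.data.dataloader import default_collate

# Toy dataset: 6 samples of (feature of shape (1,), label).
dataset = TensorDataset(torch.arange(6).float().unsqueeze(1), torch.arange(6))
batch_sampler = BatchSampler(SequentialSampler(dataset), batch_size=4, drop_last=False)


def iterate_batches(dataset, batch_sampler, collate_fn=default_collate):
    """Hypothetical, simplified model of DataLoader iteration with num_workers=0."""
    for indices in batch_sampler:                # 1. a list of indices, e.g. [0, 1, 2, 3]
        samples = [dataset[i] for i in indices]  # 2. fetch each sample by index
        yield collate_fn(samples)                # 3. merge the samples into one batch


manual = list(iterate_batches(dataset, batch_sampler))
official = list(DataLoader(dataset, batch_sampler=batch_sampler))
```

Both lists contain two batches (4 samples, then 2), and the tensors match element for element.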

Summary

This article introduced how mmdetection implements dataset and sampler to construct a DataLoader, and showed how the dataloader iterates over each batch internally. If you have any questions, feel free to add me on WeChat (wulele2541612007) and I'll add you to the discussion group.

边栏推荐

猜你喜欢

leetcode:333. 最大 BST 子树

Diablo immortality database station address Diablo immortality database website

【TcaplusDB知识库】TcaplusDB推送配置介绍

![[tcapulusdb knowledge base] Introduction to tcapulusdb patrol inspection statistics](/img/67/e0112903cb3992a64bab02060dd59a.png)

[tcapulusdb knowledge base] Introduction to tcapulusdb patrol inspection statistics

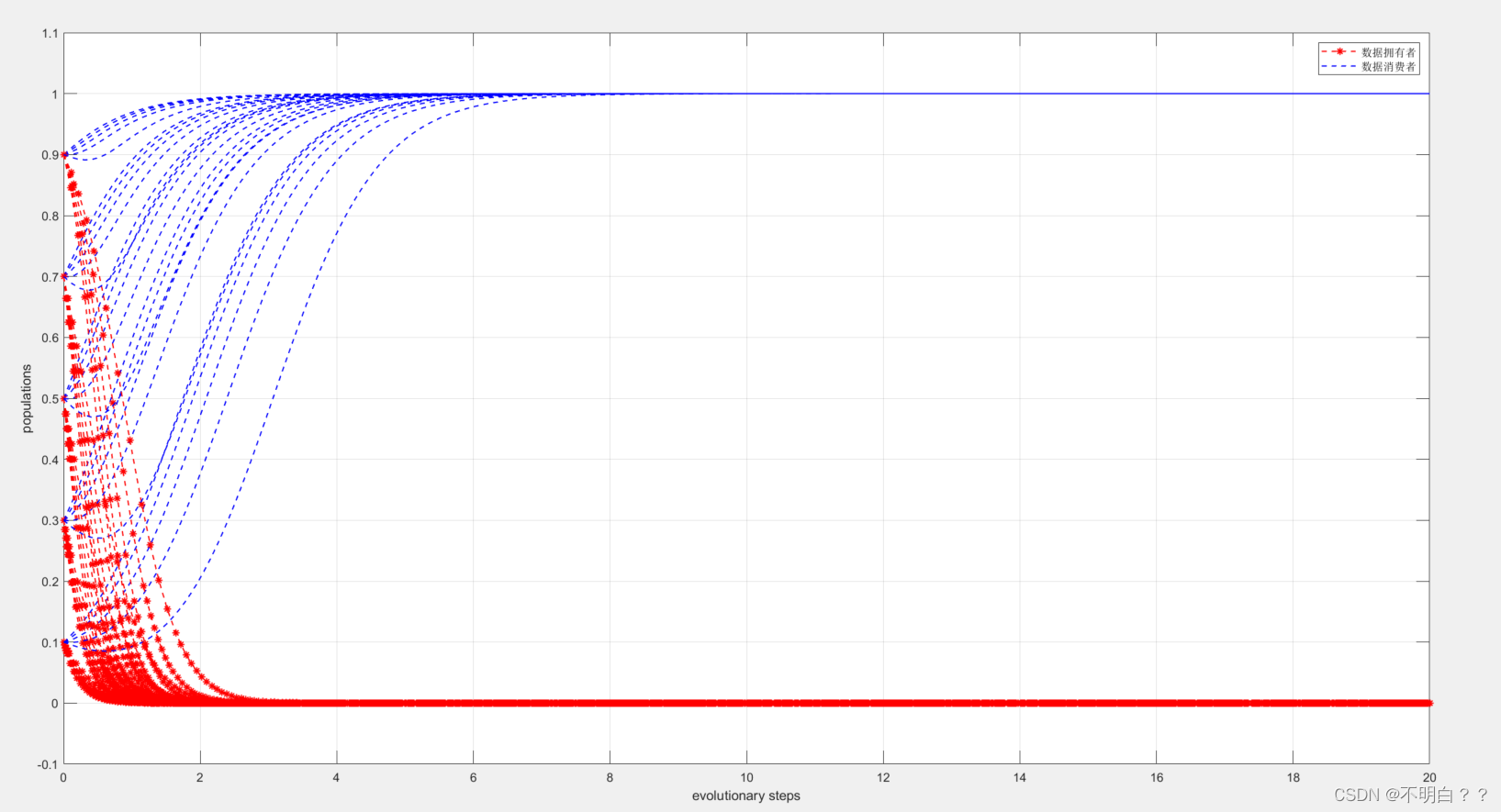

Matlab - 演化博弈论实现

Differences between disk serial number, disk ID and volume serial number

鯨會務智慧景區管理解决方案

MySQL master-slave replication solves read-write separation

【TcaplusDB知识库】TcaplusDB巡检统计介绍

1.Tornado简介&&本专栏搭建tornado项目简介

随机推荐

What about the popular state management library mobx?

Error parsing mapper XML

I have made a dating app for myself. Interested friends can have a look

Tcapulusdb Jun · industry news collection (IV)

Modify frontsortinglayer variable of spritemask

Record (II)

Pytorch 安装超简单

Visio to high quality pdf

[applet] vant sliding cell adds the function of clicking other positions to close automatically

[applet] the vant wearp radio radio radio component cannot trigger the bind:change event

Variables (automatic variables, static variables, register variables, external variables) and memory allocation of C malloc/free, calloc/realloc

(11) Tableview

String inversion

[tcapulusdb knowledge base] Introduction to the machine where the tcapulusdb viewing process is located

小微企业如何低成本搭建微官网

Sealem finance builds Web3 decentralized financial platform infrastructure

Innovation and exploration are added layer by layer, and the field model of intelligent process mining tends to be mature

TcaplusDB君 · 行业新闻汇编(三)

Apache相关的几个安全漏洞修复

Notes (II)