GCC: Graph Contrastive Coding for Graph Neural Network Pre-Training

2022-07-02 19:52:00 【yihanyifan】

Abstract

Graph representation learning has emerged as a powerful technique for solving real-world problems. Various downstream graph learning tasks, such as node classification, similarity search, and graph classification, have benefited from its recent development. However, existing graph representation learning techniques focus on domain-specific problems and train a dedicated model for each graph dataset, which is usually not transferable to out-of-domain data. Inspired by recent advances in pre-training in natural language processing and computer vision, we design Graph Contrastive Coding (GCC), a self-supervised graph neural network pre-training framework, to capture universal network topological properties across multiple networks. We design GCC's pre-training task as subgraph instance discrimination in and across networks, and leverage contrastive learning to enable graph neural networks to learn intrinsic and transferable structural representations. We conduct extensive experiments on three graph learning tasks and ten graph datasets. The results show that GCC pre-trained on a collection of diverse datasets achieves competitive or better performance compared with its task-specific, trained-from-scratch counterparts. This suggests that the pre-training and fine-tuning paradigm holds great potential for graph representation learning.

INTRODUCTION

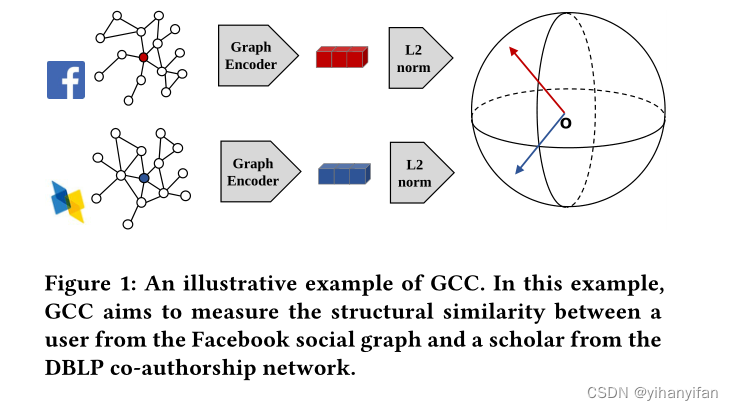

In this paper, we propose the Graph Contrastive Coding (GCC) framework to learn structural representations across graphs. Conceptually, we leverage the idea of contrastive learning to design the graph pre-training task as instance discrimination. The basic idea is to sample instances from input graphs, treat each of them as a distinct class of its own, and learn to encode and discriminate between these instances. To learn transferable structural patterns, GCC needs to answer three questions: (1) What are the instances? (2) What are the discrimination rules? (3) How are the instances encoded?

The pre-training task of this paper is subgraph instance discrimination. For each vertex, its r-ego network is taken as a subgraph instance, and GCC aims to distinguish subgraphs sampled from a given vertex from subgraphs sampled from other vertices.

Main contributions:

- We formalize the problem of GNN pre-training across multiple graphs and identify its main design challenges.

- We design the pre-training task as subgraph instance discrimination to capture universal and transferable structural patterns from multiple input graphs.

- We present the GCC framework for learning structural graph representations, which uses contrastive learning to guide the pre-training.

- We conduct extensive experiments showing that, on out-of-domain tasks, GCC offers comparable or superior performance to dedicated, task-specific models.

RELATED WORK

Related work falls into three parts: vertex similarity, contrastive learning, and graph pre-training.

Vertex Similarity

This covers neighborhood similarity, structural similarity, and attribute similarity.
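To make the difference between neighborhood similarity and structural similarity concrete, here is a toy sketch that is not from the paper. It uses the Jaccard overlap of neighbor sets as a stand-in for neighborhood similarity, and a sorted neighbor-degree signature as a crude, hypothetical stand-in for structural similarity; the helpers `jaccard` and `degree_signature` are our own names.

```python
def jaccard(adj, u, v):
    """Neighborhood similarity stand-in: fraction of shared neighbors."""
    nu, nv = adj[u], adj[v]
    union = nu | nv
    return len(nu & nv) / len(union) if union else 0.0


def degree_signature(adj, v):
    """Crude structural fingerprint: own degree plus sorted neighbor degrees."""
    return (len(adj[v]), tuple(sorted(len(adj[u]) for u in adj[v])))


# Two disjoint star graphs: centers 0 and 10 share no neighbors,
# yet they play exactly the same structural role.
adj = {
    0: {1, 2, 3}, 1: {0}, 2: {0}, 3: {0},
    10: {11, 12, 13}, 11: {10}, 12: {10}, 13: {10},
}

print(jaccard(adj, 0, 10))                                    # 0.0
print(degree_signature(adj, 0) == degree_signature(adj, 10))  # True
```

The two star centers look unrelated to a neighborhood-based measure, while their local topologies are identical. Capturing this second kind of similarity, which also carries over to vertices in other graphs, is what GCC targets.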

Graph Pre-Training

- Skip-gram based model

Several graph embedding methods inspired by word2vec, such as LINE, DeepWalk, node2vec, and metapath2vec, are mostly based on the neighborhood similarity of nodes and cannot handle out-of-sample problems (e.g., vertices or graphs not seen during training). In contrast, the GCC model proposed in this paper is based on structural similarity and can be transferred to graphs outside the training set.

- Pre-training graph neural networks

Before this work, there were some pre-training approaches for graphs, but they either rely on node or edge attributes of the graph or define specific training tasks. The model in this paper differs in two ways: 1) it does not use graph labels, so it works on general unlabeled graphs; 2) it does not involve explicit featurization or pre-defined learning tasks.

GRAPH CONTRASTIVE CODING (GCC)

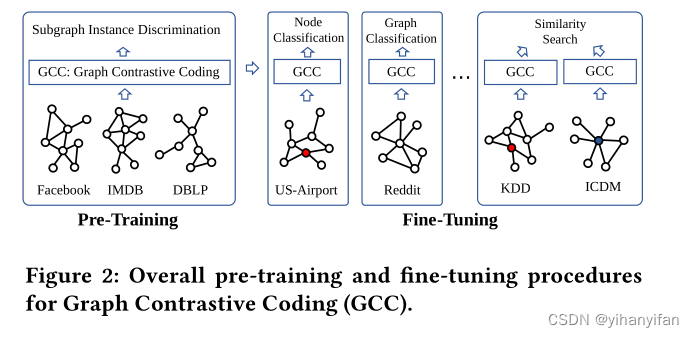

Figure 2 shows an overview of GCC's pre-training and fine-tuning stages.

The GNN Pre-Training Problem

Given a set of graphs from different domains, our goal is to pre-train a GNN model in a self-supervised manner to capture the structural patterns across these graphs. The model should benefit downstream tasks on different datasets. The underlying assumption is that different graphs share common and transferable structural patterns, such as motifs, which is evident in the network science literature. As an illustrative scenario, we pre-train a GNN model on the Facebook, IMDB, and DBLP graphs with self-supervised learning, and then apply it to the node classification task on the US-Airport network, as shown in Figure 2.

Formally, the GNN pre-training problem is to learn a function f that maps vertices to low-dimensional feature vectors, such that f has the following two properties:

- First, structural similarity: vertices with similar local network topologies are mapped close to each other in the vector space.

- Second, transferability: f is compatible with vertices and graphs unseen during pre-training.

GCC Pre-Training

Given a set of graphs, our goal is to pre-train a universal graph neural network encoder to capture the structural patterns underlying these graphs. To achieve this, we need to design appropriate self-supervised tasks and learning objectives for graph-structured data.

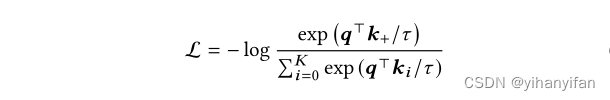

We propose using subgraph instance discrimination as the pre-training task and InfoNCE [35] as the learning objective. The pre-training task treats each subgraph instance as a distinct class of its own and learns to discriminate between these instances. The promise is that it can output representations that capture the similarities between these subgraph instances.

From the perspective of dictionary look-up, given an encoded query $q$ and a dictionary of $K+1$ encoded keys $\{k_0, \cdots, k_K\}$, contrastive learning looks up the single key (denoted by $k_+$) in the dictionary that $q$ matches. This paper adopts InfoNCE:

$$
\mathcal{L} = -\log \frac{\exp\left(q^\top k_+ / \tau\right)}{\sum_{i=0}^{K} \exp\left(q^\top k_i / \tau\right)} \qquad (1)
$$

where $\tau$ is the temperature hyperparameter. $f_q$ and $f_k$ are two GNN encoders that encode the query instance $x^q$ and each key instance $x^k$ into $d$-dimensional representations, denoted by $q = f_q(x^q)$ and $k = f_k(x^k)$.
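A minimal pure-Python sketch of the InfoNCE loss in Eq. (1) for a single query, assuming the query and keys are already L2-normalized vectors and that the positive key $k_+$ sits at index `pos_index`. The function name `info_nce` and the default $\tau = 0.07$ are illustrative choices, not values given in this post.

```python
import math


def info_nce(q, keys, pos_index=0, tau=0.07):
    """InfoNCE loss of Eq. (1) for one query q against K+1 keys.

    q and every key are lists of floats (assumed L2-normalized);
    keys[pos_index] plays the role of the positive key k_+.
    """
    logits = [sum(a * b for a, b in zip(q, k)) / tau for k in keys]
    m = max(logits)  # log-sum-exp trick: subtract the max for numerical stability
    log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
    return -(logits[pos_index] - log_denom)


q = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]  # k_0 (positive), k_1, k_2
print(info_nce(q, keys, tau=1.0))  # ≈ 0.4076
```

With a dictionary of size 3, as in the running example later in this post, `keys` holds $k_0, k_1, k_2$ with $k_0$ as the positive. The loss shrinks as $q$ aligns with $k_+$ and moves away from the other keys, and a smaller $\tau$ sharpens this contrast.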

To instantiate each component of GCC, we need to answer the following three questions:

- Q1: How to define subgraph instances in graphs?

- Q2: How to define (dis)similar instance pairs in and across graphs, i.e., for a query x^q, which key x^k is the matched one?

- Q3: What are the proper graph encoders fq and fk ?

Q1: Design (subgraph) instances in graphs.

Instances in graphs are not clearly defined. Moreover, our pre-training focuses purely on structural representations, without additional input features or attributes. This makes the natural choice of a single vertex as an instance infeasible, because a single vertex on its own cannot be used to discriminate between two vertices.

To address this, we propose using subgraphs as contrastive instances by extending each single vertex to its local structure. Specifically, for a vertex v, we define an instance as its r-ego network:

Definition 3.1 (r-ego network). For a vertex v, its r-neighbors are defined as $S_v = \{u : d(u, v) \le r\}$, where $d(u, v)$ is the shortest-path distance between u and v in graph G. The r-ego network of vertex v, denoted by $G_v$, is the subgraph induced by $S_v$.
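Definition 3.1 can be sketched with breadth-first search, assuming an undirected, unweighted graph stored as a dict of neighbor sets with integer vertex ids. `r_ego_network` is a hypothetical helper written for illustration, not part of GCC or DGL.

```python
from collections import deque


def r_ego_network(adj, v, r):
    """Return (S_v, edges of G_v): vertices within r hops of v and their induced edges."""
    dist = {v: 0}
    queue = deque([v])
    while queue:
        u = queue.popleft()
        if dist[u] == r:
            continue  # do not expand beyond radius r
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    s_v = set(dist)
    # Induced subgraph: keep every edge of G whose endpoints both lie in S_v
    # (each undirected edge stored once as (smaller id, larger id)).
    edges = {(a, b) for a in s_v for b in adj[a] if b in s_v and a < b}
    return s_v, edges


adj = {0: {1}, 1: {0, 2, 3}, 2: {1, 3, 4}, 3: {1, 2}, 4: {2}}
print(r_ego_network(adj, 0, 2))  # ({0, 1, 2, 3}, {(0, 1), (1, 2), (1, 3), (2, 3)})
```

Edge (2, 3) is kept even though BFS never traverses it, because $G_v$ is the subgraph induced by $S_v$ rather than the BFS tree; vertex 4 is excluded since it is 3 hops from vertex 0.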

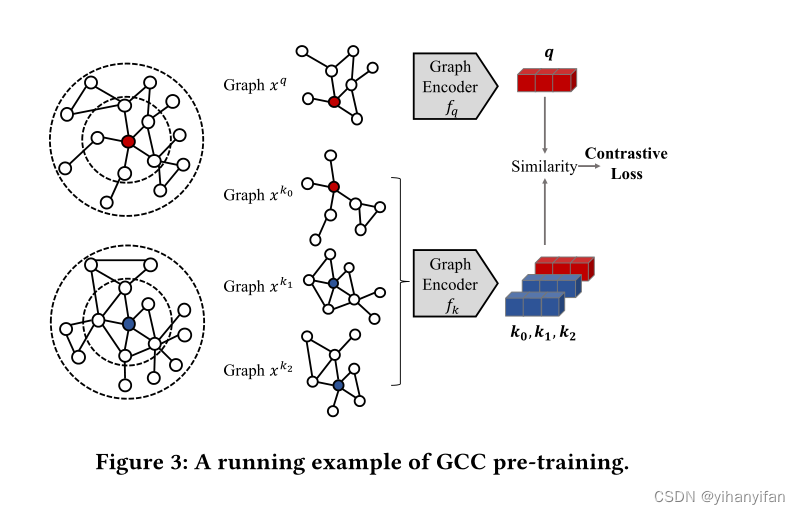

The left panel of Figure 3 shows two examples of 2-ego networks. GCC treats each r-ego network as a distinct class of its own and encourages the model to distinguish similar instances from dissimilar ones.

Q2: Define (dis)similar instances.

In GCC, we treat two random data augmentations of the same r-ego network as a similar instance pair, and define data augmentation as graph sampling [27]. Graph sampling is a technique for deriving representative subgraph samples from the original graph. Suppose we want to augment vertex v's r-ego network ($G_v$). GCC's graph sampling follows three steps: random walk with restart (RWR) [50], subgraph induction, and anonymization.

- Random walk with restart. We start a random walk on G from the ego vertex v. The walk iteratively moves to a neighbor with probability proportional to the edge weight. In addition, at each step, the walk returns to the starting vertex v with a positive probability.

- Subgraph induction. The random walk with restart collects a subset of vertices surrounding v, denoted by $\tilde{S}_v$. The subgraph $\tilde{G}_v$ induced by $\tilde{S}_v$ is then regarded as an augmented version of the r-ego network $G_v$. This step is also known as induced subgraph random walk sampling (ISRW).

- Anonymization. We anonymize the sampled graph $\tilde{G}_v$ by relabeling its vertices as $\{1, 2, \cdots, |\tilde{S}_v|\}$ in arbitrary order.

We repeat the above procedure twice to create two data augmentations, which form a similar instance pair $(x^q, x^{k_+})$. If two subgraphs are augmented from different r-ego networks, we treat them as a dissimilar instance pair $(x^q, x^k)$ with $k \neq k_+$. It is worth noting that all of the above graph operations (random walk with restart, subgraph induction, and anonymization) are available in the DGL package.
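The three augmentation steps, and how they produce positive and negative pairs, can be sketched in pure Python. This is an illustrative re-implementation under our own assumptions: a weighted adjacency dict `{u: {w: weight}}`, a restart probability of 0.3, and a cap of 8 sampled vertices. GCC itself relies on DGL's sampling utilities, whose API is not reproduced here, and all function names below are hypothetical.

```python
import random


def rwr_sample(adj, v, restart_prob=0.3, max_nodes=8, max_steps=1000, rng=None):
    """Random walk with restart from ego vertex v; returns the set of visited vertices."""
    rng = rng or random.Random()
    visited, cur = {v}, v
    for _ in range(max_steps):
        if len(visited) >= max_nodes:
            break
        nbrs = adj[cur]
        if not nbrs or rng.random() < restart_prob:
            cur = v  # restart: jump back to the ego vertex
            continue
        nodes = list(nbrs)
        # Move to a neighbor with probability proportional to the edge weight.
        cur = rng.choices(nodes, weights=[nbrs[u] for u in nodes], k=1)[0]
        visited.add(cur)
    return visited


def induce(adj, nodes):
    """Subgraph induced by `nodes`: keep only edges whose endpoints are both in `nodes`."""
    return {u: {w: wt for w, wt in adj[u].items() if w in nodes} for u in nodes}


def anonymize(sub, ego, rng):
    """Relabel vertices as 1..n in arbitrary (shuffled) order; also return the ego's new id."""
    order = list(sub)
    rng.shuffle(order)
    new_id = {u: i + 1 for i, u in enumerate(order)}
    relabeled = {new_id[u]: {new_id[w]: wt for w, wt in nbrs.items()} for u, nbrs in sub.items()}
    return relabeled, new_id[ego]


def augment(adj, v, rng):
    """One data augmentation of v's ego network: RWR -> subgraph induction -> anonymization."""
    return anonymize(induce(adj, rwr_sample(adj, v, rng=rng)), v, rng)


g = {0: {1: 1.0, 2: 1.0}, 1: {0: 1.0, 2: 1.0}, 2: {0: 1.0, 1: 1.0, 3: 1.0},
     3: {2: 1.0, 4: 1.0}, 4: {3: 1.0}}
rng = random.Random(0)
x_q = augment(g, 0, rng)      # query
x_k_pos = augment(g, 0, rng)  # positive key: another augmentation of the same ego network
x_k_neg = augment(g, 4, rng)  # negative key: augmentation of a different ego network
```

Because anonymization discards the original vertex ids, the encoder can only tell $x^q$ and $x^{k_+}$ apart from $x^{k}$ through their structure, which is what pushes it toward structural rather than identity-based representations.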

Q3: Define graph encoders.

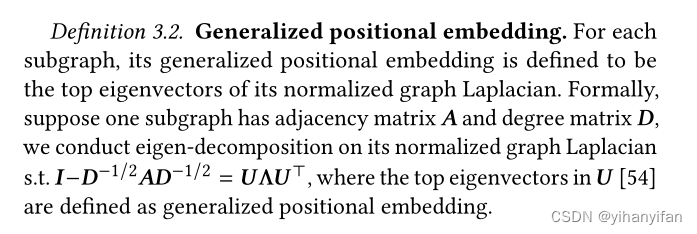

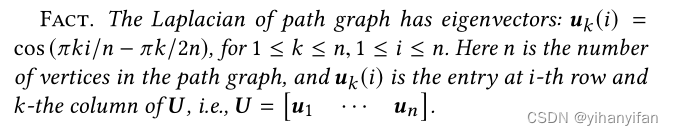

Given two sampled subgraphs x^q and x^k, GCC encodes them with two graph neural network encoders f_q and f_k. Technically, any graph neural network [4] can serve as the encoder here, and the GCC model is not sensitive to this choice. In practice, we adopt the Graph Isomorphism Network (GIN) [59], a state-of-the-art graph neural network model, as our graph encoder. Recall that our pre-training focuses on structural representations, while most GNN models require vertex features/attributes as input. To bridge this gap, we propose initializing vertex features from the graph structure of each sampled subgraph. Specifically, we define the generalized positional embedding as follows:

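Definition 3.2 (Generalized positional embedding). For each subgraph, its generalized positional embedding is defined as the top eigenvectors of its normalized graph Laplacian. Formally, for a subgraph with adjacency matrix $A$ and degree matrix $D$, we eigen-decompose its normalized graph Laplacian as $I - D^{-1/2} A D^{-1/2} = U \Lambda U^\top$, and the top eigenvectors in $U$ form the generalized positional embedding.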

The positional embedding in sequence models can be viewed as the Laplacian eigenvectors of the underlying path graph, since the Laplacian eigenvectors of a path graph are sinusoidal functions of position. This inspires us to generalize positional embedding from path graphs to arbitrary graphs. We use the normalized graph Laplacian rather than the unnormalized one because the path graph is (nearly) regular, with constant degree 2 except at its two endpoints, whereas real-world graphs are usually irregular and have skewed degree distributions. In addition to the generalized positional embedding, we also add the one-hot encoding of vertex degrees [59] and a binary indicator of the ego vertex as vertex features. After encoding by the graph encoder, the final d-dimensional output vectors are normalized by their L2-norm.
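The vertex-feature construction and the normalized encoder output described above can be sketched with NumPy. The sketch makes several assumptions of its own: a dense adjacency matrix; "top eigenvectors" read as those with the largest eigenvalues of the normalized Laplacian; zero-padding when a subgraph has fewer than k vertices; and a single simplified GIN-style layer (one linear map plus ReLU instead of a full MLP) followed by a sum readout. All function names and sizes (k = 4, degrees clipped at 8) are hypothetical.

```python
import numpy as np


def positional_embedding(adj, k):
    """Top-k eigenvectors of the normalized Laplacian I - D^{-1/2} A D^{-1/2}."""
    a = np.asarray(adj, dtype=float)
    n = a.shape[0]
    deg = a.sum(axis=1)
    inv_sqrt = np.zeros(n)
    inv_sqrt[deg > 0] = deg[deg > 0] ** -0.5  # isolated vertices get 0
    lap = np.eye(n) - inv_sqrt[:, None] * a * inv_sqrt[None, :]
    _, vecs = np.linalg.eigh(lap)    # eigenvalues in ascending order
    top = vecs[:, ::-1][:, :k]       # assumption: "top" = largest eigenvalues
    pe = np.zeros((n, k))
    pe[:, : top.shape[1]] = top      # zero-pad subgraphs with fewer than k vertices
    return pe


def vertex_features(adj, ego, k=4, max_degree=8):
    """Concatenate [positional embedding | one-hot degree | ego indicator] per vertex."""
    a = np.asarray(adj, dtype=float)
    n = a.shape[0]
    deg = np.minimum((a > 0).sum(axis=1), max_degree)  # clip large degrees
    one_hot = np.eye(max_degree + 1)[deg]
    ego_flag = np.zeros((n, 1))
    ego_flag[ego, 0] = 1.0
    return np.concatenate([positional_embedding(a, k), one_hot, ego_flag], axis=1)


def encode(adj, x, w, eps=0.0):
    """Simplified GIN-style layer, sum readout, then L2 normalization."""
    a = np.asarray(adj, dtype=float)
    h = np.maximum(((1.0 + eps) * x + a @ x) @ w, 0.0)  # (1+eps)*h_v + sum of neighbor h_u
    z = h.sum(axis=0)                                    # graph-level readout
    return z / max(np.linalg.norm(z), 1e-12)


path = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
x = vertex_features(path, ego=0)  # shape (4, 4 + 9 + 1)
w = np.random.default_rng(0).normal(size=(x.shape[1], 16))
z = encode(path, x, w)            # unit-length 16-dimensional vector
```

Eigenvector signs returned by `np.linalg.eigh` are arbitrary, so two runs on isomorphic subgraphs can yield sign-flipped embeddings; a practical implementation may need to account for this.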

A running example

Figure 3 shows a running example of GCC pre-training. For simplicity, we set the dictionary size to 3. GCC first randomly augments two subgraphs, x^q and x^{k_0}, from one 2-ego network on the left panel of Figure 3. Meanwhile, another two subgraphs, x^{k_1} and x^{k_2}, are generated from a noise distribution; in this example, they are randomly augmented from the other 2-ego network on the left panel of Figure 3. Then the two graph encoders, f_q and f_k, map the query and the three keys to low-dimensional vectors q and {k_0, k_1, k_2}. Finally, the contrastive loss in Eq. (1) encourages the model to recognize (x^q, x^{k_0}) as a similar instance pair and to distinguish it from the dissimilar instances (i.e., {x^{k_1}, x^{k_2}}).

GCC Fine-Tuning

Downstream tasks in graph learning can generally be divided into graph-level and node-level tasks, whose goal is to predict labels of graphs or nodes, respectively. For graph-level tasks, the input graph itself can be encoded by GCC to obtain its representation. For node-level tasks, a node's representation can be defined by encoding its r-ego network (or subgraphs augmented from its r-ego network).

Freezing vs. full fine-tuning

GCC offers two fine-tuning strategies for downstream tasks: freezing mode and full fine-tuning mode.

- In freezing mode, we freeze the parameters of the pre-trained graph encoder f_q and treat it as a static feature extractor; a classifier for the specific downstream task is then trained on top of the extracted features.

- In full fine-tuning mode, the graph encoder f_q, initialized with the pre-trained parameters, is trained end-to-end together with the classifier on the downstream task.
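The difference between the two modes comes down to which parameters the optimizer updates. The toy sketch below is our own illustration, not GCC code: a scalar "encoder" weight `w_enc` (standing in for the pre-trained f_q) feeds a scalar "classifier" weight `w_clf`, and both are trained by plain gradient descent on a single squared-error example. The function `train` and all numbers are hypothetical.

```python
def train(mode, steps=50, lr=0.1):
    """Toy model: y_hat = w_clf * (w_enc * x), loss = (y_hat - y)^2, one example (x=1, y=2)."""
    if mode not in ("freeze", "full"):
        raise ValueError("mode must be 'freeze' or 'full'")
    w_enc, w_clf = 0.5, 1.0  # w_enc plays the role of pre-trained encoder weights
    x, y = 1.0, 2.0
    for _ in range(steps):
        h = w_enc * x                   # encoder output (the extracted feature)
        err = w_clf * h - y
        grad_clf = 2 * err * h          # d loss / d w_clf
        grad_enc = 2 * err * w_clf * x  # d loss / d w_enc (computed before any update)
        w_clf -= lr * grad_clf          # the classifier is trained in both modes
        if mode == "full":
            w_enc -= lr * grad_enc      # only full fine-tuning also updates the encoder
    return w_enc, w_clf


print(train("freeze"))  # encoder weight stays at 0.5
print(train("full"))    # both weights move
```

In a deep learning framework, the same effect is typically obtained by excluding the encoder's parameters from the optimizer (or disabling their gradients) in freezing mode.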

GCC as a local algorithm

As a graph algorithm, GCC belongs to the category of local algorithms: because it explores local structures through random-walk-based graph sampling, it involves only local exploration of the (possibly large-scale) input network. This property makes GCC scalable to large-scale graph learning tasks and well suited to distributed computing settings.

边栏推荐

- API文档工具knife4j使用详解

- 解决方案:VS2017 无法打开源文件 stdio.h main.h 等头文件[通俗易懂]

- Zabbix5 client installation and configuration

- SQLite 3.39.0 发布,支持右外连接和全外连接

- Windows2008r2 installing php7.4.30 requires localsystem to start the application pool, otherwise 500 error fastcgi process exits unexpectedly

- HDL design peripheral tools to reduce errors and help you take off!

- SQLite 3.39.0 release supports right external connection and all external connection

- Workplace four quadrant rule: time management four quadrant and workplace communication four quadrant "suggestions collection"

- 自动化制作视频

- Postman download and installation

猜你喜欢

GCC: Graph Contrastive Coding for Graph Neural NetworkPre-Training

浏览器缓存机制概述

SQLite 3.39.0 release supports right external connection and all external connection

Build a master-slave mode cluster redis

勵志!大凉山小夥全獎直博!論文致謝看哭網友

Motivation! Big Liangshan boy a remporté le prix Zhibo! Un article de remerciement pour les internautes qui pleurent

upload-labs

JASMINER X4 1U deep disassembly reveals the secret behind high efficiency and power saving

rxjs Observable 自定义 Operator 的开发技巧

sql-labs

随机推荐

Introduction to program ape (XII) -- data storage

Start practicing calligraphy

AcWing 1125. Cattle travel problem solution (shortest path, diameter)

AcWing 383. Sightseeing problem solution (shortest circuit)

KT148A语音芯片ic的用户端自己更换语音的方法,上位机

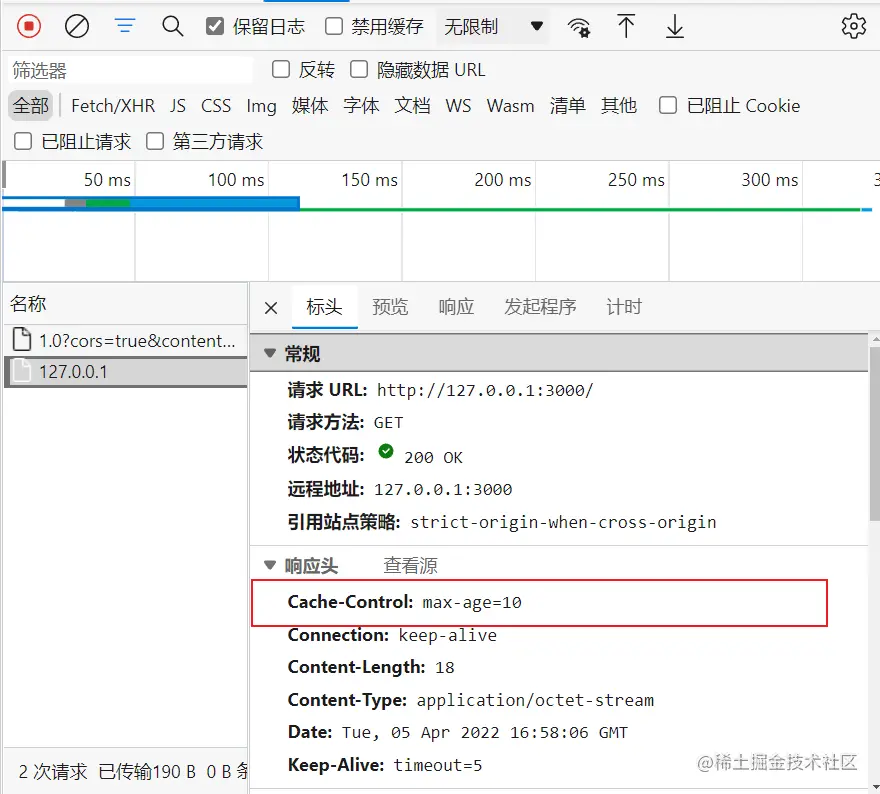

浏览器缓存机制概述

RPD product: super power squad nanny strategy

Chapter 7 - class foundation

Correspondence between pytoch version, CUDA version and graphics card driver version

451 implementation of memcpy, memmove and memset

B端电商-订单逆向流程

【Hot100】21. 合并两个有序链表

有时候只查询一行语句,执行也慢

CheckListBox control usage summary

Educational Codeforces Round 129 (Rated for Div. 2) 补题题解

自動生成VGG圖像注釋文件

KT148A语音芯片ic的开发常见问题以及描述

Workplace four quadrant rule: time management four quadrant and workplace communication four quadrant "suggestions collection"

AcWing 1127. Sweet butter solution (shortest path SPFA)

[JS] get the search parameters of URL in hash mode