Getting down to the details

Coverage fundamentals

As of Go 1.15.2, here is what you should know about how coverage is implemented under the hood:

- Go collects coverage by instrumenting the source code, rather than by setting breakpoints on a running binary. As a result, Go's test coverage collection is necessarily intrusive: it modifies the source code of the program under test. People sometimes ask whether an instrumented binary can be deployed to production. It is best not to.

- What exactly is "instrumentation"? This question matters. Pick any Go file and run `go tool cover -mode=count -var=CoverageVariableName xxxx.go`. What does the output look like?

Taking https://github.com/qiniu/goc/blob/master/goc.go as an example, the result is:

```go
package main

import "github.com/qiniu/goc/cmd"

func main() {CoverageVariableName.Count[0]++;
	cmd.Execute()
}

var CoverageVariableName = struct {
	Count   [1]uint32
	Pos     [3 * 1]uint32
	NumStmt [1]uint16
}{
	Pos: [3 * 1]uint32{
		21, 23, 0x2000d, // [0]
	},
	NumStmt: [1]uint16{
		1, // 0
	},
}
```


As you can see, the output now contains an extra variable, `CoverageVariableName`, with three key fields:

* `Count`: a `uint32` array in which each element records how many times the corresponding basic block was executed.

* `Pos`: the location of each basic block in the source file, in groups of three. Here `21` is the start line and `23` is the end line. `0x2000d` is more interesting: its high 16 bits hold the end column and its low 16 bits hold the start column. A line and a column uniquely identify a point, so the start and end points precisely describe the extent of a basic block in the source file.

* `NumStmt`: the number of statements in the corresponding basic block.
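To make the `Pos` packing concrete, here is a minimal Go sketch that decodes one triple, assuming the layout described above (high 16 bits = end column, low 16 bits = start column). `decodePos` and `blockPos` are illustrative names, not part of the Go toolchain.

```go
package main

import "fmt"

// blockPos is the decoded form of one Pos triple (illustrative type).
type blockPos struct {
	StartLine, StartCol, EndLine, EndCol uint32
}

// decodePos unpacks the three-element group for basic block i.
// The third element packs two 16-bit values: the high 16 bits hold the
// end column and the low 16 bits hold the start column.
func decodePos(pos []uint32, i int) blockPos {
	packed := pos[3*i+2]
	return blockPos{
		StartLine: pos[3*i],
		StartCol:  packed & 0xFFFF,
		EndLine:   pos[3*i+1],
		EndCol:    (packed >> 16) & 0xFFFF,
	}
}

func main() {
	// Pos array copied from the goc.go example.
	pos := []uint32{21, 23, 0x2000d}
	b := decodePos(pos, 0)
	fmt.Printf("%d.%d,%d.%d\n", b.StartLine, b.StartCol, b.EndLine, b.EndCol)
	// prints "21.13,23.2"
}
```

Decoding `0x2000d` gives start column 13 and end column 2, which matches the `21.13,23.2` range that coverprofile output reports for goc.go.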

`CoverageVariableName` places a counter in each basic block, for example `CoverageVariableName.Count[0]++`; this is what "instrumentation" means. These counters make it easy to tell whether a piece of code has executed and how many times. You have probably seen Go coverage results in coverprofile format, which look like this:

`github.com/qiniu/goc/goc.go:21.13,23.2 1 1`

Each such line is derived from a variable like `CoverageVariableName` above. Its format is:

"**file:startLine.startColumn,endLine.endColumn numberOfStatementsInBlock executionCount**"
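A coverprofile data line can be parsed back into its fields with a regular expression. The sketch below is illustrative; `parseProfileLine` and `profileBlock` are hypothetical names. A real profile also starts with a header line such as `mode: count`, which is not a data line and has to be skipped.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// profileBlock mirrors one data line of a coverprofile file:
// file:startLine.startCol,endLine.endCol numStmt count
type profileBlock struct {
	File                                 string
	StartLine, StartCol, EndLine, EndCol int
	NumStmt, Count                       int
}

// lineRe matches one data line. The file part is greedy, so the split
// happens at the last ':' that precedes the position fields.
var lineRe = regexp.MustCompile(`^(.+):(\d+)\.(\d+),(\d+)\.(\d+) (\d+) (\d+)$`)

func parseProfileLine(line string) (profileBlock, error) {
	m := lineRe.FindStringSubmatch(line)
	if m == nil {
		return profileBlock{}, fmt.Errorf("malformed profile line: %q", line)
	}
	// m[2]..m[7] are the six numeric fields, in order.
	nums := make([]int, 6)
	for i := range nums {
		n, err := strconv.Atoi(m[i+2])
		if err != nil {
			return profileBlock{}, err
		}
		nums[i] = n
	}
	return profileBlock{
		File:      m[1],
		StartLine: nums[0], StartCol: nums[1],
		EndLine: nums[2], EndCol: nums[3],
		NumStmt: nums[4], Count: nums[5],
	}, nil
}

func main() {
	b, err := parseProfileLine("github.com/qiniu/goc/goc.go:21.13,23.2 1 1")
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", b)
}
```

For real tooling, the `golang.org/x/tools/cover` package provides `ParseProfiles`, which reads a whole profile file, header included.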

Thanks to Go's powerful official toolchain, collecting and reporting unit-test coverage is easy. Integration and E2E tests are a different story. Fortunately, there is https://github.com/qiniu/goc.

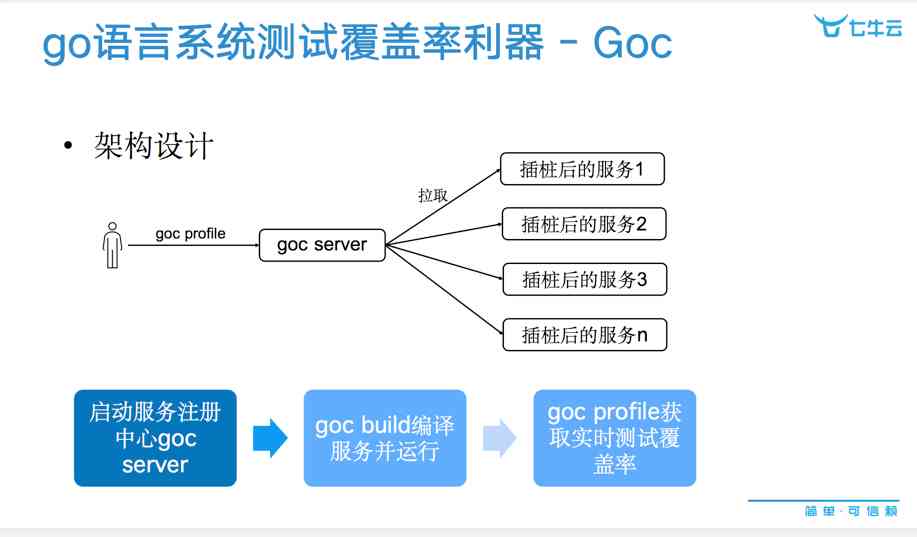

goc, a coverage collector for integration tests: how it works

For unit tests, digging into the Go source shows that `go test -cover` automatically generates a `_testmain.go` file. This file imports the instrumented packages, so it can read the instrumentation variables directly and compute test coverage. goc works on a similar principle. (PS: for why goc does not use the `go test -c -cover` approach, see https://mp.weixin.qq.com/s/DzXEXwepaouSuD2dPVloOg.)
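The arithmetic behind a statement-coverage percentage can be sketched as follows, assuming direct access to a coverage variable's `Count` and `NumStmt` arrays, as a generated test main has: a block's statements count as covered if its counter is non-zero. This illustrates the calculation only; it is not the code `go test` actually generates, and `statementCoverage` is a hypothetical name.

```go
package main

import "fmt"

// statementCoverage returns the fraction of statements whose basic block
// executed at least once. count[i] and numStmt[i] describe the same block,
// so both slices are assumed to have the same length.
func statementCoverage(count []uint32, numStmt []uint16) float64 {
	var total, covered uint64
	for i := range count {
		total += uint64(numStmt[i])
		if count[i] > 0 {
			covered += uint64(numStmt[i])
		}
	}
	if total == 0 {
		return 0 // no statements: report 0 rather than dividing by zero
	}
	return float64(covered) / float64(total)
}

func main() {
	// Hypothetical counters for a file with three basic blocks;
	// the block holding 2 statements never ran.
	count := []uint32{5, 0, 1}
	numStmt := []uint16{3, 2, 1}
	fmt.Printf("coverage: %.1f%% of statements\n", 100*statementCoverage(count, numStmt))
	// prints "coverage: 66.7% of statements"
}
```

Note that a block executed five times counts no more than one executed once; the execution counts matter only for `-mode=count` reports, not for the percentage.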

Integration testing is different: the system under test is usually a complete product made up of several long-running backend services. goc is therefore designed to automatically inject an HTTP API into each service and to manage all services under test through a registry, goc server. This lets you run `goc profile` at any time to fetch the coverage of the whole cluster in real time, which is very convenient.

For the overall structure, see :

Best practices for code coverage

Technology must serve business value, or it is just for show. Current coverage work falls mainly into the following directions:

- Measurement: in-depth measurement at the package, file, and function level all belongs here. Its value lies in feedback and discovery: showing how well things are tested, and identifying gaps or risks so they can be fixed. It is commonly used, for example, as a pipeline admission criterion or a release gate. Measurement is the foundation, but it should not stop at the numbers. The ultimate goal of coverage work is to raise test coverage, especially coverage by automated scenarios, and to keep it there. With that in mind, a valuable pattern we see in the industry is measuring incremental coverage. goc diff combined with the Prow platform offers a similar capability; if you also use Kubernetes, give it a try. Well-known commercial services of this kind include Codecov and Coveralls, although most of them are limited to unit tests.

- Precision testing: a big direction with a clear value proposition, namely building two-way feedback between the business and the code to make testing more accurate and efficient. But there is a paradox: people who understand the code probably do not need this feedback, while for people who cannot dig into the code, code-level feedback does not help much. Positioning is therefore critical. So far the industry has not produced a convincing practical example; most solutions address specific scenarios and have clear limitations.

Compared with these directions, the following best practices may be more valuable to most developers:

- High code coverage does not guarantee high product quality, but low code coverage does indicate that much of the logic is not exercised by automated tests. That usually increases the risk of bugs slipping into production and deserves attention.

- There is no universal, strict coverage standard that fits every product. Instead, business or technical leads should set their own team's standard based on domain knowledge, the importance of each code module, how often it changes, and other factors, and then build consensus around it within the team.

- Low coverage is not a disaster in itself. What matters more is proactively analyzing the uncovered parts and assessing whether the risk is acceptable. In my view, any commit that improves on the previous one deserves encouragement.

A Google blog post (see the reference below) notes that, in Google's experience, teams that value code coverage tend to foster a culture of engineering excellence. These teams think about testability from the start of product design so that testing goals are easier to reach, and these measures in turn encourage engineers to write higher-quality, more modular code.


Reference material

- Google's experience with managing coverage : https://testing.googleblog.com/2020/08/code-coverage-best-practices.html
