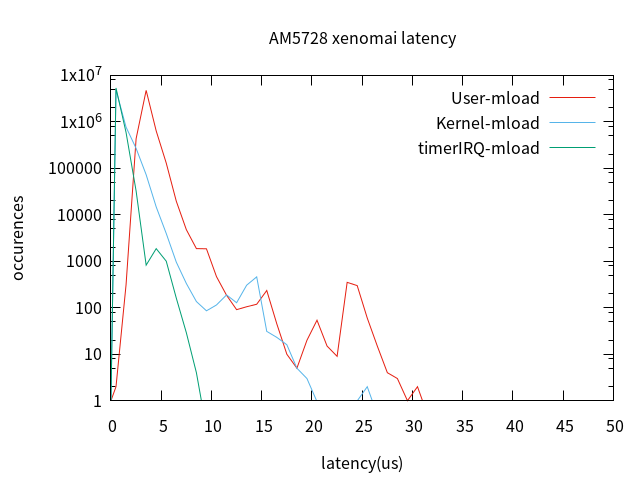

[TOC]

## 1. Overview of the problem

While testing Xenomai system latency on the AM5728, we found that memory pressure has a large impact on latency. The data under load but without memory pressure are shown below. Note: all tests in this article use the default gravity settings, the CPU used by real-time tasks is isolated with `isolcpus=1`, and the conclusions here may only hold on ARM platforms.

```shell
stress -c 16 -i 4 -d 2 --hdd-bytes 256M
```

| (µs) | user-task latency | kernel-task latency | Timer IRQ |
| ---- | ---- | ---- | ---- |
| Minimum | 0.621 | -0.795 | -1.623 |
| Average | 3.072 | 0.970 | -0.017 |
| Maximum | ==**16.133**== | ==**12.966**== | **7.736** |

Add `--vm 2 --vm-bytes 128M` to simulate memory pressure. This starts 2 workers that repeatedly allocate 128 MB, write the character 'Z' every 4096 bytes, read each one back to check that it is still 'Z', free the memory, and start over:

```shell
stress -c 16 -i 4 -d 2 --hdd-bytes 256M --vm 2 --vm-bytes 128M
```

With memory pressure added, latency was tested for 10 minutes (a 1-hour run was not done for lack of time). The results are as follows:

| (µs) | user-task latency | kernel-task latency | Timer IRQ |
| ---- | ---- | ---- | ---- |
| Minimum | 0.915 | -1.276 | -1.132 |
| Average | 3.451 | 0.637 | 0.530 |
| Maximum | ==**30.918**== | ==**25.303**== | **8.240** |
| Standard deviation | 0.626 | 0.668 | 0.345 |

With memory pressure, the maximum latency is roughly twice the maximum without memory pressure.

### 1.1 How stress generates memory pressure

`stress` has the following memory-pressure options:

> - `-m, --vm N`: fork N workers that loop on `malloc()`/`free()`
> - `--vm-bytes B`: each worker operates on B bytes of memory (default 256MB)
> - `--vm-stride B`: touch one byte every B bytes (default 4096)
> - `--vm-hang N`: sleep N seconds after `malloc` before calling `free` (default: no sleep)
> - `--vm-keep`: allocate memory only once and keep it until the program exits

These options simulate different kinds of pressure: `--vm-bytes` is the size of each allocation; `--vm-stride` touches one byte every B bytes and mainly simulates cache misses; `--vm-hang` sets how long memory is held, and therefore how often it is allocated. With `--vm 2 --vm-bytes 128M`, as used above, stress runs the 2-worker allocate/write/check/free loop described in section 1.

Looking back at our problem, the variables that can affect real-time performance are:

1. **Memory allocation size**
2. **Whether stress allocates/frees memory during the latency test**
3. **Whether the allocated memory is accessed**
4. **The stride of each memory access**

These can be further grouped into the following memory-related factors:

- Cache effects
  - High cache miss rate
  - Memory bandwidth
- Memory management
  - Memory allocation/release
  - Page faults on memory access (MMU congestion)

Below we design test parameters for each of these effects and test them.

## 2. Cache factors

Disabling the cache simulates a 100% cache miss rate, which measures the worst-case impact of cache misses, such as those caused by congestion on the memory bus and off-chip memory.
### 2.1 Not pressurized am5728 There's no testing here L1 Cache Impact of , The main test L2 cache, Configure core shutdown L2 cache, Recompile the core . ``` System Type ---> [ ] Enable the L2x0 outer cache controller ``` To confirm L2 cahe It's closed , Use the following program to verify , The application size is `SIZE` One int Memory of , Add... To an integer in memory 3, First for In steps of 1, The second one for In steps of 16( Every integer 4 Byte ,16 One 64 Byte ,cacheline It's just the size of 64). Because of the back for Loop steps are 16 , In the absence of cache When , The second one for The execution time of the loop should be the first for Of 1/16, To verify L2 Cache It's closed . Turn on L2 cache In the case of two for The execution time of is 2000ms:153ms(**13 times **), Shut down L2 cache The last two for The execution time of is 2618ms:153ms(**17 times **, Bigger than 16 The reason is that the same memory is used here , No physical memory has been allocated after the memory request , First for When looping, some page missing exception handling will be performed , So it takes a little longer ). ```c #include

#include

#include

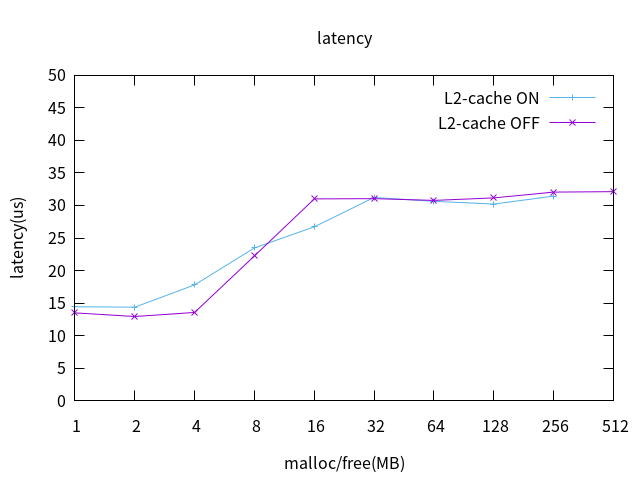

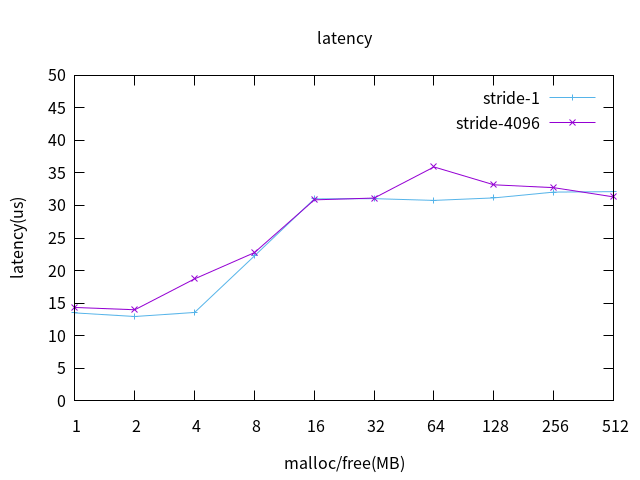

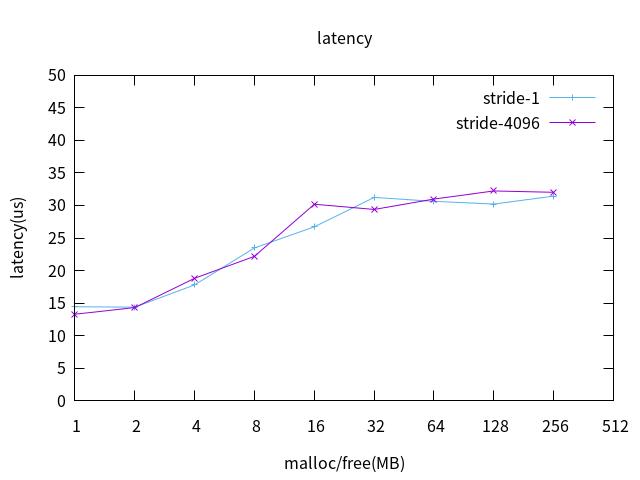

#define SIZE 64*1024*1024 int main(void) { struct timespec time_start,time_end; int i; unsigned long time; int *buff =malloc(SIZE * sizeof(int)); clock_gettime(CLOCK_MONOTONIC,&time_start); for (i = 0; i< SIZE; i ++) buff[i] += 3;// clock_gettime(CLOCK_MONOTONIC,&time_end); time = (time_end.tv_sec * 1000000000 + time_end.tv_nsec) - (time_start.tv_sec * 1000000000 + time_start.tv_nsec); printf("1:%ldms ",time/1000000); clock_gettime(CLOCK_MONOTONIC,&time_start); for (i = 0; i< SIZE; i += 16 ) buff[i] += 3;// clock_gettime(CLOCK_MONOTONIC,&time_end); time = (time_end.tv_sec * 1000000000 + time_end.tv_nsec) - (time_start.tv_sec * 1000000000 + time_start.tv_nsec); printf("64:%ldms\n",time/1000000); free(buff); return 0; } ``` Without pressure , Test off L2 Cache Before and after latency The situation ( The test time is 10min), The information is as follows : | | L2 Cache ON | L2 Cache OFF | | ----| ---- | ---- | | min | -0.879 | 2.363 | | avg | ==1.261== | ==4.174== | | max | **==8.510==** | **==13.161==** | It can be seen from the data that :** Shut down L2Cache After ,latency Overall rise . Without pressure ,L2 Cahe High hit rate , Improve code execution efficiency , It can significantly improve the real-time performance of the system , The same piece of code , Execution time is shorter **. ### 2.2 Pressurization (cpu/io) No pressure on memory , Just test CPU Computing intensive tasks and IO Under pressure , L2 Cache It's right to close or not latency Impact of . 
The pressure argument is as follows : ```shell stress -c 16 -i 4 ``` Also test 10 Minutes , The information is as follows : | | L2 ON | L2 OFF | | ---- | -------------- | -------------- | | min | 0.916 | 1.174 | | avg | ==4.134== | ==4.002== | | max | **==10.463==** | **==11.414==** | Conclusion :**CPU、IO Under pressure ,L2 Cache It doesn't seem so important whether it's closed or not ** analysis : - Without pressure ,L2 cache In an idle state , Real time tasks cache High hit rate ,latency So the average is low . When shut down L2 cache After ,100% cache Not hit , Both the average and maximum values increased . - newly added CPU、IO After the pressure ,18 A computer program snatched cpu Resources , For real-time tasks , When the real-time task preempts execution ,L2 Cache Has been filled with data from the stress calculation task , For real-time tasks, it's almost 100% Not hit . therefore CPU、IO Under pressure ,L2 Cache Close or not latency almost . ## 3. Memory management factors After 2 Section test , Whether there is... Under pressure cache Of latency Almost the same , Can be ruled out Cache. Let's test memory allocation / Release 、 Memory access page missing (MMU Congestion ) Yes latency Impact of . 
### 3.1 Memory allocation / Release stay 2 On the basis of the new memory allocation release pressure , The size of the test pair is 1M、2M、4M、8M、16M、32M、64M、128M、256M Under the memory allocation free operation of latency Information about , Every test 3 Minutes , To test MMU Congestion , Allocate memory in steps of '1' '16' '32' '64' '128' '256' '512' '1024' '2048' '4096' Memory access for , The test code is as follows : ```shell #!/bin/bash test_time=300 #5min base_stride=1 VM_MAXSIZE=1024 STRIDE_MAXSIZE=('1' '16' '32' '64' '128' '256' '512' '1024' '2048' '4096') trap 'killall stress' SIGKILL for((vm_size = 64;vm_size <= VM_MAXSIZE; vm_size = vm_size * 2));do for stride in ${STRIDE_MAXSIZE[@]};do stress -c 16 -i 4 -m 2 --vm-bytes ${vm_size}M --vm-stride $stride & echo "--------${vm_size}-${stride}------------" latency -p 100 -s -g ${vm_size}-${stride} -T $test_time -q killall stress >/dev/null sleep 1 stress -c 16 -i 4 -m 2 --vm-bytes ${vm_size}M --vm-stride $stride --vm-keep & echo "--------${vm_size}-${stride}-keep----------------" latency -p 100 -s -g ${vm_size}-${stride}-keep -T $test_time -q killall stress >/dev/null sleep 1 stress -c 16 -i 4 -m 2 --vm-bytes ${vm_size}M --vm-stride $stride --vm-hang 2 & echo "--------${vm_size}-${stride}-hang----------------" latency -p 100 -s -g ${vm_size}-${stride}-hang -T $test_time -q killall stress >/dev/null sleep 1 done done ``` L2 Cache Turn on , When allocating and releasing memory of different sizes latency Data drawing , The horizontal axis is the memory size of each request for memory pressure tasks , The longitudinal axis is at this pressure latency Maximum , as follows :  As you can see from the picture above , The two inflection points are respectively 4MB,16MB , Distribute / Free memory in 4MB Within latency Not affected , Keep it at a normal level ,** The memory released by allocation is larger than 16MB When latency Reach 30us above , In line with the question **. 
Thus we can see that :** Ordinary Linux The memory allocation release of tasks can affect real-time performance .** ### 3.2 MMU Congestion According to the core page size 4K, stay 3.1 The foundation of New argument above `–vm-stride 4096`, To make stress Every time you access memory They're all missing pages , To simulate MMU Congestion ,L2 cache off The test data are plotted as follows :  L2 cache on The test data are plotted as follows :  MMU Congestion has little effect on real-time performance . ### 4 Summary After the separation of various factors, the test shows that , After applying memory pressure , The poor real-time performance is due to the allocation and release of memory , It shows that the platform is executed on cpu0 It's ordinary Linux A task's request to release memory will affect execution in cpu1 Real time performance of real-time tasks on . am5728 There are only two levels cache, L2 Cache stay CPU Idle time can significantly improve real-time performance , but CPU When the load is too heavy L2 Cache Change in and out frequently , Not good for real-time tasks Cahe hit , Almost no real-time help . For more information, refer to another article in this blog :[ It's good for improving xenomai Some real-time configuration suggestions ](https://www.cnblogs.com/wsg1100/p/1273072

Copyright notice

This article was created by [itread01]; please include the original link when reposting. Thanks.