
Lecture 4: Back Propagation

2022-08-05 05:25:00 【A long way to go】

Class exercises

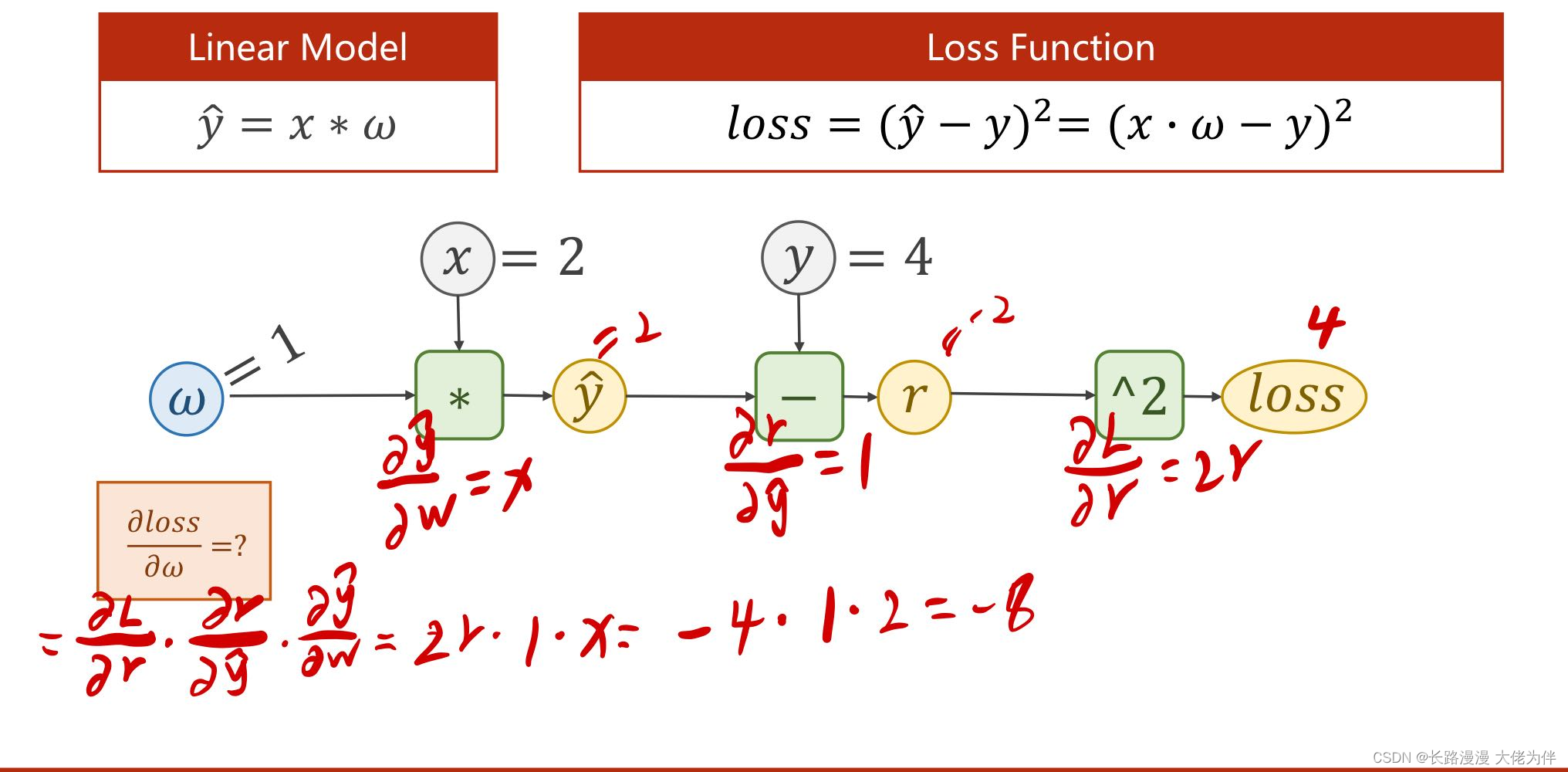

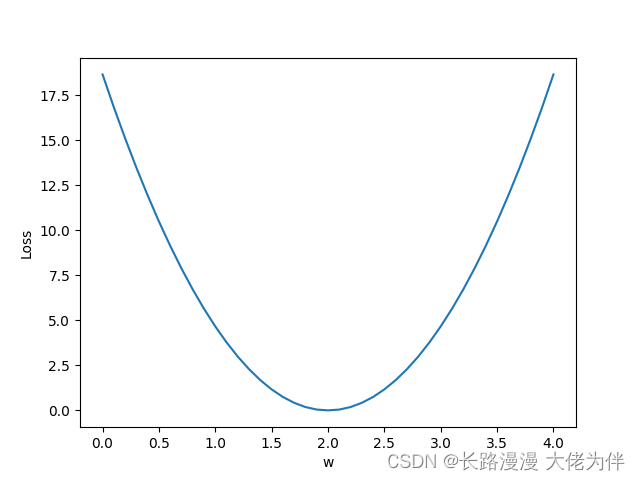

Manually derive the back-propagation process for the linear model y = w*x with loss = (ŷ - y)², given the data point x = 2, y = 4.
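A worked version of this derivation, assuming a starting weight of w = 1 (the initial value the code below also uses; the exercise figure may use a different one). Writing r = ŷ - y for the residual, the forward pass computes ŷ, r and the loss, and the backward pass multiplies the local derivatives along the chain:

$$
\begin{aligned}
\hat{y} &= w \cdot x = 1 \cdot 2 = 2 \\
r &= \hat{y} - y = 2 - 4 = -2 \\
\text{loss} &= r^2 = (-2)^2 = 4 \\
\frac{\partial\,\text{loss}}{\partial w} &= \frac{\partial\,\text{loss}}{\partial r} \cdot \frac{\partial r}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial w} = 2r \cdot 1 \cdot x = 2(-2)(1)(2) = -8
\end{aligned}
$$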

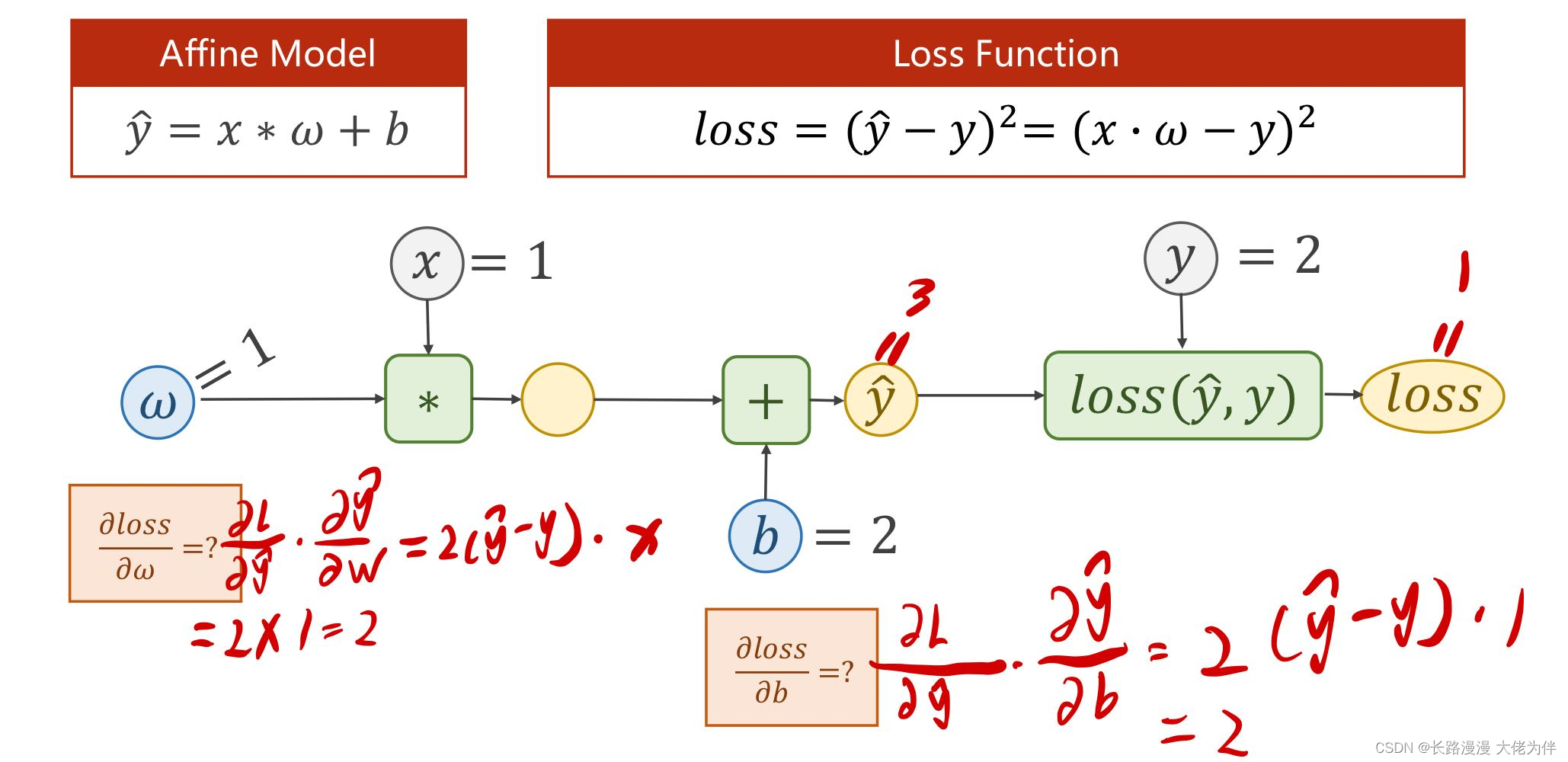

Manually derive the back-propagation process for the linear model y = w*x + b with loss = (ŷ - y)², given the data point x = 1, y = 2.
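A worked version with a bias term, assuming hypothetical starting values w = 1 and b = 2 (the exercise figure may use others). The only new step compared with y = w*x is that ∂ŷ/∂b = 1, so the bias receives the upstream gradient 2r unchanged:

$$
\begin{aligned}
\hat{y} &= w \cdot x + b = 1 \cdot 1 + 2 = 3 \\
r &= \hat{y} - y = 3 - 2 = 1 \\
\text{loss} &= r^2 = 1 \\
\frac{\partial\,\text{loss}}{\partial w} &= 2r \cdot x = 2 \cdot 1 \cdot 1 = 2 \\
\frac{\partial\,\text{loss}}{\partial b} &= 2r \cdot 1 = 2
\end{aligned}
$$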

Code implementation of back propagation for the linear model y = w*x with loss = (ŷ - y)², trained on the dataset x = [1, 2, 3], y = [2, 4, 6]

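Note: w.grad is not reset to 0 automatically. Each call to backward() adds the new gradient to it, so it must be cleared manually after every update.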
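A small sketch of why the reset is needed, using a hypothetical single sample x = 2, y = 4 and w = 1: calling backward() twice without clearing w.grad leaves the sum of both gradients in it, not the latest one.

```python
import torch

# Hypothetical demo: model y = w * x with one sample x = 2, y = 4
w = torch.tensor([1.0], requires_grad=True)
x, y = 2.0, 4.0

grads = []
for step in range(2):
    loss = (x * w - y) ** 2  # a fresh graph is built on each forward pass
    loss.backward()          # ADDS d(loss)/dw into w.grad
    grads.append(w.grad.item())

print(grads)  # [-8.0, -16.0]: the second value is accumulated, not recomputed

w.grad.zero_()  # manual reset, as the training loop does after each update
print(w.grad.item())  # 0.0
```

The single-sample gradient is -8, matching the hand derivation 2x(xw - y) = 2·2·(2 - 4).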

The code is as follows:

```python
import torch

x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = torch.Tensor([1.0])  # initial value of w is 1.0
w.requires_grad = True   # gradients must be computed for w

learning_rate = 0.03  # learning rate (0.03 reproduces the output shown below)


def forward(x):
    return x * w  # w is a Tensor, so the result is a Tensor


def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) * (y_pred - y)


print("predict (before training)", 4, forward(4).item())

for epoch in range(100):
    for x, y in zip(x_data, y_data):
        l = loss(x, y)  # forward: compute the loss; l is a tensor, and computing it builds the computation graph
        l.backward()    # backward: compute grad for every Tensor whose requires_grad is True
        print('\tgrad:', x, y, w.grad.item())
        w.data = w.data - learning_rate * w.grad.data  # update via .data; note that grad is also a tensor
        w.grad.data.zero_()  # after the update, reset the grad to zero
    print('progress:', epoch, l.item())  # read the loss with l.item(); using the tensor l directly would extend the graph

print("predict (after training)", 4, forward(4).item())
```
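Assigning through `w.data` works, but current PyTorch code more commonly performs the update inside `torch.no_grad()`, so autograd does not record it. A sketch of the same loop in that style, assuming a learning rate of 0.03 and dropping the per-sample prints:

```python
import torch

x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = torch.tensor([1.0], requires_grad=True)  # leaf tensor tracked by autograd
learning_rate = 0.03  # assumption: same value as in the loop above


def forward(x):
    return x * w


def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) ** 2


for epoch in range(100):
    for x, y in zip(x_data, y_data):
        l = loss(x, y)  # forward pass builds the graph
        l.backward()    # accumulates d(l)/dw into w.grad
        with torch.no_grad():
            w -= learning_rate * w.grad  # in-place update, not recorded by autograd
        w.grad.zero_()  # clear the accumulated gradient before the next sample

print("predict (after training)", 4, forward(4).item())
```

This variant prints only the final prediction; the log that follows comes from the original loop.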

Output:

```
predict (before training) 4 4.0
	grad: 1.0 2.0 -2.0
	grad: 2.0 4.0 -7.520000457763672
	grad: 3.0 6.0 -12.859201431274414
progress: 0 4.593307018280029
	grad: 1.0 2.0 -0.6572480201721191
	grad: 2.0 4.0 -2.47125244140625
	grad: 3.0 6.0 -4.225842475891113
progress: 1 0.4960484504699707
	grad: 1.0 2.0 -0.2159874439239502
	grad: 2.0 4.0 -0.8121128082275391
	grad: 3.0 6.0 -1.388711929321289
progress: 2 0.05357002466917038
	grad: 1.0 2.0 -0.07097864151000977
	grad: 2.0 4.0 -0.2668800354003906
	grad: 3.0 6.0 -0.45636463165283203
...
progress: 13 9.094947017729282e-13
	grad: 1.0 2.0 -4.76837158203125e-07
	grad: 2.0 4.0 -1.9073486328125e-06
	grad: 3.0 6.0 -5.7220458984375e-06
progress: 14 9.094947017729282e-13
	grad: 1.0 2.0 -2.384185791015625e-07
	grad: 2.0 4.0 -9.5367431640625e-07
	grad: 3.0 6.0 -2.86102294921875e-06
progress: 15 2.2737367544323206e-13
	grad: 1.0 2.0 0.0
	grad: 2.0 4.0 0.0
	grad: 3.0 6.0 0.0
progress: 16 0.0
	grad: 1.0 2.0 0.0
	grad: 2.0 4.0 0.0
	grad: 3.0 6.0 0.0
...
progress: 97 0.0
	grad: 1.0 2.0 0.0
	grad: 2.0 4.0 0.0
	grad: 3.0 6.0 0.0
progress: 98 0.0
	grad: 1.0 2.0 0.0
	grad: 2.0 4.0 0.0
	grad: 3.0 6.0 0.0
progress: 99 0.0
predict (after training) 4 8.0
```
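The logged gradients can be checked without autograd: for loss = (x·w - y)², the derivative is ∂loss/∂w = 2x(x·w - y). The plain-Python sketch below replays the first two epochs of the same per-sample update with that formula. The log is consistent with a learning rate of 0.03 (for example, the second gradient -7.52 requires w = 1.06 after the first step), so that value is assumed here.

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = 1.0               # same initial weight as the PyTorch code
learning_rate = 0.03  # assumption: the value that reproduces the logged gradients

grads = []
for epoch in range(2):
    for x, y in zip(x_data, y_data):
        grad = 2 * x * (x * w - y)  # analytic d/dw of (x*w - y)**2
        grads.append(grad)
        w -= learning_rate * grad   # update after every sample, as in the loop above

for g in grads:
    print(f"{g:.6f}")
```

The printed values agree with the first six `grad:` lines of the log up to float32 rounding.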
