
Fully understanding the MESI cache coherence protocol

2022-06-29 10:35:00 【Xuanguoguo】

Contents

MESI cache coherence protocol

MESI: a coherence protocol for multi-level caches on multicore CPUs

MESI optimizations and the problems they introduce

MESI cache coherence protocol

MESI: a coherence protocol for multi-level caches on multicore CPUs

On a multicore CPU there are multiple first-level caches, one per core. How do we keep the data in these caches consistent with one another, so that the system's data does not get corrupted? This is where the cache coherence protocol MESI comes in.

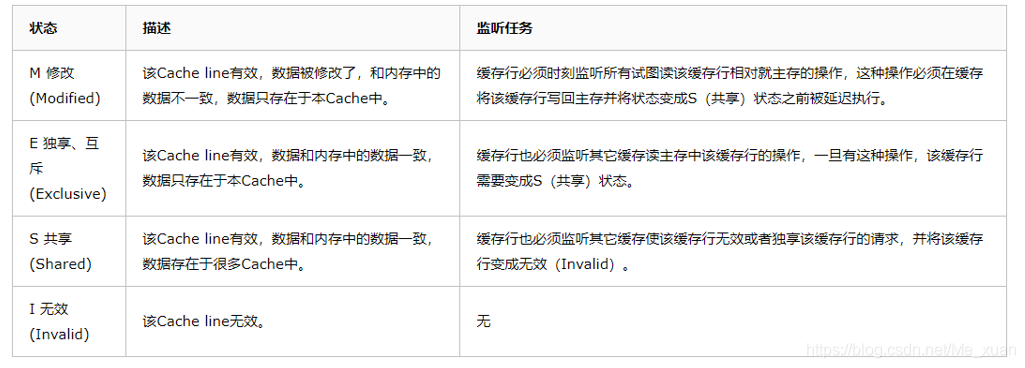

MESI protocol cache states

MESI is named after the first letters of its four states. Every cache line (the unit in which a cache stores data) is in one of 4 states, which can be encoded in 2 bits: Modified, Exclusive, Shared and Invalid.
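A minimal sketch of the four states as a Python enum. The concrete 2-bit values are an arbitrary encoding chosen only for illustration (the text says just that two bits suffice); the comments give the usual meaning of each state.

```python
from enum import Enum


class MESI(Enum):
    """The four MESI states of a cache line.

    The 2-bit values are an illustrative encoding, not what any
    particular CPU uses.
    """
    MODIFIED = 0b11   # only cached copy, dirty (differs from main memory)
    EXCLUSIVE = 0b10  # only cached copy, clean (matches main memory)
    SHARED = 0b01     # clean copy that other caches may also hold
    INVALID = 0b00    # line holds no usable data

    @property
    def letter(self) -> str:
        # First letter of the state name, which gives the protocol its name
        return self.name[0]


print("".join(s.letter for s in MESI))  # MESI
```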

Note:

The M and E states are always precise: they match the true state of the cache line. The S state, however, may be imprecise. If one cache discards (invalidates) a line it holds in the S state, another cache may in fact now be the only holder of that line, yet it does not promote its copy to E. This is because caches do not broadcast a notification when they invalidate a line, and because no cache keeps track of how many copies of the line exist, it could not tell (even with such a notification) whether it now holds the line exclusively.

In this sense the E state is a speculative optimization: if a CPU wants to modify a cache line that is in the S state, it needs a bus transaction to turn every other copy of that line to I (Invalid), whereas modifying a line in the E state needs no bus transaction at all.
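Both notes above can be checked with a toy snooping model of a single cache line. This is a minimal sketch, not real hardware: `Bus`, `read`, `write` and `evict` are hypothetical names, every miss or invalidation counts as exactly one bus transaction, and eviction is silent, as the note on the S state assumes.

```python
from enum import Enum


class State(Enum):
    M = "Modified"
    E = "Exclusive"
    S = "Shared"
    I = "Invalid"


class Bus:
    """Toy snooping bus tracking one cache line across several caches."""

    def __init__(self, n_caches: int) -> None:
        self.states = [State.I] * n_caches
        self.transactions = 0  # bus transactions issued so far

    def _others(self, cpu: int) -> list[int]:
        return [i for i in range(len(self.states)) if i != cpu]

    def read(self, cpu: int) -> None:
        if self.states[cpu] is not State.I:
            return  # read hit in M, E or S: served locally, no bus traffic
        self.transactions += 1  # read miss goes on the bus
        holders = [i for i in self._others(cpu) if self.states[i] is not State.I]
        for i in holders:
            self.states[i] = State.S  # an M holder would write back first
        self.states[cpu] = State.S if holders else State.E

    def write(self, cpu: int) -> None:
        if self.states[cpu] in (State.M, State.E):
            self.states[cpu] = State.M  # E -> M needs no bus transaction
            return
        self.transactions += 1  # S or I: one invalidate / read-for-ownership
        for i in self._others(cpu):
            self.states[i] = State.I
        self.states[cpu] = State.M

    def evict(self, cpu: int) -> None:
        # Drop the line without telling the other caches (a real M line is
        # written back first). Nobody else learns that a copy disappeared.
        self.states[cpu] = State.I


bus = Bus(2)
bus.read(0)                # CPU0 is the only holder -> E
bus.read(1)                # both CPUs now hold the line -> S, S
bus.evict(1)               # CPU1 silently drops its copy
print(bus.states[0].name)  # S: CPU0 is not promoted to E
bus.write(0)               # so this write still needs a bus transaction
print(bus.transactions)    # 3
```

Had CPU0 still been in E, the same write would have cost no bus transaction, which is exactly the saving the E state speculates on.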

A cache line is typically 64 bytes. If the data being operated on does not fit in a single cache line, the CPU cannot rely on locking the cache line and has to fall back to a bus lock.
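Whether an access fits in one line is simple address arithmetic. A minimal sketch assuming 64-byte lines; `line_index` and `spans_two_lines` are hypothetical helpers. On x86 a locked access that crosses a line boundary in this way is commonly called a "split lock".

```python
CACHE_LINE = 64  # assumed line size in bytes


def line_index(addr: int) -> int:
    """Index of the cache line that contains byte address `addr`."""
    return addr // CACHE_LINE


def spans_two_lines(addr: int, size: int) -> bool:
    """True if a `size`-byte access (size >= 1) at `addr` crosses a line boundary."""
    return line_index(addr) != line_index(addr + size - 1)


print(spans_two_lines(0, 8))   # False: bytes 0..7 lie in line 0
print(spans_two_lines(60, 8))  # True: bytes 60..67 span lines 0 and 1
```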

Bus lock: every modification has to go through the bus. For example, if CPU A and CPU B update the same value at the same time, each must first acquire the bus lock, and the bus handles the requests one at a time. While the lock is held, other CPUs cannot access memory over the bus, so efficiency is very low.
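A toy cost model of why a bus lock is slow, under loudly simplifying assumptions: each locked read-modify-write takes one "round"; a bus lock lets only one of them proceed per round regardless of address; a cache-line lock (the MESI-based alternative the text contrasts it with) only serializes operations on the same line. Both function names are hypothetical.

```python
from collections import Counter


def bus_lock_rounds(ops: list[tuple[str, int]]) -> int:
    """Bus lock: every locked op holds the whole bus, so all ops run one by one."""
    return len(ops)


def cache_lock_rounds(ops: list[tuple[str, int]]) -> int:
    """Cache-line lock: only ops on the same line have to wait for each other."""
    per_line = Counter(line for _cpu, line in ops)
    return max(per_line.values(), default=0)


# (cpu, cache line) pairs for locked operations issued at the same moment
ops = [("A", 0), ("B", 0), ("C", 1), ("D", 2)]
print(bus_lock_rounds(ops))    # 4
print(cache_lock_rounds(ops))  # 2
```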

Here is a rough flow chart of the process:

MESI optimizations and the problems they introduce

Delivering coherence messages takes time, which introduces delay whenever a line changes state. When one cache changes the state of a line, the other caches must receive the message, complete their own transitions and send back responses; during that whole interval the CPU waits for every response. This blocking can lead to a variety of performance and stability problems.

Avoiding CPU stalls during state transitions

Store buffers

For example, to modify data in its local cache, a CPU must send an invalidate (I) notification to every other CPU whose cache holds that data, and then wait for their acknowledgements. Waiting for the acknowledgements stalls the processor and reduces its performance, because the wait takes far longer than executing a single instruction.

The store buffer solution

To avoid wasting CPU cycles this way, store buffers were introduced. The processor places the value it wants to write into the store buffer and moves on to other work. Only when all invalidate acknowledgements (Invalidate Acknowledge) have been received is the data finally committed.
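A minimal sketch of the store buffer described above, assuming one core, a FIFO buffer, and invalidate acknowledgements delivered by explicit `ack` calls (the class and method names are hypothetical). `store` returns immediately instead of stalling; a write reaches the cache only after every sharer has acknowledged it, and entries commit in program order.

```python
from collections import deque


class StoreBuffer:
    """Toy FIFO store buffer: writes wait here until every invalidate is acknowledged."""

    def __init__(self) -> None:
        self.cache: dict[str, int] = {}  # values committed to this core's cache
        self.pending: deque[tuple[str, int, set[int]]] = deque()

    def store(self, addr: str, value: int, sharers: set[int]) -> None:
        # Queue the write and return at once instead of stalling the core.
        # `sharers` are the other cores whose invalidate acks are still needed.
        self.pending.append((addr, value, set(sharers)))
        self._drain()

    def ack(self, core: int, addr: str) -> None:
        # An invalidate acknowledgement for `addr` arrived from `core`.
        for a, _value, waiting in self.pending:
            if a == addr and core in waiting:
                waiting.discard(core)
                break
        self._drain()

    def _drain(self) -> None:
        # Commit in program order: only the oldest entry may leave the buffer.
        while self.pending and not self.pending[0][2]:
            addr, value, _ = self.pending.popleft()
            self.cache[addr] = value


core0 = StoreBuffer()
core0.store("x", 1, sharers={1, 2})  # returns immediately; x not yet committed
print(core0.cache.get("x"))          # None
core0.ack(1, "x")
core0.ack(2, "x")                    # last ack arrives, so the write commits
print(core0.cache.get("x"))          # 1
```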

Store buffers introduce two risks.

First, the processor may try to read a value that is still sitting in the store buffer and has not yet been committed. The solution is called store forwarding: when a load executes and the store buffer holds a value for that address, the load returns that value directly.
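Store forwarding amounts to checking the core's own store buffer, newest entry first, before falling back to the cache. A minimal sketch with a hypothetical `load` function; note that forwarding only serves the core that issued the store, and other cores still cannot see the buffered value.

```python
def load(addr: str, store_buffer: list[tuple[str, int]], cache: dict[str, int]) -> int:
    """Load with store forwarding: the newest buffered store to `addr` wins."""
    for buffered_addr, value in reversed(store_buffer):  # newest entry first
        if buffered_addr == addr:
            return value  # forwarded from this core's store buffer
    return cache[addr]  # no pending store to addr: read the cache


cache = {"a": 0, "b": 0}
store_buffer = [("a", 1), ("a", 2)]  # two uncommitted stores to a
print(load("a", store_buffer, cache))  # 2
print(load("b", store_buffer, cache))  # 0
```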

Second, there is no guarantee of when a buffered store will actually complete, so other CPUs may observe it later than the program order suggests.
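The second risk is easiest to see with the classic store-buffering litmus test. The sketch below (toy model, hypothetical names) enumerates every interleaving of two CPUs whose stores sit in a private buffer until a later flush event. With buffering, both loads can return 0, an outcome that is impossible when each store reaches memory immediately; x86 processors are documented to allow this store-then-load reordering.

```python
from itertools import permutations


def outcomes(buffered: bool) -> set[tuple[int, int]]:
    """All (r0, r1) results of the store-buffering test.

    CPU0: x = 1; r0 = y        CPU1: y = 1; r1 = x
    Event S<c> puts CPU c's store in its private buffer, F<c> flushes that
    buffer to shared memory, and L<c> is CPU c's load.
    """
    writes, reads = ("x", "y"), ("y", "x")  # CPU c stores writes[c], loads reads[c]
    results = set()
    for order in permutations(["S0", "L0", "F0", "S1", "L1", "F1"]):
        pos = {event: i for i, event in enumerate(order)}
        # Program order: a CPU stores before it loads; a store is flushed after it is made.
        valid = all(pos[f"S{c}"] < pos[f"L{c}"] and pos[f"S{c}"] < pos[f"F{c}"] for c in (0, 1))
        if not buffered:
            # No store buffer: the store reaches memory before anything else happens.
            valid = valid and all(pos[f"F{c}"] == pos[f"S{c}"] + 1 for c in (0, 1))
        if not valid:
            continue
        memory = {"x": 0, "y": 0}
        buffers: list[dict[str, int]] = [{}, {}]
        regs = [0, 0]
        for event in order:
            kind, c = event[0], int(event[1])
            if kind == "S":
                buffers[c][writes[c]] = 1
            elif kind == "F":
                memory.update(buffers[c])
                buffers[c].clear()
            else:  # "L": load, with store forwarding from the CPU's own buffer
                regs[c] = buffers[c].get(reads[c], memory[reads[c]])
        results.add((regs[0], regs[1]))
    return results


print(sorted(outcomes(buffered=False)))  # [(0, 1), (1, 0), (1, 1)]
print(sorted(outcomes(buffered=True)))   # [(0, 0), (0, 1), (1, 0), (1, 1)]
```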

A colleague asked me an interesting question: since we already have cache coherence, why do we still need locks?

I thought it over, reviewed the relevant documentation, and came up with roughly the following points.

1. Instruction reordering. Compiler and virtual machine optimizations can reorder instructions; taking a lock ensures the correctness and ordering of the program.

2. Hardware compatibility. After all, the cache coherence protocol is defined internally by the CPU.

3. Timeliness. The cache coherence protocol keeps cached copies coherent, but it does not guarantee when an update will be propagated to main memory and to the other CPUs. (I think this is the main reason.)
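Points 1 and 3 can be illustrated with the store-buffering test: two CPUs each store to one variable and then load the other. In this sketch a lock is modeled, as a simplifying assumption, as a full fence that forces the CPU's store buffer to be flushed before its next load (on x86, lock-prefixed instructions have this effect on ordering). The function name is hypothetical. Without the fence, r0 = r1 = 0 is reachable; with it, that outcome disappears.

```python
from itertools import permutations


def fenced_outcomes(fence: bool) -> set[tuple[int, int]]:
    """(r0, r1) results of  CPU0: x = 1; [fence]; r0 = y  ||  CPU1: y = 1; [fence]; r1 = x.

    S<c>: CPU c's store enters its private store buffer.
    F<c>: that buffer is flushed to shared memory.
    L<c>: CPU c's load (with store forwarding).
    A fence, standing in for a lock here, forces F<c> to happen before L<c>.
    """
    writes, reads = ("x", "y"), ("y", "x")
    results = set()
    for order in permutations(["S0", "L0", "F0", "S1", "L1", "F1"]):
        pos = {event: i for i, event in enumerate(order)}
        valid = all(
            pos[f"S{c}"] < pos[f"L{c}"]
            and pos[f"S{c}"] < pos[f"F{c}"]
            and (not fence or pos[f"F{c}"] < pos[f"L{c}"])
            for c in (0, 1)
        )
        if not valid:
            continue
        memory = {"x": 0, "y": 0}
        buffers: list[dict[str, int]] = [{}, {}]
        regs = [0, 0]
        for event in order:
            kind, c = event[0], int(event[1])
            if kind == "S":
                buffers[c][writes[c]] = 1
            elif kind == "F":
                memory.update(buffers[c])
                buffers[c].clear()
            else:  # load: own buffer first, then shared memory
                regs[c] = buffers[c].get(reads[c], memory[reads[c]])
        results.add((regs[0], regs[1]))
    return results


print(sorted(fenced_outcomes(fence=False)))  # [(0, 0), (0, 1), (1, 0), (1, 1)]
print(sorted(fenced_outcomes(fence=True)))   # [(0, 1), (1, 0), (1, 1)]
```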

What is your understanding? Feel free to share. Thank you.
