当前位置:网站首页>85.4% mIOU! NVIDIA: using multi-scale attention for semantic segmentation, the code is open source!

85.4% mIOU! NVIDIA: using multi-scale attention for semantic segmentation, the code is open source!

2022-07-05 01:36:00 【Xiaobai learns vision】

Thesis download and source code

link :https://pan.baidu.com/s/17oy5JBnmDDOtKasfPrWNiQ Extraction code :lk5z

Reading guide

come from NVIDIA Of SOTA Semantic segmentation article , Open source code .

The paper :https://arxiv.org/abs/2005.10821

There is an important technology , Usually used for automatic driving 、 Medical imaging , Even zoom the virtual background :“ Semantic segmentation . This marks the pixels in the image as belonging to N One of the classes (N Is any number of classes ) The process of , These classes can be like cars 、 road 、 Things like people or trees . In terms of medical images , Categories correspond to different organs or anatomical structures .

NVIDIA Research Semantic segmentation is being studied , Because it is a widely applicable technology . We also believe that , Improved semantic segmentation techniques may also help improve many other intensive prediction tasks , Such as optical flow prediction ( Predict the motion of objects ), Image super-resolution , wait .

We develop a new method of semantic segmentation , On two common benchmarks :Cityscapes and Mapillary Vistas Up to SOTA Result .IOU It's Cross and compare , It is a measure of the prediction accuracy of semantic segmentation .

stay Cityscapes in , This method achieves 85.4 IOU, Considering the closeness between these scores , This is a considerable improvement over other methods .

stay Mapillary On , A single model is used to achieve 61.1 IOU, Compared with other models, the optimal result of model integration is 58.7.

Predicted results

Research process

In order to develop this new method , We considered which specific areas of the image need to be improved . chart 2 It shows the two biggest failure modes of the current semantic segmentation model : Detail error and class confusion .

chart 2, An example is given to illustrate the common error patterns of semantic segmentation due to scale . In the first line , Shrinking 0.5x In the image of , The thin mailboxes are divided inconsistently , But it is expanding 2.0x In the image of , Better prediction . In the second line , The bigger road / The isolation zone area is at a low resolution (0.5x) The lower segmentation effect is better

In this case , There are two problems : Details are confused with classes .

- The details of the mailbox in the first picture are 2 The best resolution is obtained in the prediction of multiple scales , But in 0.5 The resolution at multiple scales is very poor .

- Compared with median segmentation , stay 0.5x The rough prediction of roads under the scale is better than that under 2x Better under the scale , stay 2x There is class confusion under the scale .

Our solution can perform much better on these two problems , Class confusion rarely occurs , The prediction of details is also more smooth and consistent .

After identifying these error patterns , The team experimented with many different strategies , Including different network backbones ( for example ,WiderResnet-38、EfficientNet-B4、xcepase -71), And different partition decoders ( for example ,DeeperLab). We decided to adopt HRNet As the backbone of the network ,RMI As the main loss function .

HRNet It has been proved to be very suitable for computer vision tasks , Because it keeps the network better than before WiderResnet38 high 2 Multiple resolution representation .RMI Losses provide a way to obtain structural losses without resorting to things like conditional random fields .HRNet and RMI Loss can help solve the confusion of details and classes .

To further address the main error patterns , We have innovated two methods : Multiscale attention and automatic tagging .

Multiscale attention

In the computer vision model , Usually, the method of multi-scale reasoning is used to obtain the best result . Multiscale images run in the network , And combine the results using average pooling .

Use average pooling as a combination strategy , Regard all dimensions as equally important . However , Fine details are usually best predicted at higher scales , Large objects are better predicted at lower scales , On a lower scale , The receptive field of the network can better understand the scene .

Learning how to combine multi-scale prediction at the pixel level can help solve this problem . There has been research on this strategy before ,Chen Etc. Attention to Scale It's the closest . In this method , Learn all scales of attention at the same time . We call it explicit method , As shown in the figure below .

chart 3,Chen Their explicit method is to learn a set of fixed scale intensive attention mask, Combine them to form the final semantic prediction .

suffer Chen Method inspiration , We propose a multi-scale attention model , The model also learned to predict a dense mask, So as to combine MULTI-SCALE PREDICTION . But in this method , We learned a relative attention mask, It is used to pay attention between one scale and the next higher scale , Pictured 4 Shown . We call it hierarchical method .

chart 4, Our hierarchical multiscale attention method . Upper figure : In the process of training , Our model learned to predict the attention between two adjacent scale pairs . The figure below : Reasoning is in a chain / Complete in a hierarchical way , In order to combine multiple prediction scales . Low scale attention determines the contribution of the next higher scale .

The main benefits of this method are as follows :

- Theoretical training cost ratio Chen The method reduces about 4x.

- Training is only carried out on a paired scale , Reasoning is flexible , It can be carried out on any number of scales .

surface 3, Hierarchical multi-scale attention method and Mapillary Comparison of other methods on the validation set . The network structure is DeepLab V3+ and ResNet-50 The trunk . Evaluation scale : Scale for multi-scale evaluation .FLOPS: The relative of network for training flops. This method obtains the best verification set score , But compared with the explicit method , The amount of calculation is only medium .

chart 5 Some examples of our method are shown , And learned attention mask. For the details of the mailbox in the left picture , We pay little attention to 0.5x The forecast , But yes. 2.0x The prediction of scale is very concerned . contrary , For the very large road in the image on the right / Isolation zone area , Attention mechanisms learn to make the best use of lower scales (0.5x), And less use of the wrong 2.0x forecast .

chart 5, Semantic and attention prediction of two different scenarios . The scene on the left illustrates a fine detail , The scene on the right illustrates a large area segmentation problem . White indicates a higher value ( near 1.0). The sum of attention values of a given pixel on all scales is 1.0. Left : The small mailbox beside the road is 2 Get the best resolution at a scale of times , Attention has successfully focused on this scale and not on other scales , This can be done from 2 It is proved in the white of the mailbox in the double attention image . Right picture : Big road / The isolation zone area is 0.5x The prediction effect under the scale is the best , And the attention of this area has indeed successfully focused on 0.5x On the scale .

Automatic marking

A common method to improve the results of semantic segmentation of urban landscape is to use a large number of coarse labeled data . This data is about the baseline fine annotation data 7 times . In the past Cityscapes Upper SOTA Method will use bold labels , Or use rough labeled data to pre train the network , Or mix it with fine-grained data .

However , Rough labeling is a challenge , Because they are noisy and inaccurate .ground truth The thick label is shown in the figure 6 As shown in the for “ Original thick label ”.

chart 6, Examples of automatic generation of coarse image labels . Automatically generated thick labels ( Right ) Provides more than the original ground truth Thick label ( in ) Finer label details . This finer label improves the distribution of labels , Because now small and large objects have representation , Not only on major big items .

Inspired by recent work , We will use automatic annotation as a method , To produce richer labels , To fill in ground truth The label of the thick label is blank . The automatic labels we generated show better details than the baseline thick labels , Pictured 6 Shown . We think , By filling the data distribution gap of long tailed classes , This helps to generalize .

Use the naive method of automatic marking , For example, use multi class probability from teacher network to guide students , It will cost a lot of disk space . by 20,000 Zhang straddle 19 Category 、 The resolution is 1920×1080 It takes about 2tb Storage space . The biggest impact of such a big price will be to reduce the training performance .

We use the hard threshold method instead of the soft threshold method to reduce the space occupied by the generated tag from 2TB Greatly reduced to 600mb. In this method , Teachers predict probability > 0.5 It works , A prediction with a lower probability is considered “ Ignore ” class . surface 4 It shows the benefits of adding coarse data to fine data and using the fused data set to train new students .

surface 4, The baseline method shown here uses HRNet-OCR As the backbone and our multi-scale attention method . We compared the two models : use ground truth Fine label + ground truth Rough label training to ground truth Fine label + auto- Thick label ( Our approach ). The method of using automatic coarsening labels improves the baseline 0.9 Of IOU.

chart 7, Examples of automatic generation of coarse image labels

The final details

The model uses PyTorch In the framework of 4 individual DGX On the node fp16 Tensor kernel for automatic hybrid accuracy training .

The paper :https://arxiv.org/abs/2005.10821

The original English text :https://developer.nvidia.com/blog/using-multi-scale-attention-for-semantic-segmentation/

边栏推荐

- Global and Chinese market of portable CNC cutting machines 2022-2028: Research Report on technology, participants, trends, market size and share

- ROS command line tool

- 小程序容器技术与物联网 IoT 可以碰撞出什么样的火花

- 如果消费互联网比喻成「湖泊」的话,产业互联网则是广阔的「海洋」

- Heartless sword English translation of Xi Murong's youth without complaint

- When the industrial Internet era is truly developed and improved, it will witness the birth of giants in every scene

- Global and Chinese market of network connected IC card smart water meters 2022-2028: Research Report on technology, participants, trends, market size and share

- Wechat applet: independent background with distribution function, Yuelao office blind box for making friends

- Common bit operation skills of C speech

- PowerShell: use PowerShell behind the proxy server

猜你喜欢

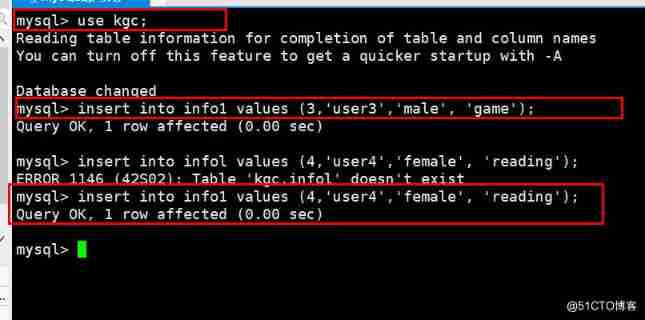

MySQL backup and recovery + experiment

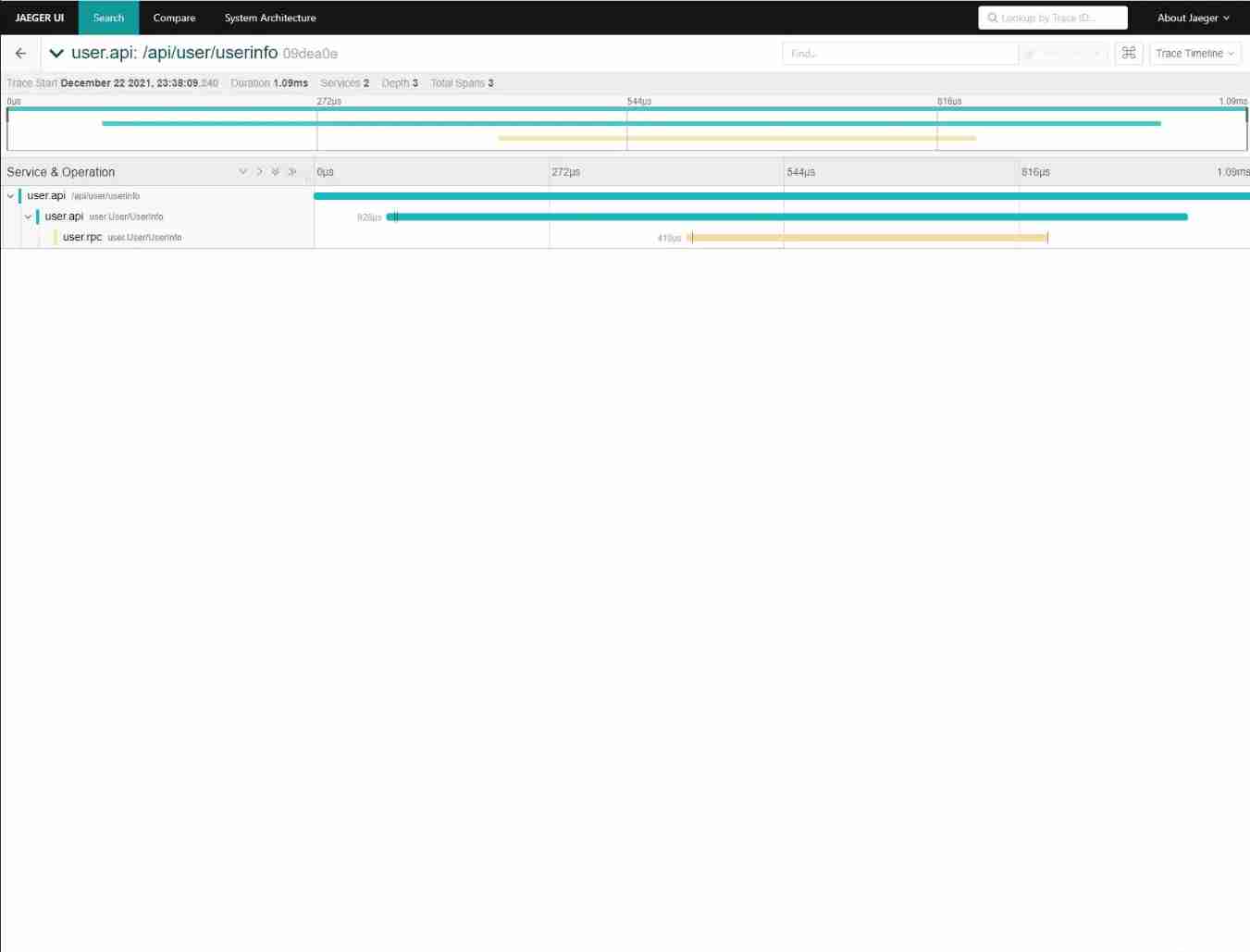

Take you ten days to easily complete the go micro service series (IX. link tracking)

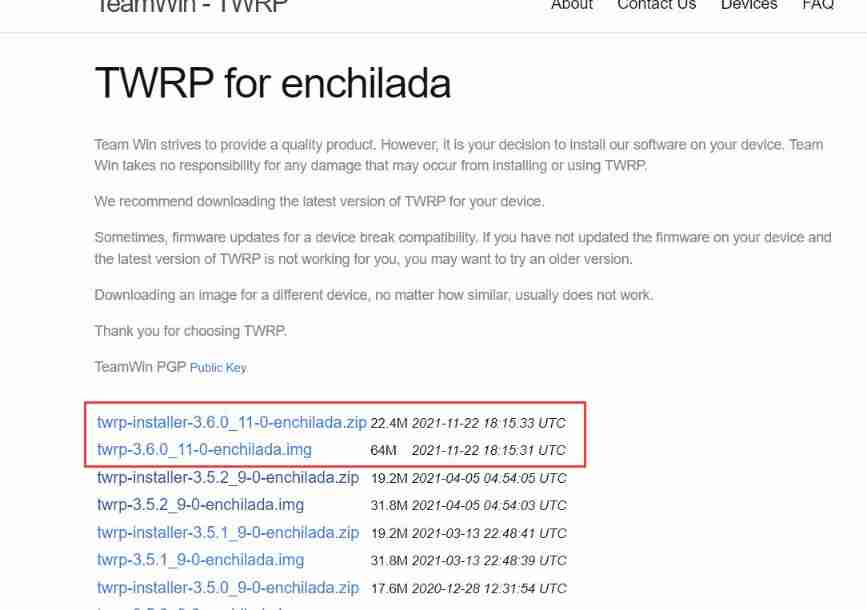

One plus six brushes into Kali nethunter

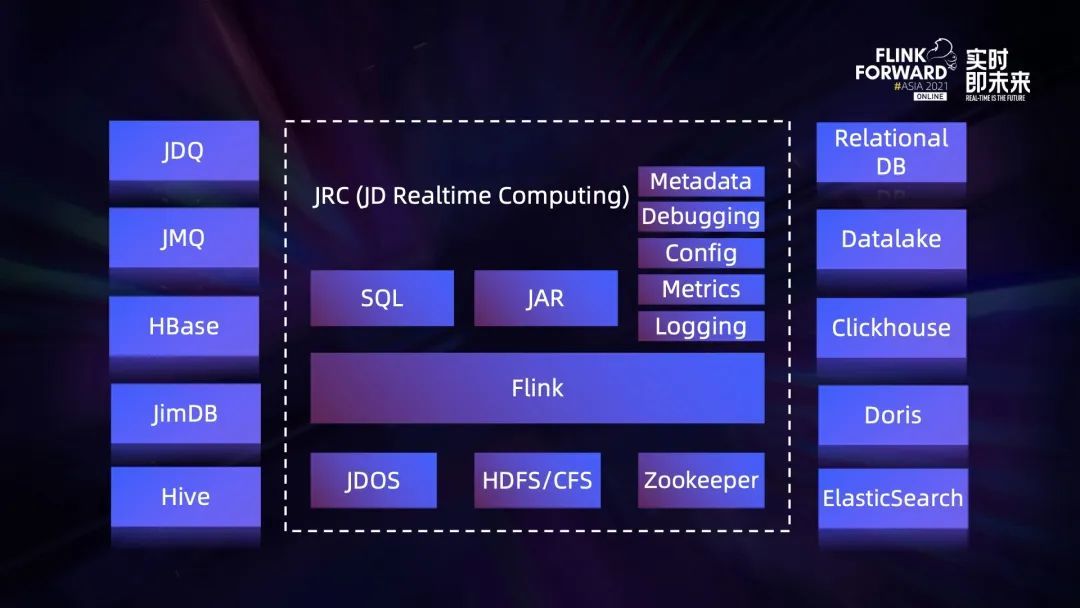

Exploration and practice of integration of streaming and wholesale in jd.com

The perfect car for successful people: BMW X7! Superior performance, excellent comfort and safety

Expansion operator: the family is so separated

Database performance optimization tool

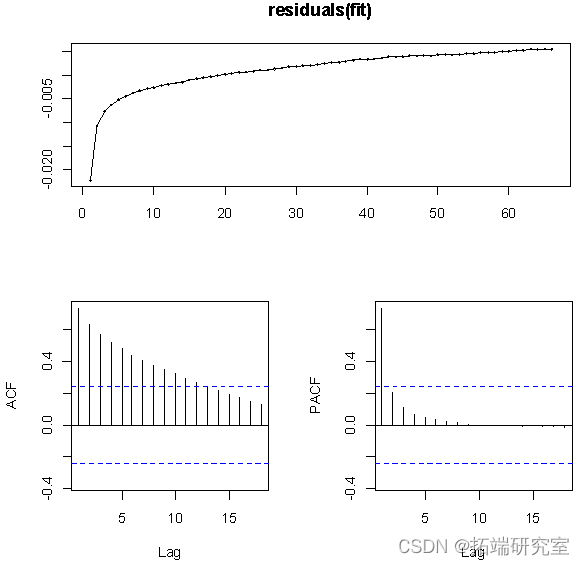

R语言用logistic逻辑回归和AFRIMA、ARIMA时间序列模型预测世界人口

![[wave modeling 1] theoretical analysis and MATLAB simulation of wave modeling](/img/c4/46663f64b97e7b25d7222de7025f59.png)

[wave modeling 1] theoretical analysis and MATLAB simulation of wave modeling

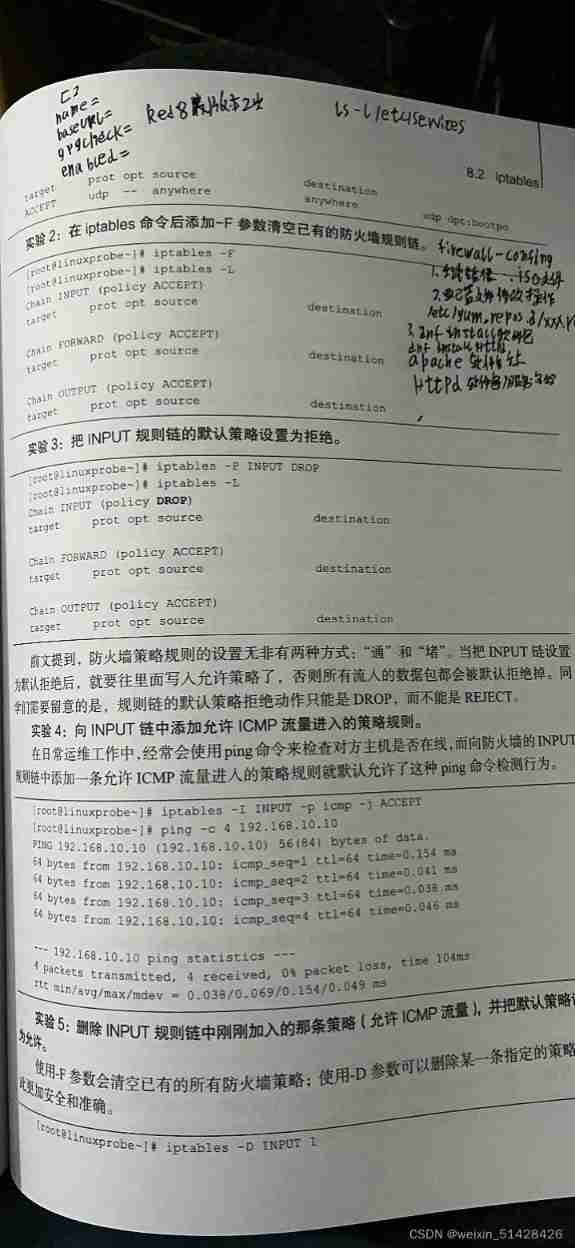

Hedhat firewall

随机推荐

线上故障突突突?如何紧急诊断、排查与恢复

[wave modeling 3] three dimensional random real wave modeling and wave generator modeling matlab simulation

Global and Chinese markets for stratospheric UAV payloads 2022-2028: Research Report on technology, participants, trends, market size and share

Main window in QT application

Win:使用 Shadow Mode 查看远程用户的桌面会话

Remote control service

Kibana installation and configuration

C basic knowledge review (Part 3 of 4)

Are you still writing the TS type code

Yyds dry goods inventory kubernetes management business configuration methods? (08)

After reading the average code written by Microsoft God, I realized that I was still too young

Global and Chinese market of nutrient analyzer 2022-2028: Research Report on technology, participants, trends, market size and share

Actual combat simulation │ JWT login authentication

One plus six brushes into Kali nethunter

PHP Basics - detailed explanation of DES encryption and decryption in PHP

Global and Chinese market of portable CNC cutting machines 2022-2028: Research Report on technology, participants, trends, market size and share

Global and Chinese market of optical densitometers 2022-2028: Research Report on technology, participants, trends, market size and share

Delaying wages to force people to leave, and the layoffs of small Internet companies are a little too much!

Unified blog writing environment

[development of large e-commerce projects] performance pressure test - Optimization - impact of middleware on performance -40