
[Point Cloud Processing Intensive Paper Reading, Frontier Edition 8] PointView-GCN: 3D Shape Classification with Multi-View Point Clouds

2022-07-03 09:07:00 【LingbinBu】

Pointview-GCN: 3D Shape Classification With Multi-View Point Clouds

Abstract

- Partial point clouds are captured from multiple viewpoints around an object and used for 3D shape classification.

- PointView-GCN uses multi-level Graph Convolutional Networks (GCNs) to aggregate the shape features of single-view point clouds in a fine-to-coarse manner, thereby encoding both the geometric information of the object and the relationships among multiple views.

- The code (PyTorch version) is available at https://github.com/SMohammadi89/PointView-GCN

1. Introduction

- Point clouds captured in real life are partial: each one is obtained from a particular viewpoint.

- Graph Convolutional Networks (GCNs) have proven effective at encoding semantic relationships for feature aggregation.

- PointView-GCN is a network with multi-level GCNs that aggregates shape features from the partial point clouds of multiple views, mining semantic relationships between adjacent views in a fine-to-coarse manner.

- Skip connections are added between GCNs at different levels.

- A new dataset is proposed that contains single-view (partial) point clouds.

2. Related work

MVCNN uses max-pooling to aggregate features from different views into a global shape descriptor. Its drawback is that it ignores the semantic relationships among the multi-view data.

View-GCN is a view-based graph convolutional network that captures structural relationships in the data. However, all of the above methods aggregate features from images rather than from point clouds.

3. Method

- First, capture multiple partial point clouds of the object from different viewpoints.

- Use a backbone network to extract the features of each partial point cloud.

- Build a graph $G=\{v_i\}_{i \in N}$ with $N$ nodes, where node $v_i$ is represented by $F_i$, the shape feature of the $i$-th single-view point cloud. $\mathbf{F}=\{F_i\}_{i \in N}$ collects all node features of $G$, $v_p$ denotes a neighbor of $v_i$ (found by kNN), and $\mathbf{A}$ is the adjacency matrix of $G$.
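The graph construction above can be sketched in NumPy. This is a minimal illustration, not the authors' code: it assumes the kNN neighbors of $v_i$ are found by Euclidean distance between the camera positions, that every node keeps a self-loop, and that $\mathbf{A}$ is row-normalized. These choices are assumptions made for the sketch.

```python
import numpy as np

def knn_adjacency(viewpoints, k):
    """Row-normalized kNN adjacency matrix A over N viewpoint positions (N, 3).

    Assumptions (not from the paper): neighbors are chosen by Euclidean
    distance between camera positions, a self-loop is added, and each row
    is normalized to sum to 1.
    """
    viewpoints = np.asarray(viewpoints, dtype=float)
    n = viewpoints.shape[0]
    # Pairwise squared distances, shape (N, N)
    diff = viewpoints[:, None, :] - viewpoints[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)
    np.fill_diagonal(dist2, np.inf)  # exclude v_i itself from its neighbor search
    A = np.zeros((n, n))
    for i in range(n):
        nbrs = np.argsort(dist2[i])[:k]  # indices of the k nearest views v_p
        A[i, nbrs] = 1.0
    A += np.eye(n)  # self-loop so each node keeps its own feature
    return A / A.sum(axis=1, keepdims=True)
```

For four cameras on a line at x = 0, 1, 3, 6 and k = 1, each row of `A` averages a node with its single closest neighbor.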

The feature aggregation of the proposed network consists of multiple levels of GCNs, as shown in Figure 2. The optimal number of levels $M$ is determined experimentally.

At the $j$-th level, graph convolution is performed on the input graph $G^j$ to update the node features $F_i$. An optional view-sampling step follows, producing a smaller graph $G^{j+1}$ that contains the most important view information of $G^{j}$.

$G^{j+1}$ is then fed as input to the $(j+1)$-th level.

3.1. Graph convolution and Selective View Sampling

At the $j$-th level, the following three operations are performed:

- local graph convolution

- non-local message passing

- selective view sampling (SVS)

Local graph convolution

Consider node $v_i^j$ and its neighbors. Local graph convolution updates the feature of $v_i^j$ as follows:

$$\tilde{\mathbf{F}}^{j}=\mathcal{L}\left(\mathbf{A}^{j} \mathbf{F}^{j} \mathbf{W}^{j} ; \alpha^{j}\right)$$

where $\mathcal{L}(\cdot)$ denotes the LeakyReLU operation, and $\alpha^{j}$ and $\mathbf{W}^{j}$ are the learnable weights.
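The local graph convolution formula maps directly to a few matrix products. In this sketch $\alpha^{j}$ is assumed to play the role of the LeakyReLU negative slope and is passed in as a fixed number; in the actual network it would be learned.

```python
import numpy as np

def leaky_relu(x, alpha):
    # Keep positive entries, scale negative entries by alpha
    return np.where(x > 0, x, alpha * x)

def local_graph_conv(A, F, W, alpha=0.2):
    """F~^j = LeakyReLU(A^j F^j W^j; alpha^j).

    A: (N, N) adjacency, F: (N, d_in) node features, W: (d_in, d_out) weights.
    Assumption: alpha is the LeakyReLU negative slope (fixed here for illustration).
    """
    return leaky_relu(A @ F @ W, alpha)
```

Multiplying by `A` first mixes each node's feature with its neighbors' features; `W` then projects the result, and the nonlinearity is applied elementwise.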

Non-local message passing

Next, non-local message passing updates $\tilde{\mathbf{F}}^{j}$ by taking into account the long-range relationships among all nodes in $G^{j}$. Each node $v_i$ first passes its state to the edges connecting it with the other nodes:

$$m_{i, p}^{j}=\mathcal{R}\left(\tilde{F}_{i}^{j}, \tilde{F}_{p}^{j} ; \beta^{j}\right), \quad i, p \in N^{j}$$

where $\mathcal{R}(\cdot)$ is the relation function between a pair of views and $\beta^{j}$ are its parameters.

The node features are then updated by:

$$\tilde{F}_{i}^{j}=\mathcal{C}\left(\tilde{F}_{i}^{j}, \sum_{p=1, p \neq i}^{N^{j}} m_{i, p}^{j} ; \gamma^{j}\right)$$

where $\mathcal{C}(\cdot)$ is the combination function and $\gamma^{j}$ are its parameters.

After non-local message passing, each node feature has been updated with information from the relationships across the whole graph.
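The two message-passing formulas can be sketched as follows. The notes do not specify $\mathcal{R}$ or $\mathcal{C}$, so this sketch assumes each is a single linear layer on the concatenated inputs followed by ReLU, with weight matrices `beta` and `gamma`; treat these forms as hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def non_local_message_passing(F, beta, gamma):
    """One round of non-local message passing over ALL node pairs.

    F: (N, d) node features F~^j.
    beta: (2d, d) weights of the relation function R (assumed: linear + ReLU).
    gamma: (2d, d) weights of the combination function C (same assumption).
    """
    n = F.shape[0]
    # Build every ordered pair [F_i, F_p], shape (N, N, 2d)
    pairs = np.concatenate(
        [np.repeat(F[:, None, :], n, axis=1), np.repeat(F[None, :, :], n, axis=0)],
        axis=-1,
    )
    m = relu(pairs @ beta)  # messages m_{i,p}, shape (N, N, d)
    # Sum messages over p != i by zeroing the diagonal terms
    mask = 1.0 - np.eye(n)
    msg_sum = (m * mask[:, :, None]).sum(axis=1)  # (N, d)
    # F_i <- C(F_i, sum_p m_{i,p}; gamma)
    return relu(np.concatenate([F, msg_sum], axis=-1) @ gamma)
```

Unlike local graph convolution, the sum runs over every other node rather than only the kNN neighbors, which is what makes the update non-local.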

Selective view sampling (SVS)

- Downsample $G^{j}$ with Farthest Point Sampling (FPS).

- For each downsampled node $v_i$, a view selector picks, among its nearest neighbors $\mathbf{V}_{i}^{j}$, the node with the largest softmax response.

- The coarsened graph $G^{j+1}$ and the updated features $\mathbf{F}^{j+1}$ are passed to the next level for further processing.
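The three SVS steps can be sketched as below. FPS runs on the camera positions; for each sampled center, the view with the highest softmax score among its `k` nearest views is kept. The view selector here is a hypothetical linear classifier with weights `theta`, and "largest response" is taken to mean the largest class probability; both are assumptions for illustration.

```python
import numpy as np

def farthest_point_sampling(points, m, start=0):
    """Pick m indices from points (N, 3) by iterative farthest point sampling."""
    n = points.shape[0]
    chosen = [start]
    min_dist = np.full(n, np.inf)  # distance to the nearest already-chosen point
    for _ in range(m - 1):
        d = np.sum((points - points[chosen[-1]]) ** 2, axis=1)
        min_dist = np.minimum(min_dist, d)
        chosen.append(int(np.argmax(min_dist)))
    return np.array(chosen)

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def selective_view_sampling(points, F, m, k, theta):
    """SVS sketch: FPS picks m centers; in each center's k-NN set V_i,
    keep the view whose selector softmax gives the largest response.

    theta: (d, num_classes) weights of a hypothetical linear view selector.
    Two centers may select the same view; this sketch does not deduplicate.
    """
    centers = farthest_point_sampling(points, m)
    kept = []
    for c in centers:
        d = np.sum((points - points[c]) ** 2, axis=1)
        V_i = np.argsort(d)[:k]           # k nearest views, including the center
        scores = softmax(F[V_i] @ theta)  # (k, num_classes)
        kept.append(V_i[np.argmax(scores.max(axis=1))])
    kept = np.array(kept)
    # Indices, positions and features of the coarsened graph G^{j+1}
    return kept, points[kept], F[kept]
```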

3.2. Multi-level feature aggregation and training loss

After the graph convolution at each level, a max-pooling layer is applied to $\mathbf{F}^{j}$ to obtain the global shape feature of that level.

The final global shape feature $F_{\text{global}}$ is the concatenation of the pooled features from all levels.
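The pooling and concatenation step is short enough to show directly. This sketch takes one node-feature matrix per level (the number of nodes may shrink from level to level after SVS) and returns the concatenated descriptor.

```python
import numpy as np

def global_shape_feature(level_features):
    """Max-pool each level's node features over the nodes, then concatenate.

    level_features: list of (N_j, d_j) arrays, one per GCN level.
    Returns a vector of length sum_j d_j.
    """
    pooled = [F.max(axis=0) for F in level_features]  # each (d_j,)
    return np.concatenate(pooled)
```

Because pooling is over the node axis, the descriptor length depends only on the feature widths $d_j$, not on how many views survive sampling.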

A residual connection is added from the first convolution level to the last one, to avoid vanishing gradients as the number of GCN levels grows.

The training loss consists of two terms, the global shape loss $L_{\text{global}}$ and the selective-view shape loss $L_{\text{selective}}$:

$$\begin{aligned} L=& L_{\text{global}}\left(\mathcal{S}\left(F_{\text{global}}\right), y\right)+\\ & \sum_{j=1}^{M} \sum_{i=1}^{N^{j+1}} \sum_{v_{s} \in \mathbf{V}_{i}^{j}} L_{\text{selective}}\left(\mathcal{V}\left(F_{s}^{j} ; \theta^{j}\right), y\right) \end{aligned}$$

where $L_{\text{global}}$ is the cross-entropy loss, $\mathcal{S}$ is a classifier made of fully connected layers and a softmax function, and $y$ is the shape class label. $L_{\text{selective}}$ is the cross-entropy loss of the view selector, which ensures that the selected views can recognize the shape class. $\mathcal{V}(\cdot)$ is the view-selector function with parameters $\theta^{j}$, and $F_{s}^{j}$ is the feature of a candidate node $v_s \in \mathbf{V}_i^j$ considered during downsampling.

During training, only $L_{\text{global}}$ participates.
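The loss can be evaluated from raw classifier outputs as sketched below. The nested layout of `selector_logits` (one `(N^{j+1}, k, C)` array per level, i.e. per sampled node and per candidate view) is an assumption chosen to mirror the three sums in the formula.

```python
import numpy as np

def cross_entropy(logits, y):
    """Cross-entropy of one sample: -log softmax(logits)[y] (numerically stable)."""
    z = logits - logits.max()
    return float(np.log(np.exp(z).sum()) - z[y])

def total_loss(global_logits, selector_logits, y):
    """L = L_global(S(F_global), y)
         + sum_j sum_i sum_{v_s in V_i^j} L_selective(V(F_s^j; theta^j), y).

    global_logits: (C,) output of the classifier S before softmax.
    selector_logits: list over levels j of arrays shaped (N^{j+1}, k, C),
        the view-selector outputs for every candidate view (assumed layout).
    """
    loss = cross_entropy(global_logits, y)
    for level in selector_logits:        # sum over levels j
        for candidates in level:         # sum over sampled nodes i
            for logits in candidates:    # sum over candidate views v_s
                loss += cross_entropy(logits, y)
    return loss
```

With uniform logits over two classes, each term equals $\log 2$, so one global term plus two selective terms gives $3\log 2$.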

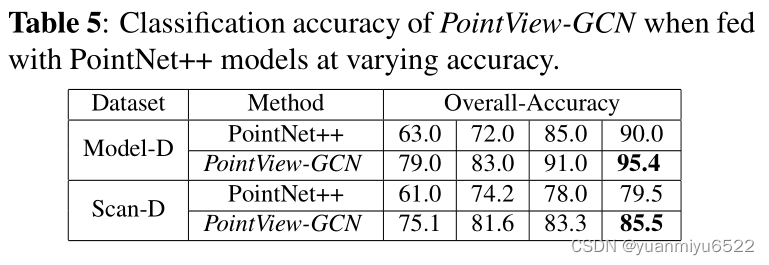

4. Experiments

Dataset generation

ModelNet40 contains 12,311 models in 40 categories.

ScanObjectNN contains 2,909 models in 15 categories.

Based on these, four datasets are built: Model-D, Model-H, Scan-D and Scan-H.

D denotes a dodecahedron (20 viewpoints), and H denotes a hemisphere (12 viewpoints).
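A regular dodecahedron has exactly 20 vertices, so one natural way to get 20 evenly spread viewpoints is to place a camera at each vertex. The sketch below uses the standard vertex coordinates built from the golden ratio; the camera radius and orientation used in the paper are not given in these notes, so `radius` is an assumed parameter. The 12-view hemisphere layout is not sketched because its exact placement is not described here.

```python
import numpy as np

def dodecahedron_viewpoints(radius=1.0):
    """Return the 20 vertices of a regular dodecahedron, scaled onto a sphere
    of the given radius, as candidate camera positions (shape (20, 3))."""
    phi = (1 + np.sqrt(5)) / 2  # golden ratio
    # 8 cube vertices (+-1, +-1, +-1)
    pts = [(x, y, z) for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)]
    # 12 vertices from cyclic permutations of (0, +-1/phi, +-phi)
    for a in (-1, 1):
        for b in (-1, 1):
            pts.append((0, a / phi, b * phi))
            pts.append((a / phi, b * phi, 0))
            pts.append((a * phi, 0, b / phi))
    pts = np.array(pts, dtype=float)
    # Every vertex has norm sqrt(3); rescale onto the requested sphere
    return radius * pts / np.linalg.norm(pts, axis=1, keepdims=True)
```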

Implementation details

Backbone: PointNet++ / DGCNN

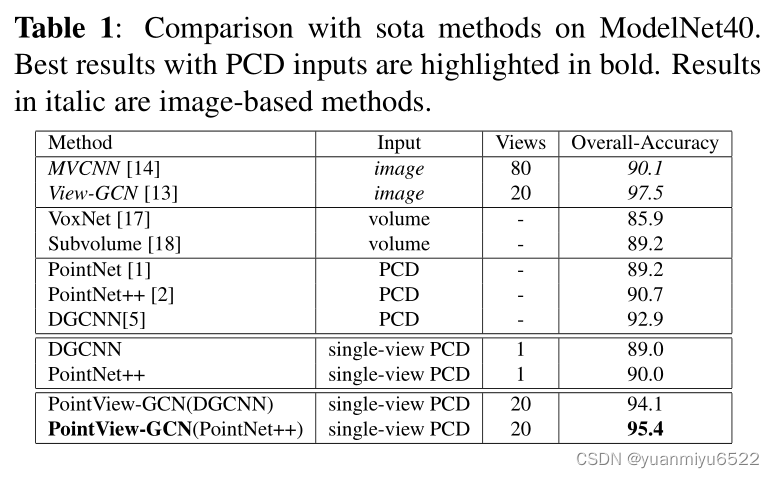

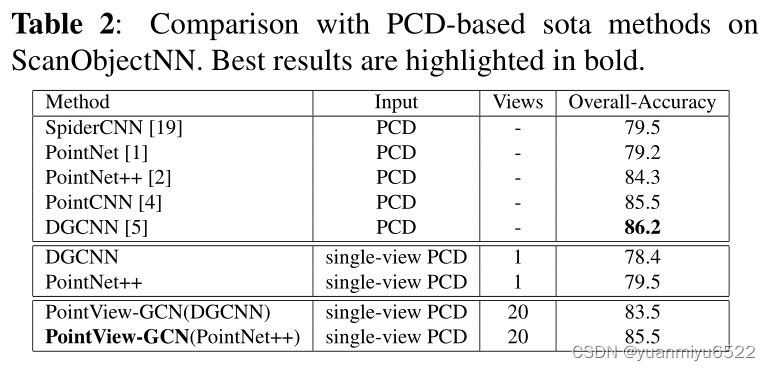

4.1. Comparison against state-of-the-art methods

4.2. Ablation studies

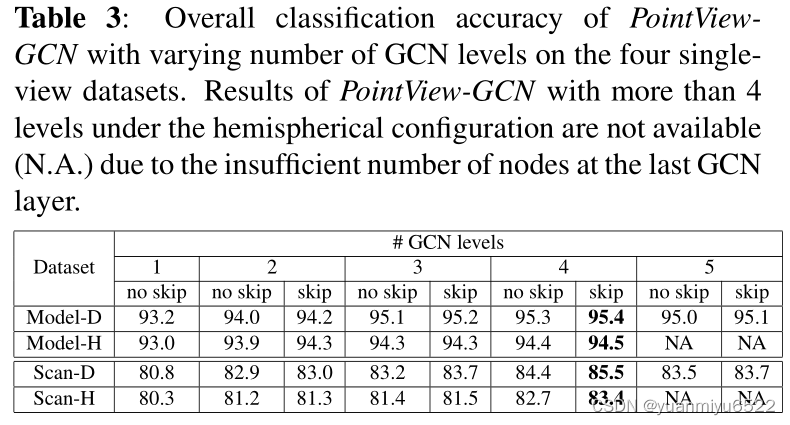

Effects of levels of GCN and skip connection

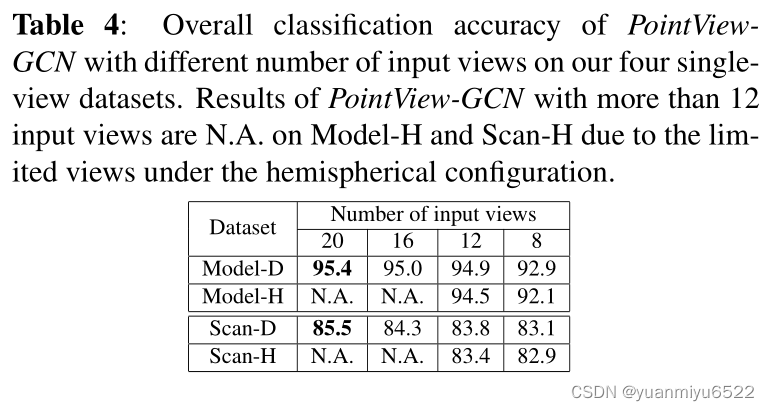

Effects of number of input views

The hemispherical (H) viewpoint setting gives lower accuracy than the 20-view (D) setting, probably because views from the hemisphere do not capture enough information about the bottom of the object.

Effects of PointNet++ models of varying classification accuracy

边栏推荐

- SQL statement error of common bug caused by Excel cell content that is not paid attention to for a long time

- 【点云处理之论文狂读经典版8】—— O-CNN: Octree-based Convolutional Neural Networks for 3D Shape Analysis

- 22-05-26 Xi'an interview question (01) preparation

- too many open files解决方案

- MySQL three logs

- 高斯消元 AcWing 883. 高斯消元解线性方程组

- Wonderful review | i/o extended 2022 activity dry goods sharing

- 状态压缩DP AcWing 291. 蒙德里安的梦想

- Pic16f648a-e/ss PIC16 8-bit microcontroller, 7KB (4kx14)

- Tree DP acwing 285 A dance without a boss

猜你喜欢

教育信息化步入2.0,看看JNPF如何帮助教师减负,提高效率?

We have a common name, XX Gong

excel一小时不如JNPF表单3分钟,这样做报表,领导都得点赞!

Memory search acwing 901 skiing

LeetCode 438. Find all letter ectopic words in the string

Binary tree sorting (C language, char type)

LeetCode 508. The most frequent subtree elements and

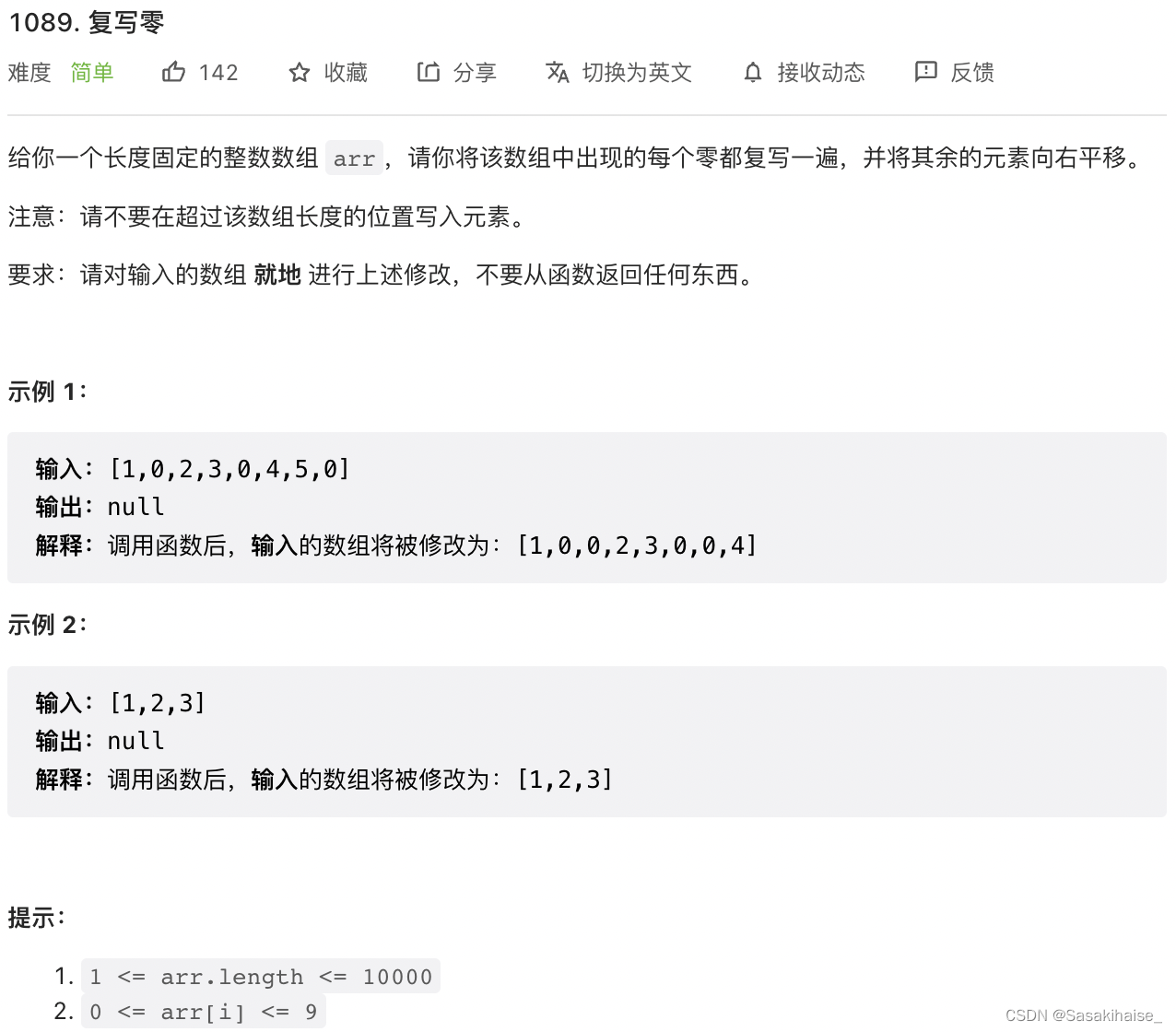

LeetCode 1089. Duplicate zero

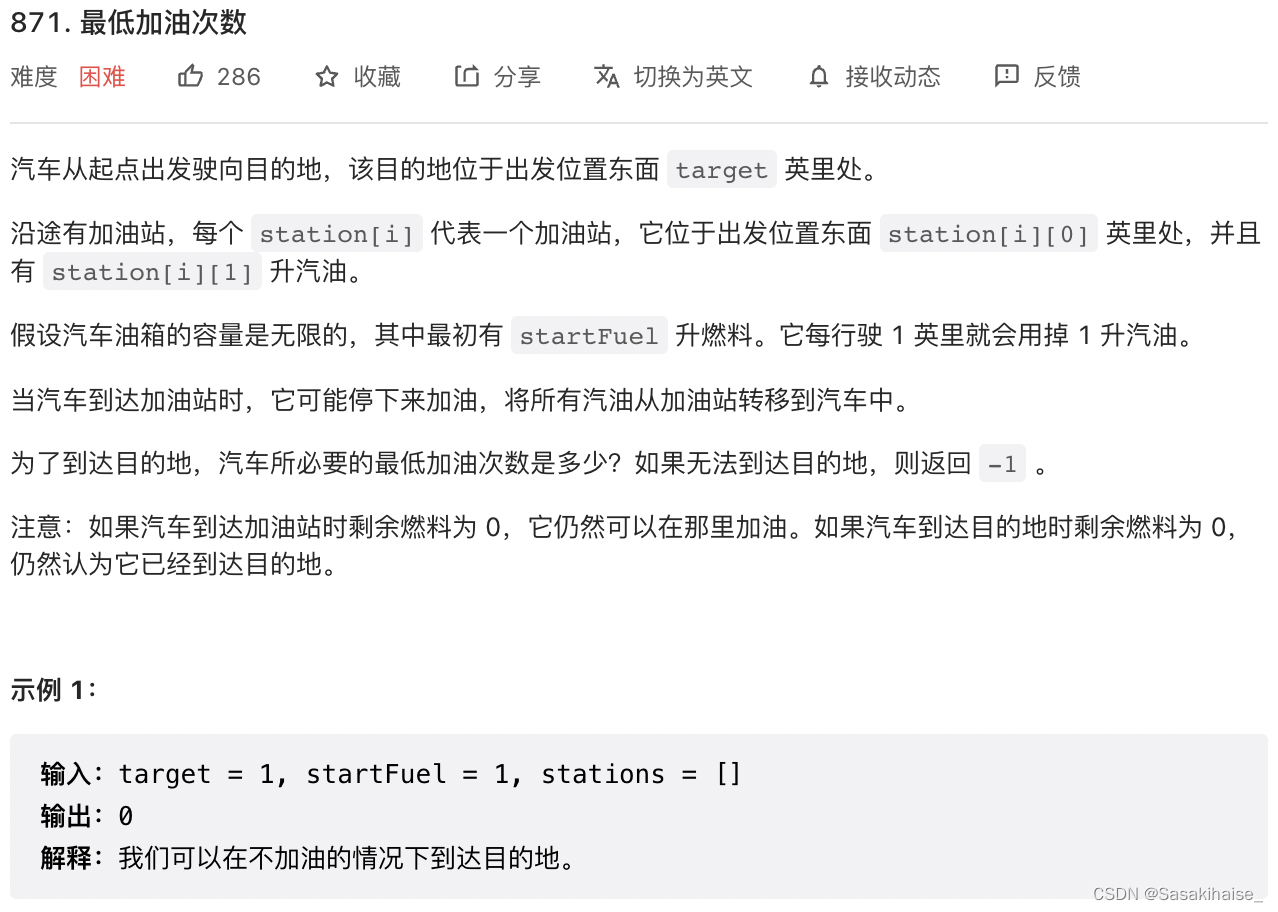

LeetCode 871. Minimum refueling times

Deeply understand the underlying data structure of MySQL index

随机推荐

【点云处理之论文狂读前沿版12】—— Adaptive Graph Convolution for Point Cloud Analysis

Get the link behind? Parameter value after question mark

State compression DP acwing 291 Mondrian's dream

22-05-26 Xi'an interview question (01) preparation

The method of replacing the newline character '\n' of a file with a space in the shell

数位统计DP AcWing 338. 计数问题

Digital management medium + low code, jnpf opens a new engine for enterprise digital transformation

PIC16F648A-E/SS PIC16 8位 微控制器,7KB(4Kx14)

Too many open files solution

What is an excellent fast development framework like?

With low code prospect, jnpf is flexible and easy to use, and uses intelligence to define a new office mode

状态压缩DP AcWing 91. 最短Hamilton路径

AcWing 787. Merge sort (template)

Using variables in sed command

AcWing 785. 快速排序(模板)

How to check whether the disk is in guid format (GPT) or MBR format? Judge whether UEFI mode starts or legacy mode starts?

Recommend a low code open source project of yyds

MySQL three logs

What are the stages of traditional enterprise digital transformation?

Find the combination number acwing 886 Find the combination number II