
Pytorch learning 3 (test training model)

2022-06-27 13:37:00 【Rat human decline】

Dataset preparation

This post uses the FashionMNIST dataset.

import os

import matplotlib.pyplot as plt
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

'''
Dataset preparation
'''
batch_size = 4  # number of samples per batch
DOWNLOAD_MNIST = False  # whether to download the data

# FashionMNIST images are grayscale, so the input has 1 channel.
# The data is stored in the ./data folder.
if not os.path.exists('./data/FashionMNIST/') or not os.listdir('./data/FashionMNIST/'):
    # The directory is missing or empty, so the dataset still has to be downloaded
    DOWNLOAD_MNIST = True

train_dataset = datasets.FashionMNIST(
    root='./data',
    train=True,  # training split
    transform=transforms.ToTensor(),
    download=DOWNLOAD_MNIST
)
test_dataset = datasets.FashionMNIST(
    root='./data',
    train=False,  # train=False selects the test split
    transform=transforms.ToTensor(),
    download=DOWNLOAD_MNIST
)

# DataLoader wraps a Dataset and yields the samples as batches of tensors,
# which can be passed to the model directly (wrapping them in Variable is no longer needed)
train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)  # shuffle: reshuffle the data every epoch
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)
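Conceptually, `DataLoader(dataset, batch_size=..., shuffle=True)` draws a fresh random order of the sample indices each epoch and cuts it into consecutive chunks of `batch_size`; with the default `drop_last=False` the last chunk is smaller when the dataset size is not a multiple of the batch size. The plain-Python sketch below, built around a hypothetical helper `make_batches`, only illustrates that idea; it is not PyTorch's actual implementation.

```python
import random


def make_batches(num_samples, batch_size, shuffle=True, seed=None):
    """Hypothetical helper: split sample indices into batches like a shuffling DataLoader."""
    indices = list(range(num_samples))
    if shuffle:
        # A new random order (a real training loop would reshuffle every epoch)
        random.Random(seed).shuffle(indices)
    # Consecutive chunks of batch_size; the last chunk may be shorter
    return [indices[start:start + batch_size] for start in range(0, num_samples, batch_size)]


batches = make_batches(10, 4, seed=0)
print([len(b) for b in batches])  # [4, 4, 2]
```

With 60,000 training images and `batch_size = 4` this gives 15,000 batches, which is why the last batch index printed in the next step is 14999.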

Printing DataLoader information and displaying images

'''
Output printed by the last iteration of the loop below:
14999
torch.Size([4, 1, 28, 28])   4 = batch size, 1 = channel, 28*28 = H*W
torch.Size([4])              batch size
tensor([5, 3, 8, 9])         labels of the images in the batch
'''

'''
# Show the information in the DataLoader
for i, (img, target) in enumerate(train_loader):
    print(i)
    print(img.shape)
    print(target.shape)
    print(target)
'''

'''
A DataLoader is an iterable object: iter() returns an iterator over it,
and next() fetches one batch from that iterator.
Display one batch of images:
'''

'''
def imshow(img):
    npimg = img.numpy()
    plt.imshow(np.transpose(npimg, (1, 2, 0)))  # (C, H, W) -> (H, W, C) for matplotlib

# Display the four images of one batch side by side
plt.figure()
dataiter = iter(train_loader)
images, labels = next(dataiter)  # use next(); the .next() method is not available in newer PyTorch versions
imshow(torchvision.utils.make_grid(images))
plt.show()
'''

print(train_loader)

labels_map = {
    0: "T-Shirt",
    1: "Trouser",
    2: "Pullover",
    3: "Dress",
    4: "Coat",
    5: "Sandal",
    6: "Shirt",
    7: "Sneaker",
    8: "Bag",
    9: "Ankle Boot",
}

# Display the images of one batch together with their labels
dataiter = iter(train_loader)
images, labels = next(dataiter)
for i in range(batch_size):
    # Draw each image in its own subplot
    npimg = images[i].squeeze().numpy()  # (1, 28, 28) -> (28, 28) for a grayscale image
    plt.subplot(1, batch_size, i + 1)
    plt.imshow(npimg, cmap='gray')
    label = labels_map[labels[i].item()]
    plt.title(label)
plt.show()
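PyTorch image tensors are laid out as (channels, height, width), while `matplotlib.pyplot.imshow` expects (height, width) for a single-channel image or (height, width, 3/4) for a color image. That is why the `imshow` helper above calls `np.transpose(npimg, (1, 2, 0))`; `torchvision.utils.make_grid` turns a grayscale batch into a 3-channel grid, so after the transpose it is a regular (H, W, 3) image. For a single grayscale sample, dropping the channel axis is an alternative. A NumPy-only sketch, with a random array standing in for a real sample:

```python
import numpy as np

# Random stand-in for one FashionMNIST sample after ToTensor(): shape (C, H, W) = (1, 28, 28)
chw = np.random.rand(1, 28, 28).astype(np.float32)

# Move the channel axis to the end: (C, H, W) -> (H, W, C)
hwc = np.transpose(chw, (1, 2, 0))
print(hwc.shape)  # (28, 28, 1)

# For a grayscale image, dropping the size-1 channel axis gives a plain (H, W) array,
# which plt.imshow(gray, cmap='gray') can display
gray = chw.squeeze(0)
print(gray.shape)  # (28, 28)
```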

Training the neural network

Iteration: one forward + backward pass over a single mini-batch.

Epoch: one complete pass over all of the training data.
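The two terms are linked by the number of mini-batches per epoch: with N training samples and batch size B, one epoch takes ceil(N / B) iterations, because with the default `drop_last=False` the final, incomplete batch is still used. FashionMNIST has 60,000 training images. A quick check in Python, where `iterations_per_epoch` is just an illustrative helper:

```python
def iterations_per_epoch(num_samples, batch_size, drop_last=False):
    """Illustrative helper: number of mini-batches (iterations) in one epoch."""
    if drop_last:
        return num_samples // batch_size  # the incomplete final batch is discarded
    return (num_samples + batch_size - 1) // batch_size  # ceil division keeps the final batch


print(iterations_per_epoch(60000, 4))       # 15000
print(iterations_per_epoch(60000, 64))      # 938
print(iterations_per_epoch(60000, 64) * 2)  # 1876 iterations for EPOCH = 2
```

With `batch_size = 64`, the `if i % 100 == 99` check in the training loop therefore prints the average loss 9 times per epoch (after batches 100, 200, ..., 900).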

'''
Training process
'''
# Start training
EPOCH = 20  # number of epochs
for epoch in range(EPOCH):
    sum_loss = 0.0
    # Fetch the data batch by batch
    for i, data in enumerate(train_loader):
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)  # move the data to the GPU if one is available
        # Clear the gradients left over from the previous step
        optimizer.zero_grad()
        # Forward pass, compute the loss, backpropagate, update the parameters
        output = net(inputs)
        loss = loss_fuc(output, labels)
        loss.backward()
        optimizer.step()
        # Print the average loss every 100 batches
        sum_loss += loss.item()
        if i % 100 == 99:
            print('[Epoch:%d, batch:%d] train loss: %.03f' % (epoch + 1, i + 1, sum_loss / 100))
            sum_loss = 0.0

    # Evaluate on the test set after every epoch
    correct = 0
    total = 0
    net.eval()
    with torch.no_grad():  # gradients are not needed for evaluation
        for data in test_loader:
            test_inputs, labels = data
            test_inputs, labels = test_inputs.to(device), labels.to(device)
            outputs_test = net(test_inputs)
            _, predicted = torch.max(outputs_test, 1)  # index of the highest-scoring class
            total += labels.size(0)  # total number of test images
            correct += (predicted == labels).sum()  # number of correctly classified images
    net.train()
    print('Accuracy after epoch {}: {}%'.format(epoch + 1, 100 * correct.item() / total))

# -------- Save the model -----------
os.makedirs('./model', exist_ok=True)  # torch.save does not create missing directories
torch.save(net, './model/LeNet.pth')  # pickles the whole model; loading it later needs the LeNet class definition

Complete training code

import os

import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn.functional as F
import torchvision
from torch import nn, optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

'''
Dataset preparation
'''
batch_size = 64  # number of samples per batch
DOWNLOAD_MNIST = False  # whether to download the data

# FashionMNIST images are grayscale, so the input has 1 channel.
# The data is stored in the ./data folder.
if not os.path.exists('./data/FashionMNIST/') or not os.listdir('./data/FashionMNIST/'):
    # The directory is missing or empty, so the dataset still has to be downloaded
    DOWNLOAD_MNIST = True

train_dataset = datasets.FashionMNIST(
    root='./data',
    train=True,  # training split
    transform=transforms.ToTensor(),
    download=DOWNLOAD_MNIST
)
test_dataset = datasets.FashionMNIST(
    root='./data',
    train=False,  # train=False selects the test split
    transform=transforms.ToTensor(),
    download=DOWNLOAD_MNIST
)

# DataLoader wraps a Dataset and yields the samples as batches of tensors,
# which can be passed to the model directly (wrapping them in Variable is no longer needed)
train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)  # shuffle: reshuffle the data every epoch
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

'''
Network design
I use LeNet-5 with some modifications.
The input images are grayscale, so the number of input channels is 1.
For color images (3 channels), the input channels of the first convolution layer must be changed accordingly.
'''

class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        # Convolution layer C1 and pooling layer S2
        self.conv1 = nn.Sequential(
            nn.Conv2d(1, 6, kernel_size=5, stride=1, padding=2),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        )
        # Convolution layer C3 and pooling layer S4
        self.conv2 = nn.Sequential(
            nn.Conv2d(6, 16, kernel_size=5, stride=1, padding=0),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0)
        )
        # Fully connected layer C5, fully connected layer F6 and the output layer
        self.fc = nn.Sequential(
            nn.Linear(16 * 5 * 5, 120),
            nn.ReLU(),
            nn.Linear(120, 84),
            nn.ReLU(),
            nn.Linear(84, 10)
        )

    # Forward pass: apply the layers in order
    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        # nn.Linear expects each sample as a flat vector, so flatten (N, 16, 5, 5) to (N, 400)
        x = x.view(x.size(0), -1)
        x = self.fc(x)
        return x


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")  # use the GPU if one is available, otherwise the CPU
net = LeNet().to(device)  # instantiate the network and move it to the GPU if available

'''
Loss function and optimizer
'''
loss_fuc = nn.CrossEntropyLoss()  # multi-class classification, so use the cross-entropy loss
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)  # SGD with learning rate 0.001 and momentum 0.9

'''
Training process
'''
# Start training
EPOCH = 2  # number of epochs
for epoch in range(EPOCH):
    sum_loss = 0.0
    # Fetch the data batch by batch
    for i, data in enumerate(train_loader):
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)  # move the data to the GPU if one is available
        # Clear the gradients left over from the previous step
        optimizer.zero_grad()
        # Forward pass, compute the loss, backpropagate, update the parameters
        output = net(inputs)
        loss = loss_fuc(output, labels)
        loss.backward()
        optimizer.step()
        # Print the average loss every 100 batches
        sum_loss += loss.item()
        if i % 100 == 99:
            print('[Epoch:%d, batch:%d] train loss: %.03f' % (epoch + 1, i + 1, sum_loss / 100))
            sum_loss = 0.0

    # Evaluate on the test set after every epoch
    correct = 0
    total = 0
    net.eval()
    with torch.no_grad():  # gradients are not needed for evaluation
        for data in test_loader:
            test_inputs, labels = data
            test_inputs, labels = test_inputs.to(device), labels.to(device)
            outputs_test = net(test_inputs)
            _, predicted = torch.max(outputs_test, 1)  # index of the highest-scoring class
            total += labels.size(0)  # total number of test images
            correct += (predicted == labels).sum()  # number of correctly classified images
    net.train()
    print('Accuracy after epoch {}: {}%'.format(epoch + 1, 100 * correct.item() / total))

# -------- Save the model -----------
os.makedirs('./model', exist_ok=True)  # torch.save does not create missing directories
torch.save(net, './model/LeNet.pth')  # pickles the whole model; loading it later needs the LeNet class definition
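The `16 * 5 * 5` input size of the first `nn.Linear` layer in `LeNet` follows from the standard output-size formula for convolution and pooling layers (dilation 1), out = floor((in + 2 * padding - kernel) / stride) + 1. The plain-Python sketch below, with an illustrative helper `out_size`, traces a 28x28 FashionMNIST image through the layers:

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a Conv2d/MaxPool2d layer (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


h = 28                                          # input:  1 x 28 x 28
h = out_size(h, kernel=5, stride=1, padding=2)  # conv1:  6 x 28 x 28
h = out_size(h, kernel=2, stride=2)             # pool:   6 x 14 x 14
h = out_size(h, kernel=5, stride=1, padding=0)  # conv2: 16 x 10 x 10
h = out_size(h, kernel=2, stride=2)             # pool:  16 x 5 x 5
print(h, 16 * h * h)  # 5 400
```

If the input size changes, for example to 32x32 color images, the same calculation gives 16 * 6 * 6 = 576 inputs for the first fully connected layer, in addition to changing the first convolution's input channels from 1 to 3.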

Testing the neural network

Test results

Complete test code

import torch
from torchvision import datasets
from torchvision.transforms import ToTensor

# torch.save(net, ...) pickled the whole model, so the LeNet class must be importable when it is loaded.
# Importing the training module provides it (note: this also re-runs that module's top-level training code).
from pytorchlearn import LeNet

'''
Randomly select four images from the dataset and test the model on them
'''
print('start predict')
training_data = datasets.FashionMNIST(
    root="data",
    train=True,
    download=True,
    transform=ToTensor()
)
test_data = datasets.FashionMNIST(
    root="data",
    train=False,
    download=True,
    transform=ToTensor()
)

'''
Datasets can be indexed manually like a list: test_data[index] returns an (image, label) pair.
'''
labels_map = {
    0: "T-Shirt",
    1: "Trouser",
    2: "Pullover",
    3: "Dress",
    4: "Coat",
    5: "Sandal",
    6: "Shirt",
    7: "Sneaker",
    8: "Bag",
    9: "Ankle Boot",
}

test_num = 4
test_imgs = []  # images used for testing the model
test_labels = []
# Randomly select some images from the test set, which the model did not see during training
for i in range(test_num):
    sample_idx = torch.randint(len(test_data), size=(1,)).item()
    img, label = test_data[sample_idx]
    test_imgs.append(img)
    test_labels.append(labels_map[label])

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
# Load the whole model. PyTorch 2.6 changed the default to weights_only=True, which refuses to
# unpickle a full nn.Module, so weights_only=False is passed (the argument exists since PyTorch 1.13).
model = torch.load('./model/LeNet.pth', map_location=device, weights_only=False)
model.eval()  # switch the model to evaluation mode


# Wrap one image tensor into a batch of size 1 and predict its class index
def model_test(img):
    img_tensor = img.view(1, 1, 28, 28).to(device)  # batch * channel * h * w
    with torch.no_grad():
        out = model(img_tensor)
    _, pred = torch.max(out, 1)
    print('Predicted class index: {}'.format(pred.item()))
    return pred


test_res = []
for i in range(test_num):
    pred = model_test(test_imgs[i]).cpu()
    test_res.append(labels_map[pred.item()])  # map the class index to its name

print('Predicted labels:', test_res)
print('Actual labels:   ', test_labels)
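Both the evaluation loop in the training script and `model_test` pick the predicted class with `torch.max(outputs, 1)`, whose second return value is the index of the largest score in each row; accuracy is then the fraction of those indices that match the true labels. The same arithmetic in NumPy, with made-up scores for four images (not real model output):

```python
import numpy as np

# Made-up scores (logits) for 4 images and 10 classes; only the position of the maximum matters
logits = np.zeros((4, 10))
logits[0, 9] = 2.0  # image 0 -> class 9 (Ankle Boot)
logits[1, 2] = 1.5  # image 1 -> class 2 (Pullover)
logits[2, 1] = 3.0  # image 2 -> class 1 (Trouser)
logits[3, 6] = 0.5  # image 3 -> class 6 (Shirt)
labels = np.array([9, 2, 1, 0])  # true classes; the last image is actually a T-Shirt

predicted = logits.argmax(axis=1)  # same indices as torch.max(outputs, 1)[1]
correct = (predicted == labels).sum()
accuracy = 100 * correct / len(labels)
print(predicted, accuracy)  # [9 2 1 6] 75.0
```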

边栏推荐

- 【第27天】给定一个整数 n ,打印出1到n的全排列 | 全排列模板

- jvm 性能调优、监控工具 -- jps、jstack、jmap、jhat、jstat、hprof

- How to use 200 lines of code to implement Scala's Object Converter

- Today's sleep quality record 78 points

- 对半查找(折半查找)

- crane:字典项与关联数据处理的新思路

- ENSP cloud configuration

- 面试官:Redis的共享对象池了解吗?

- Pre training weekly issue 51: reconstruction pre training, zero sample automatic fine tuning, one click call opt

- 《预训练周刊》第51期:重构预训练、零样本自动微调、一键调用OPT

猜你喜欢

Openhgnn releases version 0.3

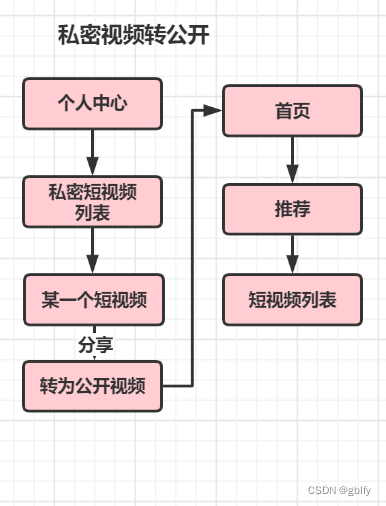

Tiktok practice ~ public / private short video interchange

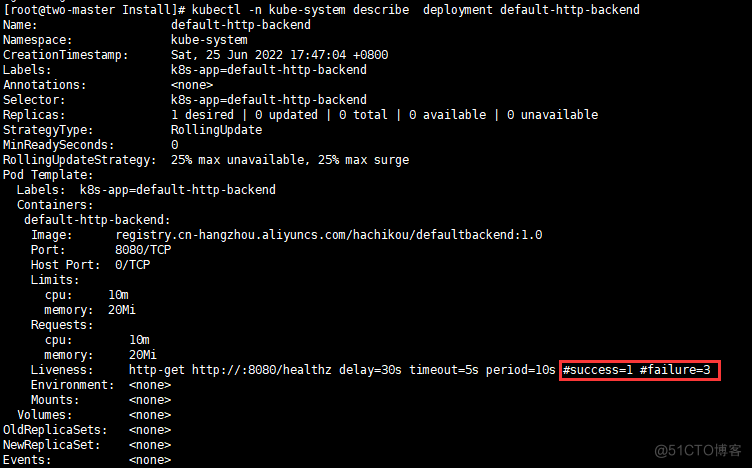

After the deployment is created, the pod problem handling cannot be created

![[安洵杯 2019]Attack](/img/1a/3e82a54cfcb90ebafebeaa8ee1ec01.png)

[安洵杯 2019]Attack

Cesium实现卫星在轨绕行

![[tcaplusdb knowledge base] Introduction to tcaplusdb tcapulogmgr tool (I)](/img/ce/b58e436e739a96b3ba6d2d33cf8675.png)

[tcaplusdb knowledge base] Introduction to tcaplusdb tcapulogmgr tool (I)

高效率取幂运算

一次性彻底解决 Web 工程中文乱码问题

Hue new account error reporting solution

Record number of visits yesterday

随机推荐

hue新建账号报错解决方案

Today's sleep quality record 78 points

Pre training weekly issue 51: reconstruction pre training, zero sample automatic fine tuning, one click call opt

Does Xinhua San still have to rely on ICT to realize its 100 billion enterprise dream?

What is low code for digital Nova? What is no code

How to set postman to Chinese? (Chinese)

数字化新星何为低代码?何为无代码

Firewall foundation Huawei H3C firewall web page login

Clear self orientation

【周赛复盘】LeetCode第81场双周赛

一次性彻底解决 Web 工程中文乱码问题

高效率取幂运算

[acwing] explanation of the 57th weekly competition

jvm 参数设置与分析

buuctf misc 百里挑一

思考的角度的差异

基于JSP实现医院病历管理系统

Record number of visits yesterday

Prometheus 2.26.0 new features

Infiltration learning diary day20