A troubleshooting record: "ERROR scheduler.AsyncEventQueue: Dropping event from queue shared" leading to OOM

2022-07-29 02:24:00 【The south wind knows what I mean】

Problem description

Log:

2022-07-23 01:03:40 ERROR scheduler.AsyncEventQueue: Dropping event from queue shared. This likely means one of the listeners is too slow and cannot keep up with the rate at which tasks are being started by the scheduler.

2022-07-23 01:03:40 WARN scheduler.AsyncEventQueue: Dropped 1 events from shared since the application started.

2022-07-23 01:04:41 WARN scheduler.AsyncEventQueue: Dropped 2335 events from shared since Sat Jul 23 01:03:40 CST 2022.

2022-07-23 01:05:42 WARN scheduler.AsyncEventQueue: Dropped 2252 events from shared since Sat Jul 23 01:04:41 CST 2022.

2022-07-23 01:06:42 WARN scheduler.AsyncEventQueue: Dropped 1658 events from shared since Sat Jul 23 01:05:42 CST 2022.

2022-07-23 01:07:42 WARN scheduler.AsyncEventQueue: Dropped 1405 events from shared since Sat Jul 23 01:06:42 CST 2022.

2022-07-23 01:08:43 WARN scheduler.AsyncEventQueue: Dropped 1651 events from shared since Sat Jul 23 01:07:42 CST 2022.

2022-07-23 01:09:43 WARN scheduler.AsyncEventQueue: Dropped 1983 events from shared since Sat Jul 23 01:08:43 CST 2022.

2022-07-23 01:10:43 WARN scheduler.AsyncEventQueue: Dropped 1680 events from shared since Sat Jul 23 01:09:43 CST 2022.

2022-07-23 01:11:43 WARN scheduler.AsyncEventQueue: Dropped 1643 events from shared since Sat Jul 23 01:10:43 CST 2022.

2022-07-23 01:12:44 WARN scheduler.AsyncEventQueue: Dropped 1959 events from shared since Sat Jul 23 01:11:43 CST 2022.

2022-07-23 01:13:45 WARN scheduler.AsyncEventQueue: Dropped 2315 events from shared since Sat Jul 23 01:12:44 CST 2022.

2022-07-23 01:14:47 WARN scheduler.AsyncEventQueue: Dropped 2473 events from shared since Sat Jul 23 01:13:45 CST 2022.

2022-07-23 01:15:47 WARN scheduler.AsyncEventQueue: Dropped 1962 events from shared since Sat Jul 23 01:14:47 CST 2022.

2022-07-23 01:16:48 WARN scheduler.AsyncEventQueue: Dropped 1645 events from shared since Sat Jul 23 01:15:47 CST 2022.

2022-07-23 01:17:48 WARN scheduler.AsyncEventQueue: Dropped 1885 events from shared since Sat Jul 23 01:16:48 CST 2022.

2022-07-23 01:18:48 WARN scheduler.AsyncEventQueue: Dropped 2391 events from shared since Sat Jul 23 01:17:48 CST 2022.

2022-07-23 01:19:48 WARN scheduler.AsyncEventQueue: Dropped 1501 events from shared since Sat Jul 23 01:18:48 CST 2022.

2022-07-23 01:20:49 WARN scheduler.AsyncEventQueue: Dropped 1733 events from shared since Sat Jul 23 01:19:48 CST 2022.

2022-07-23 01:21:49 WARN scheduler.AsyncEventQueue: Dropped 1867 events from shared since Sat Jul 23 01:20:49 CST 2022.

2022-07-23 01:22:50 WARN scheduler.AsyncEventQueue: Dropped 1561 events from shared since Sat Jul 23 01:21:49 CST 2022.

2022-07-23 01:23:51 WARN scheduler.AsyncEventQueue: Dropped 1364 events from shared since Sat Jul 23 01:22:50 CST 2022.

2022-07-23 01:24:52 WARN scheduler.AsyncEventQueue: Dropped 1579 events from shared since Sat Jul 23 01:23:51 CST 2022.

2022-07-23 01:25:52 WARN scheduler.AsyncEventQueue: Dropped 1847 events from shared since Sat Jul 23 01:24:52 CST 2022.

Exception in thread "streaming-job-executor-0" java.lang.OutOfMemoryError: GC overhead limit exceeded

at org.apache.xbean.asm7.ClassReader.readLabel(ClassReader.java:2447)

at org.apache.xbean.asm7.ClassReader.createDebugLabel(ClassReader.java:2477)

at org.apache.xbean.asm7.ClassReader.readCode(ClassReader.java:1689)

at org.apache.xbean.asm7.ClassReader.readMethod(ClassReader.java:1284)

at org.apache.xbean.asm7.ClassReader.accept(ClassReader.java:688)

at org.apache.xbean.asm7.ClassReader.accept(ClassReader.java:400)

at org.apache.spark.util.ClosureCleaner$.clean(ClosureCleaner.scala:359)

at org.apache.spark.util.ClosureCleaner$.clean(ClosureCleaner.scala:162)

at org.apache.spark.SparkContext.clean(SparkContext.scala:2362)

at org.apache.spark.rdd.RDD.$anonfun$mapPartitions$1(RDD.scala:834)

at org.apache.spark.rdd.RDD$$Lambda$2785/604434085.apply(Unknown Source)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:388)

at org.apache.spark.rdd.RDD.mapPartitions(RDD.scala:833)

at org.apache.spark.sql.Dataset.rdd$lzycompute(Dataset.scala:3200)

at org.apache.spark.sql.Dataset.rdd(Dataset.scala:3198)

at cn.huorong.utils.PhoenixUtil$.jdbcBatchInsert(PhoenixUtil.scala:216)

at cn.huorong.run.SampleTaskSinkHbaseMapping_OfficialService.storePhoenix(SampleTaskSinkHbaseMapping_OfficialService.scala:94)

at cn.huorong.run.SampleTaskSinkHbaseMapping_OfficialService.$anonfun$sink$1(SampleTaskSinkHbaseMapping_OfficialService.scala:74)

at cn.huorong.run.SampleTaskSinkHbaseMapping_OfficialService.$anonfun$sink$1$adapted(SampleTaskSinkHbaseMapping_OfficialService.scala:37)

at cn.huorong.run.SampleTaskSinkHbaseMapping_OfficialService$$Lambda$1277/1357069303.apply(Unknown Source)

at org.apache.spark.streaming.dstream.DStream.$anonfun$foreachRDD$2(DStream.scala:629)

at org.apache.spark.streaming.dstream.DStream.$anonfun$foreachRDD$2$adapted(DStream.scala:629)

at org.apache.spark.streaming.dstream.DStream$$Lambda$1291/1167476357.apply(Unknown Source)

at org.apache.spark.streaming.dstream.ForEachDStream.$anonfun$generateJob$2(ForEachDStream.scala:51)

at org.apache.spark.streaming.dstream.ForEachDStream$$Lambda$1576/1966952151.apply$mcV$sp(Unknown Source)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at org.apache.spark.streaming.dstream.DStream.createRDDWithLocalProperties(DStream.scala:417)

at org.apache.spark.streaming.dstream.ForEachDStream.$anonfun$generateJob$1(ForEachDStream.scala:51)

at org.apache.spark.streaming.dstream.ForEachDStream$$Lambda$1563/607343052.apply$mcV$sp(Unknown Source)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

2022-07-23 02:01:34 WARN scheduler.AsyncEventQueue: Dropped 429 events from shared since Sat Jul 23 02:00:29 CST 2022.

Exception in thread "dispatcher-event-loop-0" java.lang.OutOfMemoryError: GC overhead limit exceeded

at scala.runtime.ObjectRef.create(ObjectRef.java:24)

at org.apache.spark.rpc.netty.Inbox.process(Inbox.scala:86)

at org.apache.spark.rpc.netty.MessageLoop.org$apache$spark$rpc$netty$MessageLoop$$receiveLoop(MessageLoop.scala:75)

at org.apache.spark.rpc.netty.MessageLoop$$anon$1.run(MessageLoop.scala:41)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Cause analysis:

1. Main cause:

2022-07-23 01:03:40 WARN scheduler.AsyncEventQueue: Dropped 1 events from shared since the application started.

- All Spark jobs, stages, and tasks are pushed to the event queue as events.

- Backend listeners read Spark UI events from this queue and use them to render the Spark UI.

- The default capacity of the event queue (spark.scheduler.listenerbus.eventqueue.capacity) is 10000.

If events are pushed to the event queue faster than the backend listeners can consume them, the queue fills up and events are dropped from it; the listeners never see those events.

The dropped events are lost and do not appear in the Spark UI.
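The behaviour described above can be illustrated with a small, self-contained model. This is only a sketch of a bounded queue that counts what it cannot accept, not Spark's actual implementation: `Event`, `BoundedEventQueue`, and `BoundedEventQueueDemo` are hypothetical names, no consumer is running, and in this sketch an event that does not fit is simply rejected.

```scala
import java.util.concurrent.LinkedBlockingQueue

// Hypothetical stand-in for a listener event; not a Spark class.
final case class Event(id: Int)

// Minimal bounded queue that counts the events it could not accept.
// Single-threaded demo only: the counter is not thread-safe.
final class BoundedEventQueue(capacity: Int) {
  private val queue = new LinkedBlockingQueue[Event](capacity)
  private var dropped = 0L

  // offer() returns false instead of blocking when the queue is full.
  def post(event: Event): Unit =
    if (!queue.offer(event)) dropped += 1

  def droppedCount: Long = dropped
  def size: Int = queue.size
}

object BoundedEventQueueDemo {
  // Posts `events` events with nobody consuming and reports (held, dropped).
  def run(capacity: Int, events: Int): (Int, Long) = {
    val q = new BoundedEventQueue(capacity)
    (1 to events).foreach(i => q.post(Event(i)))
    (q.size, q.droppedCount)
  }

  def main(args: Array[String]): Unit = {
    val (held, dropped) = run(capacity = 10000, events = 12335)
    println(s"held=$held dropped=$dropped") // held=10000 dropped=2335
  }
}
```

With a capacity of 10000 and a producer that outpaces the consumer by 2335 events, exactly those 2335 events are lost, which is the shape of the "Dropped N events from shared" warnings in the log.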

2. Source code analysis

    /**
     * Initializes the event queue size.
     * LISTENER_BUS_EVENT_QUEUE_PREFIX = "spark.scheduler.listenerbus.eventqueue"
     * LISTENER_BUS_EVENT_QUEUE_CAPACITY = .createWithDefault(10000)
     */
    private[scheduler] def capacity: Int = {
      val queueSize = conf.getInt(s"$LISTENER_BUS_EVENT_QUEUE_PREFIX.$name.capacity",
        conf.get(LISTENER_BUS_EVENT_QUEUE_CAPACITY))
      assert(queueSize > 0, s"capacity for event queue $name must be greater than 0, " +
        s"but $queueSize is configured.")
      queueSize // defaults to 10000
    }
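The lookup order in the snippet above (per-queue key first, then the global key, then the built-in default) can be reproduced without Spark. The following is a minimal sketch that assumes a plain `Map[String, String]` in place of `SparkConf`; `QueueCapacity` is a hypothetical helper, not part of Spark.

```scala
object QueueCapacity {
  val Prefix = "spark.scheduler.listenerbus.eventqueue"
  val DefaultCapacity = 10000

  // Resolves the capacity of the queue called `name`:
  // 1. "<prefix>.<name>.capacity" if present,
  // 2. otherwise "<prefix>.capacity",
  // 3. otherwise the default of 10000.
  def capacity(conf: Map[String, String], name: String): Int = {
    val global = conf.get(s"$Prefix.capacity").map(_.toInt).getOrElse(DefaultCapacity)
    val queueSize = conf.get(s"$Prefix.$name.capacity").map(_.toInt).getOrElse(global)
    require(queueSize > 0,
      s"capacity for event queue $name must be greater than 0, but $queueSize is configured.")
    queueSize
  }

  def main(args: Array[String]): Unit = {
    println(capacity(Map.empty, "shared"))                              // 10000
    println(capacity(Map(s"$Prefix.capacity" -> "20000"), "shared"))    // 20000
    println(capacity(Map(s"$Prefix.capacity" -> "20000",
                         s"$Prefix.shared.capacity" -> "30000"), "shared")) // 30000
  }
}
```

The practical consequence is that the `shared` queue named in the log can be resized on its own through its per-queue key, without changing the capacity of the other queues.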

3. Spark official website

Solution:

1. The way to stop losing events is to use the parameter Spark provides and statically enlarge the queue capacity at initialization. This requires somewhat more driver memory.

2. In the cluster-level Spark configuration, set spark.scheduler.listenerbus.eventqueue.capacity to a value greater than 10000.

3. This value is the default capacity of the listener event queues, including the queue that holds events for the internal application status listeners. Increasing it lets the event queues hold more events, but may cause the driver to use more memory.
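If the setting is applied per application rather than at cluster level, it could look roughly like the sketch below. The value 20000 and the application name are illustrative assumptions, not recommendations from the original investigation; the setting has to be in place before the SparkContext is created, because the queue capacity is read at initialization.

```scala
import org.apache.spark.SparkConf

object ListenerQueueConf {
  // 20000 is an arbitrary illustrative value; choose one that fits the
  // driver's memory budget.
  def build(): SparkConf =
    new SparkConf()
      .setAppName("listener-queue-capacity-example") // hypothetical app name
      .set("spark.scheduler.listenerbus.eventqueue.capacity", "20000")
}
```

The same key can also be passed on the command line with `--conf` when submitting the job.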

# References

A study of the problem of the Spark History Server losing tasks, jobs, and stages

New findings

Recently a colleague found a PR that someone had submitted on GitHub which gets to the root of the problem. The original link is posted below:

https://github.com/apache/spark/pull/31839

Translation of the PR description:

This PR proposes an alternative way to fix the ExecutionListenerBus memory leak, one that cleans up the leaked instances automatically.

Basically, the idea is to add registerSparkListenerForCleanup to ContextCleaner,

so that when a SparkSession is garbage-collected, the corresponding ExecutionListenerBus can be removed from the LiveListenerBus.

On the other hand, to make the SparkSession eligible for GC, the reference to the SparkSession held in ExecutionListenerBus has to be removed.

Therefore, sessionUUID (a unique identifier of a SparkSession) is introduced to replace the SparkSession object.

SPARK-34087

Analysis

From this we can see that it is a bug in Spark 3.0.1: ExecutionListenerBus instances keep accumulating and are not reclaimed by GC. Every leaked instance stays registered as a listener on the shared queue, so events are processed more and more slowly. Because the default queue capacity is only 10,000, the queue fills up and events start to be dropped, while the leaked listeners and the SparkSession objects they reference stay in memory the whole time, which eventually leads to driver OOM.
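The leak pattern can be sketched with a toy listener bus. This is a simplified model under stated assumptions, not Spark code: `Listener`, `Bus`, `Session`, and `ListenerLeakDemo` are hypothetical names, and the explicit `close()` stands in for the GC-triggered cleanup that the PR implements through ContextCleaner.

```scala
import scala.collection.mutable.ArrayBuffer

trait Listener { def onEvent(event: String): Unit }

// Toy bus: holds strong references to every registered listener.
final class Bus {
  private val listeners = ArrayBuffer.empty[Listener]
  def add(l: Listener): Unit = listeners += l
  def remove(l: Listener): Unit = listeners -= l
  def post(event: String): Unit = listeners.foreach(_.onEvent(event))
  def listenerCount: Int = listeners.size
}

// Toy session: registers a listener that captures the session itself,
// so the bus keeps the whole session reachable until the listener is removed.
final class Session(bus: Bus) {
  var handled = 0
  private val listener: Listener = new Listener {
    def onEvent(event: String): Unit = handled += 1
  }
  bus.add(listener)
  def close(): Unit = bus.remove(listener)
}

object ListenerLeakDemo {
  // Creates one session per batch and reports how many listeners remain.
  def listenersAfter(batches: Int, cleanUp: Boolean): Int = {
    val bus = new Bus
    (1 to batches).foreach { _ =>
      val session = new Session(bus)
      bus.post("batch-finished") // every registered listener handles every event
      if (cleanUp) session.close()
    }
    bus.listenerCount
  }

  def main(args: Array[String]): Unit = {
    println(listenersAfter(batches = 1000, cleanUp = false)) // 1000
    println(listenersAfter(batches = 1000, cleanUp = true))  // 0
  }
}
```

Without cleanup, the number of listeners grows by one per batch and each posted event costs more to dispatch, which matches both the growing instance count and the slow-listener warning in the log.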

For the record, here is how to check the number of ExecutionListenerBus instances.

- 1. Find the node where the driver is running

- 2. Find the ApplicationMaster (AM) that hosts the driver

[node04 userconf]# jps | grep ApplicationMaster

168299 ApplicationMaster

168441 ApplicationMaster

[node04 userconf]# ps -ef | grep application_1658675121201_0408 | grep 168441

hadoop 168441 168429 24 Jul27 ? 07:53:51 /usr/java/jdk1.8.0_202/bin/java -server -Xmx2048m -Djava.io.tmpdir=/tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1658675121201_0408/container_e47_1658675121201_0408_01_000001/tmp -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -XX:+PrintHeapAtGC -Dspark.yarn.app.container.log.dir=/var/log/udp/2.0.0.0/hadoop/userlogs/application_1658675121201_0408/container_e47_1658675121201_0408_01_000001 org.apache.spark.deploy.yarn.ApplicationMaster --class cn.huorong.SampleTaskScanMapping_Official --jar file:/data/udp/2.0.0.0/dolphinscheduler/exec/process/5856696115520/5888199825472_7/70/4192/spark real time /taskmapping_official_stream-3.0.3.jar --arg -maxR --arg 100 --arg -t --arg hr_task_scan_official --arg -i --arg 3 --arg -g --arg mappingOfficialHbaseOfficial --arg -pn --arg OFFICIAL --arg -ptl --arg SAMPLE_TASK_SCAN,SCAN_SHA1_M_TASK,SCAN_TASK_M_SHA1 --arg -hp --arg p --arg -local --arg false --properties-file /tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1658675121201_0408/container_e47_1658675121201_0408_01_000001/__spark_conf__/__spark_conf__.properties --dist-cache-conf /tmp/hadoop-hadoop/nm-local-dir/usercache/hadoop/appcache/application_1658675121201_0408/container_e47_1658675121201_0408_01_000001/__spark_conf__/__spark_dist_cache__.properties

- 3. Start arthas

// Switch to the regular (non-root) user first

java -jar arthas-boot.jar --telnet-port 9998 --http-port -1

Select the entry that corresponds to process 168441.

- 4. Use arthas to check the number of instances

// Run this several times; you can see that the number of instances keeps growing

[arthas@168441]$ vmtool --action getInstances --className *ExecutionListenerBus --limit 10000 --express 'instances.length'

@Integer[2356]

Solution:

Spark's official advice is to upgrade to Spark 3.0.3, which fixes the problem. We were on 3.0.1, and since this is only a patch-level upgrade, replacing the Spark jars was enough. Monitoring the number of ExecutionListenerBus instances again shows that it now fluctuates instead of growing steadily.

We restarted the job and observed it for 2 days; everything was fine. With that, the problem we had spent 5 days investigating was solved.

边栏推荐

- 实验二:Arduino的三色灯实验

- Control buzzer based on C51

- Quanzhi t3/a40i industrial core board, 4-core [email protected] The localization rate reaches 100%

- How much is the report development cost in the application system?

- [upload picture 2-cropable]

- ES6 语法扩展

- Rgbd point cloud down sampling

- Thermistor temperature calculation formula program

- 3D模型格式全解|含RVT、3DS、DWG、FBX、IFC、OSGB、OBJ等70余种

- Resolve the conflict with vetur when using eslint, resulting in double quotation marks and comma at the end of saving

猜你喜欢

Motionlayout -- realize animation in visual editor

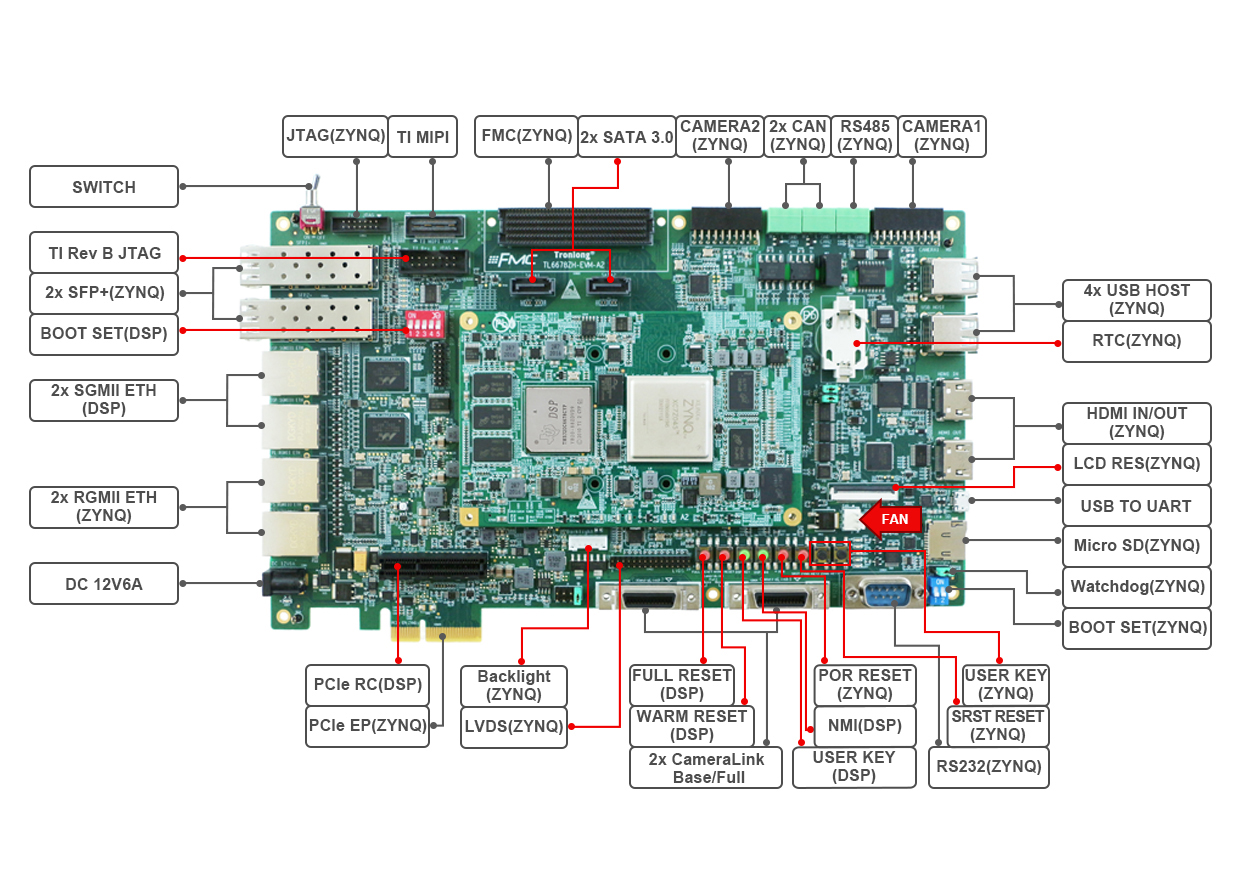

PS + PL heterogeneous multicore case development manual for Ti C6000 tms320c6678 DSP + zynq-7045 (2)

基于对象的实时空间音频渲染丨Dev for Dev 专栏

Internet of things development -- mqtt message server emqx

Prometheus + alertmanager message alert

聊聊 Feign 的实现原理

How to customize a new tab in Duoyu security browser?

Custom MVC principle and framework implementation

“蔚来杯“2022牛客暑期多校训练营2,签到题GJK

基于C51控制蜂鸣器

随机推荐

Ignore wechat font settings

我被这个浏览了 746000 次的问题惊住了

RGBD点云降采样

NPM install reports an error: eperm: operation not permitted, rename

响应式织梦模板装修设计类网站

Click the button to slide to the specified position

Cookie和Session

即时通讯场景下安全合规的实践和经验

QT qstackedwidget multi interface switching

2022.7.28-----leetcode.1331

多边形点测试

[one · data | chained binary tree]

向量相似度评估方法

如果非要在多线程中使用 ArrayList 会发生什么?

基于C51实现数码管的显示

如何利用 RPA 实现自动化获客?

STM32 DMA receives serial port data

什么是作用域和作用域链

autoware中ndtmatching功能加载点云图坐标系修正的问题

Day 15 (VLAN related knowledge)