
Hands-on Deep Learning: GoogLeNet / Inception v1, v2, v3, v4

2022-08-05 11:27:00 【CV Small Rookie】

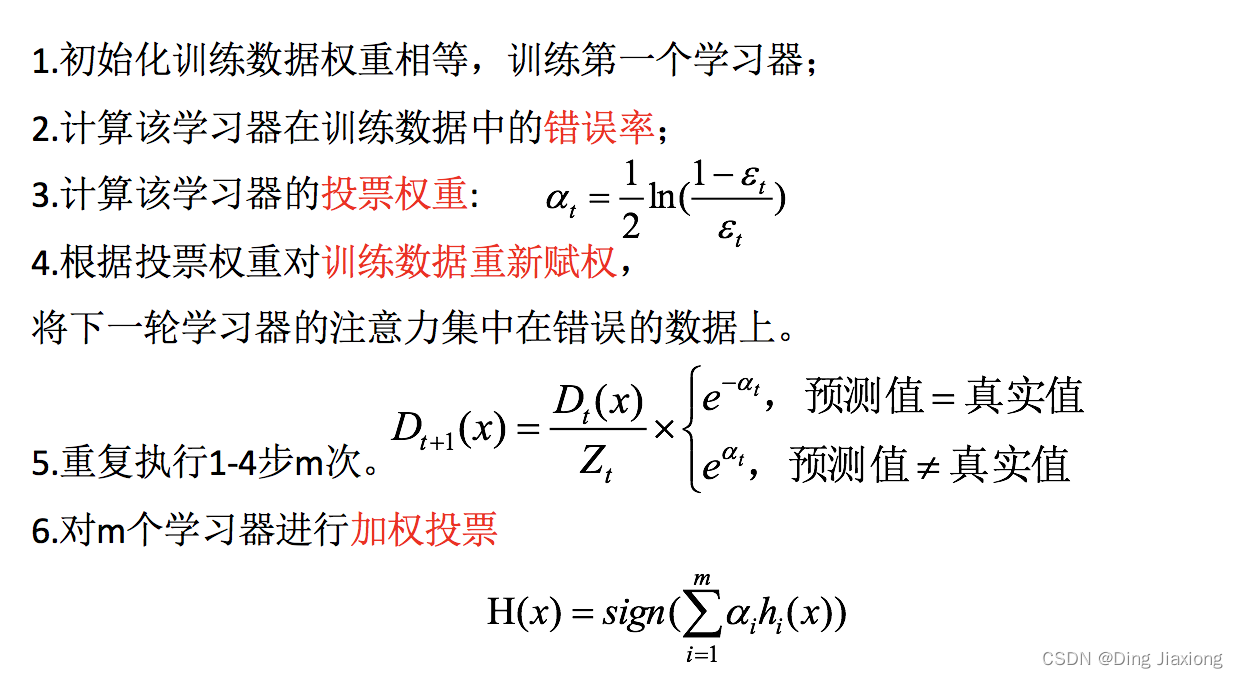

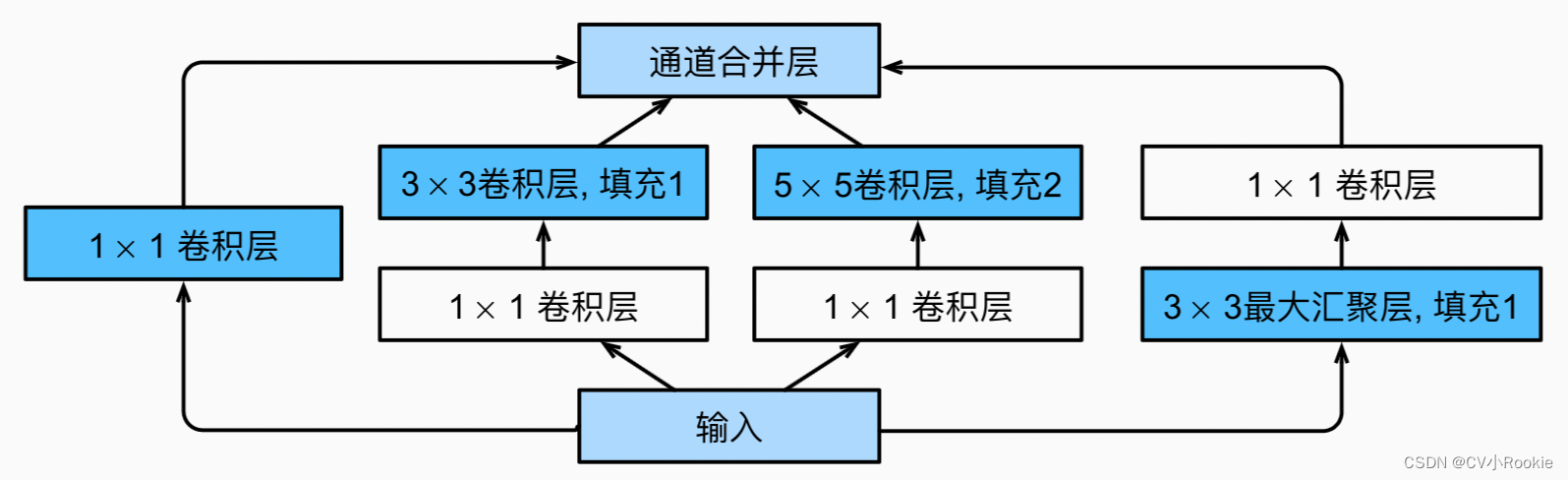

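GoogLeNet builds on the idea of networks connected in series from NiN and improves on it. One focus of the paper is the question of which convolution kernel size works best. After all, previously popular networks used kernels as small as 1 × 1 and as large as 11 × 11. One insight of the paper is that it is sometimes advantageous to combine convolution kernels of different sizes. The code written here is a simplified version of GoogLeNet. I link the original implementation at the end and will not analyze it piece by piece, since the basic idea is the same.

In GoogLeNet, the basic convolutional block is called an Inception block.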

Inception block:

    import torch
    from torch import nn
    from torch.nn import functional as F


    class Inception(nn.Module):
        # c1--c4 are the output channel counts of the four paths
        def __init__(self, in_channels, c1, c2, c3, c4, **kwargs):
            super(Inception, self).__init__(**kwargs)
            # Path 1: a single 1x1 convolutional layer
            self.p1_1 = nn.Conv2d(in_channels, c1, kernel_size=1)
            # Path 2: a 1x1 convolutional layer followed by a 3x3 convolutional layer
            self.p2_1 = nn.Conv2d(in_channels, c2[0], kernel_size=1)
            self.p2_2 = nn.Conv2d(c2[0], c2[1], kernel_size=3, padding=1)
            # Path 3: a 1x1 convolutional layer followed by a 5x5 convolutional layer
            self.p3_1 = nn.Conv2d(in_channels, c3[0], kernel_size=1)
            self.p3_2 = nn.Conv2d(c3[0], c3[1], kernel_size=5, padding=2)
            # Path 4: a 3x3 max-pooling layer followed by a 1x1 convolutional layer
            self.p4_1 = nn.MaxPool2d(kernel_size=3, stride=1, padding=1)
            self.p4_2 = nn.Conv2d(in_channels, c4, kernel_size=1)

        def forward(self, x):
            p1 = F.relu(self.p1_1(x))
            p2 = F.relu(self.p2_2(F.relu(self.p2_1(x))))
            p3 = F.relu(self.p3_2(F.relu(self.p3_1(x))))
            p4 = F.relu(self.p4_2(self.p4_1(x)))
            # Concatenate the outputs along the channel dimension
            return torch.cat((p1, p2, p3, p4), dim=1)
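
To make the channel bookkeeping concrete, here is a minimal sketch in plain Python (no PyTorch needed). It computes the number of output channels of an Inception block and compares the parameter count of the 5 × 5 path with and without its 1 × 1 reduction layer. The helper names `conv_params` and `inception_out_channels` are hypothetical, and the example configuration `(192, 64, (96, 128), (16, 32), 32)` is the one used for the first Inception block of Stage 3 later in this post.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus biases of a square 2D convolution (hypothetical helper)."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels


def inception_out_channels(c1, c2, c3, c4):
    """Channels after concatenating the four paths along the channel dimension."""
    return c1 + c2[1] + c3[1] + c4


in_channels, c1, c2, c3, c4 = 192, 64, (96, 128), (16, 32), 32

out_channels = inception_out_channels(c1, c2, c3, c4)

# Path 3 as written in the block: 1x1 reduction to c3[0], then 5x5 to c3[1]
with_reduction = conv_params(in_channels, c3[0], 1) + conv_params(c3[0], c3[1], 5)
# Hypothetical alternative: a 5x5 convolution applied directly to the input
without_reduction = conv_params(in_channels, c3[1], 5)

print(out_channels)       # 256
print(with_reduction)     # 15920
print(without_reduction)  # 153632
```

Under these assumptions, the 1 × 1 layer cuts the parameters of the 5 × 5 path by roughly a factor of ten, which is one reason paths 2 and 3 reduce the channel count before their larger convolutions.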

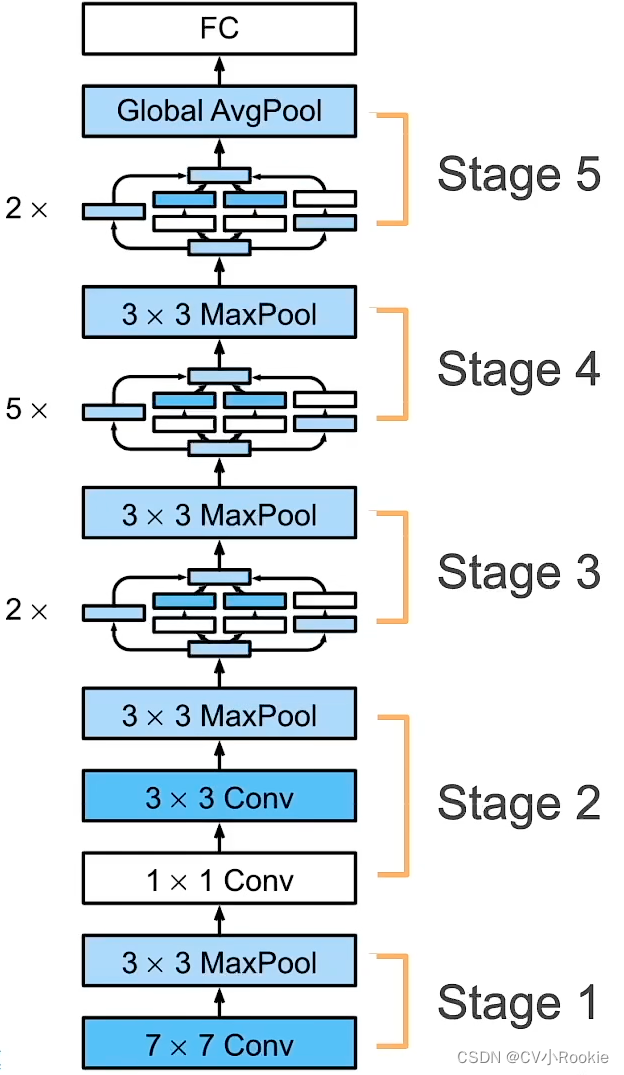

This is the simplified version of GoogLeNet that we implement today. We divide it into 5 blocks, treating each halving of the height and width as one stage.

Stage 1: a 7 × 7 convolutional layer with 64 output channels. (We still use MNIST here, so the input has 1 channel and the final output has 10 classes.)

    b1 = nn.Sequential(nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),
                       nn.ReLU(),
                       nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

Stage 2: a 1 × 1 convolutional layer with 64 output channels, followed by a 3 × 3 convolutional layer with 192 output channels.

    b2 = nn.Sequential(nn.Conv2d(64, 64, kernel_size=1),
                       nn.ReLU(),
                       nn.Conv2d(64, 192, kernel_size=3, padding=1),
                       nn.ReLU(),
                       nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

Stage 3:

The first Inception block has 64 + 128 + 32 + 32 = 256 output channels (paths listed from left to right). Paths 2 and 3 first reduce the number of input channels to 96 and 16, respectively, and then apply their second convolutional layer.

The second Inception block has 128 + 192 + 96 + 64 = 480 output channels. Paths 2 and 3 first reduce the number of input channels to 128 and 32, respectively, and then apply their second convolutional layer.

    b3 = nn.Sequential(Inception(192, 64, (96, 128), (16, 32), 32),
                       Inception(256, 128, (128, 192), (32, 96), 64),
                       nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

Stage 4: the fourth block is more complex. It chains 5 Inception blocks in series.

The first Inception block has 192 + 208 + 48 + 64 = 512 output channels. Paths 2 and 3 first reduce the number of input channels to 96 and 16, respectively, and then apply their second convolutional layer.

The second Inception block has 160 + 224 + 64 + 64 = 512 output channels. Paths 2 and 3 first reduce the number of input channels to 112 and 24, respectively, and then apply their second convolutional layer.

The third Inception block has 128 + 256 + 64 + 64 = 512 output channels. Paths 2 and 3 first reduce the number of input channels to 128 and 24, respectively, and then apply their second convolutional layer.

The fourth Inception block has 112 + 288 + 64 + 64 = 528 output channels. Paths 2 and 3 first reduce the number of input channels to 144 and 32, respectively, and then apply their second convolutional layer.

The fifth Inception block has 256 + 320 + 128 + 128 = 832 output channels. Paths 2 and 3 first reduce the number of input channels to 160 and 32, respectively, and then apply their second convolutional layer.

    b4 = nn.Sequential(Inception(480, 192, (96, 208), (16, 48), 64),
                       Inception(512, 160, (112, 224), (24, 64), 64),
                       Inception(512, 128, (128, 256), (24, 64), 64),
                       Inception(512, 112, (144, 288), (32, 64), 64),
                       Inception(528, 256, (160, 320), (32, 128), 128),
                       nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

Stage 5:

The first Inception block has 256 + 320 + 128 + 128 = 832 output channels. Paths 2 and 3 first reduce the number of input channels to 160 and 32, respectively, and then apply their second convolutional layer.

The second Inception block has 384 + 384 + 128 + 128 = 1024 output channels. Paths 2 and 3 first reduce the number of input channels to 192 and 48, respectively, and then apply their second convolutional layer.

These blocks are followed by a global average pooling layer, which reduces the height and width of each channel to 1 × 1, and finally by a Flatten layer.
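
As a rough illustration of what global average pooling followed by flattening computes, here is a plain-Python sketch on nested lists. It is not how `nn.AdaptiveAvgPool2d` is implemented. `global_avg_pool` is a hypothetical helper, and the 2-channel, 3 × 3 input is made up for the example.

```python
def global_avg_pool(feature_map):
    """Average each channel's H x W grid down to a single value.

    feature_map is a nested list indexed as [channel][row][column].
    """
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


# Toy input: 2 channels, each 3 x 3 (the real Stage 5 input has far more channels)
x = [
    [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]],
    [[0.0, 0.0, 0.0], [0.0, 9.0, 0.0], [0.0, 0.0, 0.0]],
]

flat = global_avg_pool(x)
print(flat)  # [5.0, 1.0]
```

Each channel collapses to one number, so a feature map with C channels becomes a vector of length C. That vector is what the final linear layer consumes.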

    b5 = nn.Sequential(Inception(832, 256, (160, 320), (32, 128), 128),
                       Inception(832, 384, (192, 384), (48, 128), 128),
                       nn.AdaptiveAvgPool2d((1, 1)),
                       nn.Flatten())

    net = nn.Sequential(b1, b2, b3, b4, b5, nn.Linear(1024, 10))

Printing the output shape of each block gives:

    Sequential output shape: torch.Size([1, 64, 24, 24])
    Sequential output shape: torch.Size([1, 192, 12, 12])
    Sequential output shape: torch.Size([1, 480, 6, 6])
    Sequential output shape: torch.Size([1, 832, 3, 3])
    Sequential output shape: torch.Size([1, 1024])
    Linear output shape: torch.Size([1, 10])
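
These shapes can be cross-checked by hand without running the network. The sketch below, in plain Python, applies the usual output-size formula `(n + 2 * padding - kernel_size) // stride + 1` for convolution and max-pooling layers and sums the path channels of each Inception block. The helper names are hypothetical. The 96 × 96 input size is an assumption: the post does not state it, and it is inferred from the 24 × 24 output of the first block.

```python
def out_size(n, kernel_size, stride=1, padding=0):
    """Output height/width of a conv or max-pool layer (floor mode)."""
    return (n + 2 * padding - kernel_size) // stride + 1


def inception_out_channels(c1, c2, c3, c4):
    """Channels after concatenating the four Inception paths."""
    return c1 + c2[1] + c3[1] + c4


# Inception configurations copied from b3, b4 and b5: (in_channels, c1, c2, c3, c4)
stages = {
    3: [(192, 64, (96, 128), (16, 32), 32),
        (256, 128, (128, 192), (32, 96), 64)],
    4: [(480, 192, (96, 208), (16, 48), 64),
        (512, 160, (112, 224), (24, 64), 64),
        (512, 128, (128, 256), (24, 64), 64),
        (512, 112, (144, 288), (32, 64), 64),
        (528, 256, (160, 320), (32, 128), 128)],
    5: [(832, 256, (160, 320), (32, 128), 128),
        (832, 384, (192, 384), (48, 128), 128)],
}

size = 96  # ASSUMPTION: input height/width, inferred from the printed 24 x 24
shapes = []

# Stage 1: 7x7 conv (stride 2, padding 3), then 3x3 max pool (stride 2, padding 1)
size = out_size(size, 7, stride=2, padding=3)
size = out_size(size, 3, stride=2, padding=1)
channels = 64
shapes.append((channels, size, size))

# Stage 2: the 1x1 and padded 3x3 convs keep the size; the max pool halves it
size = out_size(size, 1)
size = out_size(size, 3, padding=1)
size = out_size(size, 3, stride=2, padding=1)
channels = 192
shapes.append((channels, size, size))

# Stages 3-5: Inception blocks keep the size and change only the channel count
for stage in (3, 4, 5):
    for in_channels, c1, c2, c3, c4 in stages[stage]:
        assert in_channels == channels, "block input must match previous output"
        channels = inception_out_channels(c1, c2, c3, c4)
    if stage < 5:
        size = out_size(size, 3, stride=2, padding=1)  # max pool ends stages 3 and 4
        shapes.append((channels, size, size))
    else:
        shapes.append((channels,))  # global average pooling + flatten

for shape in shapes:
    print(shape)
```

With the assumed 96 × 96 input, the computed shapes match the printed ones (ignoring the batch dimension), and the internal assertion confirms that every Inception block's `in_channels` equals the channel sum of the block before it.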

I have put the code for Inception v1, v2, v3, and v4 here. Download it if you need it: Inception
