当前位置:网站首页>Batch normalization batch_ normalization

Batch normalization batch_ normalization

2022-07-26 17:08:00 【Full stack programmer webmaster】

Hello everyone , I meet you again , I'm the king of the whole stack

In order to solve the problem of reducing gradient disappearance at the initial stage of deep neural network training / Explosion problem ,Sergey loffe and Christian Szegedy A scheme using batch normalization technology is proposed , This technique involves adding an operation to the model before each layer activates the function , Simple zero centering and normalized input , Then pass the two new parameters of each layer ( A zoom , Another mobile ) Zoom and move results , Words and sentences , This operation enables the model to learn the best model and the average value of input at each layer

Batch normalization principle

(1)\(\mu_B = \frac{1}{m_B}\sum_{i=1}^{m_B}x^{(i)}\) # Experience average , Evaluate the whole small batch B

(2)\(\theta_B = \frac{1}{m_B}\sum_{i=1}^{m_b}(x^{(i)} – \mu_B)^2\) # Evaluate the whole small batch B The variance of

(3)\(x_{(i)}^* = \frac{x^{(i)} – \mu_B}{\sqrt{\theta_B^2+\xi}}\)# Zero centralization and normalization

(4)\(z^{(i)} = \lambda x_{(i)}^* + \beta\)# Zoom and move the input

During the test , There is no small batch of data to calculate the empirical mean and standard deviation , All can be simply replaced by the average value and standard deviation of the whole training set , In the process of training, it can be effectively calculated with the average value of changes

however , Batch normalization does add some complexity and running cost to the model , Make the prediction speed of neural network slow , So if inverse requires fast prediction , It may be necessary to check the following before batch normalization ELU+He How does initialization behave

tf.layers.batch_normalization Use

The function prototype

def batch_normalization(inputs,

axis=-1,

momentum=0.99,

epsilon=1e-3,

center=True,

scale=True,

beta_initializer=init_ops.zeros_initializer(),

gamma_initializer=init_ops.ones_initializer(),

moving_mean_initializer=init_ops.zeros_initializer(),

moving_variance_initializer=init_ops.ones_initializer(),

beta_regularizer=None,

gamma_regularizer=None,

beta_constraint=None,

gamma_constraint=None,

training=False,

trainable=True,

name=None,

reuse=None,

renorm=False,

renorm_clipping=None,

renorm_momentum=0.99,

fused=None,

virtual_batch_size=None,

adjustment=None):Precautions for use

(1) Use batch_normalization It takes three steps :

a. Set the activation function at the convolution layer to None

b. Use batch_normalization

c. Use the activation function to activate

Example :

inputs = tf.layers.dense(inputs,self.n_neurons,

kernel_initializer=self.initializer,

name = 'hidden%d'%(layer+1))

if self.batch_normal_momentum:

inputs = tf.layers.batch_normalization(inputs,momentum=self.batch_normal_momentum,train=self._training)

inputs = self.activation(inputs,name = 'hidden%d_out'%(layer+1))(2) During the training , The parameter training Set to True, At testing time , take training Set to False, At the same time, pay special attention to update_ops Use

update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)

Need to be updated every time you train , have access to sess.run(update_ops)

It's fine too :

with tf.control_dependencies(update_ops):

train_op = tf.train.AdamOptimizer(learning_rate).minimize(loss)Use mnist Data sets for simple testing

from tensorflow.examples.tutorials.mnist import input_data

import tensorflow as tf

import numpy as npmnist = input_data.read_data_sets('MNIST_data',one_hot=True)

x_train,y_train = mnist.train.images,mnist.train.labels

x_test,y_test = mnist.test.images,mnist.test.labelsExtracting MNIST_data\train-images-idx3-ubyte.gz

Extracting MNIST_data\train-labels-idx1-ubyte.gz

Extracting MNIST_data\t10k-images-idx3-ubyte.gz

Extracting MNIST_data\t10k-labels-idx1-ubyte.gzhe_init = tf.contrib.layers.variance_scaling_initializer()

def dnn(inputs,n_hiddens=1,n_neurons=100,initializer=he_init,activation=tf.nn.elu,batch_normalization=None,training=None):

for layer in range(n_hiddens):

inputs = tf.layers.dense(inputs,n_neurons,kernel_initializer=initializer,name = 'hidden%d'%(layer+1))

if batch_normalization is not None:

inputs = tf.layers.batch_normalization(inputs,momentum=batch_normalization,training=training)

inputs = activation(inputs,name = 'hidden%d'%(layer+1))

return inputstf.reset_default_graph()

n_inputs = 28*28

n_hidden = 100

n_outputs = 10

X = tf.placeholder(tf.float32,shape=(None,n_inputs),name='X')

Y = tf.placeholder(tf.int32,shape=(None,n_outputs),name='Y')

training = tf.placeholder_with_default(False,shape=(),name='tarining')

dnn_outputs = dnn(X)

logits = tf.layers.dense(dnn_outputs,n_outputs,kernel_initializer = he_init,name='logits')

y_proba = tf.nn.softmax(logits,name='y_proba')

xentropy = tf.nn.softmax_cross_entropy_with_logits(labels=Y,logits=y_proba)

loss = tf.reduce_mean(xentropy,name='loss')

train_op = tf.train.AdamOptimizer(learning_rate=0.01).minimize(loss)

correct = tf.equal(tf.argmax(Y,1),tf.argmax(y_proba,1))

accuracy = tf.reduce_mean(tf.cast(correct,tf.float32))

epoches = 20

batch_size = 100

np.random.seed(42)

init = tf.global_variables_initializer()

rnd_index = np.random.permutation(len(x_train))

n_batches = len(x_train) // batch_size

with tf.Session() as sess:

sess.run(init)

for epoch in range(epoches):

for batch_index in np.array_split(rnd_index,n_batches):

x_batch,y_batch = x_train[batch_index],y_train[batch_index]

feed_dict = {X:x_batch,Y:y_batch,training:True}

sess.run(train_op,feed_dict=feed_dict)

loss_val,accuracy_val = sess.run([loss,accuracy],feed_dict={X:x_test,Y:y_test,training:False})

print('epoch:{},loss:{},accuracy:{}'.format(epoch,loss_val,accuracy_val))Publisher : Full stack programmer stack length , Reprint please indicate the source :https://javaforall.cn/120021.html Link to the original text :https://javaforall.cn

边栏推荐

- [untitled]

- Use verdaccio to build your own NPM private library

- Idea Alibaba cloud multi module deployment

- 导数、微分、偏导数、全微分、方向导数、梯度的定义与关系

- Relationship between standardization, normalization and regularization

- 2022-2023 信息管理毕业设计选题题目推荐

- Wechat applet - network data request

- C # method to read the text content of all files in the local folder

- Packet capturing and streaming software and network diagnosis

- There are six ways to help you deal with the simpledateformat class, which is not a thread safety problem

猜你喜欢

Matlab论文插图绘制模板第40期—带偏移扇区的饼图

【飞控开发基础教程2】疯壳·开源编队无人机-定时器(LED 航情灯、指示灯闪烁)

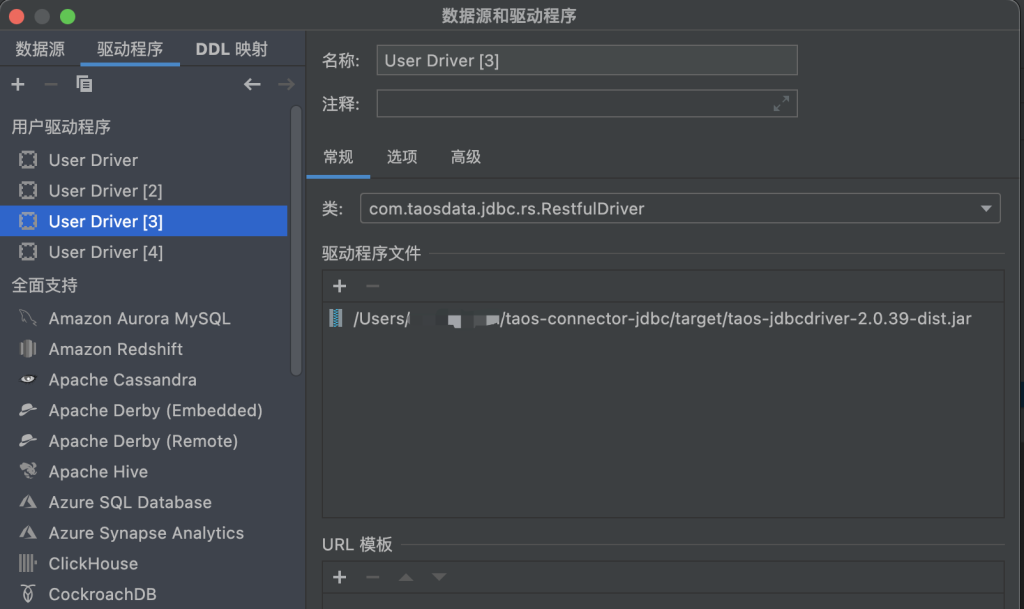

How to connect tdengine with idea database tool?

NUC 11 build esxi 7.0.3f install network card driver-v2 (upgraded version in July 2022)

Oracle创建表分区后,查询的时候不给出partition,但是会给分区字段指定的值,会不会自动按照分区查询?

【开发教程9】疯壳·ARM功能手机-I2C教程

Win11如何关闭共享文件夹

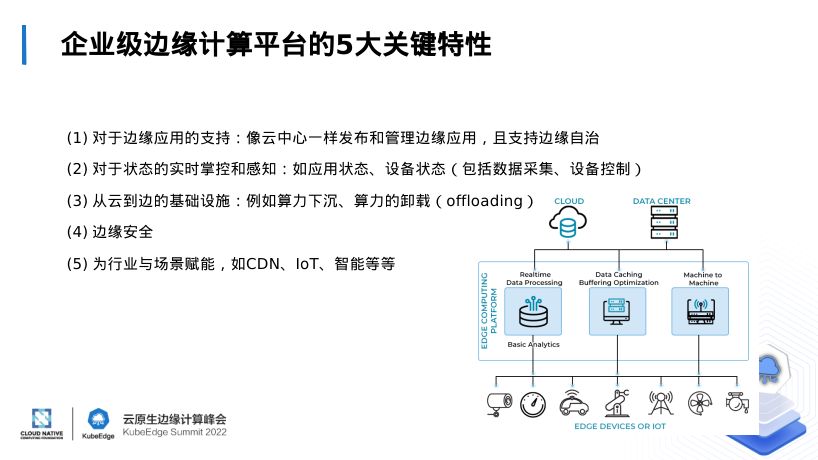

浅谈云原生边缘计算框架演进

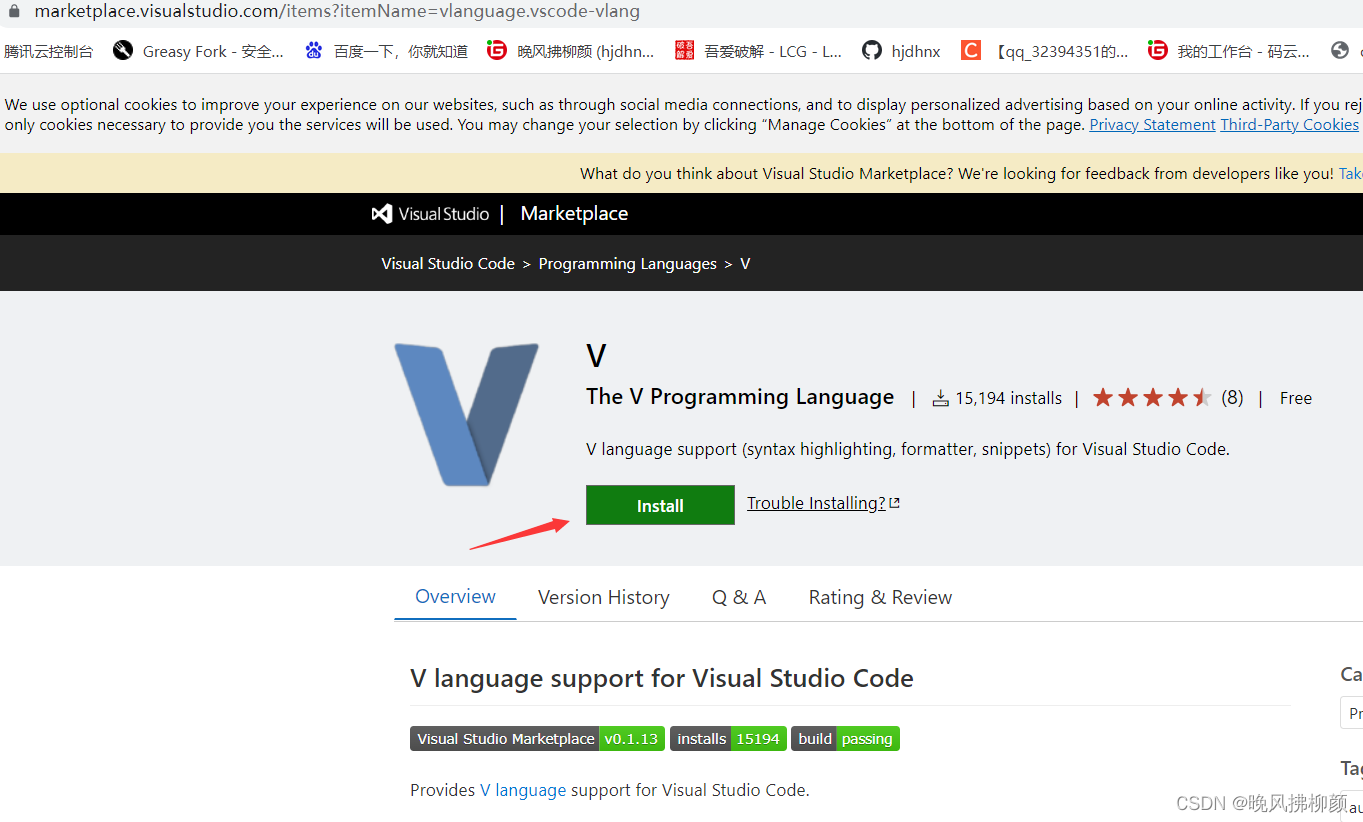

Vlang's way of beating drums

How does win11 reinstall the system?

随机推荐

【飞控开发基础教程1】疯壳·开源编队无人机-GPIO(LED 航情灯、信号灯控制)

IDEA 阿里云多模块部署

37. [categories of overloaded operators]

Merge multiple row headers based on apache.poi operation

第一章概述-------第一节--1.3互联网的组成

mysql锁机制(举例说明)

【无标题】

极大似然估计

Win11怎么重新安装系统?

Are CRM and ERP the same thing? What's the difference?

Vs2017 opens the project and prompts the solution of migration

Guetzli simple to use

Use verdaccio to build your own NPM private library

Using MySQL master-slave replication delay to save erroneously deleted data

PyQt5快速开发与实战 3.2 布局管理入门 and 3.3 Qt Designer实战应用

How to use C language nested linked list to realize student achievement management system

【Express接收Get、Post、路由请求参数】

【开发教程9】疯壳·ARM功能手机-I2C教程

利用MySQL主从复制延迟拯救误删数据

Thinkphp历史漏洞复现