
FPN network details

2022-07-01 16:20:00 【Full stack programmer webmaster】


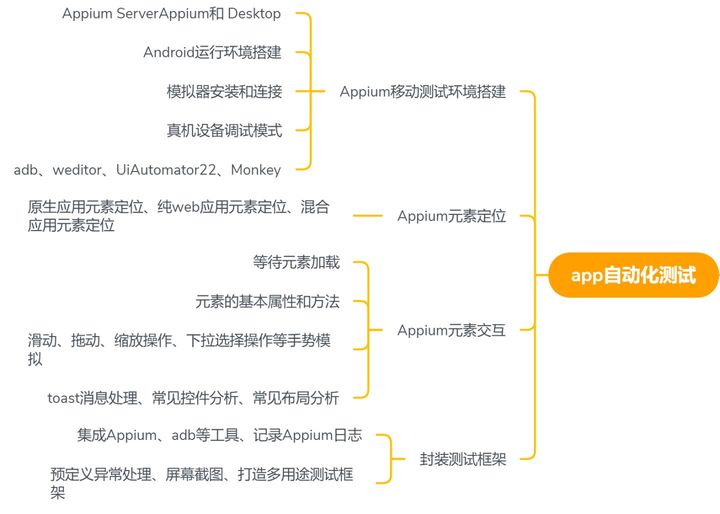

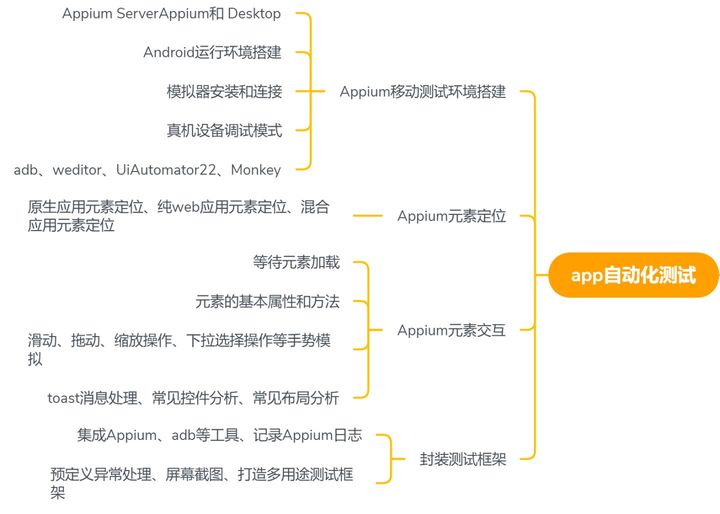

The Feature Pyramid Network (FPN), proposed in 2017, mainly addresses the multi-scale problem in object detection. With a simple change to the network's connections and almost no extra computation over the original model, it greatly improves small-object detection performance.

Low-level features carry little semantic information but locate objects accurately; high-level features are semantically rich but locate objects only coarsely. In addition, although some algorithms already use multi-scale feature fusion, they usually make predictions on the fused features at a single level. FPN differs in that predictions are made independently on each feature level.

I. Comparison of various network structures

1. The typical CNN structure, shown in the figure below

Figure 1

The network above applies convolutions from bottom to top and then predicts using only the last feature map. SPP-Net, Fast R-CNN and Faster R-CNN all work this way, i.e., they use only the features of the network's last layer.

Take VGG16 as an example with feat_stride=16. If the original image is 1000×600, the deepest feature map is roughly 60×40, so each pixel of that feature map corresponds to a 16×16 region of the original image. If the original image contains an object smaller than 16×16, won't it be ignored and go undetected?

So the drawback of this network is a sharp drop in small-object detection performance.
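A quick sketch of the stride arithmetic above, assuming each of VGG16's four stride-2 max-pools rounds odd sizes down (a framework that rounds up would give 63×38 instead). The helper names are hypothetical, for illustration only:

```python
import math

def feature_map_size(width, height, stride):
    """Size after log2(stride) stride-2 poolings, assuming each one floors odd sizes."""
    for _ in range(int(math.log2(stride))):
        width, height = width // 2, height // 2
    return width, height

def cells_covered(object_size, stride):
    """How many feature-map cells (along one side) an object of object_size pixels spans."""
    return object_size / stride

print(feature_map_size(1000, 600, 16))  # (62, 37): "roughly 60x40"
print(cells_covered(12, 16))            # 0.75: a 12-pixel object is under one cell at stride 16
print(cells_covered(12, 4))             # 3.0: the same object spans 3 cells at stride 4
```

At stride 16 a 12×12 object does not even fill one feature-map cell, while a stride-4 map gives it several cells, which is why finer levels help small objects.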

2. Building a feature pyramid from an image pyramid

Since the single-level detection above loses detail, a natural idea is to train and test with the image at multiple scales. As shown in the figure below, the image is resized to different scales, and a feature map is generated for each scale.

Figure 2

Resizing the image to several scales and extracting a feature map at each scale for prediction gives good results, but it is time-consuming and not practical in real applications. Some algorithms therefore use image pyramids only at test time.
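To see why this is expensive, a rough cost model helps. The sketch assumes that a fully convolutional network's cost grows with the pixel count, so an image resized by a factor s costs about s² times the original; the scale set is a hypothetical example:

```python
def relative_cost(scales):
    """Relative compute of running the same network on each rescaled image.

    Assumes cost is proportional to pixel count, so scale s costs s**2."""
    return sum(s * s for s in scales)

print(relative_cost([1.0]))            # 1.0: single-scale baseline
print(relative_cost([0.5, 1.0, 2.0]))  # 5.25: three scales cost over 5x the baseline
```

Most of the extra cost comes from the upscaled image, which is exactly the scale that small objects need.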

3. Multi-scale feature hierarchy

Figure 3

SSD (Single Shot MultiBox Detector) uses this multi-scale approach. There is no upsampling: features of different scales are taken from different layers of the network and each is used for prediction, so no extra computation is added. However, the authors argue that SSD does not make enough use of low-level features (in SSD, the lowest layer used is VGG's conv4_3), and they believe that sufficiently low-level features are very helpful for detecting small objects.
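To make the "predict independently from several layers" idea concrete, the sketch below counts SSD300's default boxes. The layer list and boxes per location follow the published SSD300 configuration (treat the table as an assumption to verify against the SSD paper). Note that the finest source, conv4_3, is only 38×38 for a 300×300 input:

```python
# (source layer, feature-map side length, default boxes per location) for SSD300
SSD300_SOURCES = [
    ("conv4_3", 38, 4),
    ("fc7", 19, 6),
    ("conv8_2", 10, 6),
    ("conv9_2", 5, 6),
    ("conv10_2", 3, 4),
    ("conv11_2", 1, 4),
]

def count_default_boxes(sources):
    """Each source layer predicts on its own: side*side locations times boxes per location."""
    return sum(side * side * boxes for _, side, boxes in sources)

for name, side, boxes in SSD300_SOURCES:
    print(f"{name}: {side}x{side} map, {side * side * boxes} boxes")
print(count_default_boxes(SSD300_SOURCES))  # 8732
```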

4. FPN (Feature Pyramid Networks)

Figure 4

This is the network discussed in this article. High-level features are upsampled and connected top-down with low-level features through lateral connections, and predictions are made at every level. More on this later; first, let's look at the other two structures.

5. Top-down pyramid without lateral connections (top-down pyramid w/o lateral)

Figure 5

In the figure above, the network has a top-down process , But there is no lateral connection , That is, the downward process does not integrate the original characteristics . The experiment found that this effect is better than the picture 1 The network effect of is worse .

6. Only finest level

Figure 6

The network above has skip connections, and prediction is made on the finest level (the last level of the top-down pathway). In short, after several rounds of upsampling and feature fusion, only the features produced at the last step are used for prediction. The difference from FPN is that it predicts at this one level only.

II. FPN in detail

The authors use ResNet as the backbone network.

The overall structure consists of a bottom-up pathway, a top-down pathway, and lateral connections. The magnified region in the figure shows a lateral connection. There, the 1×1 convolution mainly reduces the number of channels, i.e., the number of feature maps, without changing the spatial size of the feature maps.
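A 1×1 convolution is just a per-pixel linear map across channels. The minimal pure-Python sketch below (feature maps as nested lists; the function name is hypothetical) shows that it changes the channel count while leaving height and width untouched:

```python
def conv1x1(feature, weight, bias=None):
    """Apply a 1x1 convolution to a feature map.

    feature: list of C_in channels, each an H x W list of lists.
    weight:  C_out x C_in matrix; output channel o at pixel (i, j) is
             sum_c weight[o][c] * feature[c][i][j] (+ bias[o]).
    """
    c_in = len(feature)
    h, w = len(feature[0]), len(feature[0][0])
    out = []
    for o, row in enumerate(weight):
        assert len(row) == c_in, "each weight row must have one entry per input channel"
        b = bias[o] if bias is not None else 0.0
        out.append([[b + sum(row[c] * feature[c][i][j] for c in range(c_in))
                     for j in range(w)] for i in range(h)])
    return out

# 4 input channels on a 3x5 map, projected down to 2 channels
x = [[[float(c + i + j) for j in range(5)] for i in range(3)] for c in range(4)]
wt = [[0.25, 0.25, 0.25, 0.25],  # output channel 0: mean of the 4 inputs
      [1.0, 0.0, 0.0, -1.0]]     # output channel 1: channel 0 minus channel 3
y = conv1x1(x, wt)
print(len(y), len(y[0]), len(y[0][0]))  # 2 3 5: fewer channels, same 3x5 size
```

In FPN, this is how each lateral branch projects a bottom-up map with many channels down to a common channel count.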

① Bottom-up pathway:

The bottom-up pathway is the ordinary forward pass of the network; the feature maps computed by the convolutions usually get smaller and smaller.

Specifically, for ResNets we use the feature activations output by the last residual block of each stage. For the outputs of conv2, conv3, conv4 and conv5, we denote the outputs of these last residual blocks as {C2, C3, C4, C5}; they have strides of {4, 8, 16, 32} pixels with respect to the input image. We do not include conv1 in the pyramid because of its large memory footprint.
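The strides above fix each level's spatial size. A small sketch, assuming a ResNet-50 backbone (whose stage outputs have 256, 512, 1024 and 2048 channels) and an input whose sides are divisible by 32; the helper name is hypothetical:

```python
# level name -> (stride relative to the input, channels in a standard ResNet-50)
RESNET50_STAGES = {"C2": (4, 256), "C3": (8, 512), "C4": (16, 1024), "C5": (32, 2048)}

def backbone_shapes(height, width, stages=RESNET50_STAGES):
    """Return (channels, H, W) for each bottom-up level used by the pyramid."""
    return {name: (ch, height // stride, width // stride)
            for name, (stride, ch) in stages.items()}

for name, shape in backbone_shapes(224, 224).items():
    print(name, shape)  # C2 (256, 56, 56) down to C5 (2048, 7, 7)
```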

② Top-down pathway:

The top-down pathway upsamples the more abstract, semantically stronger high-level feature maps, and the lateral connections merge each upsampled result with the same-sized feature map produced by the bottom-up pathway. The two feature maps joined by a lateral connection have the same spatial size, so the precise localization detail of the lower level can be exploited. Concretely, the coarser-resolution feature map is upsampled by a factor of 2 (using nearest-neighbor upsampling, for simplicity), and the upsampled map is merged with the corresponding bottom-up map by element-wise addition. This process is iterated until the finest-resolution map is generated.
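One merge step of the top-down pathway, sketched in pure Python under the assumption that the lateral map has already been projected to the same channel count by its 1×1 convolution (function names are hypothetical):

```python
def upsample_nearest_2x(channel):
    """Nearest-neighbour 2x upsampling of one H x W channel: each value becomes a 2x2 block."""
    out = []
    for row in channel:
        wide = [v for v in row for _ in range(2)]  # repeat each value twice horizontally
        out.append(wide)
        out.append(list(wide))                     # and each row twice vertically
    return out

def merge_top_down(coarse, lateral):
    """Upsample the coarser map 2x and add the same-size lateral map element-wise.

    Both arguments are lists of channels (each an H x W list of lists) and must
    already have the same number of channels."""
    assert len(coarse) == len(lateral), "channel counts must match"
    merged = []
    for c_map, l_map in zip(coarse, lateral):
        up = upsample_nearest_2x(c_map)
        assert len(up) == len(l_map) and len(up[0]) == len(l_map[0]), "spatial sizes must match"
        merged.append([[a + b for a, b in zip(u_row, l_row)]
                       for u_row, l_row in zip(up, l_map)])
    return merged

coarse = [[[1, 2], [3, 4]]]               # 1 channel, 2x2
lateral = [[[10] * 4 for _ in range(4)]]  # 1 channel, 4x4
print(merge_top_down(coarse, lateral)[0])
# [[11, 11, 12, 12], [11, 11, 12, 12], [13, 13, 14, 14], [13, 13, 14, 14]]
```

The size assertion fires when a bottom-up map has an odd size (for example a 49×49 lateral map whose coarser neighbour is 25×25); many implementations avoid this by resizing directly to the lateral map's size instead of using a fixed factor of 2.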

To start the iteration, we simply attach a 1×1 convolutional layer to C5 to produce the coarsest-resolution map P5. Finally, a 3×3 convolution is appended to each merged map to generate the final feature map, which reduces the aliasing effect of upsampling. This final set of feature maps is called {P2, P3, P4, P5}, corresponding to {C2, C3, C4, C5} respectively, with the same spatial sizes.
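The final 3×3 convolution uses zero padding so that each P_i keeps the size of its C_i. A single-channel sketch (the real layer mixes all d channels with learned weights; the averaging kernel here is a hypothetical choice) applied to a blocky, nearest-neighbour-upsampled map:

```python
def conv3x3_same(channel, kernel):
    """3x3 convolution (cross-correlation, as in CNN layers), stride 1, zero padding 1.

    The output has the same H x W as the input."""
    h, w = len(channel), len(channel[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    y, x = i + di, j + dj
                    if 0 <= y < h and 0 <= x < w:  # positions outside the map act as zero padding
                        acc += kernel[di + 1][dj + 1] * channel[y][x]
            out[i][j] = acc
    return out

blocky = [[1, 1, 2, 2],
          [1, 1, 2, 2],
          [3, 3, 4, 4],
          [3, 3, 4, 4]]                   # 2x nearest-neighbour upsampling of [[1, 2], [3, 4]]
box = [[1 / 9] * 3 for _ in range(3)]   # hypothetical averaging kernel
smoothed = conv3x3_same(blocky, box)
print([[round(v, 2) for v in row] for row in smoothed])  # still 4x4, block edges softened
```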

Because all levels of the pyramid share the same classifiers/regressors, as in a traditional featurized image pyramid, we fix the feature dimension (the number of channels, denoted d) in all the feature maps. In this article d = 256, so all the extra convolutional layers have 256-channel outputs.
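Putting the pieces together at the level of shapes only: the sketch below walks the top-down pathway for a hypothetical 800×800 input to a ResNet-50 backbone and checks that every P_i has d = 256 channels and the same size as C_i, which is what lets one shared head run on every level. The function name is hypothetical:

```python
D = 256  # fixed pyramid channel count from the text

def fpn_output_shapes(c_shapes, d=D):
    """Shape-level walk of the top-down pathway.

    c_shapes: dict like {"C2": (channels, H, W), ...}. Each lateral 1x1 conv maps
    C_i to d channels, the coarser merged map is upsampled 2x and added, and the
    3x3 output conv keeps d channels and the spatial size."""
    levels = sorted(c_shapes, key=lambda name: int(name[1:]), reverse=True)  # C5, C4, ...
    p_shapes, prev = {}, None
    for name in levels:
        _, h, w = c_shapes[name]
        lateral = (d, h, w)                     # after the 1x1 lateral conv
        if prev is not None:
            up = (d, prev[1] * 2, prev[2] * 2)  # nearest-neighbour 2x upsampling
            assert up == lateral, f"size mismatch at {name}: {up} vs {lateral}"
        prev = lateral                          # element-wise sum keeps the shape
        p_shapes["P" + name[1:]] = (d, h, w)    # 3x3 conv: same size, d channels
    return p_shapes

c = {"C2": (256, 200, 200), "C3": (512, 100, 100), "C4": (1024, 50, 50), "C5": (2048, 25, 25)}
for name, shape in sorted(fpn_output_shapes(c).items()):
    print(name, shape)
```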

③ Lateral connections:

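A 1×1 convolution is applied to each bottom-up map to reduce its number of channels (feature maps) to d, so that it can be added element-wise to the upsampled top-down map.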

III. Experimental results of adding FPN to different networks

On one hand, the authors use FPN in RPN to generate proposals. The original RPN takes the feature map output by one convolutional layer of the backbone as its input, i.e., it uses features at a single scale only. With FPN embedded in RPN, features at several scales are generated and fused, and the RPN head is applied to every level of the resulting pyramid, with its parameters shared across levels. Each level is assigned anchors of a single size: for P2, P3, P4, P5 and P6 the anchor areas are 32², 64², 128², 256² and 512² respectively (P6 is a stride-2 subsampling of P5, added only to cover the 512² anchors), and every level uses 3 aspect ratios: 1:2, 1:1 and 2:1. The whole feature pyramid therefore has 15 kinds of anchors.
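The anchor configuration above can be enumerated directly. This sketch assumes the aspect ratio means height/width and keeps each anchor's area equal to its level's size squared; the names are illustrative:

```python
import math

ANCHOR_SIZES = {"P2": 32, "P3": 64, "P4": 128, "P5": 256, "P6": 512}  # anchor area = size**2
ASPECT_RATIOS = (0.5, 1.0, 2.0)  # height / width, i.e. 1:2, 1:1, 2:1 (assumed convention)

def anchor_shapes(sizes=ANCHOR_SIZES, ratios=ASPECT_RATIOS):
    """Return {level: [(w, h), ...]} with w * h == size**2 and h / w == ratio."""
    return {level: [(size / math.sqrt(r), size * math.sqrt(r)) for r in ratios]
            for level, size in sizes.items()}

shapes = anchor_shapes()
print(sum(len(v) for v in shapes.values()))              # 15 anchor shapes in total
print([(round(w), round(h)) for w, h in shapes["P4"]])   # [(181, 91), (128, 128), (91, 181)]
```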

Positive and negative samples are defined almost as in Faster R-CNN: an anchor is positive if it has the highest IoU with a given ground-truth box, or if its IoU with any ground-truth box is greater than 0.7. An anchor is negative if its IoU with every ground-truth box is less than 0.3.
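A sketch of this labeling rule with boxes given as (x1, y1, x2, y2). Two simplifications are assumptions here: each ground truth marks a single highest-IoU anchor as positive (ties are not all kept), and that rule applies only when the best IoU is above zero. Anchors that are neither positive nor negative get -1 and are ignored during training:

```python
def iou(a, b):
    """Intersection over union of two boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def label_anchors(anchors, gts, pos_thresh=0.7, neg_thresh=0.3):
    """Return 1 (positive), 0 (negative) or -1 (ignored) for each anchor."""
    if not anchors:
        return []
    ious = [[iou(a, g) for g in gts] for a in anchors]
    labels = []
    for row in ious:
        best = max(row, default=0.0)
        if best > pos_thresh:
            labels.append(1)
        elif best < neg_thresh:
            labels.append(0)
        else:
            labels.append(-1)
    # each ground truth's highest-IoU anchor is positive even if below pos_thresh
    for g in range(len(gts)):
        k = max(range(len(anchors)), key=lambda a: ious[a][g])
        if ious[k][g] > 0:
            labels[k] = 1
    return labels

gts = [(0, 0, 10, 10), (100, 100, 110, 110)]
anchors = [(0, 0, 10, 10), (0, 0, 10, 20), (20, 20, 30, 30), (100, 100, 110, 125)]
print(label_anchors(anchors, gts))  # [1, -1, 0, 1]: the last anchor (IoU 0.4) wins its ground truth
```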

The results of RPN with FPN are shown in Table 1 below. All results are based on ResNet-50. The metric is AR (Average Recall): the superscript 100 or 1k on AR denotes 100 or 1000 proposals per image, and the subscripts s, m and l denote small, medium and large objects in the COCO dataset (area below 32², between 32² and 96², and above 96² pixels). Braces {} in the feature column mean that each level makes predictions independently.
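For reference, a simplified COCO-style AR computation: given, for each ground-truth object, the best IoU reached by any of the top-K proposals (K = 100 or 1000), recall is averaged over IoU thresholds 0.50 to 0.95 in steps of 0.05. The official COCO evaluation also performs one-to-one matching and aggregates over the whole dataset, which this sketch omits; the input values are made-up numbers for illustration:

```python
def recall_at_iou(best_ious, thresh):
    """Fraction of ground-truth objects whose best proposal reaches IoU >= thresh."""
    return sum(1 for v in best_ious if v >= thresh) / len(best_ious)

def average_recall(best_ious):
    """Mean recall over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.5 + 0.05 * k for k in range(10)]
    return sum(recall_at_iou(best_ious, t) for t in thresholds) / len(thresholds)

# best IoU per ground-truth object among the top-K proposals (illustrative values)
print(round(average_recall([0.97, 0.72, 0.3]), 3))  # 0.5
```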

Table 1

Comparing (a), (b) and (c) shows that FPN's improvement is substantial. Comparing (a) with (b) also shows that higher-level features are not necessarily more effective than lower-level ones. (d) has lateral connections but no top-down pathway: each bottom-up output simply goes through a 1×1 lateral convolution and a 3×3 convolution to produce the final result. As the feature column shows, prediction is still done independently at each level, similar to the structure in Figure 3. The authors attribute the poor result of (d) to the large semantic gaps between the different levels of the bottom-up pathway. (e) has a top-down pathway but no lateral connections, i.e., the top-down pathway does not merge in the original features, similar to the structure in Figure 5. It performs poorly because object locations become less accurate after repeated downsampling and upsampling. (f) predicts only on the finest level (the structure in Figure 6), i.e., it uses only the features generated at the last step after repeated upsampling and fusion; this comparison mainly demonstrates the value of independent, per-level prediction in the pyramid. The finest level alone is clearly worse than FPN, because RPN is a sliding-window detector with a fixed window size, so sliding it over different pyramid levels makes it more robust to scale variation. Moreover, (f) uses more anchors, which shows that simply increasing the number of anchors does not improve accuracy.

On the other hand, the authors also apply FPN to the detection stage of Fast R-CNN. Except in (a), two 1024-dimensional fully connected layers are added before the final classification and bounding-box regression layers. The results are shown in Table 2. Since this experiment evaluates only Fast R-CNN's detection performance, the proposals are kept fixed (computed as in Table 1(c)). As in Table 1, comparing (a), (b) and (c) shows that for region-based object detectors, a feature pyramid is more effective than single-scale features. The gap between (c) and (f) is small; the authors believe this is because RoI pooling is relatively insensitive to the scale of the region. So one cannot conclude in general that the (f) style of feature fusion is poor. In my own view it depends on the specific problem: in the RPN above, (f) may indeed work poorly, but in Fast R-CNN the difference is much less obvious.
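One detail this post does not cover is how a Fast R-CNN RoI chooses its pyramid level. The FPN paper assigns an RoI of width w and height h to level P_k with k = ⌊k0 + log2(√(wh)/224)⌋ and k0 = 4, so smaller RoIs use finer levels. A sketch of that rule; clamping k to P2..P5 is an added assumption, and the function name is hypothetical:

```python
import math

def roi_pyramid_level(w, h, k0=4, canonical=224, k_min=2, k_max=5):
    """Pyramid level k (meaning P_k) for an RoI of width w and height h in input pixels.

    k = floor(k0 + log2(sqrt(w * h) / canonical)), clamped to [k_min, k_max] (assumption)."""
    k = math.floor(k0 + math.log2(math.sqrt(w * h) / canonical))
    return max(k_min, min(k_max, k))

for w, h in [(32, 32), (112, 112), (224, 224), (448, 448), (1000, 1000)]:
    print((w, h), "-> P%d" % roi_pyramid_level(w, h))
```

A 224×224 RoI, the canonical ImageNet size, maps to P4; halving its side moves it one level finer.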

Table 2

Similarly, FPN is applied to Faster R-CNN; the experimental results are shown in Table 3.

Table 3

Table 4 compares FPN with the top-ranked algorithms from recent COCO competitions. Note that the improvement in small-object detection is particularly clear.

Table 4

IV. Summary

The FPN (Feature Pyramid Network) algorithm proposed by the authors exploits both the high resolution of low-level features and the strong semantics of high-level features, fusing features from different levels to improve prediction. Prediction is then carried out separately on each fused feature level, which works very well.

Publisher: Full-stack programmer webmaster. Please credit the source when reprinting: https://javaforall.cn/131065.html Original link: https://javaforall.cn

边栏推荐

- IM即時通訊開發實現心跳保活遇到的問題

- 投稿开奖丨轻量应用服务器征文活动(5月)奖励公布

- 【php毕业设计】基于php+mysql+apache的教材管理系统设计与实现(毕业论文+程序源码)——教材管理系统

- 部门来了个拿25k出来的00后测试卷王,老油条表示真干不过,已被...

- Nuxt. JS data prefetching

- [nodemon] app crashed - waiting for file changes before starting...解决方法

- In the past six months, it has been invested by five "giants", and this intelligent driving "dark horse" is sought after by capital

- [daily question] 1175 Prime permutation

- process. env. NODE_ ENV

- OJ questions related to complexity (leetcode, C language, complexity, vanishing numbers, rotating array)

猜你喜欢

普通二本,去过阿里外包,到现在年薪40W+的高级测试工程师,我的两年转行心酸经历...

I'm a senior test engineer who has been outsourced by Alibaba and now has an annual salary of 40w+. My two-year career changing experience is sad

【Hot100】17. Letter combination of telephone number

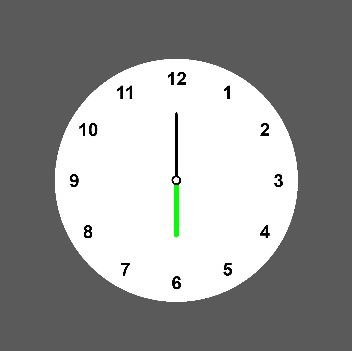

Summer Challenge harmonyos canvas realize clock

Problèmes rencontrés dans le développement de la GI pour maintenir le rythme cardiaque en vie

IM即时通讯开发万人群聊消息投递方案

Authentication processing in interface testing framework

周少剑,很少见

![[IDM] IDM downloader installation](/img/2b/baf8852b422c1c4a18e9c60de864e5.png)

[IDM] IDM downloader installation

What is the digital transformation of manufacturing industry

随机推荐

苹果自研基带芯片再次失败,说明了华为海思的技术领先性

【Hot100】19. Delete the penultimate node of the linked list

Microservice tracking SQL (support Gorm query tracking under isto control)

开机时小键盘灯不亮的解决方案

实现数字永生还有多久?元宇宙全息真人分身#8i

The sharp drop in electricity consumption in Guangdong shows that the substitution of high-tech industries for high-energy consumption industries has achieved preliminary results

Comment utiliser le langage MySQL pour les appareils de ligne et de ligne?

[daily news]what happened to the corresponding author of latex

从 MLPerf 谈起:如何引领 AI 加速器的下一波浪潮

数据库系统原理与应用教程(006)—— 编译安装 MySQL5.7(Linux 环境)

Five years after graduation, I became a test development engineer with an annual salary of 30w+

Sqlserver query: when a.id is the same as b.id, and the A.P corresponding to a.id cannot be found in the B.P corresponding to b.id, the a.id and A.P will be displayed

Comment win11 définit - il les permissions de l'utilisateur? Win11 comment définir les permissions de l'utilisateur

In the era of super video, what kind of technology will become the base?

Origin2018安装与使用(整理中)

There is a difference between u-standard contract and currency standard contract. Will u-standard contract explode

从大湾区“1小时生活圈”看我国智慧交通建设

Solution to the problem that the keypad light does not light up when starting up

智慧党建: 穿越时空的信仰 | 7·1 献礼

When ABAP screen switching, refresh the previous screen