[Touge] Rebirth: Introduction to Data Science, Advanced Regression

2022-07-27 06:06:00 【Collapse an old face】

Level 1: Simple linear regression

Task description

Our task: write a small gradient descent program and output the value of the loss function.

Programming requirements

Read the code on the right and, using the relevant background knowledge, complete the code inside the Begin-End area so that it outputs the loss function value after each of passes 1 to 10 of gradient descent.
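As a rough, self-contained illustration of what the exercise asks for, the sketch below runs ten passes of per-sample gradient descent on a tiny synthetic data set and records the loss after each pass. The helpers `error` and `sum_of_squared_errors` are named in the exercise, but their bodies are not shown in this post, so the definitions here are assumptions (residual = actual minus predicted). The data, the starting values, and the learning rate are made up for the example.

```python
import random

def predict(w, b, x_i):
    # Linear model: y_hat = w * x + b
    return w * x_i + b

def error(w, b, x_i, y_i):
    # Assumed definition: residual = actual value minus prediction
    return y_i - predict(w, b, x_i)

def sum_of_squared_errors(w, b, x, y):
    # Loss over the whole data set
    return sum(error(w, b, x_i, y_i) ** 2 for x_i, y_i in zip(x, y))

def gradient_descent(x_i, y_i, w, b, alpha):
    # One step on the squared error of a single sample
    e = error(w, b, x_i, y_i)
    dw = -2 * e * x_i
    db = -2 * e
    return w - alpha * dw, b - alpha * db

# Hypothetical data: y is roughly 3x + 2 with a little noise
random.seed(0)
x = [i / 10 for i in range(1, 51)]
y = [3 * x_i + 2 + random.uniform(-0.1, 0.1) for x_i in x]

w, b = 0.0, 0.0
alpha = 0.001
losses = []
for _ in range(10):
    # One pass over every sample, then record the loss
    for x_i, y_i in zip(x, y):
        w, b = gradient_descent(x_i, y_i, w, b, alpha)
    losses.append(sum_of_squared_errors(w, b, x, y))
    print(losses[-1])
```

The printed values should shrink from pass to pass. With the real housing data the inputs are much larger, which is presumably why the exercise uses a far smaller learning rate.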

#********** Begin **********#
# Gradient descent function: replace None with your own code
def gradient_descent(x_i, y_i, w, b, alpha):
    # Gradients of the squared error for one sample (error comes from the scaffold code)
    dw = -2 * error(w, b, x_i, y_i) * x_i
    db = -2 * error(w, b, x_i, y_i)
    # Step against the gradient, scaled by the learning rate alpha
    w = w - alpha * dw
    b = b - alpha * db
    return w, b
#********** End **********#
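The two gradient lines in the function above can be checked by differentiating the squared error of a single sample. The body of `error` is not shown in this post; the derivation below assumes it returns the residual $e_i = y_i - (w x_i + b)$, which is the definition under which the signs in the code come out right.

```latex
\begin{aligned}
e_i &= y_i - (w x_i + b), \qquad L_i = e_i^2 \\
\frac{\partial L_i}{\partial w} &= 2 e_i \cdot \frac{\partial e_i}{\partial w} = -2\, e_i\, x_i \\
\frac{\partial L_i}{\partial b} &= 2 e_i \cdot \frac{\partial e_i}{\partial b} = -2\, e_i \\
w &\leftarrow w - \alpha \frac{\partial L_i}{\partial w}, \qquad
b \leftarrow b - \alpha \frac{\partial L_i}{\partial b}
\end{aligned}
```

Here $\alpha$ is the learning rate, matching `alpha` in the code, and the two partial derivatives correspond to `dw` and `db`.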

# read data
data_path = 'task1/train.csv'
data = pd.read_csv(data_path)

# select property
data = data[['GrLivArea', 'SalePrice']]

# separate train and test data
train = data[:1168]
test = data[1168:]

# a fixed alpha slows down learning; here we use 10 iterations to show the error is decreasing
x = test['GrLivArea'].tolist()
y = test['SalePrice'].tolist()
num_iterations = 10
alpha = 0.0000001
random.seed(0)
w, b = [random.random(), 0]

#********** Begin **********#
# Run gradient descent and output the loss function value: replace None with your own code
for i in range(num_iterations):
    for x_i, y_i in zip(x, y):
        w, b = gradient_descent(x_i, y_i, w, b, alpha)
    print(sum_of_squared_errors(w, b, x, y))
#********** End **********#

Level 2: Multiple linear regression

Task description

Our task: write a small program that fits a multiple linear regression model and outputs the RMSE on the training set and the test set.

Programming requirements

Read the code on the right and, using the relevant background knowledge, complete the code inside the Begin-End area so that it outputs the RMSE on the training set and the test set.

#********** Begin **********#
# The penalty weight is set to 30
# Output the training-set prediction error: replace and complete the None parts
clf = Ridge(alpha=30)
clf.fit(x, y)
y_pred = clf.predict(x)
print(math.sqrt(mean_squared_error(y, y_pred)))
#********** End **********#
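The snippet above prints only the training-set RMSE. As a hedged illustration of what a ridge fit with penalty weight 30 computes, and of how the same model could be scored on both splits, here is a small NumPy sketch using the closed-form solution. It is not the scikit-learn implementation: `fit_ridge` and `rmse` are hypothetical helpers, the data is synthetic, and the 160/40 split is arbitrary. The objective it minimizes (squared error plus `alpha` times the squared norm of the weights, with an unpenalized intercept) is, to my understanding, the one `Ridge` uses by default.

```python
import numpy as np

def fit_ridge(X, y, alpha):
    # Center the data so the intercept is not penalized
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    # Solve (Xc^T Xc + alpha * I) w = Xc^T yc
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    b = y_mean - x_mean @ w
    return w, b

def rmse(y_true, y_pred):
    # Root mean squared error
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# Synthetic data (hypothetical): three features, known weights, small noise
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + 4.0 + rng.normal(scale=0.1, size=200)

X_train, X_test = X[:160], X[160:]
y_train, y_test = y[:160], y[160:]

w, b = fit_ridge(X_train, y_train, alpha=30)
train_rmse = rmse(y_train, X_train @ w + b)
test_rmse = rmse(y_test, X_test @ w + b)
print(train_rmse, test_rmse)
```

With scikit-learn itself, the test-set figure would come from calling `clf.predict` on the held-out features and passing the result through the same `math.sqrt(mean_squared_error(...))` expression.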

Level 3: Neural network regression

Task description

Our task: use Keras to build a regression model.

Programming requirements

Read the code on the right and, using the relevant background knowledge, complete the code inside the Begin-End area to build a regression model with Keras. SalePrice is the target variable.

# filter data
predictors = data.select_dtypes(exclude=['object'])
cols_with_no_nans = []
for col in predictors.columns:
    if not data[col].isnull().any():
        cols_with_no_nans.append(col)
data_filtered = data[cols_with_no_nans]
data = data_filtered[['MSSubClass', 'LotArea', 'OverallQual', 'OverallCond', 'YearBuilt', 'YearRemodAdd', 'BsmtFinSF1', 'BsmtFinSF2', 'BsmtUnfSF', 'TotalBsmtSF', '1stFlrSF', '2ndFlrSF', 'LowQualFinSF', 'GrLivArea', 'BsmtFullBath', 'BsmtHalfBath', 'FullBath', 'HalfBath', 'BedroomAbvGr', 'KitchenAbvGr', 'TotRmsAbvGrd', 'Fireplaces', 'GarageCars', 'GarageArea', 'WoodDeckSF', 'OpenPorchSF', 'EnclosedPorch', '3SsnPorch', 'ScreenPorch', 'PoolArea', 'MiscVal', 'MoSold', 'YrSold', 'SalePrice']]

# separate train and test
train = data[:1168]
test = data[1168:]
y = train[['SalePrice']]
x = train[['MSSubClass', 'LotArea', 'OverallQual', 'OverallCond', 'YearBuilt', 'YearRemodAdd', 'BsmtFinSF1', 'BsmtFinSF2', 'BsmtUnfSF', 'TotalBsmtSF', '1stFlrSF', '2ndFlrSF', 'LowQualFinSF', 'GrLivArea', 'BsmtFullBath', 'BsmtHalfBath', 'FullBath', 'HalfBath', 'BedroomAbvGr', 'KitchenAbvGr', 'TotRmsAbvGrd', 'Fireplaces', 'GarageCars', 'GarageArea', 'WoodDeckSF', 'OpenPorchSF', 'EnclosedPorch', '3SsnPorch', 'ScreenPorch', 'PoolArea', 'MiscVal', 'MoSold', 'YrSold']]

# define model
NN_model = Sequential()  # Define a neural network model
#********* Begin *********#
# Fill in and replace every part marked None

# input layer
# Add the input layer: 128 neurons, normal initialization, l2 regularization (weight 0.1), input dimension taken from the shape of x, relu activation
NN_model.add(Dense(128,  # Fully connected layer
                   kernel_initializer='normal',  # Initialize the weights
                   kernel_regularizer=l2(0.1),  # Set the regularization term
                   input_dim=x.shape[1],  # Define the input dimension
                   activation='relu'))  # Set the activation function

# hidden layers
# Add two layers: each uses 256 neurons, normal initialization, l2 regularization (weight 0.1), and relu activation
NN_model.add(Dense(256, kernel_initializer='normal', kernel_regularizer=l2(0.1), activation='relu'))
NN_model.add(Dense(256, kernel_initializer='normal', kernel_regularizer=l2(0.1), activation='relu'))

# output layer
# Add the output layer: one neuron with a linear activation function
NN_model.add(Dense(1, kernel_initializer='normal', activation='linear'))

# compile and train network
NN_model.compile(loss='mean_squared_error', optimizer='adam', metrics=['mean_squared_error'])  # Use the adaptive learning rate optimizer adam
NN_model.fit(x, y, epochs=500, batch_size=32, validation_split=0.2, verbose=0)  # Number of epochs, batch size, validation split ratio

# evaluate on test set
test_x = test[['MSSubClass', 'LotArea', 'OverallQual', 'OverallCond', 'YearBuilt', 'YearRemodAdd', 'BsmtFinSF1', 'BsmtFinSF2', 'BsmtUnfSF', 'TotalBsmtSF', '1stFlrSF', '2ndFlrSF', 'LowQualFinSF', 'GrLivArea', 'BsmtFullBath', 'BsmtHalfBath', 'FullBath', 'HalfBath', 'BedroomAbvGr', 'KitchenAbvGr', 'TotRmsAbvGrd', 'Fireplaces', 'GarageCars', 'GarageArea', 'WoodDeckSF', 'OpenPorchSF', 'EnclosedPorch', '3SsnPorch', 'ScreenPorch', 'PoolArea', 'MiscVal', 'MoSold', 'YrSold']]
test_y = test[['SalePrice']]
test_y_pred = NN_model.predict(test_x)

# return test error (the enclosing function's def line is part of the scaffold and is not shown in this post)
test_error = math.sqrt(mean_squared_error(test_y, test_y_pred))
return test_error
#********* End *********#
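To make the layer sizes concrete, here is a rough NumPy sketch (not Keras) of the computation the model above describes: 33 input features, then 128, 256, and 256 relu units, then one linear output. The helper names `dense`, `relu`, and `forward` are made up for this example, the inputs are random numbers rather than the housing data, and the standard deviation of 0.05 for the normal initializer is an assumption based on the default of Keras's `RandomNormal`. The penalty line follows the usual definition of an l2 regularizer with weight 0.1, namely 0.1 times the sum of squared kernel entries for each regularized layer.

```python
import numpy as np

rng = np.random.default_rng(0)

def dense(n_in, n_out):
    # Kernel drawn from a normal distribution (stddev 0.05 is assumed), bias starts at zero
    return rng.normal(0.0, 0.05, size=(n_in, n_out)), np.zeros(n_out)

def relu(z):
    return np.maximum(z, 0.0)

n_features = 33  # number of predictor columns selected into x
layers = [dense(n_features, 128), dense(128, 256), dense(256, 256), dense(256, 1)]

def forward(X):
    # relu on the three hidden layers, linear activation on the output layer
    a = X
    for W, b in layers[:-1]:
        a = relu(a @ W + b)
    W, b = layers[-1]
    return a @ W + b

# Trainable parameters: one kernel and one bias vector per layer
n_params = sum(W.size + b.size for W, b in layers)

# l2 penalty with weight 0.1 on the three regularized kernels (the output layer has none)
l2_penalty = sum(0.1 * np.sum(W ** 2) for W, _ in layers[:3])

X = rng.normal(size=(5, n_features))  # five made-up samples
y = rng.normal(size=(5, 1))
pred = forward(X)
loss = float(np.mean((y - pred) ** 2) + l2_penalty)
print(pred.shape, n_params, loss)
```

The training loss that Keras optimizes includes the penalty term, whereas the RMSE computed on the test set in the post measures prediction error only.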

Note: This content is for reference and sharing only. Do not redistribute it without permission; it will be removed if it infringes any rights.

边栏推荐

- 物联网操作系统多任务基础

- 为什么交叉熵损失可以用于刻画损失

- 韦东山 数码相框 项目学习(四)简易的TXT文档显示器(电纸书)

- 韦东山 数码相框 项目学习(三)freetype的移植

- 8. Mathematical operation and attribute statistics

- Day 3. Suicidal ideation and behavior in institutions of higher learning: A latent class analysis

- [song] rebirth of me in py introduction training (5): List

- 【头歌】重生之数据科学导论——回归进阶

- A photo breaks through the face recognition system: you can nod your head and open your mouth, netizens

- 根据SQL必知必会学习SQL(MYSQL)

猜你喜欢

2021-06-26

【Arduino】重生之Arduino 学僧(1)

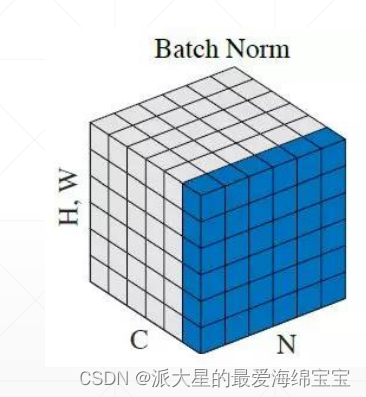

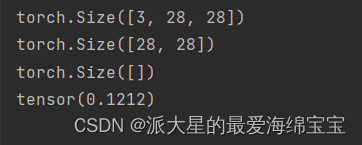

19. Up and down sampling and batchnorm

5. Indexing and slicing

【11】二进制编码:“手持两把锟斤拷,口中疾呼烫烫烫”?

安全帽反光衣检测识别数据集和yolov5模型

13. Logistic regression

15. GPU acceleration, Minist test practice and visdom visualization

17. Attenuation of momentum and learning rate

8. Mathematical operation and attribute statistics

随机推荐

回调使用lambda

Pix2Pix原理解析

【头歌】重生之我在py入门实训中(10): Numpy

pytorch中交叉熵损失函数的细节

Cesium教程 (1) 界面介绍-3dtiles加载-更改鼠标操作设置

STM32-红外遥控

向量和矩阵的范数

数字图像处理——第六章 彩色图像处理

【11】二进制编码:“手持两把锟斤拷,口中疾呼烫烫烫”?

韦东山 数码相框 项目学习(四)简易的TXT文档显示器(电纸书)

【Arduino】重生之Arduino 学僧(1)

[first song] rebirth of me in py introductory training (4): Circular program

Can it replace PS's drawing software?

14. Example - Multi classification problem

[first song] rebirth of me in py introductory training (6): definition and application of functions

百问网驱动大全学习(二)I2C驱动

Weidongshan digital photo frame project learning (III) transplantation of freetype

PS 2022 updated in June, what new functions have been added

10. Gradient, activation function and loss

malloc和new之间的不同-实战篇