
Converting a Tensor to an ndarray in TensorFlow: calling run or eval repeatedly in a loop makes the code slower and slower!

2022-06-12 08:36:00 【A restless wind】

Problem

Here is what I needed. I have a pre-trained encoder model whose output is a Tensor, and I want to convert that output into an ndarray. While searching, I found that sess.run() can convert a Tensor into an ndarray, so I called sess.run() in my code, and the conversion worked.

However, the conversion happens on every loop iteration, so sess.run() is called over and over. That is where the trouble started: each sess.run() call took longer than the one before, so training got slower and slower. The first call took 0.17 s, and by the 100th call it took 0.27 s. That is only 100 calls. With 10,000 training steps there is no telling how long it would take, so this had to be fixed!
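Here is a back-of-the-envelope estimate of what "no telling how long" could mean. It assumes that the per-call time keeps growing linearly at the rate measured above (0.17 s rising to 0.27 s over 100 calls). That linear growth is only an assumption, and the function name is made up for this sketch.

```python
def extrapolate_total_seconds(n_calls, first=0.17, after_100=0.27):
    """Total sess.run time for n_calls, ASSUMING per-call time grows linearly."""
    slope = (after_100 - first) / 100  # assumed extra seconds added per call
    return sum(first + slope * i for i in range(n_calls))


print(round(extrapolate_total_seconds(10000) / 3600, 1))  # 14.4 (hours)
print(round(10000 * 0.17 / 3600, 1))  # 0.5 (hours) if every call stayed at 0.17 s
```

Under that assumption, 10,000 calls would spend roughly 14 hours in sess.run alone. If the time per call had stayed flat, the same calls would take under half an hour.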

Cause

If you keep adding nodes to a TensorFlow graph inside a loop and then run them, TensorFlow gets slower and slower, because the graph grows on every iteration. The details are in the code comments, and you can ignore the uncommented lines. The problematic code is:

```python
import gym
from gym.spaces import Box
import numpy as np
from tensorflow import keras
import tensorflow as tf
import time


class MyWrapper(gym.ObservationWrapper):

    def __init__(self, env, encoder, latent_dim=2):
        super().__init__(env)
        self._observation_space = Box(-np.inf, np.inf, shape=(7 + latent_dim,), dtype=np.float32)
        self.observation_space = self._observation_space
        self.encoder = encoder  # This is the model I trained in advance
        tf.InteractiveSession()  # installs itself as the default session
        self.sess = tf.get_default_session()
        self.sess.run(tf.global_variables_initializer())

    def observation(self, obs):
        obs = np.reshape(obs, (1, -1))
        # This is the problem: in graph mode, every call to self.encoder(obs) adds
        # new nodes to the default graph. The graph therefore keeps growing, and
        # each later sess.run gets slower.
        latent_z_tensor = self.encoder(obs)[2]
        t = time.time()  # Measure run time
        latent_z_arr = self.sess.run(latent_z_tensor)  # runs the nodes just added above
        print(time.time() - t)  # Measure run time
        obs = np.reshape(obs, (-1,))
        latent_z_arr = np.reshape(latent_z_arr, (-1,))
        obs = obs.tolist()
        obs.extend(latent_z_arr.tolist())
        obs = np.array(obs)
        return obs
```
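To see why the graph grows, here is a toy model in plain Python. It is not TensorFlow, only an analogy. `ToyGraph`, `ENCODER_OPS`, `build_in_loop` and `build_once` are hypothetical names made up for illustration, and the op counts are arbitrary. It also assumes that calling a graph-mode Keras model on a NumPy array turns that array into a new constant op. The sketch counts how many ops the graph holds after each step in two cases: building nodes inside the loop, as in the code above, and building once and then only feeding a placeholder.

```python
class ToyGraph:
    """Hypothetical stand-in for a TF1 graph: it only counts the ops added to it."""

    def __init__(self):
        self.num_ops = 0

    def add_ops(self, n):
        self.num_ops += n


ENCODER_OPS = 5  # hypothetical number of ops one encoder call adds


def build_in_loop(steps):
    """Problem pattern: call the encoder on each new obs inside the loop."""
    g = ToyGraph()
    sizes = []
    for _ in range(steps):
        g.add_ops(1)             # obs (a NumPy array) becomes a new constant op
        g.add_ops(ENCODER_OPS)   # encoder(obs) adds a fresh copy of its ops
        sizes.append(g.num_ops)  # graph size the session must handle this step
    return sizes


def build_once(steps):
    """Fixed pattern: placeholder and encoder ops are built once, then only fed."""
    g = ToyGraph()
    g.add_ops(1)            # the placeholder
    g.add_ops(ENCODER_OPS)  # encoder(placeholder), built a single time
    sizes = []
    for _ in range(steps):
        # feeding obs through feed_dict adds no ops, so the size stays constant
        sizes.append(g.num_ops)
    return sizes


print(build_in_loop(3))  # [6, 12, 18]
print(build_once(3))     # [6, 6, 6]
```

In real TensorFlow 1.x you can watch the same effect by printing `len(tf.get_default_graph().get_operations())` inside `observation()`. With the problem code, that count should keep rising. Calling `tf.get_default_graph().finalize()` once setup is done makes any accidental op creation in the loop raise an error instead of silently slowing things down.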

Solution

Build the graph only once, at initialization, and use a tf.placeholder to stand in for obs. Inside the loop you then only feed data into it. Here is a concrete example (you can focus on the commented lines):

```python
import gym
from gym.spaces import Box
import numpy as np
from tensorflow import keras
import tensorflow as tf
import time


class MyWrapper(gym.ObservationWrapper):

    def __init__(self, env, encoder, latent_dim=2):
        super().__init__(env)
        self._observation_space = Box(-np.inf, np.inf, shape=(7 + latent_dim,), dtype=np.float32)
        self.observation_space = self._observation_space
        self.encoder = encoder
        tf.InteractiveSession()
        self.sess = tf.get_default_session()
        # The key is these two lines. Build the graph once during initialization,
        # with a placeholder standing in for obs; at run time you only feed obs in.
        self.obs = tf.placeholder(dtype=tf.float32, shape=(1, 7))
        self.latent_z_tensor = self.encoder(self.obs)[2]  # graph built once, here
        # Caution: this resets every global variable, including the encoder's, to its
        # initial value; trained weights loaded in another session are not used here.
        self.sess.run(tf.global_variables_initializer())

    def observation(self, obs):
        obs = np.reshape(obs, (1, -1))
        t = time.time()  # Measure run time
        # Only data is fed here; no new nodes are added, so the graph does not grow.
        latent_z_arr = self.sess.run(self.latent_z_tensor, feed_dict={self.obs: obs})
        print(time.time() - t)  # Measure run time
        obs = np.reshape(obs, (-1,))
        latent_z_arr = np.reshape(latent_z_arr, (-1,))
        obs = obs.tolist()
        obs.extend(latent_z_arr.tolist())
        obs = np.array(obs)
        return obs
```
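The list round trip at the end of `observation()` (`tolist`, `extend`, `np.array`) can be written more compactly with `np.concatenate`. Here is a minimal sketch. `append_latent` is a hypothetical helper name. Casting to float32 is my own choice, made to match the `Box(..., dtype=np.float32)` observation space; the original list-based version produces a float64 array.

```python
import numpy as np


def append_latent(obs, latent_z):
    """Flatten obs and latent_z and join them into one 1-D observation vector.

    Same values as the tolist()/extend()/np.array() steps, but the result is
    cast to float32 to match the declared observation space.
    """
    return np.concatenate([np.ravel(obs), np.ravel(latent_z)]).astype(np.float32)


obs = np.arange(7, dtype=np.float32).reshape(1, 7)  # a (1, 7) observation
latent_z = np.array([[0.5, -0.5]])                   # a (1, 2) latent code
print(append_latent(obs, latent_z).shape)            # (9,)
```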

Now the data-type conversion works, and the slowdown is gone too!

The problem is solved. After four or five days of searching, I finally figured it out. The joy of solving it has chased away the gloom of the heartbreak I went through a couple of days ago, and I'm so happy.

边栏推荐

- Gtest/gmock introduction and Practice

- Hands on learning and deep learning -- Realization of linear regression from scratch

- Installation series of ROS system (II): ROS rosdep init/update error reporting solution

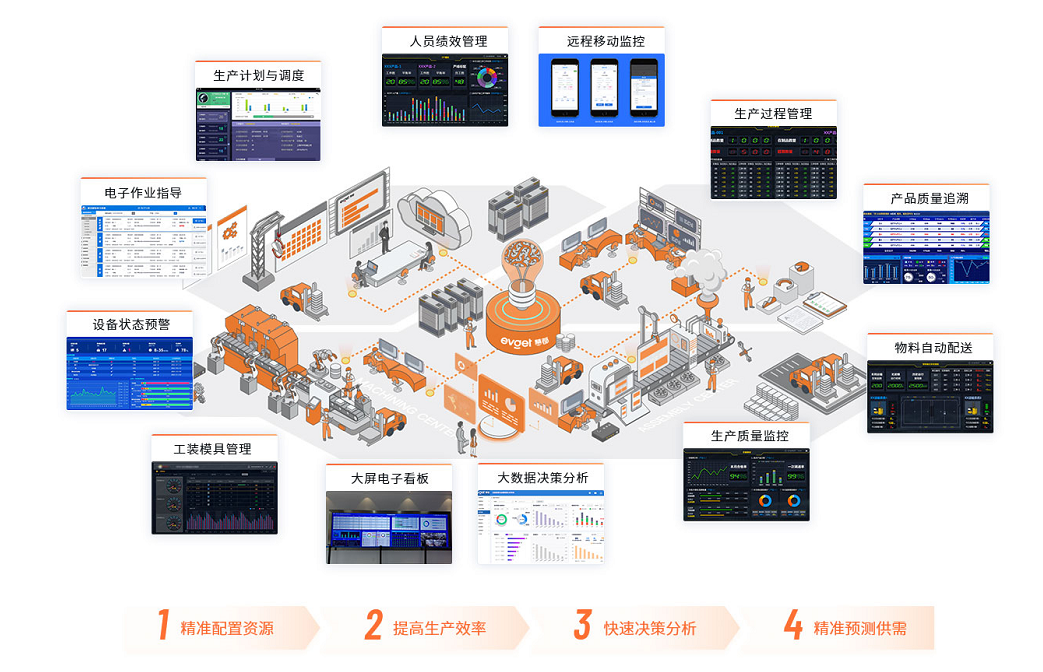

- APS软件有哪些排程规则?有何异常处理方案?

- R loop assignment variable name

- 正则校验用户名

- (p19-p20) delegate constructor (proxy constructor) and inheritance constructor (using)

- x64dbg 调试 EXCEPTION_ACCESS_VIOLATION C0000005

- 超全MES系统知识普及,必读此文

- 网站Colab与Kaggle

猜你喜欢

智能制造的时代,企业如何进行数字化转型

JVM learning notes: three local method interfaces and execution engines

后MES系统的时代,已逐渐到来

Where does the driving force of MES system come from? What problems should be paid attention to in model selection?

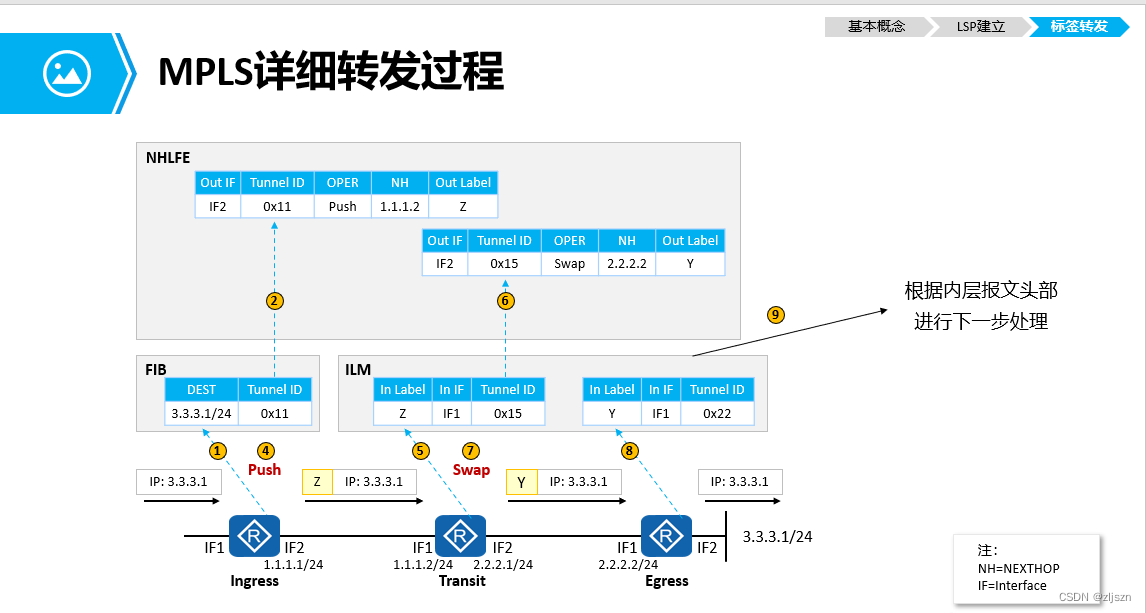

MPLS的原理与配置

FDA审查人员称Moderna COVID疫苗对5岁以下儿童安全有效

(p40-p41) transfer and forward perfect forwarding of move resources

Centso8 installing mysql8.0 (Part 2)

Error: what if the folder cannot be deleted when it is opened in another program

企业上MES系统的驱动力来自哪里?选型又该注意哪些问题?

随机推荐

Calling stored procedures in mysql, definition of variables,

企业为什么要实施MES?具体操作流程有哪些?

(p17-p18) define the basic type and function pointer alias by using, and define the alias for the template by using and typedef

js中的正则表达式

ctfshow web 1-2

调整svg宽高

进制GB和GiB的区别

(P14) use of the override keyword

Hands on learning and deep learning -- simple implementation of linear regression

call方法和apply方法

如何理解APS系统的生产排程?

Install iptables services and open ports

Vision transformer | arXiv 2205 - TRT vit vision transformer for tensorrt

【新规划】

ctfshow web3

MSTP的配置与原理

X64dbg debugging exception_ ACCESS_ VIOLATION C0000005

(p40-p41) transfer and forward perfect forwarding of move resources

Engineers learn music theory (I) try to understand music

超全MES系统知识普及,必读此文