
Vision Transformer | arXiv 2205 - TRT-ViT: Vision Transformer for TensorRT

2022-06-12 08:10:00 【Promising youth】

arXiv 2205 - TRT-ViT: A TensorRT-Oriented Vision Transformer

- Paper: https://arxiv.org/abs/2205.09579

- Original post: https://www.yuque.com/lart/papers/pghqxg

Main contents

This paper approaches Vision Transformers from the perspective of practical deployment.

Existing Vision Transformers achieve high accuracy, but they are not as efficient as ResNet, and they are drifting further from the requirements of real deployment scenarios. The authors argue that this may be because the current metrics of computational efficiency, such as FLOPs or parameter count, are one-sided and suboptimal, and insensitive to the specific hardware. In practice, a model has to cope with environmental uncertainty at deployment time, which involves hardware characteristics such as memory access cost and I/O throughput.

Because TensorRT has become a common, deployment-friendly solution in practice and provides convenient hardware-oriented guidance, this paper optimizes a more targeted efficiency metric during model design: the TensorRT latency on a specific hardware device, which gives comprehensive feedback covering compute capability, memory consumption and bandwidth. This leads to the central question of the paper: how can we design a model that performs as well as a Transformer while predicting as fast as ResNet?

To address this question, the paper systematically explores hybrid CNN and Transformer designs. Through a series of experiments, it distills four TensorRT-oriented, deployment-friendly design guidelines, two at the stage level and two at the block level:

- stage-level: Transformer blocks are best placed in the later stages of the model, which gives the best trade-off between efficiency and performance.

- stage-level: A "shallow early, deep late" stage design pattern improves performance.

- block-level: A hybrid block mixing Transformer and BottleNeck is more effective than a pure Transformer block.

- block-level: A "global then local" block design pattern helps compensate for the performance loss.

Based on these principles, the authors design a family of TensorRT-oriented Transformers named TRT-ViT, which are hybrids of CNN and Transformer. On visual classification, TRT-ViT outperforms existing convolutional networks and Vision Transformers in the trade-off between latency and accuracy, and on downstream tasks it shows an even more significant improvement in latency and accuracy.

Practical guidelines for efficient network design on TensorRT

The runtime analysis here is based mainly on ResNet and ViT, the typical representatives of convolutional networks and Vision Transformers respectively, because:

- they perform well and are widely used;

- their core components, the BottleNeck and Transformer blocks, are also key building blocks of other, more advanced networks.

For the performance analysis, several model-efficiency metrics are introduced:

- Params: the number of model parameters.

- FLOPs: the number of floating-point operations of the model.

- Latency: the inference latency.

- TeraParams: the ratio of parameter count to latency, in units of T (10^12). It reflects the parameter density of an operation or block.

- TeraFLOPS: the ratio of FLOPs to latency, in units of T, i.e. trillions of floating-point operations per second. It represents the computational density of an operation or block and thus its computing efficiency on the hardware. This follows the setup of RepLKNet.
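As a minimal sketch of the arithmetic behind the two density metrics, the function below divides a block's FLOPs and parameter count by its measured latency. The names `density_metrics` and `DensityMetrics` and the example numbers are hypothetical; in practice the latency would come from an actual TensorRT measurement on the target device.

```python
from dataclasses import dataclass

TERA = 1e12  # the "T" unit used by both metrics


@dataclass
class DensityMetrics:
    tera_flops: float   # computational density: FLOPs per second of latency, in units of 1e12
    tera_params: float  # parameter density: parameters per second of latency, in units of 1e12


def density_metrics(flops: float, params: float, latency_s: float) -> DensityMetrics:
    """Divide FLOPs and parameter count by the measured latency (in seconds)."""
    if latency_s <= 0:
        raise ValueError("latency must be positive")
    return DensityMetrics(
        tera_flops=flops / latency_s / TERA,
        tera_params=params / latency_s / TERA,
    )


# Hypothetical block (not a number from the paper): 1 GFLOPs, 5 M parameters, 0.5 ms latency.
m = density_metrics(flops=1e9, params=5e6, latency_s=0.5e-3)
print(f"TeraFLOPS={m.tera_flops:.2f}, TeraParams={m.tera_params:.4f}")
```

For this made-up block the sketch reports a computational density of 2 TeraFLOPS and a parameter density of 0.01 TeraParams.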

Transformer blocks are better placed in the later stages of the model

By analyzing the computational density of the existing Transformer and BottleNeck blocks at the four typical feature-map sizes of a classification network (Table 1), together with the classification accuracy and latency of the corresponding representative models (Figure 1), we can see that:

- Transformers do bring good performance.

- When the input is large, the computational density of the Transformer block is very low; as the input shrinks, its computational density approaches that of the BottleNeck.
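One plausible contributing factor, which is our assumption rather than an analysis given in the paper, is that self-attention costs grow quadratically with the number of tokens N = H×W, and the N×N softmax is a memory-heavy operation. The sketch counts multiply-accumulates (MACs) of a standard Transformer block (Q/K/V and output projections, an MLP with expansion ratio 4, and the two N×N attention products; norms and softmax are ignored) at the four stage resolutions of a 224×224 input. The channel widths are hypothetical.

```python
def transformer_block_macs(n_tokens: int, dim: int, mlp_ratio: int = 4) -> tuple[int, int]:
    """Return (linear_macs, attention_macs) for one standard Transformer block.

    linear:    Q/K/V projections (3*N*C^2) + output projection (N*C^2) + MLP (2*r*N*C^2)
    attention: Q @ K^T (N^2*C) + softmax(Q @ K^T) @ V (N^2*C)
    """
    linear = (4 + 2 * mlp_ratio) * n_tokens * dim * dim
    attention = 2 * n_tokens * n_tokens * dim
    return linear, attention


# Stage resolutions of a 224x224 input (strides 4, 8, 16, 32); the widths are hypothetical.
stages = [(56, 64), (28, 128), (14, 256), (7, 512)]
attn_share = []
for side, dim in stages:
    n = side * side
    linear, attention = transformer_block_macs(n, dim)
    share = attention / (linear + attention)
    attn_share.append(share)
    print(f"{side:>2}x{side:<2} N={n:>4} C={dim:>3} attention share of MACs: {share:.1%}")
```

With these widths the quadratic attention terms account for roughly 89% of the block's MACs at 56×56 but under 2% at 7×7. MACs are not latency, so this only illustrates the trend that the measured densities in Table 1 capture directly.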

Therefore, Transformer blocks suit the later stages of the model, where they balance performance and efficiency.

Based on this, the authors design the first version of the model, which replaces the deep BottleNecks of a convolutional network with Transformer blocks, and name it MixNetV. It is faster than a pure Transformer and performs better than ResNet (Table 3).

Shallower early stages, deeper late stages

A widely accepted view is that more parameters mean higher model capacity (over-parameterization). To increase the parameter count as much as possible without compromising efficiency, parameter density is defined here as the ratio of parameter count to latency and used as the basis of the analysis.

Comparing the parameter density of the Transformer and BottleNeck blocks at different input feature sizes shows that:

- As the feature size shrinks in deeper stages, the parameter density of both blocks increases.

- In the deep stages, the BottleNeck has a higher parameter density.

This means the deep stages should be made deeper and the shallow stages shallower. Moreover, stacking BottleNecks in the deep stages expands model capacity more effectively without hurting efficiency.
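The trend can be illustrated with a rough proxy that assumes latency is proportional to MACs. This is an assumption made only for this sketch; the paper's point is precisely that real TensorRT latency should be measured instead. A block's parameter count does not depend on the feature-map size, while its MACs grow with N = H×W, so parameters per MAC rise as the feature map shrinks. The blocks are textbook versions at a fixed, hypothetical width C = 256: a ResNet BottleNeck with a 4× channel reduction and a Transformer block with MLP ratio 4; norms, biases and softmax are ignored.

```python
def bottleneck_cost(n_tokens: int, c: int) -> tuple[int, int]:
    """(params, MACs) of a ResNet BottleNeck: 1x1 (C->C/4), 3x3 (C/4->C/4), 1x1 (C/4->C)."""
    m = c // 4
    params = c * m + 9 * m * m + m * c
    return params, params * n_tokens  # stride-1 convs apply every weight once per position


def transformer_cost(n_tokens: int, c: int, mlp_ratio: int = 4) -> tuple[int, int]:
    """(params, MACs) of a Transformer block: Q/K/V + output projections and a 2-layer MLP."""
    params = (4 + 2 * mlp_ratio) * c * c
    macs = params * n_tokens + 2 * n_tokens * n_tokens * c  # plus Q @ K^T and attn @ V
    return params, macs


C = 256  # hypothetical fixed width, so only the spatial size varies
# params/MAC serves as a proxy for parameter density under the latency-proportional-to-MACs assumption
for side in (56, 28, 14, 7):
    n = side * side
    bp, bm = bottleneck_cost(n, C)
    tp, tm = transformer_cost(n, C)
    print(f"{side:>2}x{side:<2}  params/MAC  BottleNeck={bp / bm:.2e}  Transformer={tp / tm:.2e}")
```

In this proxy both ratios grow as the spatial size shrinks, and the BottleNeck stays ahead of the Transformer. The proxy only captures the direction of the trend; the conclusions in the paper rest on measured TensorRT densities.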

Comparing ResNet and PVT variants modified according to this observation (Table 2) shows that, although FLOPs and Params increase, accuracy improves, and latency increases as well.
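A back-of-the-envelope sketch of why moving blocks into later stages adds capacity: with ResNet-50's stage widths (256, 512, 1024 and 2048 output channels at 56, 28, 14 and 7 resolution), the simplified per-block MACs of a BottleNeck are identical in every stage, because the width doubles while the spatial area quarters, whereas per-block parameters grow 4× per stage. The "refined" depth list is hypothetical and is not the configuration used in Table 2; the accounting also ignores the stem, the downsampling shortcuts and the first block of each stage.

```python
RESNET50_STAGES = [(56, 256), (28, 512), (14, 1024), (7, 2048)]  # (side, output channels)


def bottleneck_params(c: int) -> int:
    """Weights of a BottleNeck with output width c and inner width c // 4 (no BN, no bias)."""
    m = c // 4
    return c * m + 9 * m * m + m * c


def stack_cost(depths: list[int]) -> tuple[int, int]:
    """Total (params, MACs) of the BottleNeck stacks, ignoring stem and downsampling."""
    params = macs = 0
    for depth, (side, c) in zip(depths, RESNET50_STAGES):
        p = bottleneck_params(c)
        params += depth * p
        macs += depth * p * side * side  # each weight is used once per output position
    return params, macs


baseline = stack_cost([3, 4, 6, 3])  # ResNet-50 stage depths
refined = stack_cost([2, 3, 6, 5])   # hypothetical: one block moved from each early stage to the last
for name, (p, m) in (("baseline", baseline), ("refined", refined)):
    print(f"{name:<8} params={p / 1e6:.1f}M  MACs={m / 1e9:.2f}G")
```

Under these simplifications both configurations cost about 3.49 G MACs, while the hypothetical refined one carries about 29.9 M instead of 21.4 M parameters. Whether latency stays acceptable still has to be measured on the target device.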

Based on this, the same change is applied to MixNetV: the number of blocks in the shallow stages is reduced and the deepest stage is made deeper. The resulting Refined-MixNetV further improves performance while keeping latency unchanged. However, its latency is still higher than that of ResNet-50.

Mixing Transformer and BottleNeck is more effective

To further reduce latency while improving performance, the authors redesign the Transformer block.

They design three hybrid structures of Transformer and BottleNeck: MixBlockA, a parallel structure, and MixBlockB and MixBlockC, both serial structures. This section discusses only types A and B.

These structures achieve higher computational density and parameter density than the Transformer block, making them more efficient and more promising. The authors therefore use them to replace the Transformer blocks in MixNetV.

The comparison in Table 3 shows that although the resulting MixNetA and MixNetB improve latency, their performance still does not surpass MixNetV, and a gap remains to the existing pure Transformer PVT-Medium.

"Global then local" improves performance

Given the global interaction of Transformers and the local feature extraction of convolutions, "first obtain global information, then refine it locally" is more reasonable than "first extract local information, then refine it globally".

Following this principle, the positions of the Transformer and the convolution in MixBlockB are swapped to obtain MixBlockC, with the computational density unchanged. The results in Table 3 show that this change is more effective: the model matches the accuracy of PVT-Medium with lower inference latency.

Thus, MixBlockC is adopted as the basic building block for model design in this paper.
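To make the three arrangements concrete, here is a toy sketch on a 1-D sequence of scalar tokens. `global_op` is a single-head self-attention with identity projections (every token attends to all tokens) and stands in for the Transformer; `local_op` is a 3-tap convolution with zero padding followed by ReLU and stands in for the BottleNeck. Summing the two branches in MixBlockA is an assumption, and none of this reproduces the paper's actual blocks (residual connections, channel ratio R, normalization). It only shows parallel versus serial composition and local-then-global versus global-then-local order.

```python
import math

Seq = list[float]

# Toy stand-ins only: these are NOT the paper's MixBlock implementations.


def global_op(x: Seq) -> Seq:
    """Toy self-attention: each token becomes a softmax(x_i * x_j)-weighted average of all tokens."""
    out = []
    for q in x:
        scores = [q * k for k in x]
        peak = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        out.append(sum(w * v for w, v in zip(weights, x)) / total)
    return out


def local_op(x: Seq, kernel: tuple[float, float, float] = (0.25, 0.5, 0.25)) -> Seq:
    """Toy convolution: 3-tap filter with zero padding, then ReLU."""
    padded = [0.0] + list(x) + [0.0]
    return [max(0.0, sum(k * padded[i + j] for j, k in enumerate(kernel))) for i in range(len(x))]


def mix_block_a(x: Seq) -> Seq:
    """Parallel: both branches see the input; outputs are summed (assumed merge)."""
    return [g + l for g, l in zip(global_op(x), local_op(x))]


def mix_block_b(x: Seq) -> Seq:
    """Serial, local then global: convolution first, attention second."""
    return global_op(local_op(x))


def mix_block_c(x: Seq) -> Seq:
    """Serial, global then local: attention first, convolution refines afterwards."""
    return local_op(global_op(x))


tokens = [1.0, -2.0, 0.5, 3.0, -1.0]
for name, block in (("A", mix_block_a), ("B", mix_block_b), ("C", mix_block_c)):
    print(name, [round(v, 3) for v in block(tokens)])
```

Swapping the two calls is all that separates B from C in this sketch, which mirrors the text: the change reorders the same operations rather than adding computation, so the cost stays the same while the information flow differs.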

Experimental comparison

Following the basic configuration of ResNet, the authors construct four variants of TRT-ViT that keep the pyramid structure. The models also follow the four guidelines above, and MixBlockC is used only in the last stage. In the Transformer part, the MLP expansion ratio is set to 3 and the head dimension to 32, with LN and GELU; the BottleNeck uses BN and ReLU.
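The hyper-parameters listed above can be captured in a small configuration sketch. The class and field names, the stage widths and the stage depths are hypothetical, since the post does not give the channel numbers or depths of the four variants; only the MLP ratio of 3, the head dimension of 32, the LN/GELU versus BN/ReLU split and the "C-C-C-M" stage pattern come from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageConfig:
    width: int  # channels in this stage (hypothetical values below)
    depth: int  # number of blocks (hypothetical values below)
    block: str  # "C" = BottleNeck (BN + ReLU), "M" = MixBlockC (Transformer part uses LN + GELU)


@dataclass(frozen=True)
class TRTViTConfig:
    stages: tuple[StageConfig, ...]
    mlp_ratio: int = 3  # MLP expansion ratio in the Transformer part
    head_dim: int = 32  # channels per attention head

    def pattern(self) -> str:
        """Stage pattern string such as "C-C-C-M"."""
        return "-".join(s.block for s in self.stages)

    def attention_shape(self, width: int) -> tuple[int, int]:
        """Return (num_heads, mlp_hidden) for a Transformer part of the given width."""
        if width % self.head_dim:
            raise ValueError("width must be a multiple of head_dim")
        return width // self.head_dim, width * self.mlp_ratio


cfg = TRTViTConfig(stages=(
    StageConfig(64, 2, "C"),
    StageConfig(128, 3, "C"),
    StageConfig(256, 6, "C"),
    StageConfig(512, 4, "M"),  # MixBlockC only in the last stage
))
print(cfg.pattern())             # C-C-C-M
print(cfg.attention_shape(512))  # (16, 1536)
```

Deriving the head count from a fixed head dimension, rather than fixing the number of heads, keeps each head at 32 channels whatever width a variant uses.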

The comparisons with many SOTA models on the following three benchmarks show that TRT-ViT has a clear advantage in the trade-off between inference latency and performance.

ImageNet-1K

ADE20K

COCO

Module ablation

Targeted ablation experiments are conducted on progressively replacing the blocks of different stages with MixBlock, and on the channel shrink ratio R in MixBlock. The comparison with SOTA models above uses the "C-C-C-M" and "R=0.5" configuration.

边栏推荐

- Talk about the four basic concepts of database system

- Servlet advanced

- Literature reading: raise a child in large language model: rewards effective and generalizable fine tuning

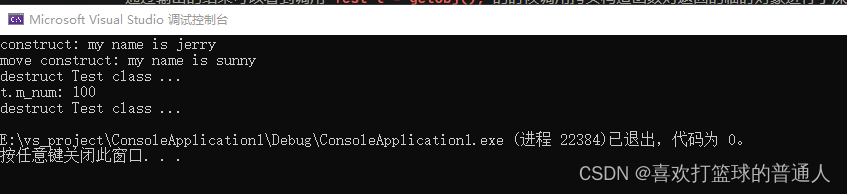

- StrVec类 移动拷贝

- Compiling principle on computer -- function drawing language (II): lexical analyzer

- MES系统是什么?MES系统的操作流程是怎样?

- APS究竟是什么系统呢?看完文章你就知道了

- Leetcode notes: Weekly contest 277

- vscode 下载慢解决办法

- Introduction to coco dataset

猜你喜欢

MinGW offline installation package (free, fool)

ASP.NET项目开发实战入门_项目六_错误报告(自己写项目时的疑难问题总结)

DUF:Deep Video Super-Resolution Network Using Dynamic Upsampling Filters ... Reading notes

(p36-p39) right value and right value reference, role and use of right value reference, derivation of undetermined reference type, and transfer of right value reference

Architecture and performance analysis of convolutional neural network

超全MES系统知识普及,必读此文

Talk about the four basic concepts of database system

Vision transformer | arXiv 2205 - TRT vit vision transformer for tensorrt

Mathematical Essays: Notes on the angle between vectors in high dimensional space

MES系统质量追溯功能,到底在追什么?

随机推荐

Vscode 调试TS

千万别把MES只当做工具,不然会错过最重要的东西

Leetcode notes: biweekly contest 69

Uni app screenshot with canvas and share friends

Alibaba cloud deploys VMware and reports an error

Compiling principle on computer -- functional drawing language (V): compiler and interpreter

(P33-P35)lambda表达式语法,lambda表达式注意事项,lambda表达式本质

Instructions spéciales pour l'utilisation du mode nat dans les machines virtuelles VM

DUF:Deep Video Super-Resolution Network Using Dynamic Upsampling Filters ... Reading notes

(P15-P16)对模板右尖括号的优化、函数模板的默认模板参数

Vision Transformer | Arxiv 2205 - LiTv2: Fast Vision Transformers with HiLo Attention

Summary of 3D point cloud construction plane method

(P13)final关键字的使用

Three data exchange modes: line exchange, message exchange and message packet exchange

模型压缩 | TIP 2022 - 蒸馏位置自适应:Spot-adaptive Knowledge Distillation

Vision Transformer | CVPR 2022 - Vision Transformer with Deformable Attention

Symfony 2: multiple and dynamic database connections

TMUX common commands

Servlet

Final review of Discrete Mathematics (predicate logic, set, relation, function, graph, Euler graph and Hamiltonian graph)