Vision Transformer | CVPR 2022 - Vision Transformer with Deformable Attention

2022-06-12 08:04:00 【Promising youth】

CVPR 2022 - Vision Transformer with Deformable Attention

- Paper: https://arxiv.org/abs/2201.00520

- Code: https://github.com/LeapLabTHU/DAT

- Core idea: use flow-field-style offsets to shift the sampling positions of the keys and values toward relevant regions, so that attention gathers more targeted context information.

- The input image of shape H×W×3 is first embedded by a non-overlapping 4×4 convolution followed by a normalization layer, which yields patch embeddings of shape H/4 × W/4 × C.

- To build a hierarchical feature pyramid, the backbone contains 4 stages with progressively increasing stride.

- Between two consecutive stages, a non-overlapping 2×2 convolution with stride 2 downsamples the feature map, halving the spatial size and doubling the feature dimension.

- The first two stages mainly learn local features, and their keys and values are spatially large, so globally computed Deformable Attention is not well suited to them. To trade off model capacity against computational cost, these stages use Local Attention + Shift-Window Attention, which gives a better representation at an early stage.

- The third and fourth stages use Local Attention + Deformable Attention blocks. The feature map is first processed by window-based Local Attention to aggregate local information, and the Deformable Attention block then models the global relations among the locally enhanced tokens.
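The stage layout described above can be sketched as simple shape bookkeeping. This is a minimal illustration rather than the authors' code: `stage_shapes` is a hypothetical helper, and the 224×224 input and base width of 96 channels are assumed values chosen for the example.

```python
def stage_shapes(height, width, base_channels, num_stages=4):
    """Return the (H, W, C) feature-map shape at each backbone stage.

    Assumes a 4x4 non-overlapping patch embedding, followed by a 2x2,
    stride-2 downsampling convolution between consecutive stages.
    """
    total_stride = 4 * 2 ** (num_stages - 1)
    if height % total_stride or width % total_stride:
        raise ValueError("input size must be divisible by the total stride")

    shapes = []
    h, w, c = height // 4, width // 4, base_channels  # patch embedding
    for _ in range(num_stages):
        shapes.append((h, w, c))
        h, w, c = h // 2, w // 2, c * 2  # halve space, double channels
    return shapes


# Assumed example values: 224x224 input, 96 base channels.
for index, shape in enumerate(stage_shapes(224, 224, 96), start=1):
    print(f"stage {index}: {shape}")
```

With these assumed values the spatial sizes are 56, 28, 14 and 7, which are among the query sizes that `DAttentionBaseline.__init__` checks when it picks the kernel size of the offset network.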

Main improvements

- Deformable Attention is proposed to introduce data-dependent sparse attention into the attention operation.

- A set of offsets is generated from q. The reference points are shifted by these offsets, the feature map x is sampled at the shifted positions with bilinear interpolation, and k and v are projected from the sampled x; MHSA is then computed with q. To stabilize training, the offsets are scaled by a predefined factor s so that they cannot become too large: $\Delta p \leftarrow s \tanh(\Delta p)$.

- A relative position bias is also derived from the generated offsets and added to $QK^\top/\sqrt{d}$.

- To obtain diverse offsets, the feature channels are split into G groups, and each group shares one set of offsets. In practice, the number of attention heads M is set to a multiple of the number of offset groups G.

- In the actual computation, the input feature map can be downsampled by a factor $r$ to form the grid on which the offsets are applied and sampling is performed. All model configurations in the paper set $r$ to 1.
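The offset bounding and bilinear sampling steps listed above can be illustrated with a small pure-Python sketch. It works on a single 2D feature map in pixel coordinates; `bounded_offset` and `bilinear_sample` are hypothetical helper names, the scale `s = 0.5` is an assumed value, and clamping out-of-range coordinates is a simplification made for the example.

```python
import math


def bounded_offset(raw_offset, s):
    """Apply delta_p <- s * tanh(delta_p), so that |delta_p| < s."""
    return s * math.tanh(raw_offset)


def bilinear_sample(feature, y, x):
    """Bilinearly interpolate a 2D list `feature` at continuous (y, x).

    Coordinates are in pixel units and are clamped to the valid range
    (a simplification for this example).
    """
    rows, cols = len(feature), len(feature[0])
    y = min(max(y, 0.0), rows - 1.0)
    x = min(max(x, 0.0), cols - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, rows - 1), min(x0 + 1, cols - 1)
    wy, wx = y - y0, x - x0
    top = feature[y0][x0] * (1 - wx) + feature[y0][x1] * wx
    bottom = feature[y1][x0] * (1 - wx) + feature[y1][x1] * wx
    return top * (1 - wy) + bottom * wy


feature = [[0.0, 1.0, 2.0],
           [3.0, 4.0, 5.0],
           [6.0, 7.0, 8.0]]
reference = (1.0, 1.0)  # (y, x) reference point
raw = (0.3, -2.0)       # unbounded offsets, as if predicted from q
s = 0.5                 # assumed scale factor

dy, dx = (bounded_offset(r, s) for r in raw)
sampled = bilinear_sample(feature, reference[0] + dy, reference[1] + dx)
print(dy, dx, sampled)
```

Because the example feature map is linear in y and x, the interpolated value equals `4 + 3 * dy + dx`, which makes the effect of the shifted sampling position easy to check by hand.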

Attention calculation:
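Following the description above, the computation for head $m$ can be written roughly as below. The notation is an assumption chosen to match the text: $p$ are the reference points, $\theta_{\text{offset}}$ is the offset network, $\phi$ denotes bilinear sampling, $W_q, W_k, W_v$ are the projections, and $\hat{B}$ is the relative position bias sampled at the shifted positions.

$$
\begin{aligned}
q &= x W_q, \qquad \Delta p = s \tanh\bigl(\theta_{\text{offset}}(q)\bigr), \\
\tilde{x} &= \phi(x;\, p + \Delta p), \qquad \tilde{k} = \tilde{x} W_k, \qquad \tilde{v} = \tilde{x} W_v, \\
z^{(m)} &= \operatorname{softmax}\!\left(\frac{q^{(m)} \tilde{k}^{(m)\top}}{\sqrt{d}} + \hat{B}\right) \tilde{v}^{(m)}
\end{aligned}
$$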

Core code

    import einops
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torch.nn.init import trunc_normal_  # timm ships an equivalent helper


    class LayerNormProxy(nn.Module):
        # Not part of the original excerpt: a minimal stand-in, assumed to apply
        # LayerNorm over the channel dimension of a (B, C, H, W) tensor.
        def __init__(self, dim):
            super().__init__()
            self.norm = nn.LayerNorm(dim)

        def forward(self, x):
            x = einops.rearrange(x, "b c h w -> b h w c")
            x = self.norm(x)
            return einops.rearrange(x, "b h w c -> b c h w")


    class DAttentionBaseline(nn.Module):
        def __init__(
            self,
            q_size,
            kv_size,
            n_heads,
            n_head_channels,
            n_groups,
            attn_drop,
            proj_drop,
            stride,
            offset_range_factor,
            use_pe,
            dwc_pe,
            no_off,
            fixed_pe,
        ):
            super().__init__()
            self.dwc_pe = dwc_pe
            self.n_head_channels = n_head_channels
            self.scale = self.n_head_channels**-0.5
            self.n_heads = n_heads
            self.q_h, self.q_w = q_size
            self.kv_h, self.kv_w = kv_size
            self.nc = n_head_channels * n_heads
            self.n_groups = n_groups
            self.n_group_channels = self.nc // self.n_groups
            self.n_group_heads = self.n_heads // self.n_groups
            self.use_pe = use_pe
            self.fixed_pe = fixed_pe
            self.no_off = no_off
            self.offset_range_factor = offset_range_factor

            # Kernel size of the depthwise conv in the offset network,
            # chosen from the query feature-map size.
            if self.q_h == 14 or self.q_w == 14 or self.q_h == 24 or self.q_w == 24:
                kk = 5
            elif self.q_h == 7 or self.q_w == 7 or self.q_h == 12 or self.q_w == 12:
                kk = 3
            elif self.q_h == 28 or self.q_w == 28 or self.q_h == 48 or self.q_w == 48:
                kk = 7
            elif self.q_h == 56 or self.q_w == 56 or self.q_h == 96 or self.q_w == 96:
                kk = 9
            else:
                # The original listing leaves kk undefined for other sizes.
                raise ValueError(f"unsupported q_size: {q_size}")

            # Offset network: depthwise conv -> LayerNorm -> GELU -> 1x1 conv to 2 channels (dy, dx).
            self.conv_offset = nn.Sequential(
                nn.Conv2d(self.n_group_channels, self.n_group_channels, kk, stride, kk // 2, groups=self.n_group_channels),
                LayerNormProxy(self.n_group_channels),
                nn.GELU(),
                nn.Conv2d(self.n_group_channels, 2, 1, 1, 0, bias=False),
            )

            self.proj_q = nn.Conv2d(self.nc, self.nc, kernel_size=1, stride=1, padding=0)
            self.proj_k = nn.Conv2d(self.nc, self.nc, kernel_size=1, stride=1, padding=0)
            self.proj_v = nn.Conv2d(self.nc, self.nc, kernel_size=1, stride=1, padding=0)
            self.proj_out = nn.Conv2d(self.nc, self.nc, kernel_size=1, stride=1, padding=0)

            self.proj_drop = nn.Dropout(proj_drop, inplace=True)
            self.attn_drop = nn.Dropout(attn_drop, inplace=True)

            if self.use_pe:
                if self.dwc_pe:
                    self.rpe_table = nn.Conv2d(self.nc, self.nc, kernel_size=3, stride=1, padding=1, groups=self.nc)
                elif self.fixed_pe:
                    self.rpe_table = nn.Parameter(torch.zeros(self.n_heads, self.q_h * self.q_w, self.kv_h * self.kv_w))
                    trunc_normal_(self.rpe_table, std=0.01)
                else:
                    self.rpe_table = nn.Parameter(torch.zeros(self.n_heads, self.kv_h * 2 - 1, self.kv_w * 2 - 1))
                    trunc_normal_(self.rpe_table, std=0.01)
            else:
                self.rpe_table = None

        @torch.no_grad()
        def _get_ref_points(self, H_key, W_key, B, dtype, device):
            # Pixel centres (0.5, 1.5, ...) normalized to [-1, 1].
            ref_y, ref_x = torch.meshgrid(
                torch.linspace(0.5, H_key - 0.5, H_key, dtype=dtype, device=device),
                torch.linspace(0.5, W_key - 0.5, W_key, dtype=dtype, device=device),
            )
            ref = torch.stack((ref_y, ref_x), -1)
            ref[..., 1].div_(W_key).mul_(2).sub_(1)
            ref[..., 0].div_(H_key).mul_(2).sub_(1)
            ref = ref[None, ...].expand(B * self.n_groups, -1, -1, -1)  # B * g, H, W, 2
            return ref

        def forward(self, x):
            B, C, H, W = x.size()
            dtype, device = x.dtype, x.device

            q = self.proj_q(x)
            # Split channels into offset groups; each group predicts its own offsets.
            q_off = einops.rearrange(q, "b (g c) h w -> (b g) c h w", g=self.n_groups, c=self.n_group_channels)
            offset = self.conv_offset(q_off)  # B * g, 2, Hg, Wg
            Hk, Wk = offset.size(2), offset.size(3)
            n_sample = Hk * Wk

            if self.offset_range_factor > 0:
                # Bound the offsets with tanh, then scale them relative to the grid size.
                offset_range = torch.tensor([1.0 / Hk, 1.0 / Wk], device=device).reshape(1, 2, 1, 1)
                offset = offset.tanh().mul(offset_range).mul(self.offset_range_factor)

            offset = einops.rearrange(offset, "b p h w -> b h w p")
            reference = self._get_ref_points(Hk, Wk, B, dtype, device)

            if self.no_off:
                # Disable deformation: sample at the reference grid itself.
                offset = torch.zeros_like(offset)

            if self.offset_range_factor >= 0:
                pos = offset + reference
            else:
                pos = (offset + reference).tanh()

            # Sample the input features at the shifted positions.
            x_sampled = F.grid_sample(
                input=x.reshape(B * self.n_groups, self.n_group_channels, H, W),
                grid=pos[..., (1, 0)],  # y, x -> x, y
                mode="bilinear",
                align_corners=True,
            )  # B * g, Cg, Hg, Wg
            x_sampled = x_sampled.reshape(B, C, 1, n_sample)

            q = q.reshape(B * self.n_heads, self.n_head_channels, H * W)
            k = self.proj_k(x_sampled).reshape(B * self.n_heads, self.n_head_channels, n_sample)
            v = self.proj_v(x_sampled).reshape(B * self.n_heads, self.n_head_channels, n_sample)

            attn = torch.einsum("b c m, b c n -> b m n", q, k)  # B * h, HW, Ns
            attn = attn.mul(self.scale)

            if self.use_pe:
                if self.dwc_pe:
                    residual_lepe = self.rpe_table(q.reshape(B, C, H, W)).reshape(
                        B * self.n_heads, self.n_head_channels, H * W
                    )
                elif self.fixed_pe:
                    rpe_table = self.rpe_table
                    attn_bias = rpe_table[None, ...].expand(B, -1, -1, -1)
                    # n_sample is a local variable; the original listing used self.n_sample.
                    attn = attn + attn_bias.reshape(B * self.n_heads, H * W, n_sample)
                else:
                    rpe_table = self.rpe_table
                    rpe_bias = rpe_table[None, ...].expand(B, -1, -1, -1)
                    q_grid = self._get_ref_points(H, W, B, dtype, device)
                    # Relative displacement between every query point and every sampled point.
                    displacement = (
                        q_grid.reshape(B * self.n_groups, H * W, 2).unsqueeze(2)
                        - pos.reshape(B * self.n_groups, n_sample, 2).unsqueeze(1)
                    ).mul(0.5)
                    attn_bias = F.grid_sample(
                        input=rpe_bias.reshape(B * self.n_groups, self.n_group_heads, 2 * H - 1, 2 * W - 1),
                        grid=displacement[..., (1, 0)],
                        mode="bilinear",
                        align_corners=True,
                    )  # B * g, h_g, HW, Ns
                    attn_bias = attn_bias.reshape(B * self.n_heads, H * W, n_sample)
                    attn = attn + attn_bias

            attn = F.softmax(attn, dim=2)
            attn = self.attn_drop(attn)

            out = torch.einsum("b m n, b c n -> b c m", attn, v)
            if self.use_pe and self.dwc_pe:
                out = out + residual_lepe
            out = out.reshape(B, C, H, W)

            y = self.proj_drop(self.proj_out(out))
            return y, pos.reshape(B, self.n_groups, Hk, Wk, 2), reference.reshape(B, self.n_groups, Hk, Wk, 2)
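Two coordinate conventions in the code above are easy to miss: `_get_ref_points` places reference points at pixel centres normalized to [-1, 1], and the relative position bias is looked up with half the difference between query and sample coordinates. The pure-Python sketch below mirrors that arithmetic for one axis; `reference_coords` and `displacement` are hypothetical helper names used only for illustration.

```python
def reference_coords(size):
    """Normalized pixel centres in [-1, 1] along one axis.

    Mirrors linspace(0.5, size - 0.5, size) / size * 2 - 1.
    """
    return [((i + 0.5) / size) * 2.0 - 1.0 for i in range(size)]


def displacement(query_coord, sample_coord):
    """Half the difference of two normalized coordinates.

    When both inputs lie in [-1, 1], their difference lies in [-2, 2];
    multiplying by 0.5 maps it back into [-1, 1], the range used as a
    sampling grid for the (2H - 1) x (2W - 1) bias table.
    """
    return 0.5 * (query_coord - sample_coord)


coords = reference_coords(4)
print(coords)                               # [-0.75, -0.25, 0.25, 0.75]
print(displacement(coords[0], coords[-1]))  # -0.75
```

A displacement of 0 corresponds to the centre of the bias table, that is, a sampled point that coincides with the query position.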

Experimental results

Ablation experiments

[Figures: ablations on (1) how spatial information is exploited and how position information is introduced, (2) which stages use Deformable Attention, and (3) the range factor of the Deformable Attention offset, exposed in the code as `offset_range_factor`]

Comparison experiments

[Figures: comparisons on classification, object detection, and segmentation]

边栏推荐

- 离散 第一章

- Rnorm function of R language generates positive distribution data, calculates descriptive statistical summary information of vector data using sum function of epidisplay package, and visualizes ordere

- 10 lessons from the recommended system

- Ten important properties of determinant

- Windows10 configuration database

- NaiveBayes function of R language e1071 package constructs naive Bayes model, predict function uses naive Bayes model to predict and reason test data, and table function constructs confusion matrix

- 20220607. face recognition

- Parameter estimation of Weibull distribution

- 从AC5到AC6转型之路(1)——补救和准备

- Compiling principle on computer -- function drawing language (III): parser

猜你喜欢

Architecture and performance analysis of convolutional neural network

C # hide the keyboard input on the console (the input content is not displayed on the window)

The computer is connected to WiFi but can't connect to the Internet

Derivation of Poisson distribution

Topic 1 Single_ Cell_ analysis(1)

HDLC protocol

Cookies and sessions

Leverage contextual information

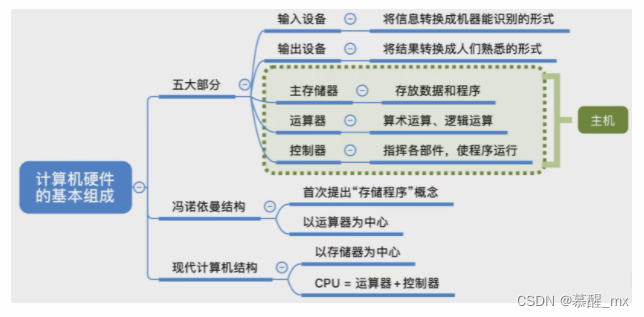

计组第一章

Classic paper review: palette based photo retrieval

随机推荐

The project file contains toolsversion= "14.0". This toolset may be unknown or missing workarounds

Upgrade eigen to version 3.3.5 under Ubuntu 16.04

20220524 深度学习技术点

The latest hbuilderx editing uni app project runs in the night God simulator

Compiling principle on computer -- function drawing language (II): lexical analyzer

Introduction to coco dataset

MinGW offline installation package (free, fool)

ECMAScript6面试题

"Three.js" auxiliary coordinate axis

C # push box

2021.11.3-7 scientific research log

10 lessons from the recommended system

Classic paper review: palette based photo retrieval

Three data exchange modes: line exchange, message exchange and message packet exchange

Topic 1 Single_Cell_analysis(1)

In depth learning, the parameter quantity (param) in the network is calculated. The appendix contains links to floating point computations (flops).

Multithread decompression of tar

Interview questions on mobile terminal, Android and IOS compatibility

2、 Eight, ten and hexadecimal conversion

2021.10.27-28 scientific research log