
Compiler Principles Lab: Function Drawing Language (III): Parser

2022-06-12 07:50:00 【FishPotatoChen】


Related articles

Compiler Principles Lab: Function Drawing Language (I)

Compiler Principles Lab: Function Drawing Language (II): Lexical Analyzer

Compiler Principles Lab: Function Drawing Language (IV): Semantic Analyzer

Compiler Principles Lab: Function Drawing Language (V): Compiler and Interpreter

Notes

I changed part of the lexical analyzer so that it serves the parser better. The updated version is therefore explained again here.

Lexical analyzer

Generating the symbol table

This is a table-driven lexical analyzer. The symbol table is saved in the file TOKEN.npy.

# -*- coding: utf-8 -*-
"""
Created on Mon Nov 23 20:05:45 2020
@author: FishPotatoChen
Copyright (c) 2020 FishPotatoChen All rights reserved.
"""
import math

import numpy as np

TOKEN = {
    # Constants
    'PI': {'TYPE': 'CONST_ID', 'VALUE': math.pi, 'FUNCTION': None},
    'E': {'TYPE': 'CONST_ID', 'VALUE': math.e, 'FUNCTION': None},
    # Variable
    'T': {'TYPE': 'SYMBOL', 'VALUE': None, 'FUNCTION': None},
    # Functions (FUNCTION holds the Python function name as a string)
    'SIN': {'TYPE': 'FUNC', 'VALUE': None, 'FUNCTION': 'math.sin'},
    'COS': {'TYPE': 'FUNC', 'VALUE': None, 'FUNCTION': 'math.cos'},
    'TAN': {'TYPE': 'FUNC', 'VALUE': None, 'FUNCTION': 'math.tan'},
    'LN': {'TYPE': 'FUNC', 'VALUE': None, 'FUNCTION': 'math.log'},
    'EXP': {'TYPE': 'FUNC', 'VALUE': None, 'FUNCTION': 'math.exp'},
    'SQRT': {'TYPE': 'FUNC', 'VALUE': None, 'FUNCTION': 'math.sqrt'},
    # Reserved words
    'ORIGIN': {'TYPE': 'KEYWORD', 'VALUE': None, 'FUNCTION': None},
    'SCALE': {'TYPE': 'KEYWORD', 'VALUE': None, 'FUNCTION': None},
    'ROT': {'TYPE': 'KEYWORD', 'VALUE': None, 'FUNCTION': None},
    'IS': {'TYPE': 'KEYWORD', 'VALUE': None, 'FUNCTION': None},
    'FOR': {'TYPE': 'KEYWORD', 'VALUE': None, 'FUNCTION': None},
    'FROM': {'TYPE': 'KEYWORD', 'VALUE': None, 'FUNCTION': None},
    'TO': {'TYPE': 'KEYWORD', 'VALUE': None, 'FUNCTION': None},
    'STEP': {'TYPE': 'KEYWORD', 'VALUE': None, 'FUNCTION': None},
    'DRAW': {'TYPE': 'KEYWORD', 'VALUE': None, 'FUNCTION': None},
    # Operators
    '+': {'TYPE': 'OP', 'VALUE': None, 'FUNCTION': None},
    '-': {'TYPE': 'OP', 'VALUE': None, 'FUNCTION': None},
    '*': {'TYPE': 'OP', 'VALUE': None, 'FUNCTION': None},
    '/': {'TYPE': 'OP', 'VALUE': None, 'FUNCTION': None},
    '**': {'TYPE': 'OP', 'VALUE': None, 'FUNCTION': None},
    # Delimiters
    '(': {'TYPE': 'MARK', 'VALUE': None, 'FUNCTION': None},
    ')': {'TYPE': 'MARK', 'VALUE': None, 'FUNCTION': None},
    ',': {'TYPE': 'MARK', 'VALUE': None, 'FUNCTION': None},
    # Terminator
    ';': {'TYPE': 'END', 'VALUE': None, 'FUNCTION': None},
    # Empty string
    '': {'TYPE': 'EMPTY', 'VALUE': None, 'FUNCTION': None},
    # Digits and the decimal point
    '0': {'TYPE': 'NUMBER', 'VALUE': 0.0, 'FUNCTION': None},
    '1': {'TYPE': 'NUMBER', 'VALUE': 1.0, 'FUNCTION': None},
    '2': {'TYPE': 'NUMBER', 'VALUE': 2.0, 'FUNCTION': None},
    '3': {'TYPE': 'NUMBER', 'VALUE': 3.0, 'FUNCTION': None},
    '4': {'TYPE': 'NUMBER', 'VALUE': 4.0, 'FUNCTION': None},
    '5': {'TYPE': 'NUMBER', 'VALUE': 5.0, 'FUNCTION': None},
    '6': {'TYPE': 'NUMBER', 'VALUE': 6.0, 'FUNCTION': None},
    '7': {'TYPE': 'NUMBER', 'VALUE': 7.0, 'FUNCTION': None},
    '8': {'TYPE': 'NUMBER', 'VALUE': 8.0, 'FUNCTION': None},
    '9': {'TYPE': 'NUMBER', 'VALUE': 9.0, 'FUNCTION': None},
    '.': {'TYPE': 'NUMBER', 'VALUE': None, 'FUNCTION': None},
}

# np.save stores the dict inside a 0-d object array; it is read back with
# np.load('TOKEN.npy', allow_pickle=True).item()
np.save('TOKEN.npy', TOKEN)
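The following is a minimal sketch of how a table like this drives recognition. It uses a trimmed in-memory copy of TOKEN instead of the .npy file. A whole word is looked up directly, and a KeyError means the word must go to the character-level DFA; this mirrors the No.0 step in the lexer below. `lookup` is a hypothetical helper name, not part of the author's code.

```python
import math

# A trimmed copy of the TOKEN table (a few entries only, for illustration)
TOKEN = {
    'PI': {'TYPE': 'CONST_ID', 'VALUE': math.pi, 'FUNCTION': None},
    'SIN': {'TYPE': 'FUNC', 'VALUE': None, 'FUNCTION': 'math.sin'},
    'ORIGIN': {'TYPE': 'KEYWORD', 'VALUE': None, 'FUNCTION': None},
    'IS': {'TYPE': 'KEYWORD', 'VALUE': None, 'FUNCTION': None},
    '**': {'TYPE': 'OP', 'VALUE': None, 'FUNCTION': None},
}


def lookup(word):
    """Return the table entry for a whole word, or None when the word is
    not in the table and has to be split up by the character-level DFA."""
    try:
        return TOKEN[word]
    except KeyError:
        return None


for word in ['ORIGIN', 'IS', 'PI', '(10,10)']:
    entry = lookup(word)
    print(word, '->', entry['TYPE'] if entry is not None else 'needs DFA')
```

Looking whole words up first keeps the common case (keywords, constants, lone operators separated by spaces) to a single dictionary access.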

Lexical analyzer main code

File name: lexer.py

# -*- coding: utf-8 -*-
"""
Created on Mon Nov 23 20:05:45 2020
@author: FishPotatoChen
Copyright (c) 2020 FishPotatoChen All rights reserved.
"""
# Lexical analyzer
import re

import numpy as np


class Lexer:

    def __init__(self):
        # Read the token table from the file
        self.TOKEN = np.load('TOKEN.npy', allow_pickle=True).item()

    def getToken(self, sentence):
        self.output_list = []
        if sentence:
            tokens = sentence.split()
            for token in tokens:
                try:
                    # No.0
                    # First, recognize tokens that appear in the table as a whole
                    # Regular expression: ORIGIN|SCALE|ROT|IS|FOR|FROM|TO|STEP|DRAW|ε
                    self.output_token(token)
                    # No.0 end
                except KeyError:
                    # Not in the table: hand it to the more detailed DFA
                    self.argument_lexer(token)
            self.output_token(';')

    # Recognize the more complex tokens that make up an expression
    def argument_lexer(self, argument):
        i = 0  # scanning position
        length = len(argument)  # string length
        while i < length:
            temp = ''  # temporary string, i.e. the buffer
            if argument[i] in ['P', 'S', 'C', 'L', 'E', 'T', '*']:
                if argument[i] == '*':
                    # No.1
                    # Telling "*" from "**" needs one character of lookahead
                    i += 1
                    if i >= length:
                        # A single "*" at the end of the argument
                        self.output_token('*')
                        break
                    elif argument[i] == '*':
                        self.output_token('**')
                    else:
                        i -= 1
                        self.output_token(argument[i])
                    # No.1 end
                else:
                    # No.2
                    # DFA for strings made only of letters
                    # Regular expression: PI|E|T|SIN|COS|TAN|LN|EXP|SQRT
                    temp = re.match(r"[A-Z]+", argument[i:]).group()
                    # output_token raises KeyError if the word is not accepted
                    self.output_token(temp)
                    i += len(temp) - 1
                    # No.2 end
            elif argument[i] in ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9', '.']:
                # No.3
                # Recognize numbers
                if argument[i] == '.':
                    # Numbers that start with "."
                    # Regular expression: \.[0-9]+   e.g. .52 => 0.52
                    i += 1
                    temp = re.match(r"\d+", argument[i:]).group()
                    i += len(temp) - 1
                    temp = '0.' + temp
                    self.output_token(temp, False)
                else:
                    # General numbers
                    # Regular expression: [0-9]+\.?[0-9]*   e.g. 5.52 => 5.52; 12 => 12
                    temp = re.match(r"\d+\.?\d*", argument[i:]).group()
                    i += len(temp) - 1
                    self.output_token(temp, False)
                # No.3 end
            else:
                # No.4
                # Other characters
                # Regular expression: +|-|/|(|)|,|ε
                self.output_token(argument[i])
            # Every branch leaves i on the last character it consumed
            i += 1

    # Output function
    # An interpreter does not have to print tokens to the screen, but the
    # assignment requires it, so all output goes through this one function.
    # The parser can then take the tokens from output_list, and changing
    # where the output goes only means changing this function instead of
    # code scattered through the lexer.
    def output_token(self, token, NotNumber=True):
        if NotNumber:
            # Look the token up in the table (KeyError if it is unknown)
            self.output_list.append([token, self.TOKEN[token]])
        else:
            # Numbers are not in the table; build their entry on the fly
            self.output_list.append(
                [token, {'TYPE': 'NUMBER', 'VALUE': float(token), 'FUNCTION': None}])
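The No.1 to No.4 rules can be condensed into a stand-alone sketch that returns (lexeme, kind) pairs instead of table entries. This is a simplified rewrite for illustration, not the author's code. `split_argument` and the kind labels are hypothetical. The sketch assumes the input is already upper case, because the table only contains upper-case words.

```python
import re

LETTERS = re.compile(r"[A-Z]+")      # No.2: words such as SIN, PI
NUMBER = re.compile(r"\d+\.?\d*")    # No.3: 12, 5.52, 12.
FRACTION = re.compile(r"\.\d+")      # No.3: .52


def split_argument(argument):
    """Split one whitespace-free argument into (lexeme, kind) pairs."""
    tokens = []
    i = 0
    while i < len(argument):
        ch = argument[i]
        if ch == '*':
            # One character of lookahead separates "*" from "**"
            if argument[i + 1:i + 2] == '*':
                tokens.append(('**', 'OP'))
                i += 2
            else:
                tokens.append(('*', 'OP'))
                i += 1
        elif 'A' <= ch <= 'Z':
            word = LETTERS.match(argument, i).group()
            tokens.append((word, 'WORD'))
            i += len(word)
        elif ch == '.':
            m = FRACTION.match(argument, i)
            if m is None:
                raise ValueError('bad number at position %d' % i)
            # ".52" is normalized to "0.52", as in the lexer above
            tokens.append(('0' + m.group(), 'NUMBER'))
            i += len(m.group())
        elif '0' <= ch <= '9':
            num = NUMBER.match(argument, i).group()
            tokens.append((num, 'NUMBER'))
            i += len(num)
        else:
            # +, -, / are operators; everything else is a delimiter
            tokens.append((ch, 'OP' if ch in '+-/' else 'MARK'))
            i += 1
    return tokens


print(split_argument('(100*SIN(.5),PI**2)'))
```

The slice `argument[i + 1:i + 2]` is simply empty at the end of the string, so a trailing `*` needs no separate bounds check.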

Scanner

Unchanged from the previous article.

For the code, see:

Compiler Principles Lab: Function Drawing Language (II): Lexical Analyzer

Parser

Parser main code

File name: myparser.py

# -*- coding: utf-8 -*-
"""
Created on Wed Dec 2 19:36:32 2020
@author: FishPotatoChen
Copyright (c) 2020 FishPotatoChen All rights reserved.
"""
# Parser
import ast

import astunparse  # third-party package: pip install astunparse

import scanner  # the scanner module from part (II)


class Parser:

    def __init__(self):
        self.scanner = scanner.Scanner("test.txt")
        # Token stream: a list of [lexeme, table entry] pairs
        self.dataflow = self.scanner.analyze()
        self.i = 0  # current read position
        self.length = len(self.dataflow)  # length of the token stream

    def analyze(self):
        print('enter in program')
        self.i = 0
        while self.i < self.length:
            print('enter in statement')
            # Every statement starts with a reserved word:
            # S -> 'ORIGIN' T | 'SCALE' T | 'ROT' T | 'FOR' P
            # T -> 'IS' '(' E ',' E ')' | 'IS' E
            # E is an arithmetic expression
            # P is the special structure that follows FOR
            if self.dataflow[self.i][1]['TYPE'] == 'KEYWORD':
                self.output(self.dataflow[self.i][0])
            else:
                # Without this branch self.i would never advance
                raise SyntaxError('statement must start with a keyword: '
                                  + self.dataflow[self.i][0])
            print('exit from statement')
        print('exit from program')

    def output(self, string):
        # All statements print the same enter/match/exit structure, so they
        # share this function. Parsing ends once the syntax trees are produced.
        print('enter in ' + string.lower() + '_statement')
        print('matchtoken ' + string.upper())
        self.i += 1
        if string in ('ORIGIN', 'SCALE'):
            self.ORIGIN_or_SCALE()
        elif string == 'ROT':
            self.ROT()
        elif string == 'FOR':
            self.FOR()
        else:
            raise SyntaxError('unexpected keyword: ' + string)
        print('exit from ' + string.lower() + '_statement')

    def ORIGIN_or_SCALE(self):
        # 'IS' '(' E ',' E ')'
        self.matchstring('IS')
        templist = self.matchparameter()
        self.outputTree(templist[0])
        self.outputTree(templist[1])

    def ROT(self):
        # 'IS' E
        self.matchstring('IS')
        self.outputTree(self.matchexpression())

    def FOR(self):
        # 'T' 'FROM' E 'TO' E 'STEP' E 'DRAW' '(' E ',' E ')'
        self.matchstring('T')
        self.matchstring('FROM')
        self.outputTree(self.matchexpression())
        self.matchstring('TO')
        self.outputTree(self.matchexpression())
        self.matchstring('STEP')
        self.outputTree(self.matchexpression())
        self.matchstring('DRAW')
        templist = self.matchparameter()
        self.outputTree(templist[0])
        self.outputTree(templist[1])

    def matchstring(self, string):
        # Match one specific token
        if self.i < self.length and self.dataflow[self.i][0] == string:
            print('matchtoken ' + string)
            self.i += 1
        else:
            raise SyntaxError('expected ' + string)

    # Difference between matchparameter and matchexpression:
    # matchparameter matches a pair of expressions (E,E), while
    # matchexpression matches either a single expression or a pair.
    # They are kept separate because ROT is followed by a single expression,
    # ORIGIN and SCALE by a pair, and FOR contains both.

    def matchparameter(self):
        # Match a parameter list (E,E), e.g. the parenthesized part
        # (including the parentheses) of
        # ORIGIN IS (5,5);
        temp = self.matchexpression()  # buffer
        # Convert "(E,E)" into the list [E, E]
        if temp.startswith('(') and temp.endswith(')'):
            temp = temp[1:-1].split(',')
        else:
            raise SyntaxError('expected a parameter list (E,E)')
        return temp

    def matchexpression(self):
        # Match E or (E,E), i.e. an arithmetic expression, e.g.
        # 5*2-LN(3)
        # (5*2-3,TAN(0.1))
        # The tokens are concatenated into a Python expression string:
        # functions become their Python name (e.g. math.log) and constants
        # their numeric value.
        temp = ''  # buffer
        # Check the bound first so the index never runs past the stream
        while (self.i < self.length
               and self.dataflow[self.i][0] != ';'
               and self.dataflow[self.i][1]['TYPE'] != 'KEYWORD'):
            if self.dataflow[self.i][1]['TYPE'] == 'FUNC':
                temp += self.dataflow[self.i][1]['FUNCTION']
            elif self.dataflow[self.i][1]['TYPE'] == 'CONST_ID':
                temp += str(self.dataflow[self.i][1]['VALUE'])
            else:
                temp += self.dataflow[self.i][0]
            self.i += 1
        if self.i < self.length and self.dataflow[self.i][0] == ';':
            self.i += 1  # skip the semicolon that ends the statement
        return temp

    def outputTree(self, string):
        # Print the syntax tree that Python's own parser builds
        print('enter in expression')
        print(astunparse.dump(ast.parse(string, filename='<unknown>')))
        print('exit from expression')
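matchexpression does not build a tree itself. It turns the tokens back into a Python expression string, and outputTree hands that string to ast.parse. The sketch below replays those steps on a hand-written token stream for `ORIGIN IS (100*SIN(0.5),PI/4);`. The stream, `entry` and `to_python_source` are hypothetical, modeled on the [lexeme, entry] pairs the lexer produces.

```python
import ast
import math


def entry(kind, value=None, function=None):
    # Same shape as a TOKEN table entry
    return {'TYPE': kind, 'VALUE': value, 'FUNCTION': function}


tokens = [
    ['ORIGIN', entry('KEYWORD')], ['IS', entry('KEYWORD')],
    ['(', entry('MARK')], ['100', entry('NUMBER', 100.0)], ['*', entry('OP')],
    ['SIN', entry('FUNC', function='math.sin')], ['(', entry('MARK')],
    ['0.5', entry('NUMBER', 0.5)], [')', entry('MARK')], [',', entry('MARK')],
    ['PI', entry('CONST_ID', math.pi)], ['/', entry('OP')],
    ['4', entry('NUMBER', 4.0)], [')', entry('MARK')], [';', entry('END')],
]


def to_python_source(tokens, start):
    """Concatenate tokens from `start` until ';' or a keyword, the way
    matchexpression does; return (source, next_position)."""
    parts = []
    i = start
    while (i < len(tokens) and tokens[i][0] != ';'
           and tokens[i][1]['TYPE'] != 'KEYWORD'):
        lexeme, info = tokens[i]
        if info['TYPE'] == 'FUNC':
            parts.append(info['FUNCTION'])      # SIN -> math.sin
        elif info['TYPE'] == 'CONST_ID':
            parts.append(str(info['VALUE']))    # PI -> 3.141592653589793
        else:
            parts.append(lexeme)
        i += 1
    if i < len(tokens) and tokens[i][0] == ';':
        i += 1  # consume the terminator
    return ''.join(parts), i


source, pos = to_python_source(tokens, 2)  # start after ORIGIN IS
print(source)  # (100*math.sin(0.5),3.141592653589793/4)

# Like matchparameter: strip the outer parentheses, then split on ','
left, right = source[1:-1].split(',')
tree = ast.parse(left, mode='eval')
```

Splitting on `','` works here because every function in this language takes a single argument. A function with several arguments would need a parenthesis-aware split.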

Main function

File name: main.py

# -*- coding: utf-8 -*-
"""
Created on Wed Dec 3 18:27:18 2020
@author: FishPotatoChen
Copyright (c) 2020 FishPotatoChen All rights reserved.
"""
from myparser import Parser

if __name__ == '__main__':
    Parser().analyze()

Test

Test file

File name: test.txt

origin is (100*sin(0.5),100*cos(0.6));

origin is (0+tan(0.1),0-ln(e));

origin is (10,10);

origin is (0,0);

origin is (10,10);

ROT is E/4;

ROT is pi ** 4;

ROT is pi /4*5;

ROT is sin( 4.5)*cos(3.5);

ROT is pi/4;

Test result

enter in program

enter in statement

enter in origin_statement

matchtoken ORIGIN

matchtoken IS

enter in expression

Module(body=[Expr(value=BinOp(

left=Num(n=100),

op=Mult(),

right=Call(

func=Attribute(

value=Name(

id='math',

ctx=Load()),

attr='sin',

ctx=Load()),

args=[Num(n=0.5)],

keywords=[])))])

exit from expression

enter in expression

Module(body=[Expr(value=BinOp(

left=Num(n=100),

op=Mult(),

right=Call(

func=Attribute(

value=Name(

id='math',

ctx=Load()),

attr='cos',

ctx=Load()),

args=[Num(n=0.6)],

keywords=[])))])

exit from expression

exit from origin_statement

exit from statement

enter in statement

enter in origin_statement

matchtoken ORIGIN

matchtoken IS

enter in expression

Module(body=[Expr(value=BinOp(

left=Num(n=0),

op=Add(),

right=Call(

func=Attribute(

value=Name(

id='math',

ctx=Load()),

attr='tan',

ctx=Load()),

args=[Num(n=0.1)],

keywords=[])))])

exit from expression

enter in expression

Module(body=[Expr(value=BinOp(

left=Num(n=0),

op=Sub(),

right=Call(

func=Attribute(

value=Name(

id='math',

ctx=Load()),

attr='log',

ctx=Load()),

args=[Num(n=2.718281828459045)],

keywords=[])))])

exit from expression

exit from origin_statement

exit from statement

enter in statement

enter in origin_statement

matchtoken ORIGIN

matchtoken IS

enter in expression

Module(body=[Expr(value=Num(n=10))])

exit from expression

enter in expression

Module(body=[Expr(value=Num(n=10))])

exit from expression

exit from origin_statement

exit from statement

enter in statement

enter in origin_statement

matchtoken ORIGIN

matchtoken IS

enter in expression

Module(body=[Expr(value=Num(n=0))])

exit from expression

enter in expression

Module(body=[Expr(value=Num(n=0))])

exit from expression

exit from origin_statement

exit from statement

enter in statement

enter in origin_statement

matchtoken ORIGIN

matchtoken IS

enter in expression

Module(body=[Expr(value=Num(n=10))])

exit from expression

enter in expression

Module(body=[Expr(value=Num(n=10))])

exit from expression

exit from origin_statement

exit from statement

enter in statement

enter in rot_statement

matchtoken ROT

matchtoken IS

enter in expression

Module(body=[Expr(value=BinOp(

left=Num(n=2.718281828459045),

op=Div(),

right=Num(n=4)))])

exit from expression

exit from rot_statement

exit from statement

enter in statement

enter in rot_statement

matchtoken ROT

matchtoken IS

enter in expression

Module(body=[Expr(value=BinOp(

left=Num(n=3.141592653589793),

op=Pow(),

right=Num(n=4)))])

exit from expression

exit from rot_statement

exit from statement

enter in statement

enter in rot_statement

matchtoken ROT

matchtoken IS

enter in expression

Module(body=[Expr(value=BinOp(

left=BinOp(

left=Num(n=3.141592653589793),

op=Div(),

right=Num(n=4)),

op=Mult(),

right=Num(n=5)))])

exit from expression

exit from rot_statement

exit from statement

enter in statement

enter in rot_statement

matchtoken ROT

matchtoken IS

enter in expression

Module(body=[Expr(value=BinOp(

left=Call(

func=Attribute(

value=Name(

id='math',

ctx=Load()),

attr='sin',

ctx=Load()),

args=[Num(n=4.5)],

keywords=[]),

op=Mult(),

right=Call(

func=Attribute(

value=Name(

id='math',

ctx=Load()),

attr='cos',

ctx=Load()),

args=[Num(n=3.5)],

keywords=[])))])

exit from expression

exit from rot_statement

exit from statement

enter in statement

enter in rot_statement

matchtoken ROT

matchtoken IS

enter in expression

Module(body=[Expr(value=BinOp(

left=Num(n=3.141592653589793),

op=Div(),

right=Num(n=4)))])

exit from expression

exit from rot_statement

exit from statement

exit from program

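Brief explanation

Library description

The parser uses Python's ast library, Python's own package for building syntax trees. The astunparse library makes the printed tree easier to read, with each nesting level on its own indented line. The output above was produced with a Python version older than 3.8. From Python 3.8 on, ast.parse represents numbers as Constant(value=...) instead of Num(n=...), so the printed trees differ slightly.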
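The same tree can also be printed with the standard library alone, without astunparse. `ast.dump` accepts an `indent` argument from Python 3.9 on. This is a minimal alternative under that assumption, not what the author used.

```python
import ast

# The same kind of string the parser builds for "100*SIN(0.5)"
tree = ast.parse('100*math.sin(0.5)', filename='<unknown>')

# indent= requires Python 3.9+; numbers appear as Constant nodes here
print(ast.dump(tree, indent=4))
```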

AST Grammar

It is essentially the same as the context-free grammars in the textbook, except that → is written as =. Alternatives are still separated by |. Read a few productions and the correspondence is easy to see.

For example:

mod = Module(stmt* body)

| Interactive(stmt* body)

| Expression(expr body)

In the textbook's notation this becomes:

$$mod \rightarrow Module(stmt^{*}\ body) \mid Interactive(stmt^{*}\ body) \mid Expression(expr\ body)$$

Here stmt* body says that the field named body holds a sequence of zero or more stmt nodes (in ASDL, * marks a sequence and ? marks an optional field). Each stmt can in turn be derived further. The only other difference from the textbook is naming: the book writes each non-terminal as a single capital letter, while ASDL uses whole words.
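Each ASDL constructor corresponds to a node class in Python's ast module, and the class's fields carry the names written in the grammar. The short check below illustrates this. It assumes a reasonably recent Python 3; the parse modes `exec`, `single` and `eval` select the three `mod` alternatives.

```python
import ast

# BinOp(expr left, operator op, expr right) -> fields left, op, right
print(ast.BinOp._fields)  # ('left', 'op', 'right')
print(ast.Call._fields)   # ('func', 'args', 'keywords')

# The three alternatives of `mod` correspond to the three parse modes
print(type(ast.parse('x = 1', mode='exec')).__name__)    # Module
print(type(ast.parse('x = 1', mode='single')).__name__)  # Interactive
print(type(ast.parse('1 + 2', mode='eval')).__name__)    # Expression

# `stmt* body` is a Python list of stmt nodes (possibly empty)
module = ast.parse('a = 1\nb = 2')
print(len(module.body))  # 2
```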

The complete grammar

-- ASDL's 7 builtin types are:

-- identifier, int, string, bytes, object, singleton, constant

--

-- singleton: None, True or False

-- constant can be None, whereas None means "no value" for object.

module Python

{

mod = Module(stmt* body)

| Interactive(stmt* body)

| Expression(expr body)

-- not really an actual node but useful in Jython's typesystem.

| Suite(stmt* body)

stmt = FunctionDef(identifier name, arguments args,

stmt* body, expr* decorator_list, expr? returns)

| AsyncFunctionDef(identifier name, arguments args,

stmt* body, expr* decorator_list, expr? returns)

| ClassDef(identifier name,

expr* bases,

keyword* keywords,

stmt* body,

expr* decorator_list)

| Return(expr? value)

| Delete(expr* targets)

| Assign(expr* targets, expr value)

| AugAssign(expr target, operator op, expr value)

-- 'simple' indicates that we annotate simple name without parens

| AnnAssign(expr target, expr annotation, expr? value, int simple)

-- use 'orelse' because else is a keyword in target languages

| For(expr target, expr iter, stmt* body, stmt* orelse)

| AsyncFor(expr target, expr iter, stmt* body, stmt* orelse)

| While(expr test, stmt* body, stmt* orelse)

| If(expr test, stmt* body, stmt* orelse)

| With(withitem* items, stmt* body)

| AsyncWith(withitem* items, stmt* body)

| Raise(expr? exc, expr? cause)

| Try(stmt* body, excepthandler* handlers, stmt* orelse, stmt* finalbody)

| Assert(expr test, expr? msg)

| Import(alias* names)

| ImportFrom(identifier? module, alias* names, int? level)

| Global(identifier* names)

| Nonlocal(identifier* names)

| Expr(expr value)

| Pass | Break | Continue

-- XXX Jython will be different

-- col_offset is the byte offset in the utf8 string the parser uses

attributes (int lineno, int col_offset)

-- BoolOp() can use left & right?

expr = BoolOp(boolop op, expr* values)

| BinOp(expr left, operator op, expr right)

| UnaryOp(unaryop op, expr operand)

| Lambda(arguments args, expr body)

| IfExp(expr test, expr body, expr orelse)

| Dict(expr* keys, expr* values)

| Set(expr* elts)

| ListComp(expr elt, comprehension* generators)

| SetComp(expr elt, comprehension* generators)

| DictComp(expr key, expr value, comprehension* generators)

| GeneratorExp(expr elt, comprehension* generators)

-- the grammar constrains where yield expressions can occur

| Await(expr value)

| Yield(expr? value)

| YieldFrom(expr value)

-- need sequences for compare to distinguish between

-- x < 4 < 3 and (x < 4) < 3

| Compare(expr left, cmpop* ops, expr* comparators)

| Call(expr func, expr* args, keyword* keywords)

| Num(object n) -- a number as a PyObject.

| Str(string s) -- need to specify raw, unicode, etc?

| FormattedValue(expr value, int? conversion, expr? format_spec)

| JoinedStr(expr* values)

| Bytes(bytes s)

| NameConstant(singleton value)

| Ellipsis

| Constant(constant value)

-- the following expression can appear in assignment context

| Attribute(expr value, identifier attr, expr_context ctx)

| Subscript(expr value, slice slice, expr_context ctx)

| Starred(expr value, expr_context ctx)

| Name(identifier id, expr_context ctx)

| List(expr* elts, expr_context ctx)

| Tuple(expr* elts, expr_context ctx)

-- col_offset is the byte offset in the utf8 string the parser uses

attributes (int lineno, int col_offset)

expr_context = Load | Store | Del | AugLoad | AugStore | Param

slice = Slice(expr? lower, expr? upper, expr? step)

| ExtSlice(slice* dims)

| Index(expr value)

boolop = And | Or

operator = Add | Sub | Mult | MatMult | Div | Mod | Pow | LShift

| RShift | BitOr | BitXor | BitAnd | FloorDiv

unaryop = Invert | Not | UAdd | USub

cmpop = Eq | NotEq | Lt | LtE | Gt | GtE | Is | IsNot | In | NotIn

comprehension = (expr target, expr iter, expr* ifs, int is_async)

excepthandler = ExceptHandler(expr? type, identifier? name, stmt* body)

attributes (int lineno, int col_offset)

arguments = (arg* args, arg? vararg, arg* kwonlyargs, expr* kw_defaults,

arg? kwarg, expr* defaults)

arg = (identifier arg, expr? annotation)

attributes (int lineno, int col_offset)

-- keyword arguments supplied to call (NULL identifier for **kwargs)

keyword = (identifier? arg, expr value)

-- import name with optional 'as' alias.

alias = (identifier name, identifier? asname)

withitem = (expr context_expr, expr? optional_vars)

}

Example

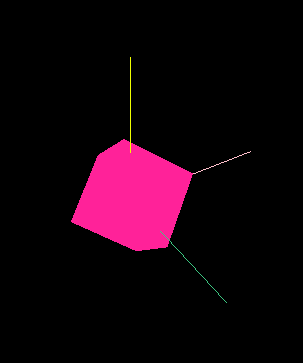

Take 100*sin(0.5) as an example. The tree built for this expression is:

Module(body=[Expr(value=BinOp(

left=Num(n=100),

op=Mult(),

right=Call(

func=Attribute(

value=Name(

id='math',

ctx=Load()),

attr='sin',

ctx=Load()),

args=[Num(n=0.5)],

keywords=[])))])

How does this form a tree?

1. value=BinOp: the expression is a binary operation, so the root node is an operator.

2. left=: what follows describes the left child.

3. Num(n=100): the left child is a number with value 100.

4. op=Mult(): step 1 made the root an operator; this says the operator is multiplication.

5. right=: what follows describes the right child.

6. Call: the right child is a function call.

7. id='math': the name is math, i.e. Python's math library.

8. attr='sin': the attribute is sin, i.e. the sin function is called.

9. args=[Num(n=0.5)]: the argument is a number with value 0.5.

Steps 5 to 9 show that the right child of this tree is a call to the sin function in the math library, with the number 0.5 as its argument.
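The sketch below shows that the dump really is a tree by walking it recursively. Each BinOp evaluates its left and right children and then applies the operator at its root, following the steps above. `evaluate` and `OPS` are hypothetical helpers that only cover the node types in the examples above. The sketch assumes Python 3.8+, where numbers are Constant nodes.

```python
import ast
import math
import operator

# Hypothetical mapping for the operators this language uses
OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
       ast.Div: operator.truediv, ast.Pow: operator.pow}


def evaluate(node):
    """Recursively evaluate the small subset of nodes shown above."""
    if isinstance(node, ast.Expr):
        return evaluate(node.value)
    if isinstance(node, ast.BinOp):
        # Root is the operator; evaluate the left and right children first
        return OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
    if isinstance(node, ast.Constant):  # printed as Num(n=...) before 3.8
        return node.value
    if isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute):
        # func=Attribute(value=Name(id='math'), attr='sin')
        module = {'math': math}[node.func.value.id]
        func = getattr(module, node.func.attr)
        return func(*[evaluate(arg) for arg in node.args])
    raise ValueError('unsupported node: ' + type(node).__name__)


tree = ast.parse('100*math.sin(0.5)')
root = tree.body[0]  # Expr(value=BinOp(...))
print(root.value.right.func.value.id, root.value.right.func.attr)  # math sin
print(evaluate(root))  # same value as 100*math.sin(0.5)
```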
