
Architecture and Performance Analysis of Convolutional Neural Networks

2022-06-12 07:50:00 【FishPotatoChen】


Convolutional neural networks are now widely used in image recognition. The starting point is the original CNN, from which R-CNN evolved. Because R-CNN was not fast enough, the improved Fast R-CNN and the still faster Faster R-CNN followed. These raised detection speed, but accuracy remained limited. Raising accuracy meant adding layers, which made gradients vanish, so ResNet was proposed. AlexNet was proposed with pooling layers added to handle features of different sizes. Mask R-CNN was then built on top of ResNet. To make detection faster still, the selection of candidate boxes was reworked and YOLO was created around the idea of regression. YOLO fixed shortcomings of the earlier methods but introduced new ones; combining YOLO with earlier ideas produced SSD. Because YOLO and SSD are single-stage (one-shot) detectors, they are fast but less accurate, and RetinaNet was proposed to address this.

This article analyzes the frameworks above and compares their performance.

1 Overview

The original idea of the neural network comes from brain science: to recognize objects, a low-level neural network is built in imitation of the brain. At this stage the network is fully connected and the programmer adjusts the parameters by hand. In essence this is human guesswork: try different parameters, observe the output, and adjust manually until two objects can be told apart. The result is a classifier. This approach is obviously slow, and with too many layers the available computing power cannot keep up. For example, suppose the input is a $5 \times 5$ image and one fully connected layer has $10$ neurons. That layer needs $5 \times 5 \times 10 = 250$ multiplications. The outputs of the neurons are then summed to give one number, which serves as the feature of the object, and the parameters of the $10$ neurons are adjusted until the feature value for the same object is stable. If another fully connected layer of $10$ neurons is added, the count becomes $5 \times 5 \times 10 + 10 \times 10 = 350$ multiplications, and the parameters of $20$ neurons have to be tuned. This puts heavy pressure on the computer, and the stability and correctness of the result depend heavily on whoever tunes the parameters. To solve the tuning problem, the backpropagation algorithm was proposed: the result is fed back through the network, derivatives are used to update the neuron parameters, and the network is optimized until it reaches a local minimum.

Once a local optimum is reached, the parameters get stuck there, whereas the best solution is the global optimum. This is where dropout comes in: the outputs of some randomly chosen neurons are set to zero during training, which perturbs the network so that the search can continue from a different position and look for a better solution. (The more commonly cited benefit of dropout is reduced overfitting, as noted in the AlexNet section.)
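The arithmetic in the example above can be checked with a few lines of Python. This is only a sketch of the counting argument; the helper name `dense_multiplications` is made up for illustration and biases are ignored.

```python
def dense_multiplications(layer_sizes):
    """Count multiplications in a stack of fully connected layers.

    layer_sizes[0] is the number of inputs; every later entry is the
    number of neurons in that layer.
    """
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

one_layer = dense_multiplications([5 * 5, 10])        # 5*5*10
two_layers = dense_multiplications([5 * 5, 10, 10])   # 5*5*10 + 10*10
print(one_layer, two_layers)
```

The count grows with the product of adjacent layer widths, which is why stacking wide fully connected layers on large images becomes expensive quickly.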

Every object has its own features, and the human brain uses them to distinguish objects. A computer is not that intelligent and cannot distinguish objects directly by their features: because images come in different sizes, the same feature looks different to the computer at each size. We therefore need a way to scale features up and down so that they become independent of image size. To this end, convolution kernels and pooling layers were added on top of full connection. They rescale the image on the one hand and reduce the amount of computation on the other, giving better results. This is how the convolutional neural network was born.
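To make the two building blocks concrete, here is a minimal NumPy sketch of a convolution followed by max pooling. The function names, the 6 x 6 input, and the averaging kernel are illustrative assumptions; real frameworks use optimized implementations with padding, strides, and multiple channels.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products.

    As in most deep learning libraries, the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(feature, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h = feature.shape[0] // size * size
    w = feature.shape[1] // size * size
    blocks = feature[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

image = np.arange(36, dtype=float).reshape(6, 6)
kernel = np.ones((3, 3)) / 9.0            # simple averaging kernel
features = conv2d_valid(image, kernel)    # 6x6 input -> 4x4 feature map
pooled = max_pool(features)               # 4x4 -> 2x2
print(features.shape, pooled.shape)
```

Both steps shrink the spatial size, which is where the reduction in computation for the later fully connected layers comes from.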

2 CNN

CNN is the most basic convolutional neural network. Starting from the original fully connected network, it adds convolution kernels to extract features, classifies the extracted features with fully connected layers, and picks the class with the highest score as the recognition result. For example, to tell cats from dogs, build a training set in which cat images are labeled $0$ and dog images are labeled $1$. After passing through the network, an image whose output is close to $0$ is a cat and one whose output is close to $1$ is a dog.

A CNN extracts the features of an image by itself and then classifies them to obtain the result. This is also where deep learning originates: the machine extracts features autonomously. The shortcomings of CNN are obvious. First, it assumes there is only one object in the image, because it does not split the image into regions. Second, gradients vanish as the number of layers grows, so the extra layers contribute little.

3 R-CNN

R-CNN addresses the first of these defects. It creates multiple bounding boxes in the image and checks whether any of them contains a target object. R-CNN uses selective search to extract these boxes from an image. Selective search identifies features of the objects in the image and groups regions based on those features. The process is as follows:

- Input an image;

- Generate the initial segmentation of the image;

- Merge similar regions into larger ones based on color, texture, size, and shape;

- Generate the ROIs (Regions of Interest).

The steps for detecting target objects with R-CNN are as follows:

- Take a pre-trained convolutional neural network;

- Retrain the last layer of the network according to the number of target classes to detect;

- Obtain the ROIs of each image and reshape them to match the input dimensions the CNN requires;

- After obtaining the CNN output, train SVMs (support vector machines) to separate target objects from background. One binary SVM has to be trained for each class.

In this way R-CNN can detect target objects, but its shortcomings are just as obvious. In step 3 above, about $2000$ ROIs are needed per image and each ROI needs its own CNN pass, which makes an R-CNN model very expensive to train: selective search extracts about $2000$ separate regions from each image, and a CNN extracts the features of each region. With $N$ images, the CNN has to compute $N \times 2000$ sets of features. Predicting on a new image takes $40$ to $50$ seconds, so processing large datasets is practically impossible.

4 Fast R-CNN

The basic R-CNN pipeline first generates ROIs, then extracts features for each ROI, and finally classifies those features to find the targets. Most of the time is spent in the CNN. The authors of Fast R-CNN reasoned that running the CNN only once per image would be faster, so they reordered the steps of R-CNN. The CNN is first applied to the whole image to obtain a feature map (if convolving matrix $A$ yields matrix $B$, then $B$ is the feature map of $A$). ROIs are then delimited on the feature map, an ROI pooling layer resizes each ROI to a fixed size, and the result goes into fully connected layers for classification. Each image now needs only one CNN pass, which cuts the time. The other shortcomings of R-CNN remain, however: Fast R-CNN still uses selective search to find regions of interest, and that process is usually slow. Fast R-CNN takes about $2$ seconds per image, which is still not ideal for large datasets.
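The ROI pooling step can be sketched as follows. This is a simplified, single-channel illustration under assumed conventions (ROI given in feature-map coordinates as `(x0, y0, x1, y1)` with exclusive end, output fixed at 2 x 2); it is not the reference implementation, and the name `roi_max_pool` is hypothetical.

```python
import numpy as np

def roi_max_pool(feature_map, roi, out_size=2):
    """Max-pool the region roi = (x0, y0, x1, y1) down to out_size x out_size.

    The region must be at least out_size rows and columns large.
    """
    x0, y0, x1, y1 = roi
    region = feature_map[y0:y1, x0:x1]
    # Split rows and columns into out_size bins with integer boundaries.
    row_edges = np.linspace(0, region.shape[0], out_size + 1).astype(int)
    col_edges = np.linspace(0, region.shape[1], out_size + 1).astype(int)
    out = np.empty((out_size, out_size))
    for i in range(out_size):
        for j in range(out_size):
            cell = region[row_edges[i]:row_edges[i + 1],
                          col_edges[j]:col_edges[j + 1]]
            out[i, j] = cell.max()
    return out

feature_map = np.arange(64, dtype=float).reshape(8, 8)   # one shared feature map
pooled_a = roi_max_pool(feature_map, (1, 1, 6, 5))       # a 4x5 region
pooled_b = roi_max_pool(feature_map, (0, 0, 8, 8))       # the full 8x8 map
print(pooled_a.shape, pooled_b.shape)
```

Regions of different sizes come out with the same shape, so they can all be fed to the same fully connected layers while the convolutional features are computed only once.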

5 Faster R-CNN

Faster R-CNN is an optimized version of Fast R-CNN. It mainly optimizes ROI generation: Fast R-CNN uses selective search, whereas Faster R-CNN uses a Region Proposal Network (RPN). The RPN works as follows:

- Input the image and the GT (ground truth, that is, the correct bounding boxes of the targets);

- Each pixel of the feature map is an anchor. Centered on each anchor, generate $k$ rectangles of different sizes and aspect ratios as anchor boxes; $k$ defaults to $9$;

- Compute the IoU (Intersection over Union). A positive label is given to the anchor box that has the highest IoU with a GT box, and to any anchor box whose IoU with some GT box is greater than $0.7$. A negative label is given to anchor boxes whose IoU with every GT box is less than $0.3$. The RPN returns object proposals (OP) and their scores;

- Apply the ROI pooling layer to bring all proposals to the same size, pass them to the fully connected layers, and generate the bounding boxes of the target objects.
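The anchor generation and labeling rules in the list above can be sketched in NumPy. The scales (128, 256, 512) and aspect ratios (1:2, 1:1, 2:1) are assumptions that give $k = 9$; the text only states the default value of $k$. Marking anchors that are neither positive nor negative with -1 (ignored) is also an assumption of this sketch.

```python
import numpy as np

def make_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return k = len(scales) * len(ratios) boxes (x0, y0, x1, y1) centered on (cx, cy)."""
    boxes = []
    for s in scales:
        for r in ratios:
            w = s * np.sqrt(r)   # r is width / height; the area stays s * s
            h = s / np.sqrt(r)
            boxes.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return np.array(boxes)

def iou(a, b):
    """Intersection over Union of two boxes given as (x0, y0, x1, y1)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union

def label_anchors(anchors, gt_boxes, hi=0.7, lo=0.3):
    """1 = positive, 0 = negative, -1 = neither (ignored in this sketch)."""
    overlaps = np.array([[iou(a, g) for g in gt_boxes] for a in anchors])
    best = overlaps.max(axis=1)
    labels = np.full(len(anchors), -1)
    labels[best < lo] = 0                   # IoU below 0.3 with every GT box
    labels[best > hi] = 1                   # IoU above 0.7 with some GT box
    labels[overlaps.argmax(axis=0)] = 1     # best anchor for each GT box
    return labels

anchors = make_anchors(300, 300)
gt_boxes = [(172, 172, 428, 428)]           # one hypothetical ground-truth box
labels = label_anchors(anchors, gt_boxes)
print(labels)
```

Here the ground-truth box coincides with the square 256-pixel anchor, so that anchor is the only positive; its two elongated siblings overlap too much to be negative and too little to be positive.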

At this point the OP still differ considerably from the GT and need correction by translation and regression. Translation shifts a positive anchor box, that is, moves its center point. Regression keeps the anchor fixed and changes the size of the box. Both serve to bring the anchor box closer to the GT.

The softmax loss (cross-entropy loss) is then computed. The supervision label here comes from the GT ($1$ or $0$) and continually tells the network whether its output is right. Training proceeds in this way until the final classifier is trained and the bounding boxes of the target objects are generated.

In short, the RPN takes the feature map produced by the CNN as its input and is trained as a network that finds the bounding boxes of objects. The RPN separates foreground from background but does not decide what the object is. The object is then classified from the features inside its box.

At this point Faster R-CNN brings the detection time for one image down to $0.2$ seconds. The remaining problem is that accuracy is not high enough, because the number of CNN layers is generally limited to $5$.

6 AlexNet

When the number of CNN layers increases, gradients vanish: backpropagation updates the parameters near the input layer slowly or not at all. Like gradient explosion, this is mainly caused by repeated multiplication of parameters. With vanishing gradients, training a deep network is only as effective as training its last few layers, which held back the development of deep neural networks. AlexNet was the first approach proposed to counter vanishing gradients.
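The multiplication effect can be illustrated with a rough numerical sketch. It looks only at the activation derivatives along a chain of 20 layers and ignores the weights, which is a simplifying assumption; the depth of 20 is arbitrary.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)            # never larger than 0.25

def relu_grad(x):
    return (x > 0).astype(float)    # exactly 1 for positive inputs

depth = 20
# Best case for Sigmoid: its derivative peaks at x = 0.
sigmoid_chain = np.prod(sigmoid_grad(np.zeros(depth)))   # 0.25 ** 20
relu_chain = np.prod(relu_grad(np.ones(depth)))          # 1 ** 20
print(sigmoid_chain, relu_chain)
```

Even in the most favorable case the Sigmoid factors shrink the gradient by many orders of magnitude after 20 layers, while the ReLU factors leave it unchanged for active units.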

AlexNet contains several relatively new techniques and was the first to apply ReLU, Dropout, and LRN successfully in a CNN. It also used GPUs to speed up computation. AlexNet carried the ideas of LeNet forward, applying the basic principles of CNN to a much deeper and wider network. Its main new techniques are:

- ReLU is used as the CNN activation function and is shown to outperform Sigmoid in deeper networks, solving the gradient vanishing problem that Sigmoid has in deep networks. Although the ReLU activation function had been proposed long before, it was AlexNet that popularized it.

- Dropout is used during training to randomly ignore some neurons and avoid overfitting. Dropout has its own paper, but AlexNet put it into practice and demonstrated its effect. In AlexNet, Dropout is applied mainly in the last few fully connected layers.

- Overlapping max pooling is used in the CNN. Earlier CNNs commonly used average pooling; AlexNet uses max pooling throughout, avoiding the blurring effect of average pooling. AlexNet also makes the stride smaller than the pooling kernel, so the outputs of the pooling layer overlap, which enriches the features.

- The LRN (Local Response Normalization) layer is proposed. It creates competition among the activities of neighboring neurons, making large responses relatively larger and suppressing neurons with smaller responses, which improves the generalization of the model.

- CUDA is used to accelerate training of the deep convolutional network, exploiting the parallel computing power of GPUs for the many matrix operations in neural network training. AlexNet was trained on two GTX 580 GPUs. A single GTX 580 has only 3 GB of memory, which limits the maximum size of network that can be trained, so the authors split AlexNet across the two GPUs, each holding half of the neurons' parameters in its memory. Because the GPUs can access each other's memory directly without going through host memory, using several GPUs at once is also efficient. In addition, the design of AlexNet restricts communication between the GPUs to certain layers of the network, which limits the performance cost of communication.

- Data augmentation: $224 \times 224$ regions (and their horizontal mirror images) are cropped at random from the $256 \times 256$ original image, which multiplies the amount of data by $2 \times (256-224)^2 = 2048$. Without data augmentation, a CNN with many parameters would overfit on the original data alone; augmentation greatly reduces overfitting and improves generalization. At prediction time, five crops are taken (the four corners and the center) and each is flipped horizontally, giving $10$ images; the network predicts on all of them and the $10$ results are averaged. The AlexNet paper also mentions applying PCA to the RGB data of the images and adding a Gaussian perturbation with standard deviation $0.1$ along the principal components as noise, which lowers the error rate by a further $1\%$.
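The data augmentation item can be sketched in NumPy as follows. The function names are illustrative, and the crop count reproduces the formula from the text; counting both end positions would give 33 offsets per axis rather than 32.

```python
import numpy as np

def count_crops(src=256, crop=224):
    """Number of crops as counted in the text, times 2 for the horizontal mirror."""
    return 2 * (src - crop) ** 2

def random_crop_flip(image, crop=224, rng=None):
    """Training-time augmentation: random crop plus a random horizontal flip."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    top = rng.integers(0, h - crop + 1)
    left = rng.integers(0, w - crop + 1)
    patch = image[top:top + crop, left:left + crop]
    if rng.random() < 0.5:
        patch = patch[:, ::-1]          # horizontal mirror
    return patch

def ten_crop(image, crop=224):
    """Test-time crops: four corners plus center, each with its mirror."""
    h, w = image.shape[:2]
    offsets = [(0, 0), (0, w - crop), (h - crop, 0), (h - crop, w - crop),
               ((h - crop) // 2, (w - crop) // 2)]
    patches = [image[t:t + crop, l:l + crop] for t, l in offsets]
    return patches + [p[:, ::-1] for p in patches]

image = np.zeros((256, 256, 3))
patch = random_crop_flip(image, rng=np.random.default_rng(0))
print(count_crops(), patch.shape, len(ten_crop(image)))
```

At prediction time the network would be run on all ten patches returned by `ten_crop` and the outputs averaged.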

7 ResNet

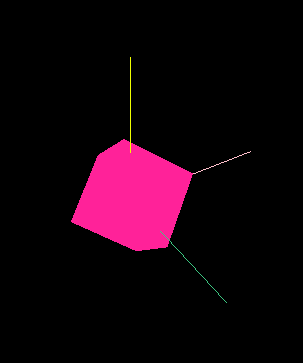

ResNet is the second approach proposed to counter vanishing gradients. ResNet adds skip connections across layers so that information is passed along layer by layer and gradients do not vanish. Suppose ResNet adds a skip connection every two layers, as shown in the figure:

In this way the depth of the network can be increased to $152$ layers, and the added depth brings a significant gain in accuracy.
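A skip connection spanning two layers can be sketched as below. For brevity the two layers are plain matrix multiplications rather than convolutions, and the weights are set to zero to show the extreme case; both are assumptions of this illustration.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """Two weight layers plus an identity shortcut: out = relu(F(x) + x)."""
    hidden = relu(x @ w1)
    return relu(hidden @ w2 + x)    # the shortcut adds the input back

x = np.array([[1.0, 2.0, 3.0]])
zeros = np.zeros((3, 3))
out = residual_block(x, zeros, zeros)
print(out)
```

Even when the two weight layers contribute nothing, the input still reaches the output through the shortcut, which is the path that lets information and gradients bypass the stacked layers.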

8 Mask R-CNN

Mask R-CNN adds more powerful capabilities on top of Faster R-CNN. As analyzed earlier, the RPN in Faster R-CNN can only box objects; it cannot perform semantic segmentation or instance segmentation. Mask R-CNN combines Faster R-CNN with the semantic segmentation algorithm FCN: Faster R-CNN detects targets quickly and accurately, and FCN performs accurate semantic segmentation. Because ROI Pooling was found to introduce pixel misalignment, the ROIAlign strategy was proposed to correct it; together with the pixel-accurate masks from FCN, this yields high accuracy. In other words, Mask R-CNN is Faster R-CNN + ROIAlign (an improvement on ROI Pooling) + FCN. Although Mask R-CNN is more complex than Faster R-CNN, its speed remains about the same.

The general process is as follows:

- Input the image to be processed and apply the corresponding preprocessing (or input an already preprocessed image);

- Feed it into a pre-trained neural network (ResNet, etc.) to obtain the corresponding feature map;

- Set a predefined number of ROIs at every point of the feature map to obtain multiple candidate ROIs;

- Send the candidate ROIs to the RPN for binary classification (foreground or background) and bounding-box (BB) regression, filtering out some of the candidates;

- Apply ROIAlign to the remaining ROIs (that is, first map the pixels of the original image to the feature map, then map the feature map to the fixed-size feature);

- Classify these ROIs ($N$-class classification), perform BB regression, and generate masks (running FCN inside each ROI).

The biggest improvement in Mask R-CNN is ROI Align. In Faster R-CNN, coordinates are rounded to integers twice:

- The $x, y, w, h$ of a region proposal are usually fractional, but are rounded to integers for convenience.

- The rounded region is divided evenly into $k \times k$ cells, and the boundaries of each cell are rounded again.

After these two rounding steps the candidate box has shifted from its original position, and the shift affects the accuracy of detection or segmentation. To solve this, ROI Align drops the rounding and keeps the fractional coordinates, using bilinear interpolation to obtain the feature values at floating-point positions. In practice, though, ROI Align is not just a matter of filling in the coordinate points on the boundary of the candidate region and then pooling; the operation is redesigned.

With bilinear interpolation applied to the feature map, $4$ sampling points are placed inside each cell, and max pooling is then taken over the $4$ points in the cell, as shown in the figure:

The dashed grid is the feature map. Each sampling point inside a cell (a small box bounded by red lines inside the black box) is obtained by bilinear interpolation from the $4$ nearest feature-map points (the $4$ points the arrows point to), and max pooling over the sampling points then gives the value of each cell.
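The sampling described above can be sketched for a single cell. Placing the four sample points at the quarter positions of the cell is an assumption of this sketch, and the function names are illustrative.

```python
import numpy as np

def bilinear(feature, y, x):
    """Value of `feature` at the fractional position (y, x), from its 4 nearest grid points."""
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1 = min(y0 + 1, feature.shape[0] - 1)
    x1 = min(x0 + 1, feature.shape[1] - 1)
    dy, dx = y - y0, x - x0
    top = (1 - dx) * feature[y0, x0] + dx * feature[y0, x1]
    bottom = (1 - dx) * feature[y1, x0] + dx * feature[y1, x1]
    return (1 - dy) * top + dy * bottom

def roi_align_cell(feature, y_start, x_start, cell_h, cell_w):
    """Max over 4 regularly spaced sample points in one cell; no coordinate is rounded."""
    samples = [bilinear(feature, y_start + fy * cell_h, x_start + fx * cell_w)
               for fy in (0.25, 0.75) for fx in (0.25, 0.75)]
    return max(samples)

feature = np.arange(16, dtype=float).reshape(4, 4)   # feature[y, x] = 4*y + x
value = bilinear(feature, 1.5, 2.25)
cell_value = roi_align_cell(feature, 0.5, 0.5, 1.2, 1.6)
print(value, cell_value)
```

Because the cell origin and size stay fractional throughout, the pooled value follows the proposal exactly instead of snapping to the nearest grid position.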

9 YOLO

The networks above separate object recognition from localization, so they are called two-stage object detectors. YOLO is a one-stage object detector: it merges the two processes and performs recognition and localization in one pass. YOLO mainly improves detection speed, but its mAP is not as high as that of Faster R-CNN.

The main idea of YOLO is to divide an image into $S \times S$ cells and produce the output per cell:

- If the center of an object falls in a cell, that cell is responsible for predicting the object.

- Each cell predicts $B$ bounding boxes (a bbox consists of the coordinates plus width and height) and one confidence score per bbox, so each cell predicts $B \times (4+1)$ values.

- Each cell predicts $C$ conditional class probabilities, where $C$ is the number of object classes.

- The output of the network therefore has dimension $S \times S \times (B \times 5 + C)$. Each cell is responsible for predicting only one object (which can be a problem with small objects), but it can predict several bbox values.
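The output size and the cell assignment rule from the list above can be worked through in a few lines. The values $S = 7$, $B = 2$, $C = 20$ and the 448 x 448 image are assumptions chosen for illustration; the text leaves them symbolic.

```python
def yolo_output_size(S=7, B=2, C=20):
    """Number of values the network outputs: S * S * (B * 5 + C)."""
    return S * S * (B * 5 + C)

def responsible_cell(cx, cy, img_w, img_h, S=7):
    """Grid cell (row, col) that contains the object center (cx, cy), given in pixels."""
    col = min(int(cx / img_w * S), S - 1)
    row = min(int(cy / img_h * S), S - 1)
    return row, col

print(yolo_output_size())                      # 7 * 7 * 30
print(responsible_cell(224, 100, 448, 448))    # cell holding the center (224, 100)
```

With these values each cell emits 30 numbers, and two objects whose centers land in the same cell compete for it, which is the small-object problem mentioned in the last bullet.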

YOLO raises the speed to $45$ images per second, but accuracy drops and there is a clear weakness: it performs poorly on small objects.

10 SSD

SSD adds the anchor box idea of Faster R-CNN to YOLO. YOLO ends with two fully connected layers, after which every output sees the entire image, which is not very reasonable. SSD removes the fully connected layers and replaces them with convolutional layers, so each output only sees the information around the target, including its context, which is more reasonable.

In YOLO, the output of the last layer is the input to Fast NMS. SSD appends several convolutional layers to YOLO and concatenates the outputs of these layers as the input to Fast NMS. SSD raises the speed to $58$ images per second and improves mAP, but it shares YOLO's weaknesses.

11 RetinaNet

The main idea of RetinaNet is to change how the loss function is computed: its focal loss is built on the cross-entropy loss. Observation and statistics show that the targets to be detected are usually far fewer than the background regions, and that the samples are sometimes skewed in other ways, for example when positive samples far outnumber negative ones. A network trained on such data performs very poorly. The authors of RetinaNet therefore weight the samples in the loss function. When positive samples far outnumber negative ones, the weight of the negative-sample loss is increased and that of the positive samples decreased; when the background far outweighs the targets, the weight of the background loss is reduced to limit its influence. This copes with large differences between the numbers of positive and negative samples and also mitigates the shortcomings of YOLO and SSD.
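The weighting idea can be sketched in NumPy for a binary (target versus background) case. The first function is cross entropy with a class weight, as described above. The second adds the modulating factor $(1 - p_t)^\gamma$ that focal loss uses to shrink the loss of examples the network already classifies well. The values $\alpha = 0.25$ and $\gamma = 2$ are commonly quoted defaults and are assumptions here, not taken from the text.

```python
import numpy as np

def weighted_cross_entropy(p, y, alpha=0.25):
    """Binary cross entropy with weight alpha on positives and 1 - alpha on negatives."""
    p = np.clip(p, 1e-7, 1 - 1e-7)               # avoid log(0)
    p_t = np.where(y == 1, p, 1 - p)             # probability given to the true class
    alpha_t = np.where(y == 1, alpha, 1 - alpha)
    return -alpha_t * np.log(p_t)

def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Weighted cross entropy scaled by (1 - p_t) ** gamma."""
    p = np.clip(p, 1e-7, 1 - 1e-7)
    p_t = np.where(y == 1, p, 1 - p)
    alpha_t = np.where(y == 1, alpha, 1 - alpha)
    return -alpha_t * (1 - p_t) ** gamma * np.log(p_t)

p = np.array([0.9, 0.1])    # predicted probability of "target"
y = np.array([1, 1])        # both samples are targets: one easy, one hard
print(weighted_cross_entropy(p, y))
print(focal_loss(p, y))
```

For the easy sample the focal term scales the loss by $(1 - 0.9)^2 = 0.01$, while the hard sample keeps most of its loss, so the many easy background samples no longer dominate training. With `gamma=0` the focal loss reduces to the weighted cross entropy.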
