当前位置:网站首页>Arrangement of statistical learning knowledge points gradient descent, least square method, Newton method

Arrangement of statistical learning knowledge points gradient descent, least square method, Newton method

2022-06-12 07:30:00 【Turned_ MZ】

Collation of statistical learning knowledge points —— gradient descent 、 Least square method 、 Newton method

gradient descent

gradient descent (gradient descent) It is widely used in machine learning , Whether in linear regression or Logistic In the regression , Its main purpose is to find the minimum value of the objective function through iteration , Or converge to the minimum .

Gradient descent is an intuitive explanation , It can be understood from the process of going down the mountain , In the process of going down the mountain , Choose the steepest position to go down the mountain each time , You can reach the lowest point as soon as possible ( Corresponding loss function , That is, minimizing the loss function )

The mathematical formula :

Θ1=Θ0+α▽J(Θ)→evaluatedatΘ0

The meaning of this formula is :J It's about Θ A function of , Our current position is Θ0 spot , From this point to J The minimum value of , At the bottom of the mountain . First of all, let's determine the direction of our progress , That's the reverse of the gradient , And then take a long step , That is to say α, After this step , That's it Θ1 This point .

The algorithm process :

Algorithm tuning :

1. The choice of step size : Step length too long , It can cause the iteration to be too fast , You may even miss the optimal solution . The step size is too small , Iteration speed is too slow , The optimal solution cannot be reached for a long time .

2. Parameter initial value selection : It can be seen from the above figure , Gradient descent does not necessarily result in a global optimal solution , It may be a local optimal solution , So the choice of initial value will affect the final result , If the loss function is convex , Then it must be the optimal solution , Otherwise, it is necessary to run the algorithm with different initial values many times , Choose an algorithm that minimizes the loss function .

3. normalization : Because the value range of different features of the image is different , It can lead to slow iterations , In order to reduce the influence of feature value , Feature data can be normalized , That is to say, it turns into expectation 1, The standard deviation is 1 The distribution of .

Gradient descent method family :

Batch gradient descent method (BGD): The specific method is to use all samples to update the parameters ;

Random gradient descent method (SGD): Just take one sample and find the gradient ;

Small batch gradient descent method (MBGD): yes BGD and SGD A compromise of , That is, randomly select some samples to update ;

Least square method

The formula is as follows :

Observations are our multiple sets of samples , The theoretical value is our hypothetical fitting function , The objective function is also known as the loss function in machine learning .

The least square method is to find a set of ![]() bring

bring  ( The sum of squared residuals ) Minimum , namely , seek

( The sum of squared residuals ) Minimum , namely , seek

The solution of least square method :

Newton method

The basic idea of Newton's method is : In the vicinity of the estimated value of the existing minimum point f(x) Second order Taylor expansion , And then find the next estimate of the minimum . set up xk Is the estimated value of the current minimum point , be :

If you want to find the extreme value , Make ![]() , namely :

, namely :![]() , And then get :

, And then get :

The advantages of Newton's method :

Newton method is an algorithm with quadratic convergence , For non quadratic functions , If the quadratic form of the function is strong , Or have entered the field of minimalism , Its convergence speed is very fast .

The shortcomings of Newton's method :

There is no iteration step in Newton's method , Quadratic fixed length iteration , So for nonquadratic objective functions , Sometimes it makes the value of the function rise , This shows that Newton's method can not guarantee the stable decline of function value .

Gradient descent method 、 Least square method 、 The difference between Newton's methods

The same thing : Are unconstrained optimization algorithms

Difference :

The gradient descent method is relative to the least square method : Gradient descent method needs to choose step size , And the least squares method doesn't need . The gradient descent method is an iterative solution , The least square method is to calculate the analytical solution . If the sample size is not large , And there is an analytic solution , The least square method has an advantage over the gradient descent method , It's very fast . But if the sample size is large , With the least square method, we need to find a super large inverse matrix , At this point, it is very difficult or slow to solve the analytical solution , The iterative gradient descent method has advantages .

Gradient descent method and Newton method / Compared with the quasi Newton method , Both are solved iteratively , But the gradient descent method is a gradient solution , And Newton's method / Quasi Newton method is to use the inverse matrix or pseudo inverse matrix of the second order Hessian matrix to solve . Relatively speaking , Using Newton's method / The quasi Newton method converges faster . But each iteration takes longer than the gradient descent method .

Reference article :

Gradient descent method :https://www.cnblogs.com/pinard/p/5970503.html

Notes on Newton method and quasi Newton method :https://blog.csdn.net/itplus/article/details/21896453

How to understand the least square method :https://blog.csdn.net/ccnt_2012/article/details/81127117

Least square method :https://zhuanlan.zhihu.com/p/38128785

边栏推荐

- R语言使用epiDisplay包的summ函数计算dataframe中指定变量在不同分组变量下的描述性统计汇总信息并可视化有序点图、使用dot.col参数设置不同分组数据点的颜色

- Unity uses shaders to highlight the edges of ugu I pictures

- Planning and design of 1000 person medium-sized campus / enterprise network based on ENSP and firewall (with all configuration commands)

- QT realization tray

- RT thread studio learning (x) mpu9250

- Expansion of D @nogc

- linux下怎么停止mysql服务

- Modelants II

- Summary of machine learning + pattern recognition learning (VI) -- feature selection and feature extraction

- Complete set of typescript Basics

猜你喜欢

Detailed explanation of multi coordinate transformation in ROS (example + code)

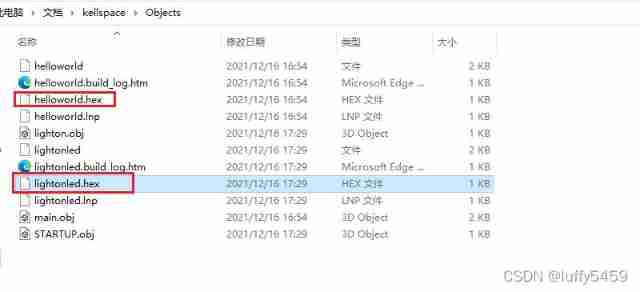

Keil installation of C language development tool for 51 single chip microcomputer

Detailed explanation of addressing mode in 8086

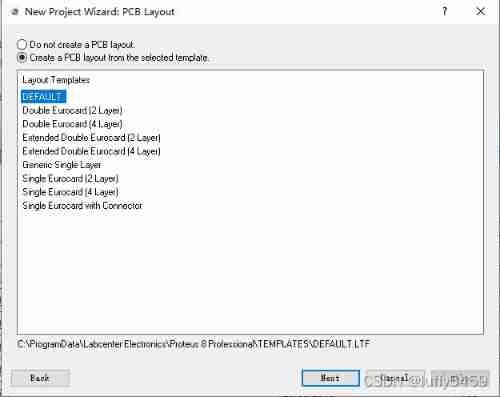

LED lighting experiment with simulation software proteus

私有协议的解密游戏:从秘文到明文

Question bank and answers of special operation certificate examination for safety management personnel of hazardous chemical business units in 2022

Kali and programming: how to quickly build the OWASP website security test range?

sql——课程实验考查

2022年危险化学品经营单位安全管理人员特种作业证考试题库及答案

The function of C language string Terminator

随机推荐

GD32F4(5):GD32F450时钟配置为200M过程分析

Exploring shared representations for personalized federated learning paper notes + code interpretation

Gradient epic memory for continuous learning

How to stop MySQL service under Linux

Model deployment learning notes (I)

LED lighting experiment with simulation software proteus

C language sizeof strlen

R语言使用epiDisplay包的summ函数计算dataframe中指定变量在不同分组变量下的描述性统计汇总信息并可视化有序点图、使用dot.col参数设置不同分组数据点的颜色

BI技巧丨当月期初

Missing getting in online continuous learning with neuron calibration thesis analysis + code reading

Complete set of typescript Basics

Circular linked list and bidirectional linked list - practice after class

5 lines of code identify various verification codes

Thoroughly understand the "rotation matrix / Euler angle / quaternion" and let you experience the beauty of three-dimensional rotation

VS2019 MFC IP Address Control 控件继承CIPAddressCtrl类重绘

Kotlin plug-ins kotlin Android extensions

Expansion of D @nogc

RT thread studio learning summary

xshell安装

Modelarts培训任务1