当前位置:网站首页>Book classification based on Naive Bayes

Book classification based on Naive Bayes

2022-06-12 06:06:00 【Singing under the hedge】

List of articles

Naive Bayes realizes book classification

Naive Bayes is the generating method , Find the feature output directly Y And characteristics X The joint distribution of P(X,Y)P(X,Y), And then use P(Y|X)=P(X,Y)/P(X)P(Y|X)=P(X,Y)/P(X) obtain .

One 、 Data sets

Dataset Links :https://wss1.cn/f/73l1yh2yjny

Data format description :

X.Y

X Represents a Book

Y It means different chapters of the book

Purpose : Judge which book the text comes from

Divide training and test sets by yourself

Two 、 Implementation method

1. Data preprocessing

(1) The original data will be 80% As a training set ,20% As test set

(2) Remove all numbers from the data of training set and test set 、 Characters and extra spaces , And build a dictionary , Take the book number corresponding to the paragraph as the index , Paragraph contents as values .

2. Naive Bayesian implementation

(1) Create a vocabulary

(2) Calculate the prior probability

(3) Calculate the conditional probability

Two 、 Code

naive_bayes_text_classifier.py

import numpy as np

import re

def loadDataSet(filepath):

f = open(filepath, "r").readlines()

raw_data = []

print(" Remove all symbols and numbers ...")

for i in range(0, len(f), 2):

temp = dict()

temp["class"] = int(f[i].strip().split(".")[0])

# Remove all symbols and numbers

mid = re.sub("[1-9,!?,.:\"();&\t]", " ", f[i + 1].strip(), count=0, flags=0)

# Remove extra space

temp["abstract"] = re.sub(" +", " ", mid, count=0, flags=0).strip()

if temp["abstract"] != "":

raw_data.append(temp)

postingList=[i["abstract"].split() for i in raw_data]

classVec = [i["class"] for i in raw_data] #1 is abusive, 0 not

return postingList, classVec

# Uniqueness of elements in a set structure , Create a vocabulary that contains all the words .

def createVocabList(dataSet):

vocabSet = set([]) # Create an empty list

for document in dataSet:

vocabSet = vocabSet | set(document) # Merge two sets

return list(vocabSet)

def setOfWords2Vec(vocabList, inputSet):

returnVec = [0]*len(vocabList)

for word in inputSet:

if word in vocabList:

returnVec[vocabList.index(word)] = 1

else:

continue

print("the word: %s is not in my Vocabulary!" % word)

return returnVec

# Training , Here's the calculation : Conditional probability and Prior probability

def trainNB0(trainMatrix, trainCategory):

numTrainDocs = len(trainMatrix) # Calculate the total number of samples

# Calculate the vectorized length of the sample , This is the length of the dictionary .

numWords = len(trainMatrix[0])

# Calculate the prior probability

p0 = np.sum(trainCategory == 0) / float(numTrainDocs)

p1 = np.sum(trainCategory == 1) / float(numTrainDocs)

p2 = np.sum(trainCategory == 2) / float(numTrainDocs)

p3 = np.sum(trainCategory == 3) / float(numTrainDocs)

p4 = np.sum(trainCategory == 4) / float(numTrainDocs)

p5 = np.sum(trainCategory == 5) / float(numTrainDocs)

p6 = np.sum(trainCategory == 6) / float(numTrainDocs)

#print(p0,p1,p2,p3,p4,p5,p6)

# To initialize , Used for vectorized samples Add up , Why initialize 1 Not all of them 0, The probability of prevention is 0.

p0Num = np.ones(numWords)

p1Num = np.ones(numWords)

p2Num = np.ones(numWords)

p3Num = np.ones(numWords)

p4Num = np.ones(numWords)

p5Num = np.ones(numWords)

p6Num = np.ones(numWords) #change to ones()

# The denominator of the initial conditional probability is 2, Prevent emergence 0, An incalculable situation .

p0Denom = 2.0

p1Denom = 2.0

p2Denom = 2.0

p3Denom = 2.0

p4Denom = 2.0

p5Denom = 2.0

p6Denom = 2.0 #change to 2.0

# Traverse all vectorized samples , And each vectorized length is equal , Equal to the length of the dictionary .

for i in range(numTrainDocs):

# The statistic tag is 1 The sample of : Accumulation of vectorized samples , Sample 1 Sum of total numbers , Finally, divide by log It's conditional probability .

if trainCategory[i] == 0:

p0Num += trainMatrix[i]

p0Denom += sum(trainMatrix[i])

# The statistic tag is 0 The sample of : The vectorized samples are accumulated , Sample 1 Sum of total numbers , Finally, divide by log It's conditional probability .

elif trainCategory[i] == 1:

p1Num += trainMatrix[i]

p1Denom += sum(trainMatrix[i])

elif trainCategory[i] == 2:

p2Num += trainMatrix[i]

p2Denom += sum(trainMatrix[i])

elif trainCategory[i] == 3:

p3Num += trainMatrix[i]

p3Denom += sum(trainMatrix[i])

elif trainCategory[i] == 4:

p4Num += trainMatrix[i]

p4Denom += sum(trainMatrix[i])

elif trainCategory[i] == 5:

p5Num += trainMatrix[i]

p5Denom += sum(trainMatrix[i])

elif trainCategory[i] == 6:

p6Num += trainMatrix[i]

p6Denom += sum(trainMatrix[i])

# Find the conditional probability .

p0Vect = np.log(p0Num / p0Denom)

p1Vect = np.log(p1Num / p1Denom) # Change it to log() Prevent emergence 0

p2Vect = np.log(p2Num / p2Denom)

p3Vect = np.log(p3Num / p3Denom)

p4Vect = np.log(p4Num / p4Denom)

p5Vect = np.log(p5Num / p5Denom)

p6Vect = np.log(p6Num / p6Denom)

# Return conditional probability and Prior probability

return p0Vect, p1Vect, p2Vect, p3Vect, p4Vect, p5Vect, p6Vect, p0,p1,p2,p3,p4,p5,p6

def classifyNB(vec2Classify, p0Vec, p1Vec,p2Vec, p3Vec,p4Vec, p5Vec,p6Vec, p0,p1,p2,p3,p4,p5,p6):

# Vectorized samples , respectively, And Multiply the conditional probabilities of each category Plus a priori probability, take log, Then compare the sizes , Output category .

p0 = sum(vec2Classify * p0Vec) + np.log(p0)

p1 = sum(vec2Classify * p1Vec) + np.log(p1) #element-wise mult

p2 = sum(vec2Classify * p2Vec) + np.log(p2)

p3 = sum(vec2Classify * p3Vec) + np.log(p3)

p4 = sum(vec2Classify * p4Vec) + np.log(p4)

p5 = sum(vec2Classify * p5Vec) + np.log(p5)

p6 = sum(vec2Classify * p6Vec) + np.log(p6)

res=[p0,p1,p2,p3,p4,p5,p6]

return res.index(max(res))

if __name__ == '__main__':

# Generate training samples and label

print(" Get training data ...")

listOPosts, listClasses = loadDataSet("bys_data_train.txt")

print(" Training data set size :",len(listOPosts))

# Creating a dictionary

print(" Build a dictionary ...")

myVocabList = createVocabList(listOPosts)

# Used to save the sample steering volume

trainMat=[]

# Traverse every sample , After steering volume , Save to list .

for postinDoc in listOPosts:

trainMat.append(setOfWords2Vec(myVocabList, postinDoc))

# Calculation Conditional probability and Prior probability

print(" Training ...")

p0V,p1V,p2V,p3V,p4V,p5V,p6V,p0,p1,p2,p3,p4,p5,p6 = trainNB0(np.array(trainMat), np.array(listClasses))

# Given the test sample To test

print(" Get test data ...")

listOPosts, listClasses = loadDataSet("bys_data_test.txt")

print(" Test data set size :", len(listOPosts))

f=open("output.txt","w")

total=0

true=0

for i,j in zip(listOPosts,listClasses):

testEntry = i

thisDoc = np.array(setOfWords2Vec(myVocabList, testEntry))

result=classifyNB(thisDoc, p0V,p1V,p2V,p3V,p4V,p5V,p6V,p0,p1,p2,p3,p4,p5,p6)

print(" ".join(testEntry))

print(' The classification result is : ', result,' The answer is : ',j)

f.write(" ".join(testEntry)+"\n")

f.write(' The classification result is : '+ str(result)+' The answer is : '+str(j)+ "\n")

total+=1

if result==j:

true+=1

print("total acc: ",true/total)

f.write("total acc: "+str(true/total))

f.close()

preprocess.py

from sklearn.model_selection import train_test_split

f=open("AsianReligionsData .txt","r",encoding='gb18030',errors="ignore").readlines()

raw_data=[]

for i in range(0,len(f),2):

raw_data.append(f[i]+f[i+1])

train, test = train_test_split(raw_data, train_size=0.8, test_size=0.2,random_state=42)

print(len(train))

print(len(test))

f=open("bys_data_train.txt","w")

for i in train:

f.write(i)

f.close()

f=open("bys_data_test.txt","w")

for i in test:

f.write(i)

f.close()

experimental result

(1) The predicted results are compared with the real results

(2) Accuracy calculation

边栏推荐

- (UE4 4.27) customize primitivecomponent

- Glossary of Chinese and English terms for pressure sensors

- Project progress on February 28, 2022

- 2D human pose estimation for pose estimation - pifpaf:composite fields for human pose estimation

- Database Experiment 2: data update

- Nrf52832 services et fonctionnalités personnalisés

- dlib 人脸检测

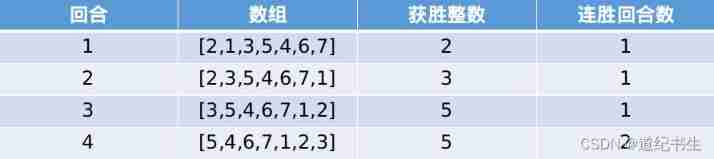

- Leetcode-1535. Find the winner of the array game

- Unity custom translucent surface material shader

- Leetcode sword finger offer II 033 Modified phrase

猜你喜欢

Three years of sharpening a sword: insight into the R & D efficiency of ant financial services

Review notes of naturallanguageprocessing based on deep learning

摄像头拍摄运动物体,产生运动模糊/拖影的原因分析

姿态估计之2D人体姿态估计 - PifPaf:Composite Fields for Human Pose Estimation

关于 Sensor flicker/banding现象的解释

Understanding of distributed transactions

Leetcode-1535. Find the winner of the array game

Login authentication filter

Project and build Publishing

Annotation configuration of filter

随机推荐

Poisson disk sampling for procedural placement

Leetcode-1705. Maximum number of apples to eat

User login (medium)

交叉编译libev

Glossary of Chinese and English terms for pressure sensors

Guns framework multi data source configuration without modifying the configuration file

China Aquatic Fitness equipment market trend report, technical innovation and market forecast

Redis队列

肝了一個月的 DDD,一文帶你掌握

dlib 人脸检测

468. verifying the IP address

Leetcode sword finger offer II 033 Modified phrase

(UE4 4.27) customize globalshader

Unity custom translucent surface material shader

分段贝塞尔曲线

软件项目架构简单总结

相机图像质量概述

项目开发流程简单介绍

IO to IO multiplexing from traditional network

[untitled]