当前位置:网站首页>HMM hidden Markov model and code implementation

HMM hidden Markov model and code implementation

2022-07-04 19:44:00 【Lyttonkeepgoing】

First of all Chinese word segmentation At this stage, there are generally three schools of word segmentation

1. rule-based : Forward and backward maximum matching

2. Based on Statistics :HMM, CRF

3. Based on deep learning :Bilstm+crf

So what we want to talk about today is one of them HMM hidden Markov model

Again, why do we need word segmentation ?

1. Better understand semantics ( It can improve the performance of the model )

2. For more important tasks Such as ( Named entity recognition 、 Sentiment analysis 、 Text classification 、 Semantic recognition and so on )

3. The needs of application scenarios ( TaoBao 、 Baidu ) The search algorithm has very high requirements for word segmentation

But Not all Chinese tasks require word segmentation ! for instance Bert (NSP)

Then one point that cannot be bypassed is corpus

Want to have a good word segmentation effect Then we must have a high-quality corpus ( High quality corpora are usually annotated manually )

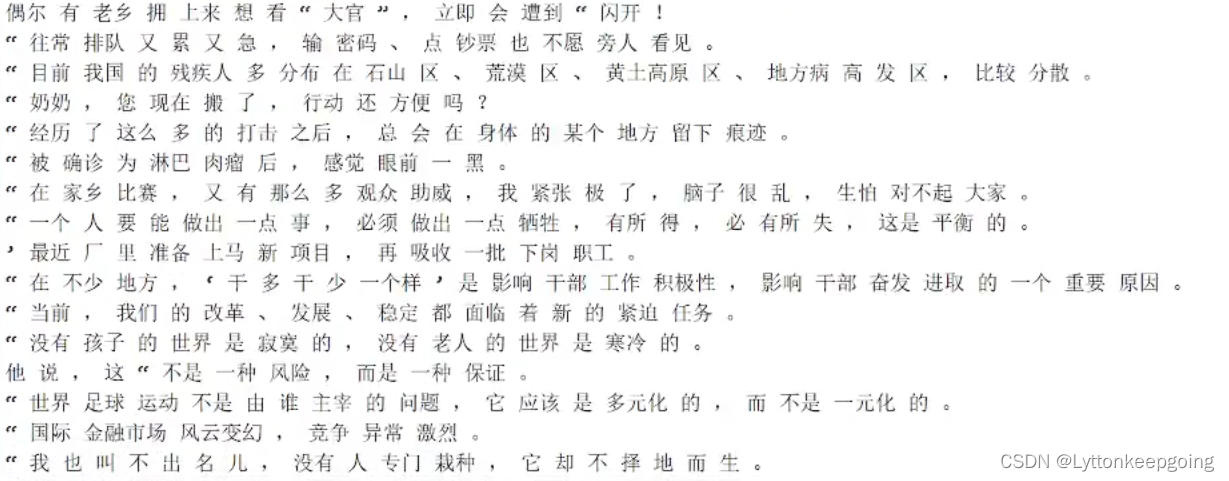

Here I intercepted a small corpus

Here I intercepted a small corpus

We will make every line It is regarded as an article Each article is separated by a space The corpus here is based on word segmentation It is used to help us segment words There are also part of speech tagging corpora Emotional analysis corpus and so on

Then we will talk about the identification of our word segmentation

Every word has a logo ( We can also call it hidden state ) We can get all the logos according to our hidden state

B: Words begin

M: Between words

E: End of word

S: In isolation

So what is the ultimate goal of our Chinese word segmentation ?

Just to get the state !

for instance : I am writing a blog ! So the corresponding BMES The sign is SBEBMES

Then we can segment words according to the continuous state That is to say ‘E’ 'S' Just output a space later Then the word segmentation result we output is I [ Space ] is [ Space ] Blogging [ Space ]![ Space ]

This is the underlying logic of Chinese word segmentation that we usually talk about !

How do we go about the normal word segmentation process ?

First of all, according to our corpus has been divided into words Get the status of each word That's what happened BMES

Then according to the state of each word To count our three matrices ( initial , Transfer , Emission matrix )

After counting the three matrices We can easily get BMES The sequence of components And then it was ES Output space after Our participle is over

Then we HMM What is the real training and prediction process ?

First of all, let's talk about the training process ( In fact, it is the process of solving the above three matrices ) The idea of the same depth learning of these three matrices can be understood as parameters That is to say W and B

Then according to the three matrices we solve When we come across a new sentence We can calculate each word according to these three matrices BMES What's the probability of ( It can also be said to be the probability of each path ) Then we are solving the optimal path according to Viterbi Algorithm That is, we have the greatest probability BMES Sequence distribution

Look at the picture

There's another problem Viterbi algorithm and HMM In fact, it is not strongly related Just if HMM Independent of Viterbi algorithm Then its calculation will become much more complicated

So here's the point How to calculate the initial matrix transition matrix And emission matrix ?

First of all, let's say The initial matrix The function of the initial matrix is : Count the status of the first word of each article

Be careful At the beginning, we counted the frequency That is to say BMES Frequency of Later, it is transformed into probability

Then we can get a table according to the status of the first word of each article And then from our frequency to probability is what our initial matrix does

For more details Let's take an example

We use it 【 today The weather really Pretty good .】 【 Spicy fat cattle delicious !】 【 I like eat delicious Of !】 These three sentences are our corpus

Corresponding BMES The sequence of is 【BEBESBES】 【BMMEBES】 【SBESBESS】 These three sentences are our corpus Then the initial matrix we get is as shown in the figure below

And then our transition matrix :( Is the probability of the current state to the next state )

Here you can explain it in a popular way Namely The current state of this word is B So what is the state of the next word ? BMES Which of them ? Then we can make a matrix

From this matrix we can see B Followed by B There are 0 individual B Followed by M There are 1 individual B Followed by S There are 0 individual B Followed by E There are 6 individual By analogy Then we can calculate the number of each transition state Get the probability ( Be careful We are looking sideways )

The next step is Emission matrix :( Statistics under a certain state , The number of times all words appear ( probability ))

Namely BMES Which words are B Which words are E MS Traverse each word in the corpus in turn

The resulting matrix is below It's actually a double dictionary

So after getting these three matrices Our training part is finished

Next is the prediction process :( Give me a new sentence According to our three matrices obtain BMES Sequence )

So for convenience Let's also give a simple example ![]() Example 【 It's a nice day today 】==》【BESBEBE】

Example 【 It's a nice day today 】==》【BESBEBE】

This sentence is seven words Each word has four states Then the total number of his routes is 4^7 We have to calculate the probability of each route

First Come up and find the initial matrix And then get the first one BS The probabilities are 0.667 and 0.333 So if we go B At the same time His launch matrix also has a probability Namely B The probability of today From the above figure, we can know that 0.142 Then from now on, the next word is transferred Then we will inquire transition matrix hear B Go to E The probability of transfer is 0.857 At the same time, we also need to check the launch matrix E Is the probability of days Then by analogy, we can find the probability of all paths Namely 4^7 A probability

So how can we quickly get our best path Then we need to use our viterbi algorithm

The core idea of Viterbi algorithm is to delete those paths with low probability while calculating

Calculate from the initial matrix assignment Pictured above To reach the second B That is, if the sky is B Then there will be four routes to the second B Then there must be an optimal path among the four paths And Find the optimal path and delete other non optimal paths Similarly, reach the second MSE There will be an optimal path The core idea of Viterbi algorithm is If the current path is the optimal path Then the path to the previous node must also be the optimal path So after we get these four optimal paths You can't find out which path is the best Because they are only locally optimal Then there are still four paths to reach the last word So these four paths We can figure out who is the real global optimal

So in summary According to the above method There will only be 4 A path stay 4 Of the paths Choose the best Then we can get the state sequence The participle ends

Finally Namely HMM Code implementation of ( The notes are very clear I won't explain it in detail here )

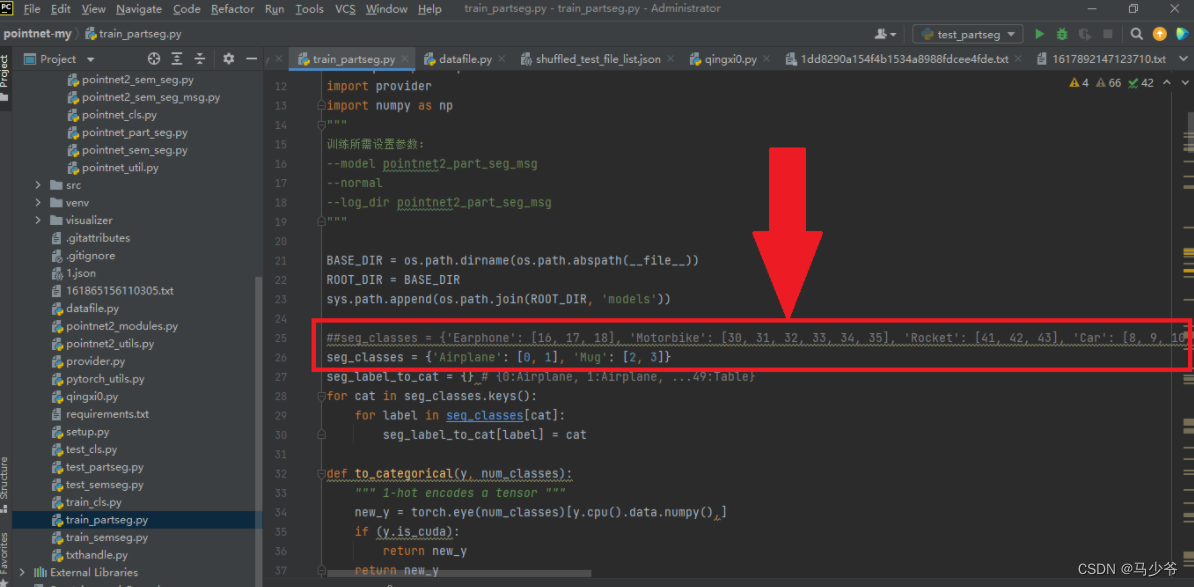

Training set distribution First deal with the training set That is to say BMES Generate the status file from the above original corpus It's the following all_train_state.txt

The following two functions are used to generate this state file

import pickle

from tqdm import tqdm

import numpy as np

import os

def make_label(text_str): # From words to label The transformation of Such as : today --》BE Spicy fat cattle --》BMME

text_len = len(text_str)

if text_len == 1:

return 'S'

return 'B' + 'M' * (text_len - 2) + 'E' # Except that the beginning and the end are BE The rest are M

def text_to_state(file='all_train_text.txt'): # Transform the original corpus into the corresponding state file

if os.path.exists('all_train_state.txt'): # If the file exists Just quit

return

all_data = open(file, 'r', encoding='utf-8').read().split('\n')

# Open the file and slice it to all_data in all_data It's a list

with open('all_train_state.txt', 'w', encoding='utf-8') as f: # Open the written file

for d_index, data in tqdm(enumerate(all_data)): # Traverse line by line

if data: # If data Not empty

state_ = ""

for w in data.split(" "): # The current article is segmented by spaces w Is a word in the article

if w : # If w Not empty

state_ = state_ + make_label(w) + " " # Make a single word label

if d_index != len(all_data) - 1: # Leave the last line blank '\n' Add... To the rest

state_ = state_.strip() + '\n' #

f.write(state_)Then we define HMM class The key is the three matrices Then use Viterbi algorithm to predict

class HMM:

def __init__(self, file_text = 'all_train_text.txt', file_state = 'all_train_state.txt'):

self.all_states = open(file_state, 'r', encoding='utf-8').read().split('\n') # Get all status by line

self.all_texts = open(file_text, 'r', encoding='utf-8').read().split('\n') # Get all text by line

self.states_to_index = {'B': 0, 'M': 1, 'S':2, 'E':3} # Give each state an index , Later, you can get the index according to the status

self.index_to_states = ["B", "M", "S", "E"] # Get the corresponding status according to the index

self.len_states = len(self.states_to_index) # State length : Here is 4 Also have bioes

self.init_matrix = np.zero((self.len_states)) # The initial matrix 1 * 4 Corresponding BMES

self.transfer_matrix = np.zero((self.len_states, self.len_states)) # Transition state matrix 4*4

self.emit_matrix = {"B":{"total": 0}, "M":{"total":0}, "S":{"total":0}, "E":{"total":0}}

# Emission matrix The use of 2 Level dictionary Here we initialize a total key Store the total number of occurrences of the current state Prepare for the following normalization

def cal_init_matrix(self, state): # Calculate the initial matrix

self.init_matrix[self.states_to_index[states[0]]] += 1 # BMSE Four kinds of state The corresponding state appears once plus one

def cal_transfer_matrix(self, states): # Calculate the transfer matrix

sta_join = "".join[states] # State transition from the current state to the next state From sta1 To sta2

sta1 = sta_join[:-1]

sta2 = sta_join[1:]

for s1, s2 in zip(sta1, sta2): # At the same time through s1, s2

self.trainsfer_matrix[self.states_to_index[s1], self.states_to_index[s2]] += 1

def cal_emit_matrix(self, words, states): # Calculate the emission matrix

for word , state in zip("".join(words), "".join(states)): # The first words and states Put them together and go through Because there's a space in the middle

self.emit_matrix[state][word] = self.emit_matrix[state].get(word, 0) + 1

self.emit_matrix[state]["total"] += 1 # Note that there is an additional total key Store the total number of occurrences of the current state For later normalization, use

def normalize(self):

self.init_matrix = self.init_matrix/np.sum(self.init_matrix)

self.transfer_matrix = self.transfer_matrix/np.sum(self.trainsfer_matrix, axis = 1 , keepdims = True)

self.emit_matrix = {state:{word:t/word_times["total"]*100 for word, t in word_times.items() if word != "total"} for state, word_times in self.emit_matrix.items()}

def train(self):

if os.path.exists("three_matrix.pkl"): # If parameters already exist No more training

self.init_matrix, self.transfer_matrix, self.emit_matrix = pickle.load(open("three_matrix.pkl", "rb"))

return

for words, states in tqdm(zip(self.all_texts, self.all_states)): # Read the file by line Call the solving function of the three matrices

words = words.split(" ") # In the document Are divided according to the space

states = states.split(" ")

self.cal_init_matrix(states[0])

self.cal_transfer_matrix(states)

self.cal_emit_matrix(words, states)

self.normalize() # After the matrix is solved, it is normalized

pickle.dump([self.init_matrix, self.transfer_matrix, self.emit_matrix], open("three_matrix.pkl", "wb")) # Save parameters

def viterbi_t(text, hmm):

states = hmm.index_to_states

emit_p = hmm.emit_matrix

trans_p = hmm.transfer_matrix

states_p = hmm.init_matrix

V = [{}]

path = {}

for y in states:

V[0][y] = start_p[hmm.states_to_index[y]] * emit_p[y].get(text[0], 0)

path[y] = [y]

for t in range(1, len(text)):

V.append({})

newpath = {}

neverSeen = text[t] not in emit_p['S'].keys() and \

text[t] not in emit_p['M'].keys() and \

text[t] not in emit_p['E'].keys() and \

text[t] not in emit_p['B'].keys()

for y in states:

emitP = emit_p[y].get(text[t], 0) if not neverSeen else 1.0 # Set to unknown words and form words alone

(prob, state) = max([(V[t-1][y0] * trans_p[hmm.states_to_index[y0], hmm.states_to_index[y]] * emitP, y0) for y0 in states if V[t-1]])

V[t][y] = prob

newpath[y] = path[states] + [y]

path = newapth

(prob. state) = max([(V[len(text)-1][y], y ) for y in state])

result = ""

for t, s in zip(text, path[state]):

result += t

if s == "S" or s == "E" :

result += " "

return result

if __name__ == "__main__":

text_to_state()

text = " Enter the sentence to be segmented "

hmm = HMM()

hmm.train()

result = viterbi_t(text, hmm)

print(result)

边栏推荐

- 欧拉函数

- Abc229 summary (connected component count of the longest continuous character graph in the interval)

- BCG 使用之CBCGPTabWnd控件(相当于MFC TabControl)

- Find the nth power of 2

- Personal thoughts on Architecture Design (this article will be revised and updated continuously later)

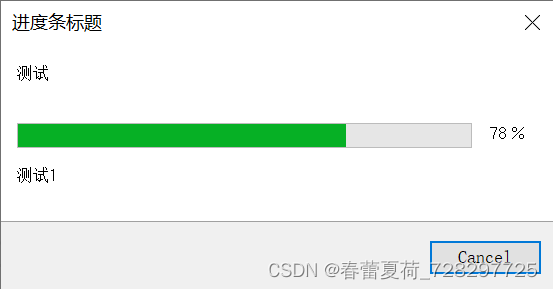

- BCG 使用之CBCGPProgressDlgCtrl進度條使用

- Comment utiliser async awati asynchrone Task Handling au lieu de backgroundworker?

- 1011 World Cup betting (20 points) (pat a)

- English语法_名词 - 使用

- 1011 World Cup Betting (20 分)(PAT甲级)

猜你喜欢

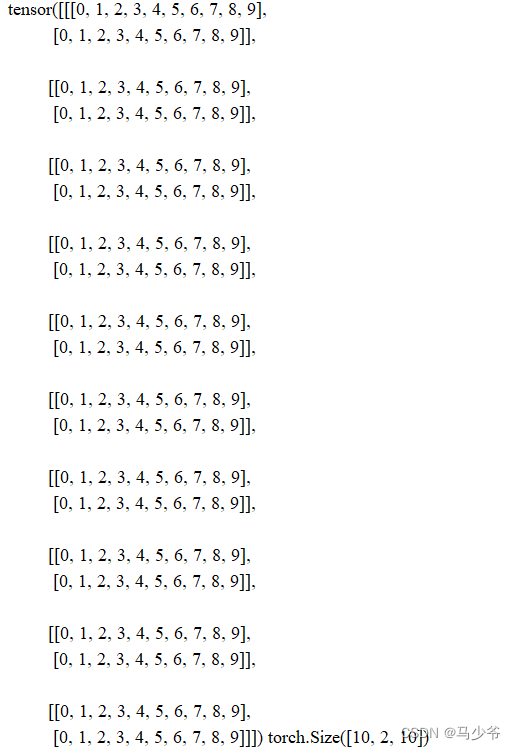

Pytorch学习(四)

How to use async Awati asynchronous task processing instead of backgroundworker?

Introduction to polyfit software

English grammar_ Noun - use

BCG 使用之CBCGPProgressDlg进度条使用

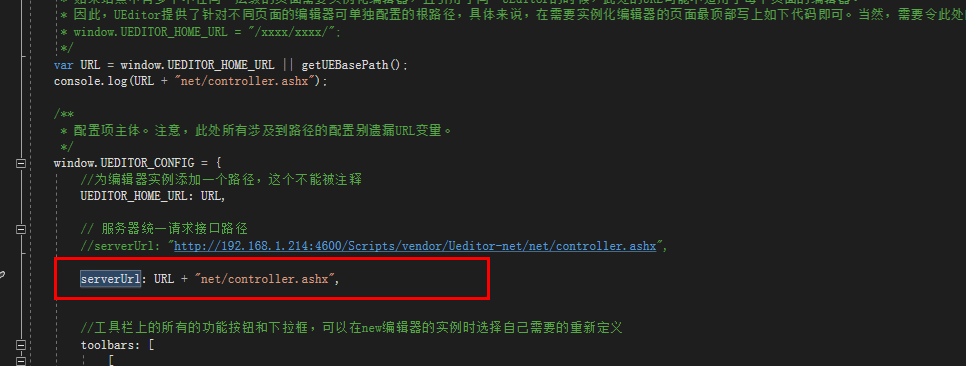

c# .net mvc 使用百度Ueditor富文本框上传文件(图片,视频等)

Pointnet/Pointnet++点云数据集处理并训练

What should we pay attention to when doing social media marketing? Here is the success secret of shopline sellers!

Euler function

Chrome开发工具:VMxxx文件是什么鬼

随机推荐

92. (cesium chapter) cesium building layering

HDU 6440 2018 Chinese college student program design network competition

Technologie de base de la programmation Shell IV

勾股数规律(任意三个数能够满足勾股定理需要满足的条件)

需求开发思考

做社交媒体营销应该注意些什么?Shopline卖家的成功秘笈在这里!

实战模拟│JWT 登录认证

Crawler (6) - Web page data parsing (2) | the use of beautifulsoup4 in Crawlers

HDU 1097 A hard puzzle

BCG 使用之CBCGPTabWnd控件(相当于MFC TabControl)

Shell programming core technology "I"

黑马程序员-软件测试--07阶段2-linux和数据库-09-24-linux命令学习步骤,通配符,绝对路径,相对路径,文件和目录常用命令,文件内容相关操作,查看日志文件,ping命令使用,

Lenovo explains in detail the green smart city digital twin platform for the first time to solve the difficulties of urban dual carbon upgrading

The explain statement in MySQL queries whether SQL is indexed, and several types in extra collate and summarize

HMM隐马尔可夫模型最详细讲解与代码实现

C# 使用StopWatch测量程序运行时间

HDU 1372 & POJ 2243 Knight Moves(广度优先搜索)

BCG 使用之CBCGPProgressDlgCtrl進度條使用

Jetpack compose tutorial

BCG 使用之CBCGPProgressDlg进度条使用