
PyTorch Learning (III)

2022-06-30 18:56:00 【Master Ma】

1、Z-score standardization

Strictly speaking, z-score is a standardization operation. Some sources call it normalization, which is incorrect. 1) Standardization transforms the data so that it has mean 0 and variance 1. 2) Normalization rescales the data values into the interval [0, 1]. The two are fundamentally different operations.

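1) Standard deviation formula (for $N$ values $x_1, \dots, x_N$ with mean $\mu$):

$$\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}(x_i - \mu)^2}$$

2) Z-score standardization formula:

$$z = \frac{x - \mu}{\sigma}$$

Note: z-score standardization only shifts and rescales the data to mean 0 and variance 1; it does not change the shape of the original distribution.

Normalization (min-max) formula:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$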
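The z-score and min-max formulas can be sketched in PyTorch as follows. This is a minimal illustration, assuming a 1-D float tensor and the population standard deviation (dividing by N); `z_score` and `min_max` are hypothetical helper names, not PyTorch functions.

```python
import torch

def z_score(x: torch.Tensor) -> torch.Tensor:
    # Subtract the mean, then divide by the population standard deviation (divide by N).
    mean = x.mean()
    std = ((x - mean) ** 2).mean().sqrt()
    return (x - mean) / std

def min_max(x: torch.Tensor) -> torch.Tensor:
    # Rescale linearly so the smallest value maps to 0 and the largest to 1.
    return (x - x.min()) / (x.max() - x.min())

x = torch.tensor([1.0, 2.0, 3.0, 4.0, 5.0])
z = z_score(x)
m = min_max(x)
print(z.mean(), ((z - z.mean()) ** 2).mean())  # approximately 0 and 1
print(m)                                       # values 0, 0.25, 0.5, 0.75, 1

# Both transforms are affine, so the relative spacing of the values is unchanged:
# min-max rescaling the z-scores gives the same result as rescaling x directly.
print(torch.allclose(min_max(z), m, atol=1e-6))
```

The last line illustrates the note that standardization does not change the shape of the distribution: it only shifts and scales the values.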

2、torch.Tensor.permute()

permute() reorders the dimensions of a tensor. For example:

import torch

data1 = torch.randn((3, 2, 1))

print('Type of data1:', type(data1))

print('Shape of data1:', data1.shape)

print('data1:', data1)

data2 = data1.permute(2, 1, 0)  # new dim 0 = old dim 2, new dim 1 = old dim 1, new dim 2 = old dim 0

print('Type of data2:', type(data2))

print('Shape of data2:', data2.shape)  # torch.Size([1, 2, 3])

print('data2:', data2)
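A common use of permute() is converting an image from height x width x channels (HWC) to channels x height x width (CHW). The sketch below assumes a random tensor stands in for a hypothetical 32x48 RGB image; it shows how the index order maps and that permute() returns a view rather than a copy.

```python
import torch

# Hypothetical image stored as height x width x channels (HWC).
img = torch.rand(32, 48, 3)

# permute(2, 0, 1): new dim 0 is old dim 2 (channels), then old dims 0 and 1.
chw = img.permute(2, 0, 1)
print(chw.shape)  # torch.Size([3, 32, 48])

# Element [c, h, w] of the result is element [h, w, c] of the input.
print(torch.equal(chw[1, 5, 7], img[5, 7, 1]))

# permute returns a view over the same storage, which is generally not contiguous.
print(chw.data_ptr() == img.data_ptr(), chw.is_contiguous())
```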

3、torch.matmul

In PyTorch, multiplication of two tensors comes in two kinds:

Element-wise multiplication of corresponding elements, implemented by the torch.mul function (or the * operator);

Matrix multiplication, implemented by the torch.matmul function.

torch.matmul() performs matrix multiplication on tensors, but it also supports broadcasting (the same mechanism NumPy uses), so it can multiply tensors with different numbers of dimensions. This is what distinguishes it from torch.bmm(), which only accepts two 3-D tensors with the same batch size.
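To make the distinction concrete, here is a small sketch comparing the two kinds of multiplication on the same pair of 2x2 integer matrices (the values are chosen arbitrarily for illustration):

```python
import torch

a = torch.tensor([[1, 2], [3, 4]])
b = torch.tensor([[5, 6], [7, 8]])

print(torch.mul(a, b))     # element-wise: [[5, 12], [21, 32]]
print(a * b)               # same as torch.mul
print(torch.matmul(a, b))  # matrix product: [[19, 22], [43, 50]]
print(a @ b)               # the @ operator performs matrix multiplication like matmul
```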

If both tensors are 1-D, the dot product of the two vectors is returned (as a 0-dimensional tensor):

import torch

x = torch.tensor([1,2])

y = torch.tensor([3,4])

print(x,y)

print(torch.matmul(x,y),torch.matmul(x,y).size())

If both tensors are 2-D, the matrix product of the two matrices is returned:

import torch

x = torch.tensor([[1,2],[3,4]])

y = torch.tensor([[5,6,7],[8,9,10]])

print(torch.matmul(x,y),torch.matmul(x,y).size())

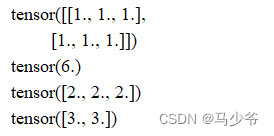

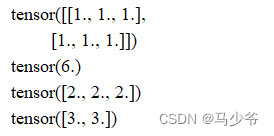
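The broadcasting behaviour mentioned earlier can be sketched as follows. The shapes are illustrative assumptions; integer-valued floats are used so that the comparison with torch.bmm() is exact.

```python
import torch

M = torch.tensor([[1., 2., 3.],
                  [4., 5., 6.]])          # shape (2, 3)

# 1-D @ 2-D: the vector is treated as a (1, 2) row, multiplied,
# and the added dimension is removed again.
v = torch.tensor([1., 2.])
print(torch.matmul(v, M))                 # values 9, 12, 15

# Batched @ 2-D: the (2, 3) matrix is broadcast over the batch dimension.
batch = torch.arange(40.).reshape(4, 5, 2)
out = torch.matmul(batch, M)
print(out.shape)                          # torch.Size([4, 5, 3])

# torch.bmm needs two 3-D tensors with the same batch size,
# so M has to be repeated explicitly for each batch entry.
out_bmm = torch.bmm(batch, M.unsqueeze(0).repeat(4, 1, 1))
print(torch.equal(out, out_bmm))
```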

4、torch.sum

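a = torch.ones((2, 3))

a1 = torch.sum(a)  # sum of all elements: 6

a2 = torch.sum(a, dim=0)  # sum over dim 0 (column sums), shape (3,): [2., 2., 2.]

a3 = torch.sum(a, dim=1)  # sum over dim 1 (row sums), shape (2,): [3., 3.]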

print(a)

print(a1)

print(a2)

print(a3)

If keepdim=True is passed, the reduced dimensions are kept with size 1 instead of being squeezed out:

a1 = torch.sum(a, dim=(0, 1), keepdim=True)  # shape (1, 1)

a2 = torch.sum(a, dim=(0, ), keepdim=True)  # shape (1, 3)

a3 = torch.sum(a, dim=(1, ), keepdim=True)  # shape (2, 1)
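keepdim=True is handy when the sum is used in a later broadcast. The following sketch (with arbitrary example values) normalizes each row of a matrix so that it sums to 1:

```python
import torch

a = torch.tensor([[1., 2., 3.],
                  [4., 5., 6.]])

row_sums = a.sum(dim=1, keepdim=True)  # shape (2, 1)
flat_sums = a.sum(dim=1)               # shape (2,)

# (2, 3) / (2, 1) broadcasts each row sum across its own row,
# so every row of the result sums to 1.
row_normalized = a / row_sums
print(row_sums.shape, flat_sums.shape)
print(row_normalized.sum(dim=1))
```

Dividing by `flat_sums` instead would try to broadcast a shape (2,) tensor against the last dimension of size 3 and raise an error.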

5、torch.Tensor.view()

In PyTorch, view() changes the shape of a tensor without copying its data, much like reshape() in NumPy (not resize(), which can change the number of elements). The new shape must contain the same number of elements, and the tensor's memory layout must be compatible with it. For example:

import torch

a=torch.Tensor([[[1,2,3],[4,5,6]]])

b=torch.Tensor([1,2,3,4,5,6])

print(a.view(1,6))

print(b.view(1,6))

a=torch.Tensor([[[1,2,3],[4,5,6]]])

print(a.view(-1))  # -1 lets PyTorch infer the size: a 1-D tensor of 6 elements

a=torch.Tensor([[[1,2,3],[4,5,6]]])

a=a.view(3,2)

print(a)

a=a.view(2,-1)  # -1 is inferred as 3, giving shape (2, 3)

print(a)
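Because view() never copies, the result shares memory with the original, and it fails on tensors whose memory layout is not compatible with the requested shape (for example after a transpose). The sketch below shows both points, plus the two usual workarounds, contiguous() and reshape():

```python
import torch

base = torch.arange(6).view(2, 3)
v = base.view(3, 2)           # a view: shares storage with base
v[0, 0] = 100
print(base[0, 0])             # tensor(100): the write is visible through base

t = base.t()                  # transposing gives a non-contiguous view
print(t.is_contiguous())      # False

view_failed = False
try:
    t.view(6)                 # t's strides cannot be expressed as a flat view
except RuntimeError as err:
    view_failed = True
    print("view() failed:", err)

# Either make a contiguous copy first, or use reshape(), which copies only when needed.
flat1 = t.contiguous().view(6)
flat2 = t.reshape(6)
print(flat1.tolist(), torch.equal(flat1, flat2))
```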

6、size, shape and len in NumPy

import numpy as np

X=np.array([[1,2,3,4],

[5,6,7,8],

[9,10,11,12]])

number=X.size # total number of elements in X: 12

X_row=np.size(X,0) # length of axis 0, i.e. the number of rows: 3

X_col=np.size(X,1) # length of axis 1, i.e. the number of columns: 4

print("number:",number)

print("X_row:",X_row)

print("X_col:",X_col)

import numpy as np

X=np.array([[1,2,3,4],

[5,6,7,8],

[9,10,11,12]])

X_dim=X.shape # the shape of the array as a tuple: (3, 4)

print("X_dim:",X_dim)

print(X.shape[0]) # number of rows

print(X.shape[1]) # number of columns

import numpy as np

X=np.array([[1,2,3,4],

[5,6,7,8],

[9,10,11,12]])

length=len(X) # length of the first axis (3), not the number of elements

print("length of X:",length)
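The difference between the three becomes clearer with more than two axes. This sketch uses a zero-filled 3-D array chosen only for its shape, and also prints ndim, the number of axes:

```python
import numpy as np

Y = np.zeros((2, 3, 4))  # a 3-D array

print(Y.size)         # 24: total number of elements (2 * 3 * 4)
print(Y.shape)        # (2, 3, 4): length of every axis
print(Y.ndim)         # 3: number of axes
print(len(Y))         # 2: length of the first axis only, same as Y.shape[0]
print(np.size(Y, 2))  # 4: length of axis 2
```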

7、PyTorch tensor operations: concatenation, splitting, indexing and transformation

7.1 Tensor concatenation

torch.cat(tensors, dim=0, out=None)

Function: concatenates the tensors along dimension dim

·tensors: a sequence of tensors

·dim: the dimension along which to concatenate

import torch

t = torch.ones((2,3))

t_0 = torch.cat([t,t], dim=0)  # concatenate along dim 0 (rows): shape (4, 3)

t_1 = torch.cat([t,t], dim=1)  # concatenate along dim 1 (columns): shape (2, 6)

print('t_0:{} shape:{}\nt_1:{} shape:{}'.format(t_0,t_0.shape,t_1,t_1.shape))
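torch.cat() does not require the inputs to have identical shapes: only the size along the concatenation dimension may differ, and all other dimensions must match. A minimal sketch with arbitrary shapes:

```python
import torch

a = torch.ones((2, 3))
b = torch.zeros((1, 3))

# Only the size along the concatenation dimension may differ.
rows = torch.cat([a, b], dim=0)
print(rows.shape)  # torch.Size([3, 3])

cat_failed = False
try:
    torch.cat([a, b], dim=1)  # dim 0 sizes differ (2 vs 1), so this raises
except RuntimeError as err:
    cat_failed = True
    print("cat() failed:", err)
```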

7.2 Tensor splitting

torch.chunk(input, chunks, dim=0)

Function: splits the tensor into roughly equal chunks along dimension dim

Return value: a tuple of tensors

Note: if the size is not evenly divisible, the last chunk is smaller than the others

·input: the tensor to split

·chunks: the number of chunks to split into

·dim: the dimension along which to split

t = torch.ones((2,5))

list_of_tensors = torch.chunk(t, dim=1, chunks=2)  # 5 columns into 2 chunks: shapes (2, 3) and (2, 2)

for idx, mat in enumerate(list_of_tensors):

    print('Chunk {}: {}, shape {}'.format(idx+1, mat, mat.shape))
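The chunk size is the size of the dimension divided by chunks, rounded up, so torch.chunk() can return fewer chunks than requested. A short sketch of both the uneven case and the "fewer chunks" case:

```python
import torch

# Chunk size is ceil(6 / 4) = 2, so only 3 chunks come back, not 4.
parts = torch.chunk(torch.arange(6), chunks=4)
print(len(parts), [p.tolist() for p in parts])

# 5 elements into 2 chunks: the first has ceil(5 / 2) = 3, the last gets the remaining 2.
parts2 = torch.chunk(torch.arange(5), chunks=2)
print([p.numel() for p in parts2])
```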

7.3 Tensor indexing

torch.index_select(input, dim, index, out=None)

Function: selects data along dimension dim according to index

Return value: a new tensor made of the selected entries, concatenated in the order given by index

·input: the tensor to index

·dim: the dimension to index along

·index: the indices of the entries to select (a 1-D integer tensor)

t = torch.randint(0,9,size=(3,3))

idx = torch.tensor([0,2], dtype=torch.long)  # index must be an integer (long) tensor, not float

t_select = torch.index_select(t, dim=0, index=idx)  # rows 0 and 2

t_select2 = torch.index_select(t, dim=1, index=idx)  # columns 0 and 2

print('{}\n{}\n{}'.format(t, t_select, t_select2))
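index_select() gives the same result as ordinary indexing with an integer tensor, and the result is a new tensor rather than a view. A sketch using a deterministic 3x3 tensor instead of random integers:

```python
import torch

t = torch.arange(9).view(3, 3)
idx = torch.tensor([0, 2])

rows = torch.index_select(t, dim=0, index=idx)
cols = torch.index_select(t, dim=1, index=idx)

# Plain indexing with the same index tensor gives the same result.
print(torch.equal(rows, t[idx]), torch.equal(cols, t[:, idx]))

# index_select copies the data: modifying the result leaves t unchanged.
rows[0, 0] = -1
print(t[0, 0])  # tensor(0)
```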

torch.masked_select(input, mask, out=None)

Function: selects the elements of input where mask is True

Return value: a 1-D tensor

·input: the tensor to index

·mask: a boolean tensor with the same shape as input (more generally, broadcastable to it)

t = torch.randint(0,9,size=(3,3))

# mask has the same shape as t: True where the element is >= 5, False where it is < 5

mask = t.ge(5)

t_select = torch.masked_select(t, mask)

print('t:\n{}\nmask:\n{}\nt_select:\n{}'.format(t,mask,t_select))
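Boolean indexing with `t[mask]` is equivalent to masked_select(), and the mask only needs to be broadcastable to the input. A sketch with fixed values so the selected elements are predictable:

```python
import torch

t = torch.tensor([[1, 6, 3],
                  [7, 2, 9]])
mask = t.ge(5)  # same as t >= 5

selected = torch.masked_select(t, mask)
print(selected)                         # 1-D, elements taken in row-major order
print(torch.equal(selected, t[mask]))   # boolean indexing gives the same result

# A single mask row is broadcast to both rows of t.
col_mask = torch.tensor([True, False, True])
col_selected = torch.masked_select(t, col_mask)
print(col_selected)
```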

7.4 Tensor transformation

torch.reshape(input, shape)

Function: changes the shape of a tensor

Note: when the tensor is contiguous in memory, the new tensor shares its data memory with input

·input: the tensor to reshape

·shape: the shape of the new tensor

t = torch.randperm(8)

t_reshape = torch.reshape(t, (-1,2,2))

print('t:\n{}\nt_reshape:\n{}'.format(t, t_reshape))

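print('t data pointer: {}'.format(t.data_ptr()))  # id(t.data) is unreliable: t.data creates a new Python object each time

print('t_reshape data pointer: {}'.format(t_reshape.data_ptr()))  # same pointer means shared memory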
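Sharing memory means a write through one tensor is visible through the other. For a non-contiguous input, reshape() has to copy instead. The sketch below uses torch.arange() rather than randperm() so the values are predictable; note that PyTorch's documentation advises not to rely on whether reshape() copies or returns a view.

```python
import torch

t = torch.arange(8)
r = torch.reshape(t, (-1, 2, 2))         # t is contiguous, so r is a view

print(t.data_ptr() == r.data_ptr())      # True: same underlying storage
t[0] = 100
print(r[0, 0, 0])                        # tensor(100): the write is visible through r

# A transposed tensor is not contiguous, so reshape() has to make a copy.
m = torch.arange(6).view(2, 3).t()
flat = torch.reshape(m, (-1,))
print(m.data_ptr() == flat.data_ptr())   # False: a new copy was made
```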

torch.transpose(input, dim0, dim1)

Function: swaps two dimensions of a tensor

·input: the tensor to transform

·dim0: the first dimension to swap

·dim1: the second dimension to swap

t = torch.rand((2,3,4))

t_transpose = torch.transpose(t, dim0=1, dim1=2)

print('t shape:{} t_transpose shape:{}'.format(t.shape, t_transpose.shape))
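transpose() swaps exactly two dimensions, while permute() (section 2) takes the full new order of dimensions, so the same swap can be written either way. Both return views, which are usually not contiguous:

```python
import torch

t = torch.rand((2, 3, 4))

a = torch.transpose(t, 1, 2)  # swap dims 1 and 2
b = t.permute(0, 2, 1)        # same swap, written as a full dimension order
print(a.shape, torch.equal(a, b))

print(a.is_contiguous())      # False: only the strides changed, not the data layout
```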

torch.t(input)

Function: transposes a tensor with at most 2 dimensions; for a matrix it is equivalent to torch.transpose(input, 0, 1)
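A quick check of torch.t() on a matrix and on a vector (0-D and 1-D inputs are returned as they are):

```python
import torch

m = torch.tensor([[1, 2, 3],
                  [4, 5, 6]])
print(torch.t(m).shape)                                   # torch.Size([3, 2])
print(torch.equal(torch.t(m), torch.transpose(m, 0, 1)))  # True

v = torch.tensor([1, 2, 3])
print(torch.t(v).shape)                                   # torch.Size([3]): 1-D input is unchanged
```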

torch.squeeze(input, dim=None, out=None)

Function: removes dimensions (axes) of length 1

·dim: if None, all dimensions of length 1 are removed; if a dimension is specified, it is removed only if its length is 1

t = torch.rand((1,2,3,1))

t_sq = torch.squeeze(t)  # all length-1 dims removed: (2, 3)

t_0 = torch.squeeze(t, dim=0)  # (2, 3, 1)

t_1 = torch.squeeze(t, dim=1)  # unchanged: (1, 2, 3, 1)

print(t.shape)

print(t_sq.shape)

print(t_0.shape)

print(t_1.shape)  # dimension 1 has length 2, so it is not removed

torch.unsqueeze(input, dim, out=None)

Function: inserts a new dimension of length 1 at position dim

·dim: the position of the new dimension

t = torch.rand((1,2,3))

t_sq1 = torch.unsqueeze(t,dim=1)  # (1, 1, 2, 3)

t_sq2= torch.unsqueeze(t,dim=2)  # (1, 2, 1, 3)

t_sq3 = torch.unsqueeze(t,dim=3)  # (1, 2, 3, 1)

print(t.shape)

print(t_sq1.shape)

print(t_sq2.shape)

print(t_sq3.shape)
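unsqueeze() also accepts negative dims (counted from the end), undoes squeeze() on the same dim, and is commonly used to line up shapes for broadcasting. In this sketch, `col` is a hypothetical per-row offset; without unsqueeze(1), adding a (2,) tensor to a (2, 3) tensor would fail because the trailing sizes 2 and 3 do not match.

```python
import torch

x = torch.rand((2, 3))

# Negative dims count from the end: -1 appends a new last axis.
print(torch.unsqueeze(x, -1).shape)  # torch.Size([2, 3, 1])

# unsqueeze followed by squeeze on the same dim restores the original tensor.
y = torch.squeeze(torch.unsqueeze(x, 0), 0)
print(y.shape, torch.equal(x, y))

# Turn a (2,) tensor into a (2, 1) column so it broadcasts across each row.
col = torch.tensor([10., 20.])
shifted = x + col.unsqueeze(1)
print(shifted.shape)                 # torch.Size([2, 3])
```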
