Hazel engine learning (V)

2022-07-07 03:08:00 【Little Liu Ya playing guitar】

I maintain my own copy of the engine on GitHub (address here). It contains a lot of notes; have a look if you need them.

Render Flow And Submission

Background

In Hazel engine learning (IV) we drew a triangle from scratch and then abstracted the related VertexBuffer, VertexArray and IndexBuffer, so Application no longer contains platform-specific code such as raw OpenGL calls. One draw call is still not abstracted, namely the glDrawElements function, and neither are the related glClear and glClearColor calls.

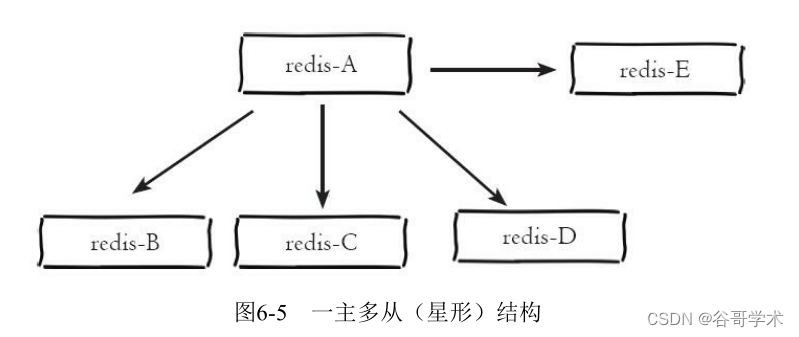

Renderer Architecture

The earlier abstractions, such as VertexBuffer and VertexArray, are classes for concepts used during rendering. The truly cross-platform Renderer class that does the rendering has not been created yet.

Think about what a Renderer has to do: it has to render a piece of geometry. Rendering a piece of geometry requires the following:

- A Vertex Array, containing VertexBuffers and an IndexBuffer

- A Shader

- The viewer's point of view, i.e. the Camera system, which is essentially a Projection matrix and a View matrix

- The world coordinates of the object being drawn. The VertexBuffer above records local coordinates, so this is the Model (World) matrix

- The material properties of the surface, e.g. wooden or plastic, metallicity and other related properties. This can also fall under the Shader

- Environment information, such as ambient light, an Environment Map or a Radiance Map

This information falls into two categories:

- Environment-related information: generally the same for every object rendered, such as ambient light and the camera's point of view

- Information about the rendered object: much of it differs per object, such as the VertexArray, while some properties may be shared (the material, for example). Shared properties can be batched to optimize performance

To sum up, a Renderer should be able to:

- Set environment-related information

- Accept the objects to be rendered along with their data, such as the Vertex Array and the Material and Shader they reference

- Render objects by issuing draw calls

- Batch: render objects with the same material together to optimize performance

The work a Renderer does each frame can be split into four steps:

- BeginScene: sets up the environment before each frame is rendered

- Submit: collects scene data and rendering commands, and pushes the rendering commands onto a queue

- EndScene: optimizes the collected scene data

- Render: renders according to the render queue

The steps in detail:

1. BeginScene

Environment-related information is shared by all objects, so when the Renderer starts rendering it first has to set up the environment, which is what the BeginScene function is for. The BeginScene stage basically tells the Renderer "I am about to render a scene", and then sets up the surroundings (such as ambient light) and the Camera.

2. Submit

At this stage each Mesh can be rendered. Their Transform matrices generally differ, so they are simply passed to the Renderer, and every rendering command is committed to the RenderCommandQueue.

3. EndScene

Once the scene data has been collected, this is the stage for optimizations such as:

- Batching objects that share a material

- Culling objects outside the frustum

- Sorting by position

4. Render

After everything has been committed to the RenderCommandQueue, the Renderer has processed everything scene-related and holds all the data, so rendering can begin.

The code for the four stages looks roughly like this:

// Inside the render loop

while (m_Running)

{

// The clear color is the game's background color; it normally never shows up in the user interface, so it may rarely be used

RenderCommand::SetClearColor();// Parameter omitted

RenderCommand::Clear();

RenderCommand::DrawIndexed();

Renderer::BeginScene();// Sets up the Camera, environment, lighting, etc.

Renderer::Submit();// Submits a Mesh to the Renderer

Renderer::EndScene();

// With multithreaded rendering, Renderer::Flush may be executed by another thread at this stage, working together with the RenderCommandQueue

Renderer::Flush();

...

}
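The deferred flow above (Submit records, Flush executes) can be illustrated with a minimal sketch. It is not Hazel's implementation: `RenderCommandQueue::Push`, `Flush`, `Size` and the `RenderOneFrame` helper are hypothetical names, and a vector of strings stands in for the GPU so that the execution order can be observed.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for the RenderCommandQueue mentioned above.
class RenderCommandQueue
{
public:
    // Record a command instead of executing it immediately.
    void Push(std::function<void()> command) { m_Commands.push_back(std::move(command)); }

    // Execute every recorded command in submission order, then empty the queue.
    void Flush()
    {
        for (auto& command : m_Commands)
            command();
        m_Commands.clear();
    }

    std::size_t Size() const { return m_Commands.size(); }

private:
    std::vector<std::function<void()>> m_Commands;
};

// Hypothetical helper that walks through one frame and returns what was "drawn".
inline std::vector<std::string> RenderOneFrame()
{
    std::vector<std::string> log;   // stands in for the GPU
    RenderCommandQueue queue;

    // BeginScene: camera and lighting would be stored here; nothing is drawn yet.
    // Submit: each mesh becomes a deferred draw command.
    queue.Push([&log] { log.push_back("draw quad"); });
    queue.Push([&log] { log.push_back("draw triangle"); });
    assert(log.empty());            // nothing has executed before Flush

    // EndScene: sorting, culling and batching would reorder the queue here.
    // Flush: the recorded commands finally run.
    queue.Flush();
    return log;
}
```

Because commands are only recorded during Submit, a later EndScene step is free to reorder or merge them before anything reaches the graphics API, and Flush could run on a different thread.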

The actual content of this lesson

The rendering architecture described above handles objects in a unified way and then renders them together, but that architecture has not been built yet. This lesson therefore still binds one VAO and issues one draw call; it will be improved later. The purpose of this lesson is still to abstract the OpenGL-related code in Application.cpp.

Only the glClear, glClearColor and draw-call code is left to abstract, these three lines:

glClearColor(0.1f, 0.1f, 0.1f, 1);

glClear(GL_COLOR_BUFFER_BIT);

glDrawElements(GL_TRIANGLES, m_QuadVertexArray->GetIndexBuffer()->GetCount(), GL_UNSIGNED_INT, nullptr);

I intend to abstract this code into:

// The clear color is the game's background color; it normally never shows up in the user interface, so an offensive color like magenta works well

RenderCommand::SetClearColor(glm::vec4(1.0, 0.0, 1.0, 1.0));// glm's vec4 can be used directly

RenderCommand::Clear();

RenderCommand::DrawIndexed();

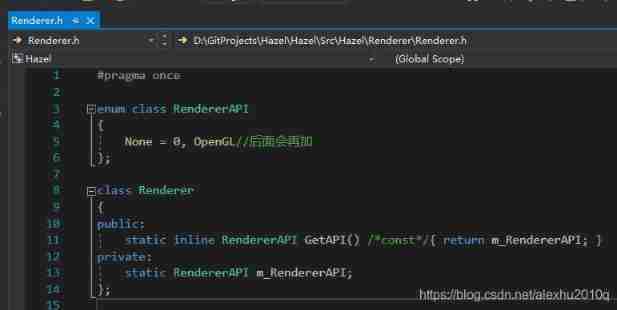

Now look at the Renderer and RendererAPI classes written earlier. They are very simple, with just one static function that reports the rendering API type currently in use:

Besides identifying the API type in use, the RendererAPI class should also expose many platform-independent rendering operations, such as clearing the buffer and issuing a draw call for a Vertex Array. So let's flesh out the RendererAPI class and create a RendererAPI.h file:

class RendererAPI

{

public:

// The rendering API type; RendererAPI should own this, not Renderer

enum class APIType// The original content is moved here

{

None = 0, OpenGL

};

public:

// The relevant code is abstracted into the following three interfaces on the RendererAPI class

virtual void Clear() const = 0;

virtual void SetClearColor(const glm::vec4&) const = 0;

virtual void DrawIndexed(const std::shared_ptr<VertexArray>&) const = 0;

};

The GetAPI function that used to live in Renderer should be RendererAPI's responsibility rather than Renderer's, so it is moved into RendererAPI. The RendererAPI class now becomes:

class RendererAPI

{

...

public:

inline static APIType GetAPIType() {

return s_APIType; }

private:

static APIType s_APIType;

};

// Initialized in RendererAPI's cpp file:

RendererAPI::APIType RendererAPI::s_APIType = RendererAPI::APIType::OpenGL;

For convenience, Renderer can also provide a GetAPIType function that simply forwards to GetAPIType on the RendererAPI class.

RendererAPI is an interface class that is independent of the platform. Now the OpenGL version, OpenGLRendererAPI, can be implemented: create the corresponding cpp and h files under the Platform folder, as was done before, so there is not much to say.

Next comes the Renderer class. For now this class only implements the GetAPIType function. It is declared as follows:

class Renderer

{

public:

// TODO: will later accept scene parameters such as Camera and lighting, so that shaders get the right environment-related uniforms

static void BeginScene();

// TODO:

static void EndScene();

// TODO: should turn the VAO into a RenderCommand and pass it to the RenderCommandQueue

// For now, being lazy, it calls RenderCommand::DrawIndexed() directly

static void Submit(const std::shared_ptr<VertexArray>&);

inline static RendererAPI::APIType GetAPIType() {

return RendererAPI::GetAPIType(); }

};

The member functions are implemented later; for now all classes are declared first. The one remaining class is RenderCommand, which also gets a RenderCommand.h header file:

class RenderCommand

{

public:

// Note: each RenderCommand function should do exactly one thing and not be coupled to anything else

inline static void DrawIndexed(const std::shared_ptr<VertexArray>& vertexArray)

{

// For example, vertexArray->Bind() must not be called here

s_RenderAPI->DrawIndexed(vertexArray);

}

// Likewise, implement the static Clear and SetClearColor functions

...

private:

static RendererAPI* s_RenderAPI;

};

As you can see, the RenderCommand class is just a static wrapper around RendererAPI. This design exists so that functions can later be added to the RenderCommandQueue, and it also prepares for multithreaded rendering.

Then the Submit function of Renderer is implemented as follows:

void Renderer::Submit(const std::shared_ptr<VertexArray>& vertexArray)

{

vertexArray->Bind();

RenderCommand::DrawIndexed(vertexArray);

}

Comparing the RendererAPI, Renderer and RenderCommand classes

- The concept of Renderer is clear: for now it mainly provides the interface for preparing rendering data.

- RendererAPI and RenderCommand are a little harder to tell apart. RenderCommand consists entirely of static functions, while RendererAPI is a virtual base class and each platform has its own PlatformRendererAPI subclass. The static functions in RenderCommand call straight into RendererAPI. In other words, RenderCommand and Renderer are both platform-independent, and no subclass needs to inherit from them.
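The relationship between the three classes can be sketched without any graphics library. In this sketch, `RecordingRendererAPI`, `RenderCommand::SetBackend` and the stripped-down `VertexArray` are hypothetical stand-ins introduced for illustration; a real backend would call OpenGL where this one only records the call.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for VertexArray: it only carries an index count.
struct VertexArray { unsigned indexCount; };

// Platform-independent interface; each graphics API provides one subclass.
class RendererAPI
{
public:
    virtual ~RendererAPI() = default;
    virtual void Clear() = 0;
    virtual void DrawIndexed(const std::shared_ptr<VertexArray>& va) = 0;
};

// Hypothetical backend that records calls instead of calling OpenGL.
class RecordingRendererAPI : public RendererAPI
{
public:
    void Clear() override { calls.push_back("Clear"); }
    void DrawIndexed(const std::shared_ptr<VertexArray>& va) override
    {
        calls.push_back("DrawIndexed " + std::to_string(va->indexCount));
    }
    std::vector<std::string> calls;
};

// Static wrapper with no subclasses: forwards to whichever backend is installed.
class RenderCommand
{
public:
    static void SetBackend(RendererAPI* api) { Backend() = api; }
    static void Clear()
    {
        assert(Backend() != nullptr);
        Backend()->Clear();
    }
    static void DrawIndexed(const std::shared_ptr<VertexArray>& va)
    {
        assert(Backend() != nullptr);
        Backend()->DrawIndexed(va);
    }

private:
    static RendererAPI*& Backend()
    {
        static RendererAPI* s_RendererAPI = nullptr;
        return s_RendererAPI;
    }
};

// High-level entry point: also platform-independent, built on RenderCommand.
class Renderer
{
public:
    static void Submit(const std::shared_ptr<VertexArray>& va) { RenderCommand::DrawIndexed(va); }
};
```

Only `RendererAPI` has platform subclasses; swapping the backend pointer changes the platform without touching `RenderCommand` or `Renderer`.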

Final loop code structure

After this lesson, the code is structured like this:

void Application::Run()

{

std::cout << "Run Application" << std::endl;

while (m_Running)

{

// Clear at the start of every frame

RenderCommand::Clear();

RenderCommand::SetClearColor(glm::vec4(1, 0, 1.0, 1));

Renderer::BeginScene();

m_BlueShader->Bind();

Renderer::Submit(m_QuadVertexArray); // calls vertexArray->Bind() internally

RenderCommand::DrawIndexed(m_QuadVertexArray);

m_Shader->Bind();

Renderer::Submit(m_VertexArray);

RenderCommand::DrawIndexed(m_VertexArray);

Renderer::EndScene();

// Application should not know which platform's window is in use; Window initialization happens inside Window::Create

// So once the window has been created, the loop can start rendering right away

for (Layer* layer : m_LayerStack)

layer->OnUpdate();

m_ImGuiLayer->Begin();

for (Layer* layer : m_LayerStack)

// OnImGuiRender is called on every Layer

// There are currently two Layers: the ExampleLayer defined in Sandbox and the ImGuiLayer added in the constructor

layer->OnImGuiRender();

m_ImGuiLayer->End();

// glSwapBuffer is called at the end of every frame

m_Window->OnUpdate();

}

}

Camera

The architecture of the camera system matters a great deal: it determines whether the game engine can spend more of its time on rendering and thus reach a higher frame rate. Besides rendering, the Camera also interacts with the player, for example through user input, and when the player moves the Camera often has to move too. So the Camera is influenced by gameplay and is also submitted to the Renderer for rendering. This lesson is mainly about planning.

A Camera is a virtual concept. In essence it is a set of View and Projection matrix settings, and its attributes are:

- The position of the camera

- Camera properties such as FOV and aspect ratio

- Of the three MVP matrices, M is tied to the model, but different models seen through the same camera share the same V and P matrices, so the VP matrix belongs to the camera

During actual rendering, the camera sits by default at the origin of the world coordinate system, looking down the -z direction. When camera properties are adjusted, for example when zooming in, the camera's position does not change; instead all objects in the world move toward the camera, which looks the same as the camera translating toward them. When we move the camera to the left, there is really no Camera concept at work: we move every object in the world to the right. The camera's transform matrix and the transform applied to the objects are therefore exact inverses. In other words, we can record the camera's transformation matrix and invert it to get the corresponding View matrix. Only Position and Rotation are needed, because the camera is not scaled.
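The claim that the View matrix is the inverse of the camera's own transform can be checked with a small 2D sketch. It avoids glm on purpose; `Vec2`, `Camera2D` and `WorldToView` are hypothetical names, and the camera transform is assumed to be Translate(position) * Rotate(rotation) about the Z axis.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x; float y; };

// Hypothetical 2D camera: a position plus a rotation about the Z axis.
struct Camera2D { Vec2 position; float rotationRadians; };

// Applies the inverse of T = Translate(position) * Rotate(rotation),
// which is Rotate(-rotation) * Translate(-position), to a world-space point.
inline Vec2 WorldToView(const Camera2D& camera, Vec2 world)
{
    // Undo the camera translation first.
    const float dx = world.x - camera.position.x;
    const float dy = world.y - camera.position.y;
    // Then undo the camera rotation.
    const float c = std::cos(-camera.rotationRadians);
    const float s = std::sin(-camera.rotationRadians);
    return { c * dx - s * dy, s * dx + c * dy };
}
```

With the camera moved one unit to the left, `WorldToView(Camera2D{{-1.0f, 0.0f}, 0.0f}, Vec2{0.0f, 0.0f})` places the world origin at x = 1 in view space: moving the camera left is the same as moving the world right.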

The assignment of vertex coordinates

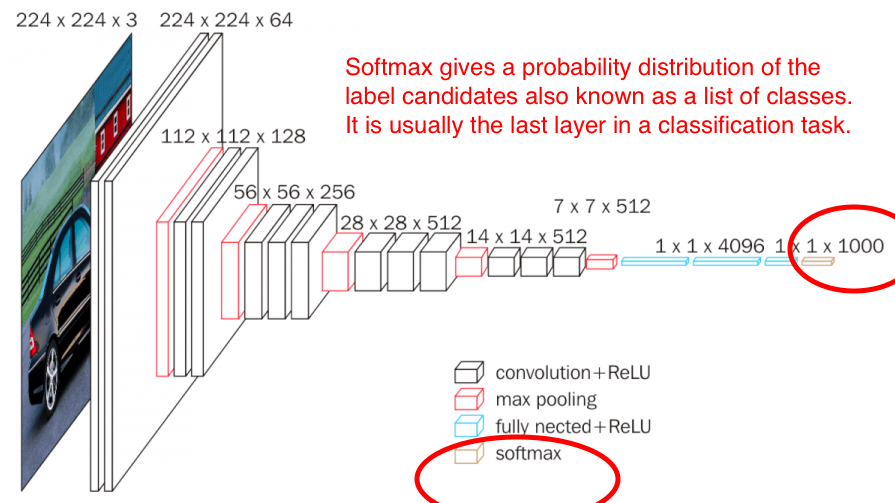

The figure shows how a vertex is transformed into screen coordinates:

gl_Position = project * view * model * vertPos;

Here project * view, combined into the vp matrix, belongs to the Camera, because every object seen through the same camera shares the same vp matrix. The model matrix belongs to the corresponding object (such as Unity's GameObject), and vertPos belongs to the points of the Mesh.

Passing the Camera as a parameter to Renderer's BeginScene function

The idea in code is this: the game loop contains a BeginScene call, a static function of Renderer that updates the camera, lighting and other settings. BeginScene therefore has to accept a Camera as a parameter, and there are two ways to pass it:

- Pass the Camera object into BeginScene by reference

- Pass the Camera object into BeginScene by value

Normally I would go for the first option, but here we need the second, and the reason is multithreaded rendering. In multithreaded rendering every function is executed inside the RenderCommandQueue, BeginScene included. If a function in the RenderCommandQueue stores a reference to the camera, that is dangerous: the render thread holds a reference to the camera, and nothing guarantees the camera stays unchanged while rendering. If the main thread changes camera data during rendering, such as the camera's position, things go haywire. (Note that passing a const& does not help either: it only guarantees that code inside the RenderCommandQueue cannot modify the camera, not that the main thread cannot.)

The code is as follows :

// BeginScene copies the Camera's data, such as the VP matrix and FOV, into the Renderer

BeginScene(m_Camera, ...);// m_Camera is passed by value
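The difference between the two options can be seen even in a single-threaded sketch. The `Camera` struct and the two `MakeBeginScene...` helpers below are hypothetical; each returns a deferred command, as a queue would store it, that reads the camera's x position when it finally runs.

```cpp
#include <cassert>
#include <functional>

// Hypothetical minimal camera: just one coordinate.
struct Camera { float x; };

// The deferred command owns its own snapshot of the camera.
inline std::function<float()> MakeBeginSceneByValue(Camera camera)
{
    return [camera] { return camera.x; };
}

// The deferred command only refers to the caller's camera,
// so it sees whatever the camera holds when the command runs.
inline std::function<float()> MakeBeginSceneByReference(const Camera& camera)
{
    return [&camera] { return camera.x; };
}
```

If the caller changes the camera after queuing both commands, the by-value command still reports the position at submission time, while the by-reference command reports the modified one. With a real render thread the by-reference version would additionally be a data race.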

The Camera homework

Cherno asked us to implement a Camera system ourselves. Below are the Camera interface and ideas I came up with; I feel unsure about many parts of it:

// This should be a class independent of the rendering platform; will platform-specific Cameras be derived from it later?

class Camera

{

public:

Camera(float fov) : m_Fov(fov) {

}

// How can this matrix be made platform-independent? I may have to design my own math class to represent mat4

mat4 GetCameraMatrix();

void SetCameraMatrix();

float GetFov() const {

return m_Fov; }

void SetFov(float fov) {

m_Fov = fov; }

private:

float m_Fov;

// Perspective or orthographic projection: should an enum be used for this?

};

The concrete Camera implementation

After watching the video: this chapter does not implement a general Camera class. Instead, cameras are split by projection type into a perspective Camera and an orthographic Camera, and this episode implements the simpler orthographic one first.

First, the design problems above are corrected:

- There should not be a single GetCameraMatrix. A Camera is essentially two matrices, the View matrix and the Projection matrix, so the interface should return the V, P and VP matrices through three accessors

- The returned matrix type is not custom; glm::mat4 is used, since glm::mat4 appears to be cross-platform C++ code

- The Camera constructor takes the data needed to build the Projection matrix and builds the View matrix

The interface design is as follows:

// For now this is treated as a 2D Camera, because the camera's rotation has only one dimension

class OrthographicCamera

{

public:

// Constructor: an orthographic projection needs a frustum; near defaults to -1 and far to 1, so they are not parameters

// The constructor does not take a Camera position, so the camera starts at the default position

OrthographicCamera(float left, float right, float bottom, float top);

// Getters and setters for the Camera's position and rotation; this data determines the View matrix

const glm::vec3& GetPosition() const {

return m_Position; }

void SetPosition(const glm::vec3& position) {

m_Position = position; RecalculateViewMatrix(); }

float GetRotation()const {

return m_Rotation; }

void SetRotation(float rotation) {

m_Rotation = rotation; RecalculateViewMatrix(); }

// Accessors for the three matrices: Projection, View and ViewProjection

const glm::mat4& GetProjectionMatrix() const {

return m_ProjectionMatrix; }

const glm::mat4& GetViewMatrix() const {

return m_ViewMatrix; }

const glm::mat4& GetViewProjectionMatrix() const {

return m_ViewProjectionMatrix; }

private:

void RecalculateViewMatrix();

private:

glm::mat4 m_ProjectionMatrix;

glm::mat4 m_ViewMatrix;

glm::mat4 m_ViewProjectionMatrix;// Cached result of the computation

glm::vec3 m_Position = { 0.0f, 0.0f, 0.0f }; // The position seems not to matter much for an orthographic camera

float m_Rotation = 0.0f;// Under orthographic projection the camera only rotates around the Z axis

};

The class implementation code is as follows , It's not hard :

#include "hzpch.h"

#include "OrthographicCamera.h"

#include <glm/gtc/matrix_transform.hpp>

namespace Hazel

{

OrthographicCamera::OrthographicCamera(float left, float right, float bottom, float top)

: m_ProjectionMatrix(glm::ortho(left, right, bottom, top, -1.0f, 1.0f)), m_ViewMatrix(1.0f)

{

m_ViewProjectionMatrix = m_ProjectionMatrix * m_ViewMatrix;

}

void OrthographicCamera::RecalculateViewMatrix()

{

glm::mat4 transform = glm::translate(glm::mat4(1.0f), m_Position) *

glm::rotate(glm::mat4(1.0f), glm::radians(m_Rotation), glm::vec3(0, 0, 1));

m_ViewMatrix = glm::inverse(transform);

m_ViewProjectionMatrix = m_ProjectionMatrix * m_ViewMatrix;

}

}

Using OrthographicCamera

An OrthographicCamera is essentially a VP matrix. Here a hard-coded orthographic Camera is created when the Application is created, and during each frame's rendering the VP matrix is read from that Camera and stored in SceneData. The code is as follows:

void Application::Run()

{

std::cout << "Run Application" << std::endl;

while (m_Running)

{

// Clear at the start of every frame

RenderCommand::Clear();

RenderCommand::SetClearColor(glm::vec4(1.0f, 0.0f, 1.0f, 1.0f));

// Pass the VP matrix held by the Camera into the Renderer's SceneData

Renderer::BeginScene(*m_Camera);

{

// TODO: batching should be added here later

// Binds the shader, uploads the VP matrix, then issues the draw call

Renderer::Submit(m_BlueShader, m_VertexArray);

// Binds, then issues the draw call

Renderer::Submit(m_Shader, m_QuadVertexArray);

}

Renderer::EndScene();

// Update the other Layers and the ImGuiLayer

...

}

}

// The Submit function is as follows

void Renderer::Submit(const std::shared_ptr<Shader>& shader, const std::shared_ptr<VertexArray>& va)

{

shader->Bind();

shader->UploadUniformMat4("u_ViewProjection", s_SceneData->ViewProjectionMatrix);

RenderCommand::DrawIndexed(va);

}

TimeStep System

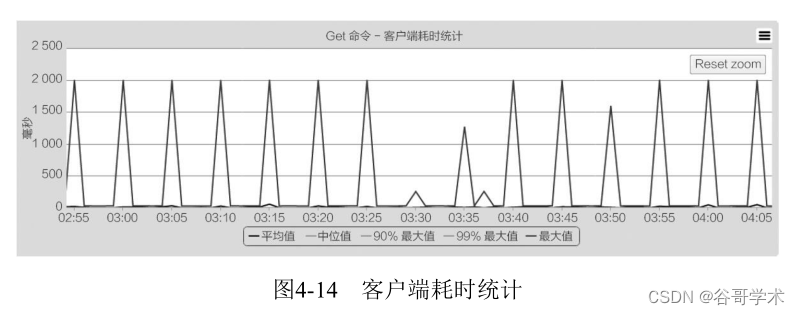

In the current Hazel engine, how many times OnUpdate is called per second depends entirely on the CPU (with VSync on, it depends on the display's refresh rate). Suppose I write code that moves the camera, like this:

void Application::Run()

{

std::cout << "Run Application" << std::endl;

while (m_Running)

{

auto m_CameraPosition = m_Camera->GetPosition();

float m_CameraMoveSpeed = 0.01f;

if (Hazel::Input::IsKeyPressed(HZ_KEY_LEFT))

m_CameraPosition.x -= m_CameraMoveSpeed;

m_Camera->SetPosition(m_CameraPosition);

...

}

}

There is a problem: if this code runs on different machines, the faster the CPU, the more loop iterations run per second, and the faster the camera moves. Different machines produce different results, which is clearly unacceptable, so a Timestep system is needed.

Three kinds of Timestep system

Generally speaking there are three kinds of Timestep system, all meant to solve the problem of loops running at different speeds on different machines:

- A Timestep system with a fixed delta time

- A Timestep system with a variable delta time, where delta time depends on the duration of the frame

- A Timestep system with a semi-fixed delta time (semi-fixed timestep)

See the appendix for details.

The Timestep system in the Hazel engine

Since there is no physics engine yet, the second kind of Timestep system is chosen here.

The principle behind the second kind is this: different machines take different amounts of time to run one loop iteration, but if the movement in each frame is multiplied by the duration of that frame, the inconsistency caused by frame rate cancels out. The number of calls per second is inversely proportional to the time each call takes: the more often the function is called per second, the less time each frame lasts. For example, if an object moves at X m/s, each frame moves it by X * DeltaTime. Even if machine A renders 5 frames per second and machine B renders 8, the DeltaTime values over one second add up to the same total, so the displacement is X on both.

So it is enough to record each frame's DeltaTime and multiply movement by it. In practice this means changing the functions called in the loop, such as OnUpdate, from taking no parameters to taking a Timestep parameter. The code is as follows:

// ============== Timestep.cpp =============

// Timestep is really just a wrapper around a float value

class Timestep

{

public:

Timestep(float time = 0.0f)

: m_Time(time)

{

}

operator float() const {

return m_Time; }

// Wrapping the float makes it convenient to convert between seconds and milliseconds

float GetSeconds() const {

return m_Time; }

float GetMilliseconds() const {

return m_Time * 1000.0f; }

private:

float m_Time;

};

// ============== Application.cpp =============

void Application::Run()

{

while(m_Running)

{

float time = (float)GetTime();// Platform-independent wrapper; on OpenGL this is glfwGetTime()

// Note: time - m_LastFrameTime is the time elapsed since the previous iteration started; it is used as the timestep for the current frame

Timestep timestep = time - m_LastFrameTime;

m_LastFrameTime = time;

...// Call OnUpdate on every layer in the engine's LayerStack

...// Call OnUpdate on the engine's ImGuiLayer

...// Call OnUpdate on m_Window

}

}
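The frame-rate independence argument can be checked numerically. The helper below is a hypothetical sketch, not part of Hazel: it advances a position for exactly one second, split into equally long frames, with and without scaling the movement by the frame's delta time.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: simulates one second of movement at `speed` units per second,
// split into `framesPerSecond` equally long frames.
inline float SimulateOneSecond(float speed, int framesPerSecond, bool scaleByDeltaTime)
{
    assert(framesPerSecond > 0);
    const float deltaTime = 1.0f / static_cast<float>(framesPerSecond);
    float position = 0.0f;
    for (int frame = 0; frame < framesPerSecond; ++frame)
    {
        // Scaled: each frame contributes speed * deltaTime.
        // Unscaled: each frame contributes the full speed, so faster machines move further.
        position += scaleByDeltaTime ? speed * deltaTime : speed;
    }
    return position;
}
```

With scaling, 5 frames and 8 frames per second both end up at approximately `speed` units after one second (up to floating-point rounding). Without scaling, the 8 fps machine travels 1.6 times as far as the 5 fps machine.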

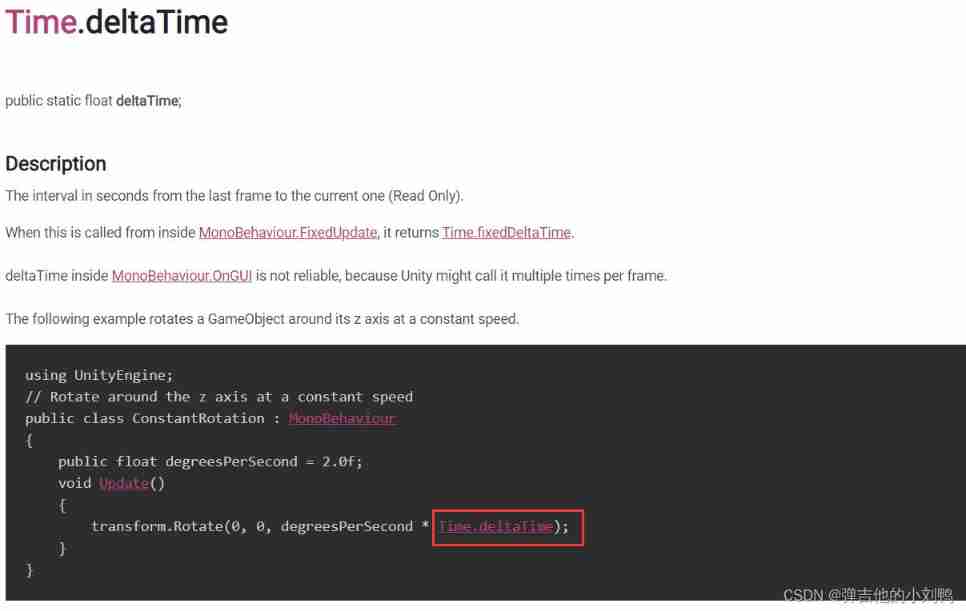

Most other game engines do the same. In Unity, for example, the Update function does not receive a delta time parameter, but it can be read through Time.deltaTime, as the figure shows.

Moving the contents of Application.cpp into the Sandbox project

The Application class should mainly be responsible for the while loop, which calls the Update function of each Layer. The SandboxApp class inherits from Application but is only a thin shell: it exists to add the newly created ExampleLayer to the inherited m_LayerStack. The actual drawing of the quad and the triangle belongs in a Layer of the Sandbox project, something like this:

#pragma once

#include "Hazel/Layer.h"

#include "Hazel/Renderer/Shader.h"

#include "Hazel/Renderer/VertexArray.h"

#include "Hazel/Renderer/OrthographicCamera.h"

class ExampleLayer : public Hazel::Layer

{

public:

ExampleLayer();

private:

void OnAttach() override;

void OnDettach() override;

void OnEvent(Hazel::Event& e) override;

void OnUpdate(const Timestep& step) override;

void OnImGuiRender() override;

// The VertexArray to draw, the corresponding Shader and the Camera all belong to a single Layer

// The Camera here belongs to the Layer; it does not live in the Application or Sandbox class

private:

Hazel::OrthographicCamera m_Camera;

std::shared_ptr<Hazel::Shader> m_Shader;

std::shared_ptr<Hazel::Shader> m_BlueShader;

std::shared_ptr<Hazel::VertexArray> m_VertexArray;

std::shared_ptr<Hazel::VertexArray> m_QuadVertexArray;

};

// Note: SandboxApp only creates the corresponding Layer and adds it to Application's

// m_LayerStack; the functions are actually called from Run in Application.cpp

// Run is not exposed for subclasses to override

class SandboxApp : public Hazel::Application

{

public:

// The constructor is actually defined in the cpp file; it is shown inside the class declaration here for convenience

SandboxApp()

{

HAZEL_ASSERT(!s_Instance, "Already Exists an application instance");

m_Window = std::unique_ptr<Hazel::Window>(Hazel::Window::Create());

m_Window->SetEventCallback(std::bind(&Application::OnEvent, this, std::placeholders::_1));

m_ImGuiLayer = new Hazel::ImGuiLayer();

m_LayerStack.PushOverlay(m_ImGuiLayer);

m_LayerStack.PushLayer(new ExampleLayer());

//m_Window->SetVSync(true);

}

~SandboxApp() {

};

};

Transforms

The current Transform is always a Transform in world space with no parent-child hierarchy; in essence it is a globalPosition, globalRotation and globalScale, which can be expressed as one matrix. It is so simple that I did not even create a separate Transform class: a matrix represents the Model matrix and is passed to the Shader as a uniform, nothing more. The key function to modify is Submit, whose original signature is:

void Renderer::Submit(const std::shared_ptr<Shader>& shader, const std::shared_ptr<VertexArray>& va)

It now becomes:

// When rendering a Vertex Array, also pass the matrix for the model's transform

void Renderer::Submit(const std::shared_ptr<Shader>& shader, const std::shared_ptr<VertexArray>& vertexArray, const glm::mat4& transform)

{

shader->Bind();

shader->UploadUniformMat4("u_ViewProjection", s_SceneData->ViewProjectionMatrix);

shader->UploadUniformMat4("u_Transform", transform);

RenderCommand::DrawIndexed(vertexArray);

}

Adding Hazel::Scope and Hazel::Ref

The code is as follows:

// In Core.h

namespace Hazel

{

// I don't think this is necessary yet; I won't use it for now and will add it when needed

template<typename T>

using Scope = std::unique_ptr<T>;

template<typename T>

using Ref = std::shared_ptr<T>;

}

Although this looks a bit redundant, it distinguishes smart pointers internal to Hazel from external ones. For example, the two lines below would not use Hazel::Ref, which signals that they hold external content. Different modules inside the engine could even use different Ref types to tell them apart:

std::shared_ptr<spdlog::logger> Log::s_CoreLogger;

std::shared_ptr<spdlog::logger> Log::s_ClientLogger;

In addition, several points were mentioned in the lesson:

- The reference count of shared_ptr is thread-safe: increments and decrements are atomic. Guaranteeing this for multithreading costs extra, so if the pointer is not used across threads, the engine may later implement its own shared_ptr class that is simply not thread-safe, for efficiency

- From programming experience, shared_ptr can be used instead of unique_ptr in most cases, since the performance overhead of the two differs little. If the overhead of either is a real problem, raw pointers may be the better choice

- Hazel::Ref, that is, the reference-counting part of shared_ptr, can be seen as a very rough AssetManager: once a resource's reference count reaches 0, the resource is destroyed automatically
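The "rough AssetManager" behavior of reference counting can be sketched with the standard library alone. The `Texture` type below is a hypothetical resource that counts its live instances; `Ref` mirrors the alias from Core.h.

```cpp
#include <cassert>
#include <memory>

template<typename T>
using Ref = std::shared_ptr<T>;

// Hypothetical resource that tracks how many instances are currently alive.
struct Texture
{
    static int& LiveCount() { static int count = 0; return count; }
    Texture() { ++LiveCount(); }
    ~Texture() { --LiveCount(); }
    Texture(const Texture&) = delete;
    Texture& operator=(const Texture&) = delete;
};

// Creates one texture with two owners, releases both, and reports how many textures remain.
inline int LiveTexturesAfterRelease()
{
    Ref<Texture> a = std::make_shared<Texture>();
    Ref<Texture> b = a;                     // second owner of the same texture
    assert(a.use_count() == 2);
    a.reset();                              // b still owns it, so it stays alive
    assert(Texture::LiveCount() == 1);
    b.reset();                              // last owner released: destructor runs
    return Texture::LiveCount();
}
```

The texture is destroyed exactly when the last `Ref` lets go of it, with no explicit delete anywhere.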

Textures

This chapter is not difficult. The goal is to create a Texture class, which works much like the earlier VertexArray and Buffer classes. First create an abstract base class that represents a generic Texture, then add a Create function that returns the right Texture object depending on the RendererAPI's platform type. The code is as follows:

namespace Hazel

{

// Textures come in many types, such as CubeTexture and Texture2D

class Texture

{

public:

virtual ~Texture() = default;

virtual uint32_t GetWidth() const = 0;

virtual uint32_t GetHeight() const = 0;

virtual void Bind(uint32_t slot = 0) const = 0;

};

// Note: an extra Texture2D class is added here, inheriting from Texture

class Texture2D : public Texture

{

public:

static Ref<Texture2D> Create(const std::string& path);

};

std::shared_ptr<Texture2D> Texture2D::Create(const std::string& path)

{

switch (Renderer::GetAPI())

{

case RendererAPI::API::None: HZ_CORE_ASSERT(false, "RendererAPI::None is currently not supported!"); return nullptr;

case RendererAPI::API::OpenGL: return std::make_shared<OpenGLTexture2D>(path);

}

HZ_CORE_ASSERT(false, "Unknown RendererAPI!");

return nullptr;

}

}

Additional points mentioned in the video:

- Textures are not just images made of plain colors. They can also store offline computation results and normal maps; in animation, for example, they can even store skinning matrices

- When loading a Texture fails, you can return a magenta texture or draw magenta directly to indicate that the material is missing

- The current concrete Texture2D classes, such as OpenGLTexture2D, read the file in the constructor, so hot reloading of resources is not supported yet. It will later be implemented through an AssetManager; with hot reloading for Texture2D, replacing a file while editing immediately shows the latest texture

- Textures are loaded here with the stb_image library. Everything needed is in the stb_image.h header, which supports JPG, PNG, TGA, BMP, PSD, GIF, HDR and PIC

- Reading a texture returns an integer called channels, the number of channels in the image: 3 for an RGB image, 4 for RGBA, and possibly just 1 for a grayscale image

Blend

I learned before OpenGL, This chapter is also very simple , It's nothing more than OpenGLRenderer Add a Init function , Then open Blend, Then draw one more picture , The code is as follows :

glEnable(GL_BLEND);

// This function is used to determine , pixel When drawing , If you have already drawn pixel 了 , So new pixel The weight of is its alpha value , The original pixel The weight value of is 1-alpha value

glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA); //source The weight value is used alpha value ,destination The weight value is 1-source Weight value

I covered this in my earlier OpenGL notes (part 5), which you can refer to.
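
To make the weights concrete, here is a small CPU-side sketch of what that blend function computes per pixel, assuming the default additive blend equation. `Color` and `BlendSrcAlpha` are hypothetical names used only for this illustration; the real work happens on the GPU.

```cpp
#include <cassert>

struct Color { float r, g, b, a; };

// Software version of glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA):
// result = src * srcAlpha + dst * (1 - srcAlpha), applied to all four components.
Color BlendSrcAlpha(const Color& src, const Color& dst)
{
    assert(src.a >= 0.0f && src.a <= 1.0f);
    const float s = src.a;        // weight of the new (source) pixel
    const float d = 1.0f - src.a; // weight of the existing (destination) pixel
    return { src.r * s + dst.r * d,
             src.g * s + dst.g * d,
             src.b * s + dst.b * d,
             src.a * s + dst.a * d };
}
```

Drawing a half-transparent red pixel over an opaque blue one gives an even mix of the two colors, which is the result you would expect from alpha blending.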

Shader Asset Files

Currently the shaders are hard-coded in the source, so they end up as static constants in memory. A common requirement in game engines, however, is hot reloading shaders: when I change a shader, I want to see the effect in the game immediately. If the shader lives in its own file, that file can be reloaded and recompiled on its own. There is another issue: engines such as Unity let users write shaders in the editor, and the current approach cannot support that.

When I learned OpenGL, one shader program was split across several files, with the vertex shader, fragment shader, and so on stored separately, whereas in DirectX everything lives in one file. Keeping everything in one file seems more sensible to me, so the file created here uses the following format:

#type vertex // Note: this is a custom marker string used to separate the shader stages

... // the original vertex shader goes here

#type fragment

... // the original fragment shader goes here

Then read the file with ifstream into a single string, search it for the #type ... markers above, and split the one large string into several strings, each of which corresponds to one kind of shader.

Additional points mentioned in the video:

- Generally, shaders in a game engine are precompiled into binary files in the editor and then combined and applied at runtime.
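
The splitting step can be sketched as follows. This is a minimal version under my own assumptions, not the engine's actual code: `SplitShaderSource` is a hypothetical name, the stage name is taken as the first word after `#type `, and the marker is assumed never to appear inside a shader body.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <unordered_map>

// Splits a combined shader file into one source string per "#type <stage>" section.
// The key is the first word after "#type " (for example "vertex" or "fragment").
std::unordered_map<std::string, std::string> SplitShaderSource(const std::string& source)
{
    std::unordered_map<std::string, std::string> sections;
    const std::string token = "#type ";

    std::size_t pos = source.find(token); // find(): search for the whole marker
    while (pos != std::string::npos)
    {
        const std::size_t nameBegin = pos + token.size();
        // find_first_of(): search for any one of the listed characters
        const std::size_t nameEnd = source.find_first_of(" \t\r\n", nameBegin);
        const std::size_t eol = source.find_first_of("\r\n", nameBegin);
        if (eol == std::string::npos)
            break; // marker line with nothing after it

        const std::string stage = source.substr(nameBegin, nameEnd - nameBegin);

        // The body starts after the line break and runs until the next marker.
        const std::size_t bodyBegin = source.find_first_not_of("\r\n", eol);
        const std::size_t next = source.find(token, eol);
        if (bodyBegin == std::string::npos)
            sections[stage] = std::string();
        else
            sections[stage] = source.substr(
                bodyBegin, next == std::string::npos ? std::string::npos : next - bodyBegin);

        pos = next;
    }
    return sections;
}
```

Each resulting string can then be handed to the compile step for its stage. Anything after the stage name on the marker line, such as a trailing comment, is ignored.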

ShaderLibrary

This chapter is also simple: reading and storing shaders moves into a ShaderLibrary class. ShaderLibrary is essentially a hash map whose key is the shader name and whose value is the shader itself. The code is as follows:

class ShaderLibrary

{

public:

void Add(const std::string& name, const Ref<Shader>& shader);

void Add(const Ref<Shader>& shader);

Ref<Shader> Load(const std::string& filepath);

Ref<Shader> Load(const std::string& name, const std::string& filepath);

Ref<Shader> Get(const std::string& name);

bool Exists(const std::string& name) const;

private:

std::unordered_map<std::string, Ref<Shader>> m_Shaders;

};

Then replace the shader variables in the original ExampleLayer with a single ShaderLibrary object and fetch whichever shader is needed from it.
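
For reference, the in-memory part of such a class might look like the sketch below. It assumes `Ref<T>` is an alias for `std::shared_ptr<T>` and uses a stand-in `Shader` struct with a `GetName()` accessor. Both are assumptions for illustration, and the two `Load` overloads are left out because they depend on file reading and shader compilation.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <unordered_map>
#include <utility>

template <typename T>
using Ref = std::shared_ptr<T>; // assumed alias

// Stand-in for the engine's Shader class; only the name matters here.
struct Shader
{
    explicit Shader(std::string name) : m_Name(std::move(name)) {}
    const std::string& GetName() const { return m_Name; }
private:
    std::string m_Name;
};

class ShaderLibrary
{
public:
    // Registers a shader under an explicit name; duplicates are treated as a bug.
    void Add(const std::string& name, const Ref<Shader>& shader)
    {
        assert(!Exists(name) && "Shader already exists!");
        m_Shaders[name] = shader;
    }

    // Registers a shader under its own name.
    void Add(const Ref<Shader>& shader) { Add(shader->GetName(), shader); }

    // Returns the shader, or nullptr if no shader has that name.
    Ref<Shader> Get(const std::string& name) const
    {
        auto it = m_Shaders.find(name);
        return it != m_Shaders.end() ? it->second : nullptr;
    }

    bool Exists(const std::string& name) const
    {
        return m_Shaders.find(name) != m_Shaders.end();
    }

private:
    std::unordered_map<std::string, Ref<Shader>> m_Shaders;
};
```

Returning nullptr from `Get` for an unknown name is one possible choice; asserting instead would catch typos in shader names earlier.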

Appendix

A warning: warning C4227: anachronism used : qualifiers on reference are ignored

An anachronism here means an outdated construct. The warning is triggered by this line:

virtual void DrawIndexed(std::shared_ptr<VertexArray>& const) = 0;

Removing either the & or the const makes the warning go away. In other words, there is no such type as std::shared_ptr<T>& const, and the compiler simply ignores the const qualifier applied to the reference.

It was my own silly mistake: it should have been const &. A reference can never be rebound, so only const & is meaningful and & const is not. Pointers are different: both const* and *const exist. The following also triggers the same warning:

const int j = 0;

int &const i = j; // C4227

As an exercise, decide which of the following lines fail to compile:

const int j = 0;

int const& i = j;

int const* p1 = j;

int const* p2 = &j;

int const* const p3 = &j;

int *const p4 = j;

int c = 5;

int const* p5 = &c;

The answer is :

const int j = 0;

int const& i = j;

int const* p1 = j; // Accepted by compilers that follow the pre-C++11 rule that a constant expression equal to 0 is a null pointer constant, giving p1 the value 0x00000000; a conforming C++11 or later compiler rejects it

int const* p2 = &j; // p2 is a pointer that stores the address of j

int const* const p3 = &j;

int *const p4 = j; // Error for the same reason as p1 on a conforming compiler; &j would not work either, because p4 points to a plain int and &j is a pointer to const int

int c = 5;

int const* p5 = &c; // Compiles fine
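
The const rules behind this quiz can be checked at compile time. The sketch below uses only standard `<type_traits>`; the alias names `PointerToConst` and `ConstPointer` are made up for this example.

```cpp
#include <type_traits>

// "int const*" (same as "const int*"): the pointee is const, the pointer can be reseated.
using PointerToConst = int const*;
// "int* const": the pointer itself is const, the pointee can be modified.
using ConstPointer = int* const;

static_assert(std::is_const<std::remove_pointer<PointerToConst>::type>::value,
              "the pointee of int const* is const");
static_assert(!std::is_const<PointerToConst>::value,
              "int const* itself is not const");
static_assert(std::is_const<ConstPointer>::value,
              "int* const itself is const");
static_assert(!std::is_const<std::remove_pointer<ConstPointer>::type>::value,
              "the pointee of int* const is not const");

// Adding const to the pointee is an implicit conversion; removing it is not.
static_assert(std::is_convertible<int*, const int*>::value,
              "int* converts to const int*");
static_assert(!std::is_convertible<const int*, int*>::value,
              "const int* does not convert to int*");
```

The last assertion is the reason an `int* const` can never be initialized from the address of a `const int`.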

Things to note when passing a shared_ptr

Reference: https://stackoverflow.com/questions/37610494/passing-const-shared-ptrt-versus-just-shared-ptrt-as-parameter

Look at the following two function signatures. What is the difference?

void doSomething(std::shared_ptr<T> o) {

}

// this signature seems to defeat the purpose of a shared pointer

void doSomething(const std::shared_ptr<T> &o) {

}

The second signature takes a const &, so the shared_ptr object is not copied and the reference count does not change. The reference count of a shared_ptr is updated atomically so that it stays correct across threads, and those atomic increments and decrements have a real cost. The second signature is therefore more efficient than the first.

Thinking further: passing a const & means the pointer itself is passed by reference. If every function in a chain of calls takes a reference to the same shared_ptr, and that shared_ptr is reset to nullptr somewhere along the way, every function in the chain is left holding an empty pointer. The idea behind shared_ptr is that several pointers share ownership of one object, and passing a const & appears to go against that design.

The right approach: when calling down into functions, pass the raw pointer or a reference to the object the smart pointer is managing. Rule F.7 of the C++ Core Guidelines recommends:
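
The effect on the reference count is easy to observe with `use_count()`. The two function names below are hypothetical and exist only for this demonstration.

```cpp
#include <cassert>
#include <memory>

// By value: the parameter is a new owner, so the count is one higher inside the call.
long CountByValue(std::shared_ptr<int> p)
{
    return p.use_count();
}

// By const reference: no copy is made, so the count is unchanged inside the call.
long CountByConstRef(const std::shared_ptr<int>& p)
{
    return p.use_count();
}
```

With a single owner outside the call, `CountByValue` reports 2 and `CountByConstRef` reports 1. The extra atomic increment and decrement in the by-value version is the cost discussed above.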

For general use, take T* or T& arguments rather than smart pointers

Passing a smart pointer transfers or shares ownership of the object, so a smart pointer should be passed only when ownership semantics are actually needed. Passing by smart pointer also restricts the use of a function to callers that use smart pointers. For example, a function that takes a widget as a parameter should accept any widget object, not just those whose lifetime is managed by a smart pointer. Look at the code below:

// How different function signatures treat ownership

// accepts any int*

void f(int*);

// can only accept ints for which you want to transfer ownership

void g(unique_ptr<int>);

// can only accept ints for which you are willing to share ownership

void g(shared_ptr<int>);

// doesn't change ownership, but requires a particular ownership of the caller

void h(const unique_ptr<int>&);

// accepts any int

void h(int&);

This is a bad example :

// callee, Bad signature design

void f(shared_ptr<widget>& w)

{

// ...

// Nothing here uses the lifetime features of the smart pointer, so it would be better to take a widget& directly

use(*w); // only use of w -- the lifetime is not used at all

// ...

};

// caller

// Create a shared_ptr and pass it in: works

shared_ptr<widget> my_widget = /* ... */;

f(my_widget);

// Create an ordinary object and pass it in: fails

widget stack_widget;

f(stack_widget); // error

This is a good example :

// callee

void f(widget& w)

{

// ...

use(w);

// ...

};

// caller

shared_ptr<widget> my_widget = /* ... */;

f(*my_widget); // dereference the pointer

widget stack_widget;

f(stack_widget); // ok -- now this works

Finally, to summarize the key point:

- If a function takes a parameter of a smart pointer type (not necessarily a std smart pointer; any class that implements operator-> and operator* counts), the smart pointer is copyable, and the function only uses operator*, operator->, or get(), then the parameter should be a T* or T& instead.

An abstract class cannot be instantiated, but a pointer to it can be declared

This works:

// in the header

class RenderCommand

{

static RendererAPI* s_RendererAPI;

};

// in the cpp file

RendererAPI* RenderCommand::s_RendererAPI = new OpenGLRendererAPI();

This version does not compile:

class RenderCommand

{

static RendererAPI s_RendererAPI; // error: an abstract class cannot be instantiated

};
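
The same idea can be written with a `std::unique_ptr` instead of a raw `new`. This is my own variation, not the code from the series. The classes below are trimmed stand-ins with a single hypothetical `Name()` method, and `std::is_abstract` confirms which class can be instantiated.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <type_traits>

// Trimmed stand-ins for the engine classes; Name() is a hypothetical method.
class RendererAPI
{
public:
    virtual ~RendererAPI() = default;
    virtual std::string Name() const = 0; // pure virtual makes the class abstract
};

class OpenGLRendererAPI : public RendererAPI
{
public:
    std::string Name() const override { return "OpenGL"; }
};

static_assert(std::is_abstract<RendererAPI>::value, "cannot create a RendererAPI object");
static_assert(!std::is_abstract<OpenGLRendererAPI>::value, "the concrete class can be created");

class RenderCommand
{
public:
    static std::string GetAPIName() { return s_RendererAPI->Name(); }
private:
    // A pointer to the abstract base is fine; it owns a concrete object.
    static std::unique_ptr<RendererAPI> s_RendererAPI;
};

std::unique_ptr<RendererAPI> RenderCommand::s_RendererAPI(new OpenGLRendererAPI());
```

The virtual destructor matters here: the object is deleted through a pointer to the base class.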

Public inheritance and private inheritance

Reference: https://www.bogotobogo.com/cplusplus/private_inheritance.php#:~:text=Private%20Inheritance%20is%20one%20of,interface%20of%20the%20derived%20object.

Earlier, when writing the class inheritance, I left out public, so it became private inheritance by default, which broke this line:

std::shared_ptr<Texture2D> Texture2D::Create(const std::string& path)

{

...

return std::make_shared<OpenGLTexture2D>(path); // Compile error: OpenGLTexture2D cannot be converted to Texture2D

}

Public inheritance models an is-a relationship, while private inheritance models a has-a relationship. The code is as follows:

// Example 1: private inheritance is not an is-a relationship

class Person {

};

class Student:private Person {

}; // private

void eat(const Person& p){

} // anyone can eat

void study(const Student& s){

} // only students study

int main()

{

Person p; // p is a Person

Student s; // s is a Student

eat(p); // fine, p is a Person

eat(s); // error! s isn't a Person

return 0;

}

// Example 2: a use case for private inheritance

#include <iostream>

using namespace std;

class Engine

{

public:

Engine(int nc){

cylinder = nc;

}

void start() {

cout << getCylinder() <<" cylinder engine started" << endl;

};

int getCylinder() {

return cylinder;

}

private:

int cylinder;

};

class Car : private Engine

{

// Car has-a Engine

public:

Car(int nc = 4) : Engine(nc) {

}

void start() {

// This looks odd at first because getCylinder is not a static function: Engine::getCylinder() is a qualified call to the inherited member function on this object

cout << "car with " << Engine::getCylinder() <<

" cylinder engine started" << endl;

Engine:: start();

}

};

int main( )

{

Car c(8);

c.start();

return 0;

}

// Output:

// car with 8 cylinder engine started

// 8 cylinder engine started
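
Coming back to the Texture2D::Create error at the start of this section: the conversion that failed can be checked at compile time. The class names below are stand-ins I made up for this check; only standard `<type_traits>` and `<memory>` are used.

```cpp
#include <cassert>
#include <memory>
#include <type_traits>

struct Texture2D { virtual ~Texture2D() = default; };

class PublicTexture : public Texture2D {};
class PrivateTexture : Texture2D {}; // "class" defaults to private inheritance

// Both really derive from Texture2D...
static_assert(std::is_base_of<Texture2D, PublicTexture>::value, "public base");
static_assert(std::is_base_of<Texture2D, PrivateTexture>::value, "private base is still a base");

// ...but only public inheritance allows the implicit derived-to-base conversion.
static_assert(std::is_convertible<PublicTexture*, Texture2D*>::value,
              "public: derived pointer converts to base pointer");
static_assert(!std::is_convertible<PrivateTexture*, Texture2D*>::value,
              "private: conversion is not accessible from outside");

// The same holds for shared_ptr, which is what the return statement needed.
static_assert(std::is_convertible<std::shared_ptr<PublicTexture>,
                                  std::shared_ptr<Texture2D>>::value,
              "public: shared_ptr converts");
static_assert(!std::is_convertible<std::shared_ptr<PrivateTexture>,
                                   std::shared_ptr<Texture2D>>::value,
              "private: shared_ptr does not convert");
```

This is why forgetting `public` surfaced as a conversion error on the return statement rather than on the class definition itself.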

Three Timestep Systems

Reference: https://gafferongames.com/post/fix_your_timestep/

Reference: https://johnaustin.io/articles/2019/fix-your-unity-timestep

Reference: https://www.youtube.com/watch?v=ReFmKHfSOg0&ab_channel=InfallibleCode

Fixed delta time

This is the simplest approach. The code is as follows:

double t = 0.0;

double dt = 1.0 / 60.0;

while (!quit)

{

integrate(state, t, dt);

render(state);

t += dt;

}

This code is typically used with VSync enabled, because the frequency of the render loop is then known in advance. With a 60 Hz display, for example, you can hard-code the step like this. It is still not a good approach: if the CPU cannot keep up with the display refresh rate, the loop runs fewer than 60 times per second and the game logic slows down. Without VSync the loop rate is no longer tied to the display, which causes problems as well.

Variable delta time

The following code resembles how Unity's Update function is driven. Here delta time is the time that elapsed between the previous frame and the current one.

double t = 0.0;

double currentTime = hires_time_in_seconds();

while (!quit )

{

double newTime = hires_time_in_seconds();

double frameTime = newTime - currentTime; // equivalent to Unity's Time.deltaTime

currentTime = newTime;

integrate(state, t, frameTime);

t += frameTime;

render(state);

}

With this approach, though, a slow machine produces delta times that are not only large but also different every frame. A physics simulation needs a stable and reasonably small delta time; only then can it advance the physical state smoothly.

Semi-fixed timestep

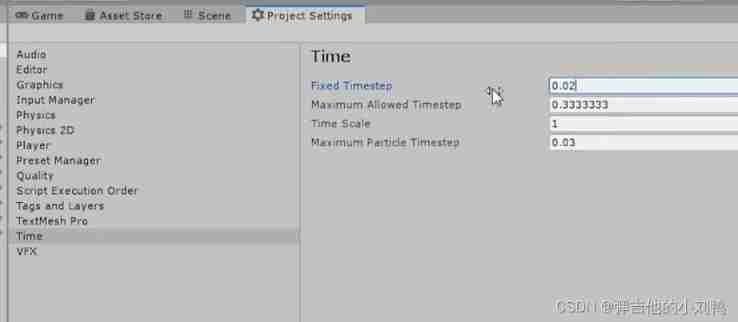

This is similar to Unity's FixedUpdate function. Unity's Update runs once per frame, while FixedUpdate can run zero, one, or several times per frame, depending on Unity's Time settings and the game's frame rate, as shown in the figure.

FixedUpdate is used when you need to have something persistently cleanly applying at the same rate, and that’s usually physics, because physics needs to calculate stuff using time as an actual parameter.

The basic idea: when the duration of a frame (DeltaTime) exceeds the configured maximum, process it as several steps within that frame, much like inserting extra frames. DeltaTime is split into several smaller DeltaTimes. The relevant code is as follows:

double t = 0.0;

double dt = 1 / 60.0; // constant: the maximum allowed delta time per step

double currentTime = hires_time_in_seconds();

while (!quit)

{

// Measure the delta time of the last frame

double newTime = hires_time_in_seconds();

double frameTime = newTime - currentTime;

currentTime = newTime;

// Note this loop: when frameTime is greater than 1/60, it runs additional iterations

// This splits frameTime into several timesteps, all executed within the current frame

while (frameTime > 0.0)

{

double deltaTime = min(frameTime, dt); // delta time is not allowed to exceed 1/60

integrate(state, t, deltaTime);

frameTime -= deltaTime;

// t tracks the total time simulated so far

t += deltaTime;

}

render(state);

}

This approach has a drawback too: if the work inside integrate is CPU-heavy, it is easy to fall into the spiral of death. For example, if integrate in the code above takes longer in real time than the timestep it simulates, each frame adds more time than the loop can consume, and the simulation never catches up.

About Spiral of Death

What is the spiral of death? It’s what happens when your physics simulation can’t keep up with the steps it’s asked to take. For example, if your simulation is told: “OK, please simulate X seconds worth of physics” and if it takes Y seconds of real time to do so where Y > X, then it doesn’t take Einstein to realize that over time your simulation falls behind. It’s called the spiral of death because being behind causes your update to simulate more steps to catch up, which causes you to fall further behind, which causes you to simulate more steps…

The new code looks like this :

double t = 0.0;

const double dt = 0.01;

double currentTime = hires_time_in_seconds();

double accumulator = 0.0;

while (!quit)

{

double newTime = hires_time_in_seconds();

double frameTime = newTime - currentTime;

currentTime = newTime;

accumulator += frameTime;

while (accumulator >= dt)

{

integrate(state, t, dt);

accumulator -= dt;

t += dt;

}

render(state);

}

It feels a bit complicated. I will study this further when I get to the physics engine; the remaining material should be in the later part of the referenced article. To be continued.
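
As a note for later, here is the accumulator loop above packaged as a small class, plus a cap on the frame time so that one very slow frame cannot queue an unbounded number of steps. `FixedStepper`, `Advance`, and the cap value are my own hypothetical names and choices, not something taken from the engine.

```cpp
#include <cassert>

// Accumulates real frame time and hands it out in fixed-size simulation steps.
class FixedStepper
{
public:
    FixedStepper(double dt, double maxFrameTime)
        : m_Dt(dt), m_MaxFrameTime(maxFrameTime)
    {
        assert(dt > 0.0 && maxFrameTime >= dt);
    }

    // Adds one frame's elapsed time and returns how many fixed steps to run this frame.
    int Advance(double frameTime)
    {
        if (frameTime > m_MaxFrameTime)
            frameTime = m_MaxFrameTime; // drop time instead of spiralling

        m_Accumulator += frameTime;

        int steps = 0;
        while (m_Accumulator >= m_Dt)
        {
            // the caller would run integrate(state, t, dt) once per counted step
            m_Accumulator -= m_Dt;
            m_Time += m_Dt;
            ++steps;
        }
        return steps;
    }

    double SimulatedTime() const { return m_Time; }
    double Leftover() const { return m_Accumulator; }

private:
    double m_Dt;
    double m_MaxFrameTime;
    double m_Accumulator = 0.0;
    double m_Time = 0.0;
};
```

With dt = 0.25 and a cap of 1.0, a 10-second hitch produces only 4 steps. The simulation loses time in that frame, but it cannot fall further and further behind.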

Finding line breaks in C++

Be careful which slash you write here: escape sequences use the backslash.

// Note: the escape sequence is \r\n, which is the line ending on Windows; \r moves the cursor to the start of the line, \n moves it to the next line

sizeof("\r\n");// 3

sizeof("/r/n");// 5

However, the string I actually got contained \n\n, which also appears to work as line breaks.

Misusing std::string::find_first_of

size_t newP2 = (string("fdasftvv")).find_first_of("#type ", 0); // surprisingly, newP2 is 5

I assumed it searched for the whole string, but it actually searches for any single character of the argument. Given "#type ", it looks in "fdasftvv" for the first occurrence of any of the six characters #, t, y, p, e, and space (the terminating null of the const char* does not count), and finds t at index 5.

The function that should be used is string.find():

string str = "geeksforgeeks a computer science";

string str1 = "geeks";

// Find first occurrence of "geeks"

size_t found = str.find(str1);

if (found != string::npos)

cout << "First occurrence is " << found << endl;
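
The difference between the two calls fits in a short check. The wrapper names below are hypothetical and exist only so the two behaviors sit side by side.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Index of the first character of `text` that appears anywhere in `chars`.
std::size_t FirstOfAnyChar(const std::string& text, const std::string& chars)
{
    return text.find_first_of(chars);
}

// Index where the whole `token` first occurs in `text`.
std::size_t FirstSubstring(const std::string& text, const std::string& token)
{
    return text.find(token);
}
```

For "fdasftvv" and "#type ", the first function returns 5 (the letter t), while the second returns std::string::npos because the marker never occurs as a whole.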

Using std::make_shared

This is how I wrote it before, and it failed to compile:

{

std::shared_ptr<Shader> Shader::Create(const std::string& path)

{

RendererAPI::APIType type = Renderer::GetAPI();

switch (type)

{

case RendererAPI::APIType::OpenGL:

return std::make_shared<OpenGLShader>(new OpenGLShader(path));

...

}

The call was simply written wrong. make_shared forwards its arguments to the constructor, so it needs to be:

return std::make_shared<OpenGLShader>(path);
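
For comparison, here are the two valid ways to build the pointer, using trimmed stand-in classes (the `Path()` accessor and both `Create...` function names are hypothetical). `std::make_shared<T>(args...)` takes the constructor arguments, while `std::shared_ptr<T>(new T(args...))` takes an already created object.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Trimmed stand-ins for the engine classes.
struct Shader
{
    virtual ~Shader() = default;
    virtual const std::string& Path() const = 0;
};

class OpenGLShader : public Shader
{
public:
    explicit OpenGLShader(const std::string& path) : m_Path(path) {}
    const std::string& Path() const override { return m_Path; }
private:
    std::string m_Path;
};

// make_shared forwards its arguments to the OpenGLShader constructor.
std::shared_ptr<Shader> CreateWithMakeShared(const std::string& path)
{
    return std::make_shared<OpenGLShader>(path);
}

// The shared_ptr constructor takes ownership of a pointer created with new.
std::shared_ptr<Shader> CreateFromNew(const std::string& path)
{
    return std::shared_ptr<OpenGLShader>(new OpenGLShader(path));
}
```

The broken version mixed the two forms: it asked make_shared to construct an OpenGLShader from an OpenGLShader*, and no such constructor exists.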

边栏推荐

- A complete tutorial for getting started with redis: RDB persistence

- 又一百万量子比特!以色列光量子初创公司完成1500万美元融资

- HDU 4337 King Arthur&#39;s Knights 它输出一个哈密顿电路

- Install redis from zero

- 尚硅谷JVM-第一章 类加载子系统

- 掘金量化:通过history方法获取数据,和新浪财经,雪球同用等比复权因子。不同于同花顺

- unrecognized selector sent to instance 0x10b34e810

- How to write test cases for test coupons?

- QT常见概念-1

- 杰理之发射端在接收端关机之后假死机【篇】

猜你喜欢

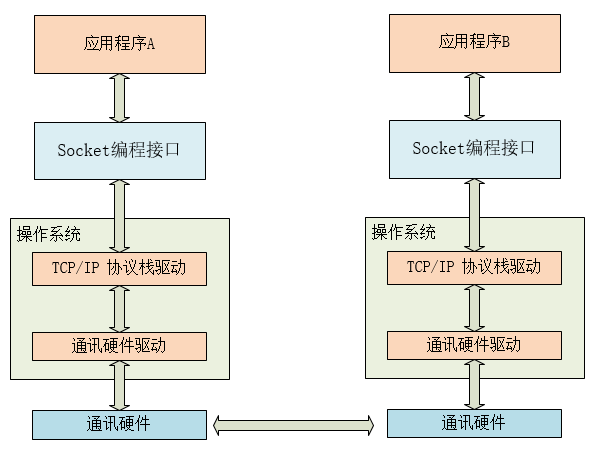

【Socket】①Socket技术概述

The 8 element positioning methods of selenium that you have to know are simple and practical

Classify the features of pictures with full connection +softmax

Redis introduction complete tutorial: client case analysis

![[secretly kill little partner pytorch20 days] - [Day1] - [example of structured data modeling process]](/img/f0/79e7915ba3ef32aa21c4a1d5f486bd.jpg)

[secretly kill little partner pytorch20 days] - [Day1] - [example of structured data modeling process]

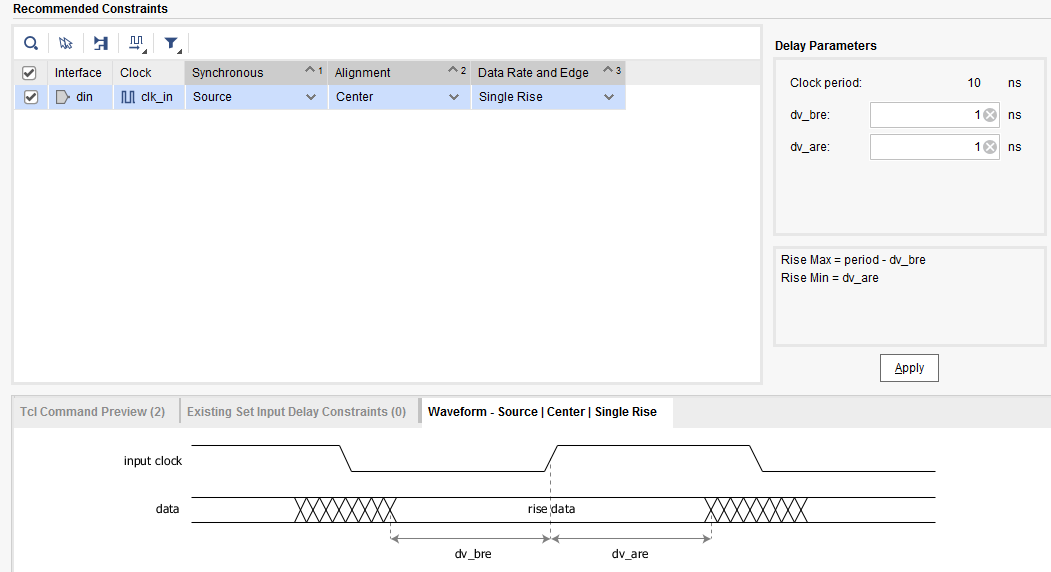

input_delay

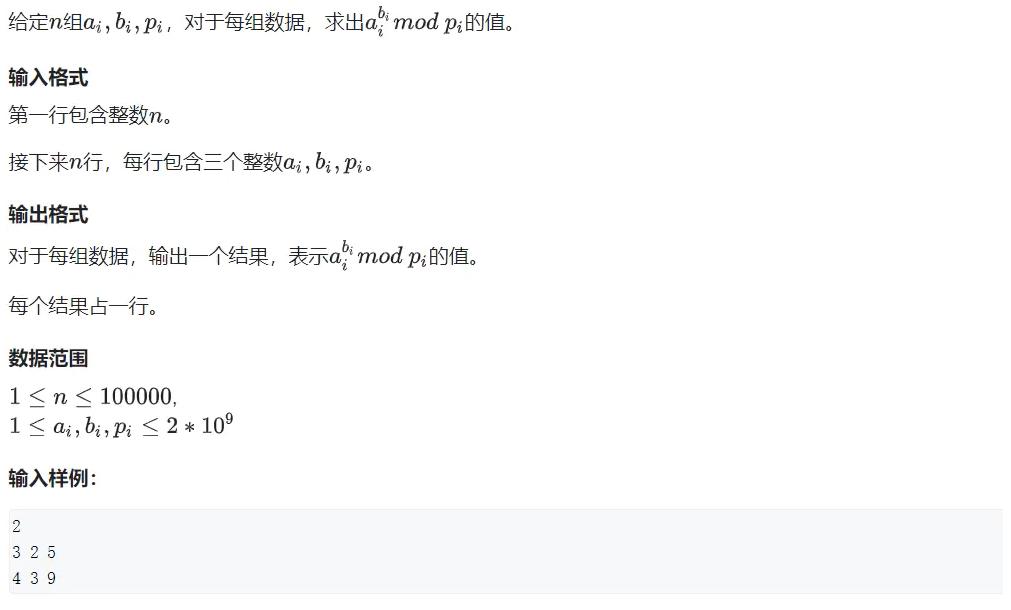

Number theory --- fast power, fast power inverse element

Redis getting started complete tutorial: replication topology

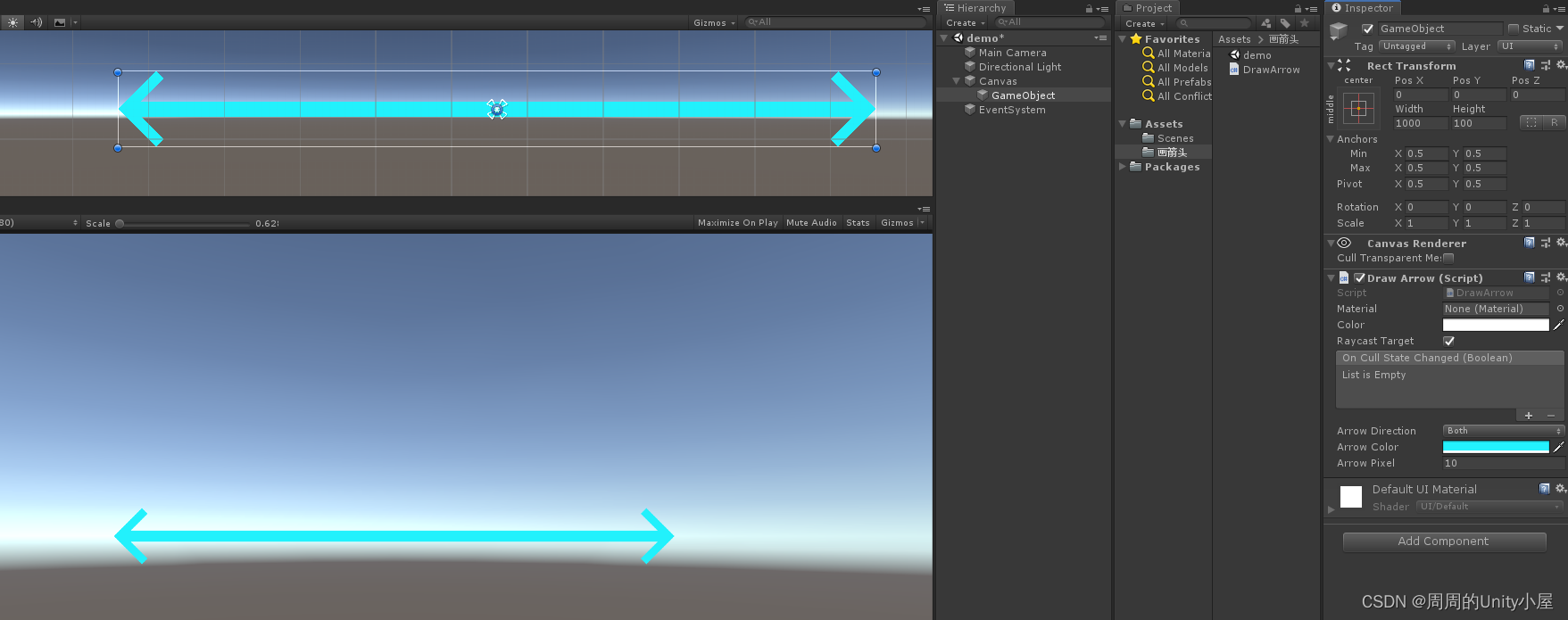

Unity uses maskablegraphic to draw a line with an arrow

Left path cloud recursion + dynamic planning

随机推荐

INS/GPS组合导航类型简介

从零安装Redis

Redis入门完整教程:客户端常见异常

Kubernetes源码分析(二)----资源Resource

What management points should be paid attention to when implementing MES management system

Read fast RCNN in one article

leetcode-02(链表题)

杰理之开启经典蓝牙 HID 手机的显示图标为键盘设置【篇】

Data analysis from the perspective of control theory

Oauth2协议中如何对accessToken进行校验

Redis入門完整教程:問題定比特與優化

tensorboard的使用

杰理之开 BLE 退出蓝牙模式卡机问题【篇】

Redis入门完整教程:复制拓扑

新标杆!智慧化社会治理

Use of promise in ES6

Qpushbutton- "function refinement"

杰理之播内置 flash 提示音控制播放暂停【篇】

左程云 递归+动态规划

Household appliance industry under the "retail is king": what is the industry consensus?