
Building a microservice framework, starting from 1.5: log tracing with traceId

2022-07-07 03:06:00 【Li ziti】


Originally published on the WeChat official account Wukong Chat Architecture: https://mp.weixin.qq.com/s/SDxH9k96aP5-X12yFtus0w

Hello, I'm Wukong.

Preface

I have recently been building a basic project framework on top of the Spring Cloud microservice stack.

If Spring Cloud itself counts as 1 and the common basic components such as Swagger and Logback count as 0.5, then I am assembling a complete microservice project framework on top of that 1.5 baseline.

Why reinvent the wheel? Aren't the ready-made project frameworks out there good enough?

Because our project team is not allowed to use external ready-made frameworks such as Ruoyi. Our project also has its own particular requirements, and we prefer technologies we already know well, so building our own wheel is a reasonable choice.

Core functions

The framework needs to include the following core functions:

- Split into multiple microservice modules, with a demo microservice module extracted for extension: done

- Extract the core framework modules: done

- Registry center, Eureka: done

- Remote invocation, OpenFeign: done

- Logging with Logback, including traceId tracing: done

- Swagger API documentation: done

- Shared configuration files: done

- Log retrieval, ELK Stack: done

- Custom Starter: to be decided

- Cache integration, Redis with Redis Sentinel for high availability: done

- Database integration, MySQL with MySQL high availability: done

- MyBatis-Plus integration: done

- Link tracing component: to be decided

- Monitoring: to be decided

- Utility classes: to be developed

- Gateway: technology selection to be decided

- Audit logs written to ES: to be decided

- Distributed file system: to be decided

- Scheduled tasks: to be decided

- And so on

This article is about log link tracing.

One: Pain points

Pain point one: multiple logs within one process cannot be traced

Suppose a single request calls a dozen or so methods on the back end and prints a dozen or so log lines. Nothing ties those log lines together.

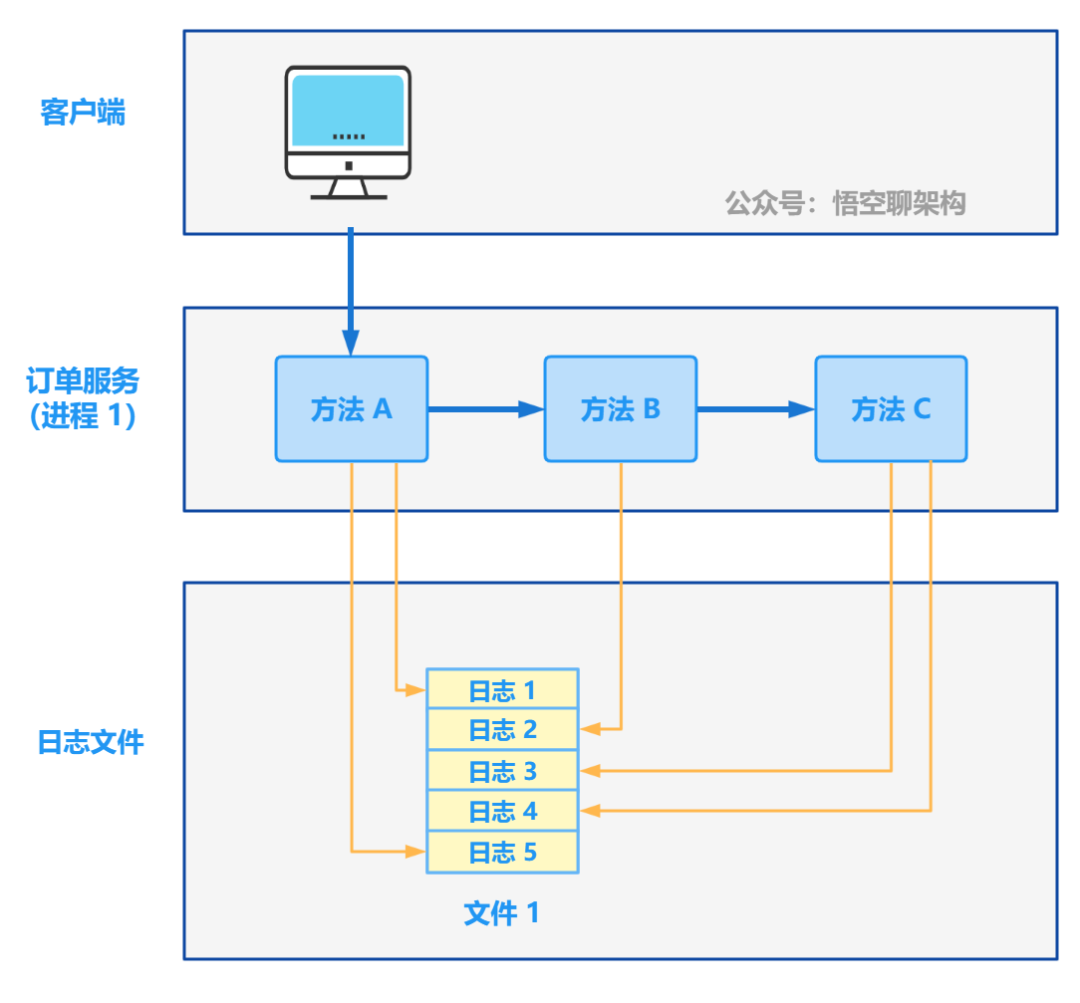

As the figure shows, the client calls the order service, where method A calls method B and method B calls method C.

Method A prints the first and fifth logs, method B prints the second, and method C prints the third and fourth. Nothing links these 5 logs except that they are printed in chronological order, and as soon as other requests run concurrently, their logs interleave and break that continuity.

Pain point two: how to correlate logs across services

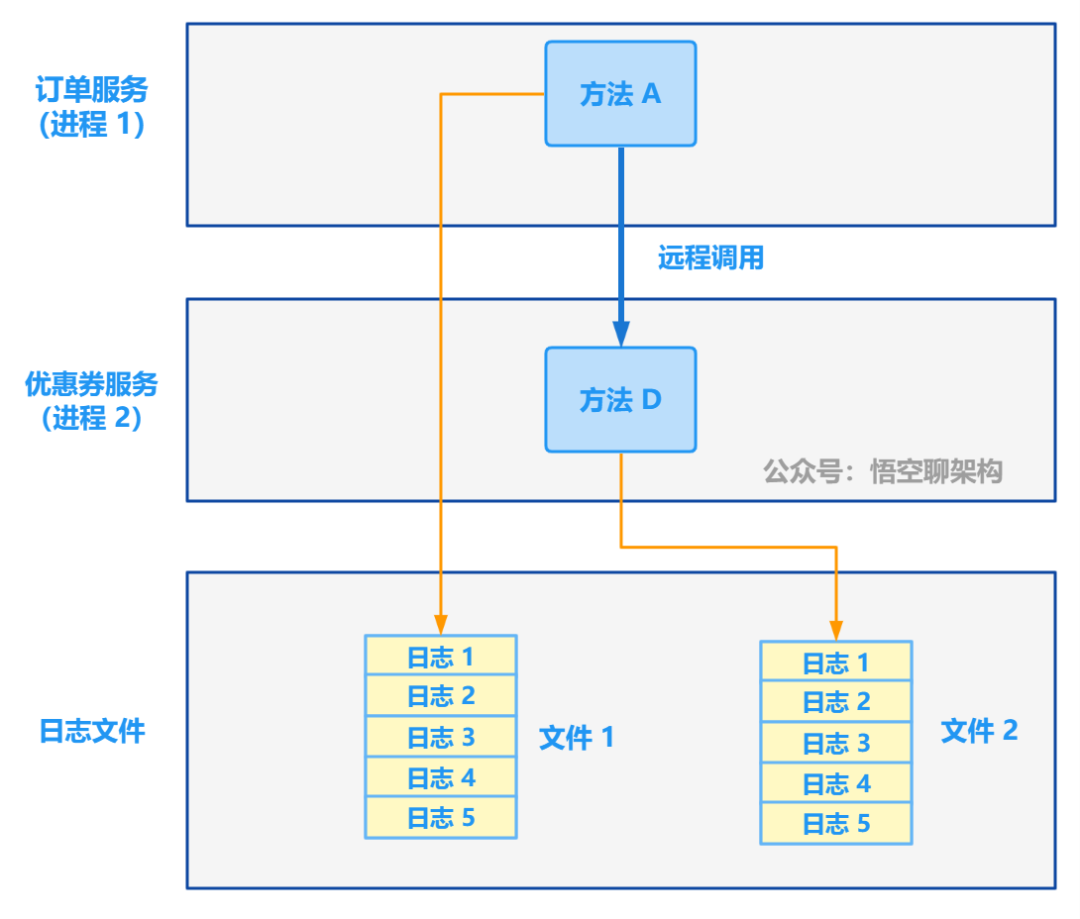

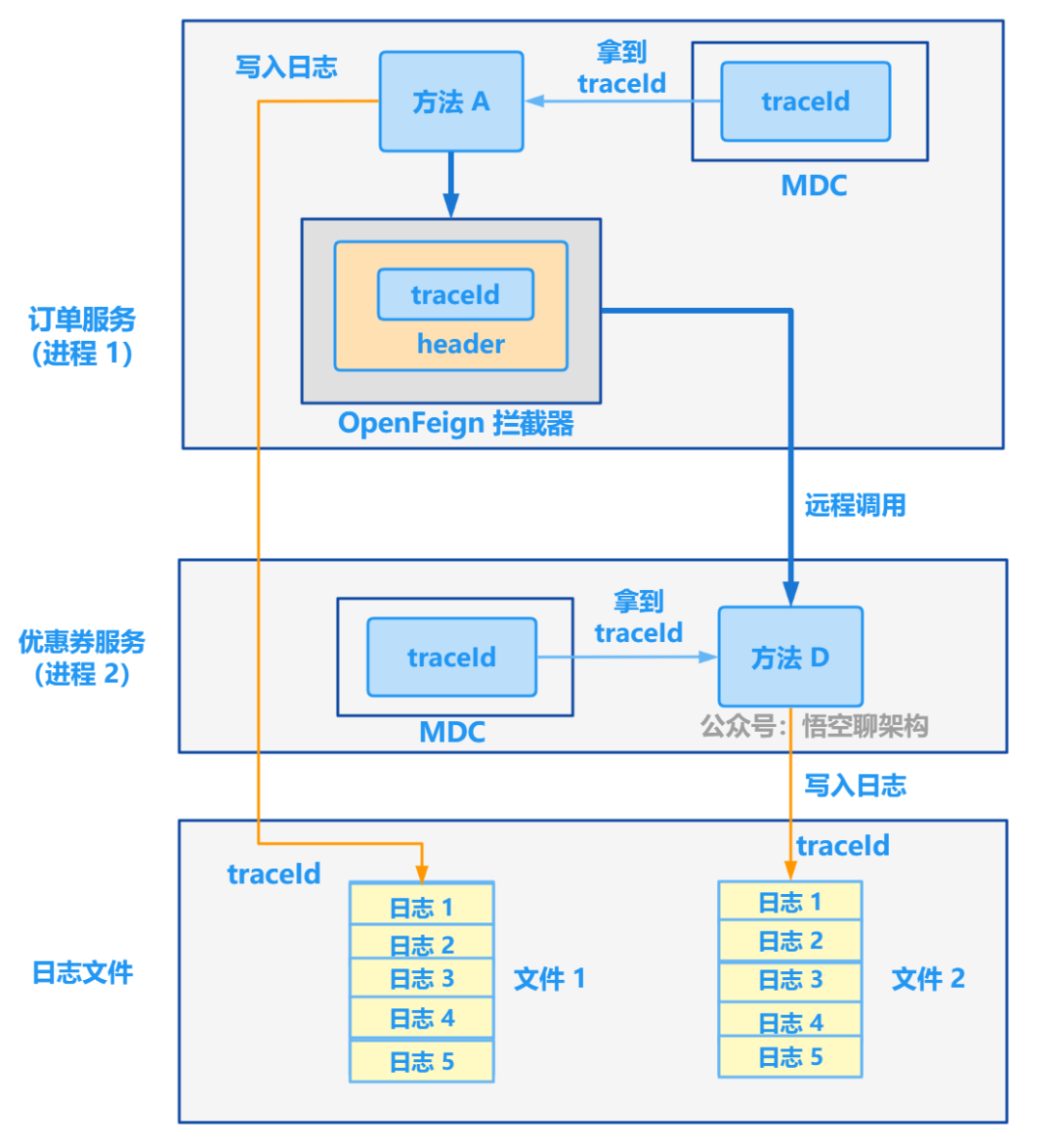

Every microservice records its own logs for the process, so how can logs be correlated across processes?

As the figure shows, the order service and the coupon service are two microservices deployed on two machines, and method A of the order service remotely calls method D of the coupon service.

Method A writes 5 logs to log file 1, and method D writes 5 logs to log file 2, but these 10 logs cannot be associated with each other.

Pain point three: how to correlate logs across threads

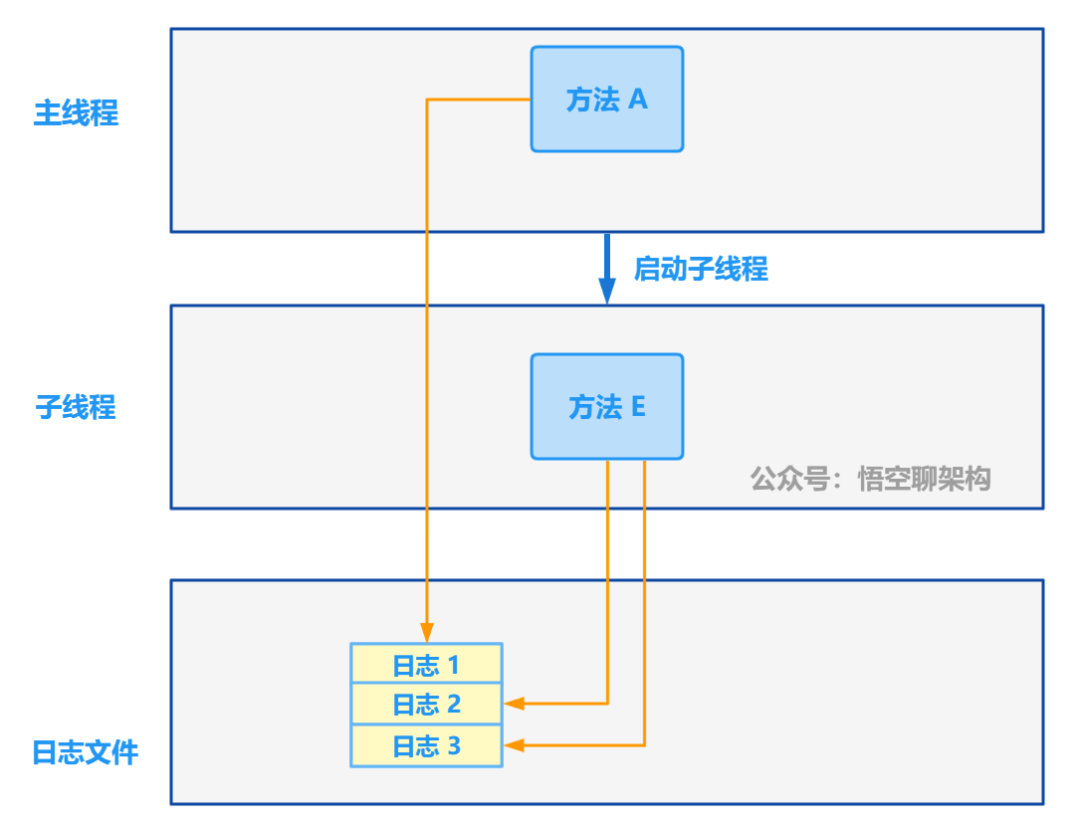

How can the logs of the main thread and a child thread be correlated?

As the figure shows, method A on the main thread starts a child thread, and the child thread executes method E.

Method A prints the first log, and method E on the child thread prints the second and third logs.
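To make the cross-thread problem concrete, here is a minimal sketch using only the JDK. It assumes the traceId lives in a plain `ThreadLocal` (the class name `ThreadContextDemo` and its methods are hypothetical, not part of the project). A plain child thread sees no traceId, while a child that is explicitly handed the parent's value does. Copying the value by hand is just one common approach and is not necessarily the fix the framework will adopt later.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical demo class: a ThreadLocal stands in for the per-thread log context.
class ThreadContextDemo {
    static final ThreadLocal<String> TRACE_ID = new ThreadLocal<>();

    // Runs the task on a new thread and waits for it to finish.
    private static void runAndWait(Runnable task) {
        Thread child = new Thread(task);
        child.start();
        try {
            child.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    // A plain child thread has its own empty context, so it sees null.
    static String seenByPlainChild() {
        AtomicReference<String> seen = new AtomicReference<>();
        runAndWait(() -> seen.set(TRACE_ID.get()));
        return seen.get();
    }

    // Captures the parent's traceId and re-installs it inside the child thread.
    static String seenByWrappedChild() {
        String parentTraceId = TRACE_ID.get(); // read on the parent thread
        AtomicReference<String> seen = new AtomicReference<>();
        runAndWait(() -> {
            TRACE_ID.set(parentTraceId);
            try {
                seen.set(TRACE_ID.get());
            } finally {
                TRACE_ID.remove(); // clean up so a reused thread keeps no stale id
            }
        });
        return seen.get();
    }
}
```

With `TRACE_ID` set to some value on the calling thread, `seenByPlainChild()` returns `null` and `seenByWrappedChild()` returns the parent's value.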

Pain point four: when a third party calls our service, how do we trace it?

This article tackles the first and second pain points. The project does not use multithreading yet and no third party calls our service for now, so the third and fourth pain points will be addressed later.

Two: Solutions

1.1 Candidate solutions

① Use SkyWalking's traceId for link tracing, or the Sleuth + Zipkin combination.

② Use the traceId from Elastic APM for link tracing.

③ The MDC approach: generate your own traceId and put it into the MDC.

Early in a project, it is best not to introduce too much middleware. Try a simple, workable approach first, so here we choose the third option, MDC.

1.2 The MDC approach

MDC (Mapped Diagnostic Context) stores diagnostic context data bound to the current thread. Logging frameworks such as Log4j and Logback provide it: each thread has its own MDC, and any code running on that thread can read and write the values stored in it.
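The idea of a per-thread context can be sketched with nothing but the JDK. The class below is an illustrative stand-in, not the SLF4J or Logback implementation: `MiniMdc` and its `format` method are hypothetical names. It keeps one map per thread and prepends the stored traceId to each message, roughly what a `%X{traceId}` entry in a log pattern does.

```java
import java.util.HashMap;
import java.util.Map;

// Illustrative stand-in for a logging MDC; the real one lives in the logging framework.
class MiniMdc {
    // Each thread gets its own private map, so values never mix between threads.
    private static final ThreadLocal<Map<String, String>> CONTEXT =
            ThreadLocal.withInitial(HashMap::new);

    static void put(String key, String value) {
        CONTEXT.get().put(key, value);
    }

    static String get(String key) {
        return CONTEXT.get().get(key);
    }

    static void remove(String key) {
        CONTEXT.get().remove(key);
    }

    // Mimics a "[%X{traceId}] %msg" pattern: a missing value renders as an empty string.
    static String format(String message) {
        String traceId = get("traceId");
        return "[" + (traceId == null ? "" : traceId) + "] " + message;
    }

    public static void main(String[] args) throws InterruptedException {
        put("traceId", "abc-123");
        System.out.println(format("method A starts")); // carries abc-123
        System.out.println(format("method B runs"));   // same thread, same traceId
        Thread other = new Thread(() -> System.out.println(format("another thread")));
        other.start();                                  // different thread: empty traceId
        other.join();
        remove("traceId");
    }
}
```

Because the map is thread-local, every method called on the request thread picks up the same traceId without passing it as a parameter, which is exactly what the first pain point needs.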

Three: Principle and practice

2.1 Tracing multiple logs of one request

Let's start with the first pain point: how to tie together the multiple logs of a single request.

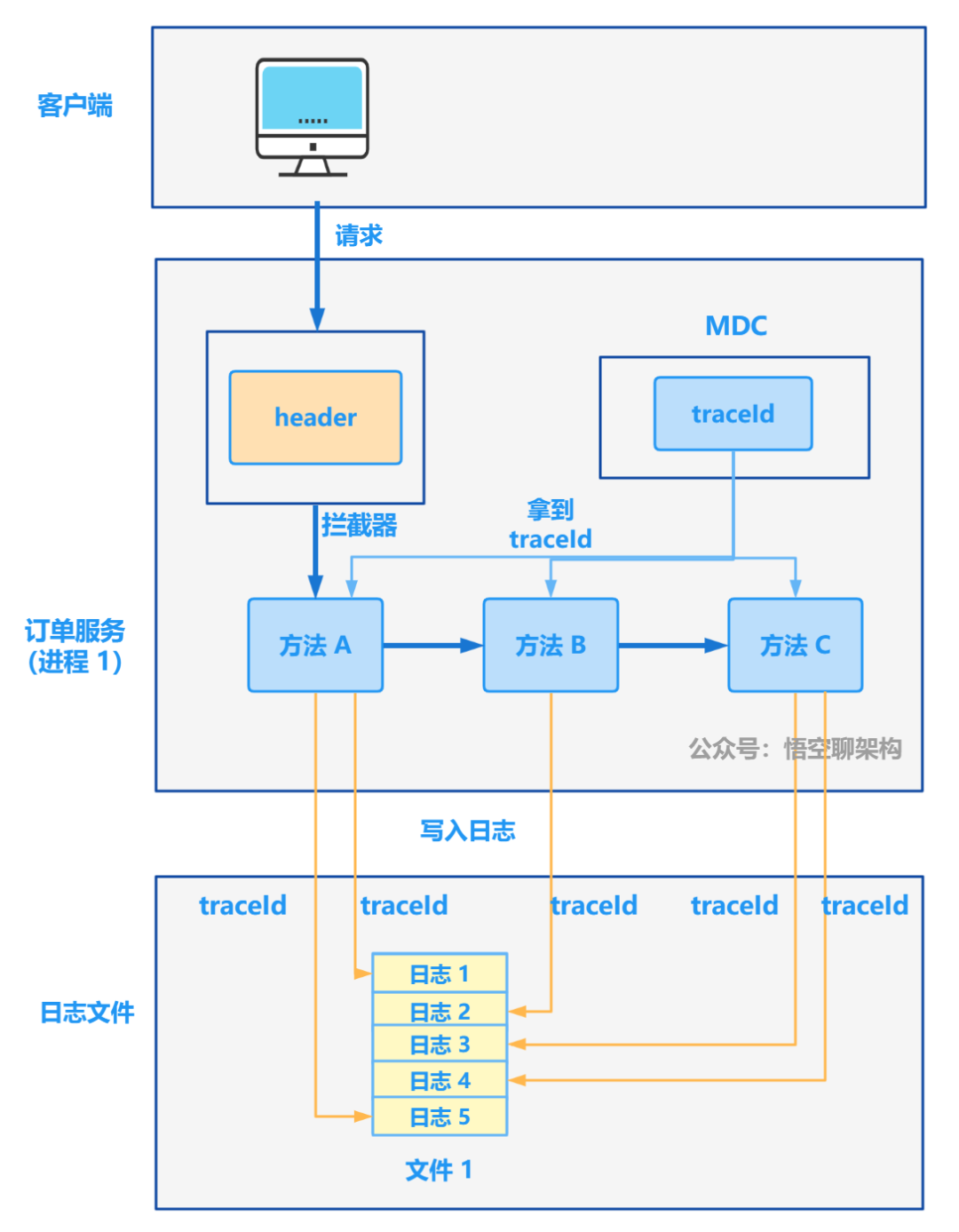

The figure shows how this scheme works:

(1) In the Logback configuration file, add %X{traceId} to the log pattern:

%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %X{traceId} %-5level %logger - %msg%n

(2) Define a custom interceptor that reads the traceId from the request header. If it is present, put it into the MDC; otherwise generate a UUID, use it as the traceId, and put that into the MDC.

(3) Register the interceptor.
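The decision in step (2) can be isolated into a small helper that needs no Spring or servlet classes. This is only a sketch: the class name `TraceIds` and the method `resolve` are hypothetical, and treating a blank header the same as a missing one is an assumption.

```java
import java.util.UUID;

// Hypothetical helper that isolates the "reuse or generate" decision from step (2).
class TraceIds {
    // Reuse the caller's traceId when the header carries one; otherwise start a new trace.
    static String resolve(String headerValue) {
        if (headerValue == null || headerValue.trim().isEmpty()) {
            return UUID.randomUUID().toString();
        }
        return headerValue;
    }
}
```

Reusing an incoming traceId is what later allows a downstream service to log under the same id as its caller.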

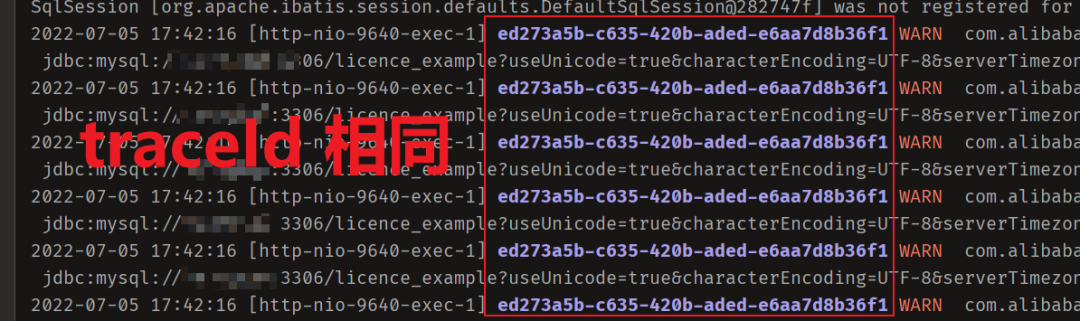

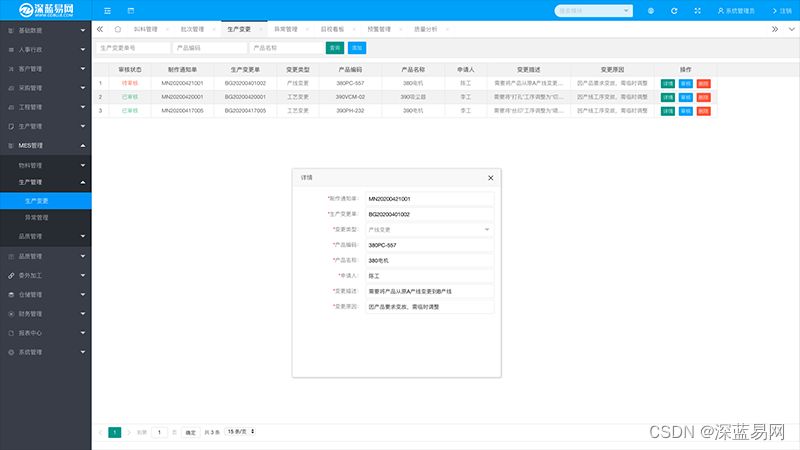

Now every log line automatically includes the traceId. As the screenshot shows, the multiple logs of one request share the same traceId.

Sample code

Interceptor code :

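    // javax.servlet assumes Spring Boot 2.x; on Spring Boot 3 the package is jakarta.servlet.
    import javax.servlet.http.HttpServletRequest;
    import javax.servlet.http.HttpServletResponse;
    import java.util.UUID;

    import org.slf4j.MDC;
    import org.springframework.stereotype.Component;
    import org.springframework.util.StringUtils;
    import org.springframework.web.servlet.HandlerInterceptor;

    /**
     * Puts a traceId into the MDC for every incoming request.
     *
     * @author www.passjava.cn, official account: Wukong chat structure
     * @date 2022-07-05
     */
    @Component
    public class LogInterceptor implements HandlerInterceptor {

        private static final String TRACE_ID = "traceId";

        @Override
        public boolean preHandle(HttpServletRequest request, HttpServletResponse response, Object handler) {
            // Reuse the caller's traceId if the header carries one; otherwise start a new trace.
            String traceId = request.getHeader(TRACE_ID);
            if (!StringUtils.hasText(traceId)) {
                traceId = UUID.randomUUID().toString();
            }
            MDC.put(TRACE_ID, traceId);
            return true;
        }

        @Override
        public void afterCompletion(HttpServletRequest request, HttpServletResponse response, Object handler, Exception ex) {
            // afterCompletion also runs when the handler throws (postHandle does not),
            // so the pooled worker thread never keeps a stale traceId.
            MDC.remove(TRACE_ID);
        }
    }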

Configure interceptors :

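    import javax.annotation.Resource;

    import org.springframework.context.annotation.Configuration;
    import org.springframework.web.servlet.config.annotation.InterceptorRegistry;
    import org.springframework.web.servlet.config.annotation.WebMvcConfigurer;

    /**
     * Registers LogInterceptor with Spring MVC.
     *
     * @author www.passjava.cn, official account: Wukong chat structure
     * @date 2022-07-05
     */
    @Configuration
    public class InterceptorConfig implements WebMvcConfigurer {

        @Resource
        private LogInterceptor logInterceptor;

        @Override
        public void addInterceptors(InterceptorRegistry registry) {
            // Apply the interceptor to every request path.
            registry.addInterceptor(logInterceptor).addPathPatterns("/**");
        }
    }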

2.2 Tracing logs across services

The schematic of the solution is shown below:

When the order service calls the coupon service remotely, an OpenFeign interceptor is needed. The interceptor adds the traceId to the header of the outgoing request, so that the coupon service can read this request's traceId from the header when it is called.

The code is as follows:

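    import feign.RequestInterceptor;
    import feign.RequestTemplate;
    import org.slf4j.MDC;
    import org.springframework.stereotype.Component;

    /**
     * Copies the current traceId from the MDC into the header of every outgoing Feign request.
     *
     * @author www.passjava.cn, official account: Wukong chat structure
     * @date 2022-07-05
     */
    @Component
    public class FeignInterceptor implements RequestInterceptor {

        private static final String TRACE_ID = "traceId";

        @Override
        public void apply(RequestTemplate requestTemplate) {
            String traceId = MDC.get(TRACE_ID);
            // Skip the header when no trace is active, for example outside a web request.
            if (traceId != null) {
                requestTemplate.header(TRACE_ID, traceId);
            }
        }
    }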
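The header hand-off between the two services can be simulated without Spring or Feign. In the sketch below, a `Map` stands in for the HTTP headers and a `ThreadLocal` stands in for the MDC; the class `TracePropagationDemo` and its method names are hypothetical and only illustrate the flow. The caller side copies its current traceId into the outgoing headers, and the callee side reuses it, or generates a new one if the header is missing.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Hypothetical simulation of the caller and callee sides of one remote call.
class TracePropagationDemo {
    static final String TRACE_ID = "traceId";

    // Stand-in for the MDC of the service that is currently handling a request.
    static final ThreadLocal<String> CURRENT = new ThreadLocal<>();

    // Caller side (the Feign interceptor's role): copy the current traceId into the headers.
    static Map<String, String> outgoingHeaders() {
        Map<String, String> headers = new HashMap<>();
        String traceId = CURRENT.get();
        if (traceId != null) {
            headers.put(TRACE_ID, traceId);
        }
        return headers;
    }

    // Callee side (the MVC interceptor's role): reuse the incoming traceId or create one.
    static String handleIncoming(Map<String, String> headers) {
        String traceId = headers.get(TRACE_ID);
        if (traceId == null || traceId.isEmpty()) {
            traceId = UUID.randomUUID().toString();
        }
        return traceId; // the id the callee would log under
    }
}
```

If the caller's context holds a traceId, `handleIncoming(outgoingHeaders())` returns that same value, which is why both services end up logging the same id.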

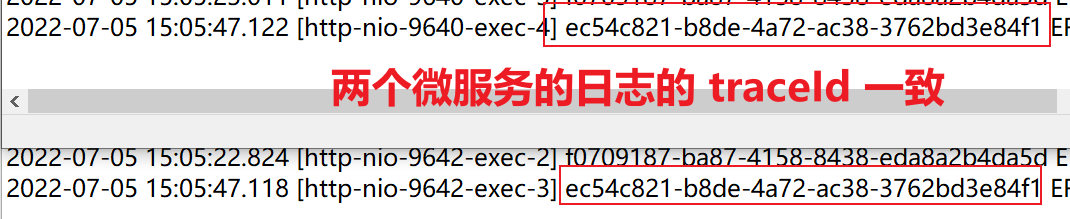

In the logs printed by the two microservices, the traceId values match.

Of course, these logs are also imported into Elasticsearch, so searching for a traceId in Kibana's visual interface strings together the whole call chain.
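What a traceId search buys you can be sketched in a few lines of plain Java. This is not how Elasticsearch or Kibana work internally; it only mimics the result, and the class `TraceSearchDemo` is hypothetical. It assumes each line follows the log pattern configured earlier, so the line starts with a sortable timestamp and the traceId appears as a space-delimited token.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory stand-in for "search by traceId" over collected log lines.
class TraceSearchDemo {
    // Keeps only the lines carrying the given traceId, ordered by their leading timestamp.
    static List<String> linesForTrace(List<String> lines, String traceId) {
        List<String> result = new ArrayList<>();
        for (String line : lines) {
            if (line.contains(" " + traceId + " ")) {
                result.add(line);
            }
        }
        // Lexicographic order equals time order because every line starts with a
        // fixed-width "yyyy-MM-dd HH:mm:ss.SSS" timestamp.
        result.sort(String::compareTo);
        return result;
    }
}
```

Logs from different services and files collapse into one ordered sequence per request, regardless of how concurrent requests interleaved them.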

Four: Summary

This article uses interceptors and the MDC to add a traceId along the full call chain and output it in every log line, so the call chain can be traced through the logs. This works both for method-level calls within a process and for service calls across processes.

In addition, the logs need to be imported into Elasticsearch through the ELK Stack. Searching for a traceId then retrieves the whole call chain.

- END -

边栏推荐

- Qpushbutton- "function refinement"

- centerX: 用中国特色社会主义的方式打开centernet

- The annual salary of general test is 15W, and the annual salary of test and development is 30w+. What is the difference between the two?

- [node learning notes] the chokidar module realizes file monitoring

- 基于ensp防火墙双击热备二层网络规划与设计

- “零售为王”下的家电产业:什么是行业共识?

- 首届“量子计算+金融科技应用”研讨会在京成功举办

- Error: could not find a version that satisfies the requirement xxxxx (from versions: none) solutions

- Matlb| economic scheduling with energy storage, opportunity constraints and robust optimization

- Redis Getting started tutoriel complet: positionnement et optimisation des problèmes

猜你喜欢

Leetcode 77: combination

What management points should be paid attention to when implementing MES management system

Redis introduction complete tutorial: client case analysis

Digital scrolling increases effect

Redis入门完整教程:问题定位与优化

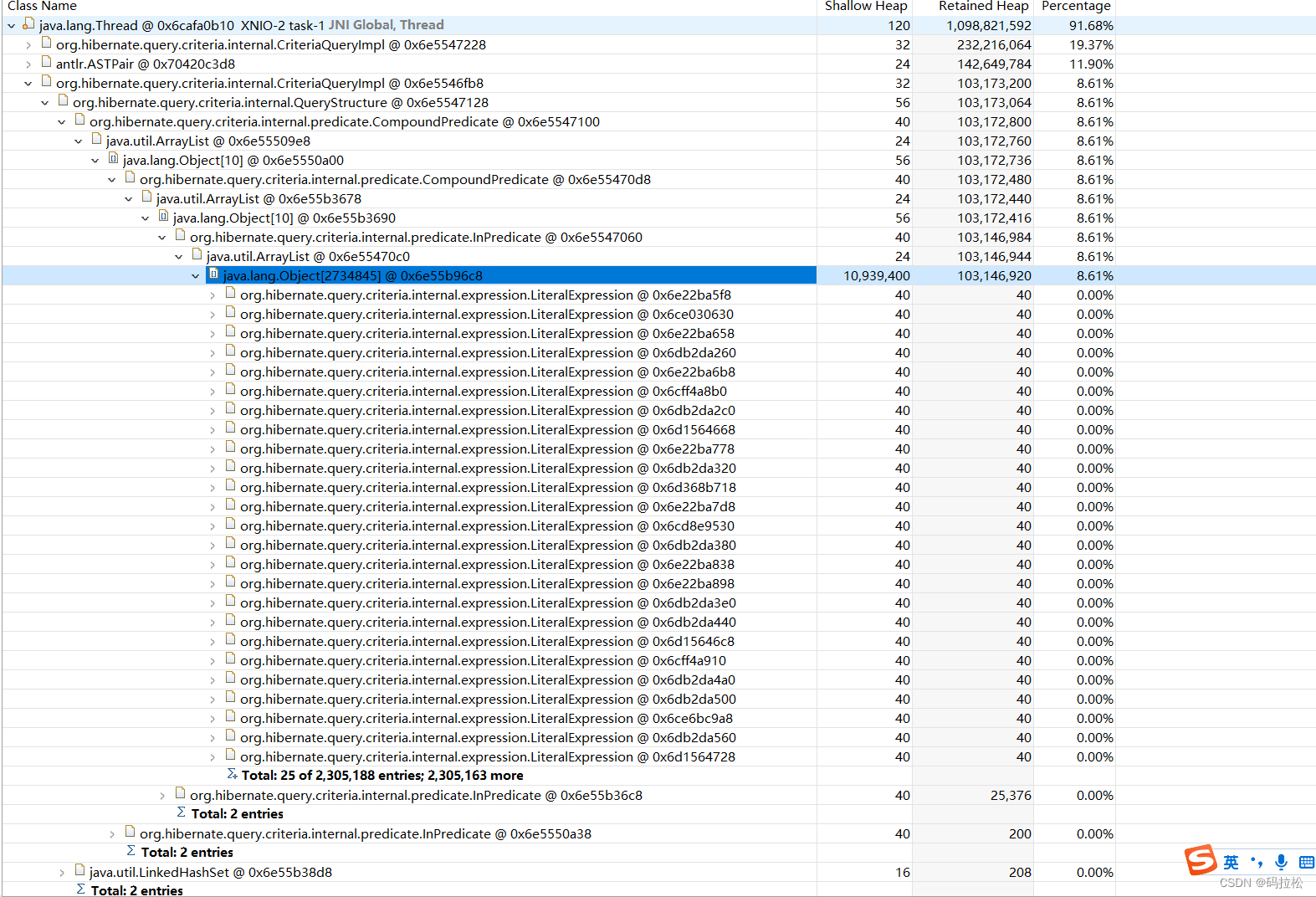

Remember the problem analysis of oom caused by a Jap query

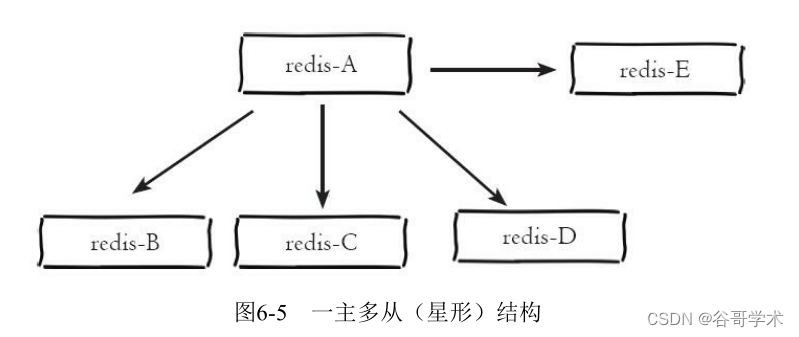

Redis getting started complete tutorial: replication topology

Electrical engineering and automation

![[secretly kill little partner pytorch20 days] - [Day1] - [example of structured data modeling process]](/img/f0/79e7915ba3ef32aa21c4a1d5f486bd.jpg)

[secretly kill little partner pytorch20 days] - [Day1] - [example of structured data modeling process]

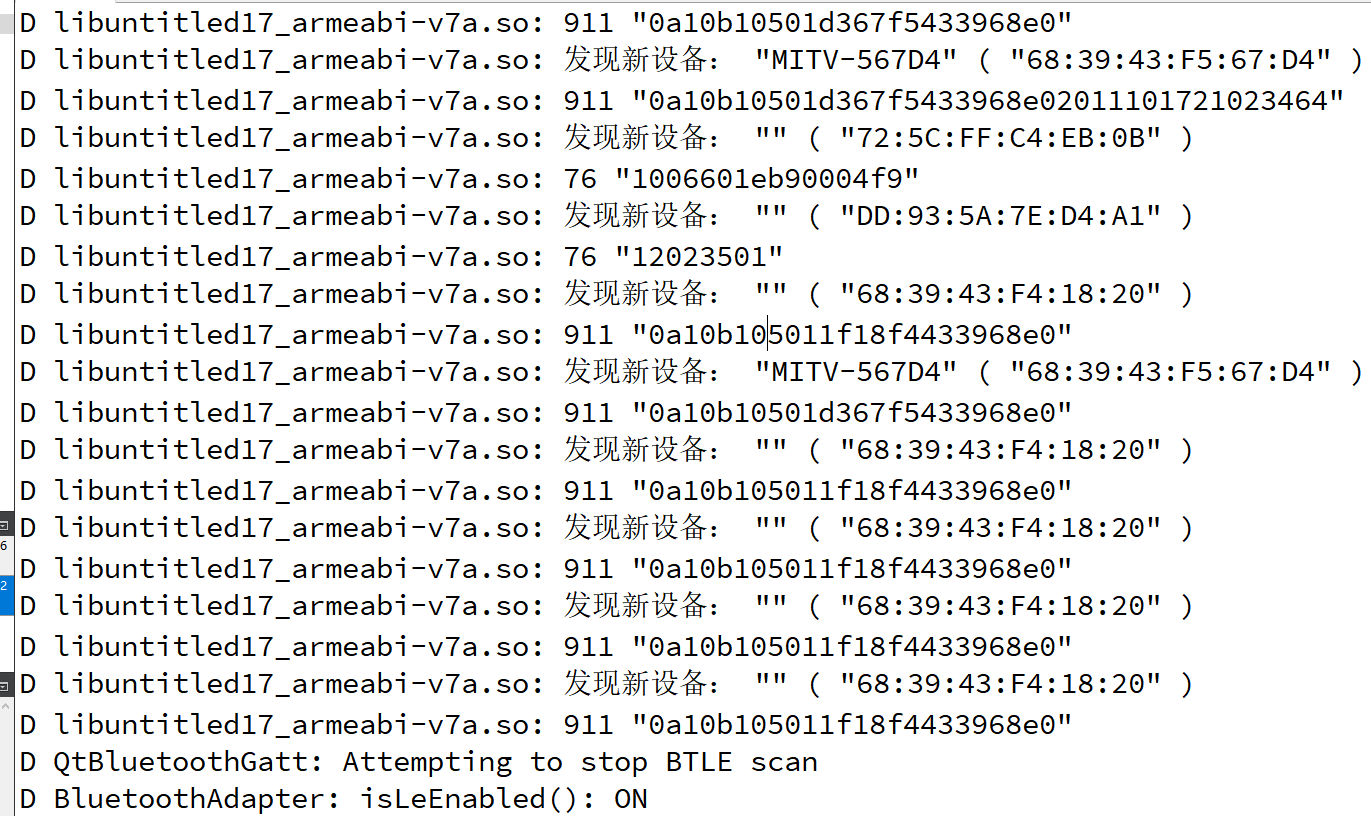

QT Bluetooth: qbluetooth DeviceInfo

随机推荐

Classify the features of pictures with full connection +softmax

c语言(字符串)如何把字符串中某个指定的字符删除?

Utilisation de la promesse dans es6

mos管实现主副电源自动切换电路,并且“零”压降,静态电流20uA

PSINS中19维组合导航模块sinsgps详解(初始赋值部分)

哈希表及完整注释

wzoi 1~200

Redis入门完整教程:客户端管理

How to analyze fans' interests?

Digital scrolling increases effect

Statistics of radar data in nuscenes data set

Cglib agent in agent mode

Redis入门完整教程:问题定位与优化

New benchmark! Intelligent social governance

商城商品的知识图谱构建

Niuke programming problem -- double pointer of 101 must be brushed

Convert widerperson dataset to Yolo format

widerperson数据集转化为YOLO格式

代码调试core-踩内存

The 8 element positioning methods of selenium that you have to know are simple and practical