当前位置:网站首页>Bigvision code

Bigvision code

2022-07-03 03:13:00 【AphilGuo】

classifier

#ifndef CLASSIFIER_H

#define CLASSIFIER_H

#include<opencv2/opencv.hpp>

#include<opencv2/dnn.hpp>

#include<iostream>

#include<fstream>

#include<vector>

#include<opencv2/core/utils/logger.hpp>

#include <QString>

#include <QByteArray>

using namespace cv;

using namespace std;

class ONNXClassifier{

public:

ONNXClassifier(const string& model_path, Size input_size);

void Classifier(const Mat& input_image, string& out_name, double& confidence);

private:

void preprocess_input(Mat& image);

bool read_labels(const string& label_path);

private:

Size input_size;

cv::dnn::Net net;

cv::Scalar default_mean;

cv::Scalar default_std;

string m_labels[2]= {

"ant", "bee"};

};

#endif // CLASSIFIER_H

mianwindow.h

#ifndef MAINWINDOW_H

#define MAINWINDOW_H

#include <QMainWindow>

#include <QLabel>

#include <opencv2/opencv.hpp>

using namespace cv;

namespace Ui {

class MainWindow;

}

class MainWindow : public QMainWindow

{

Q_OBJECT

public:

explicit MainWindow(QWidget *parent = 0);

QImage MatToImage(Mat &m);

~MainWindow();

private slots:

void on_OpenImageButton_clicked();

void on_ResizeImageButton_clicked();

void on_ViewOriImageButton_clicked();

void on_GrayImaageButton_clicked();

void on_GaussionFiltterButton_clicked();

void on_BilateralFiltterButton_clicked();

void on_ErodeButton_clicked();

void on_DilateButton_clicked();

void on_ThreshButton_clicked();

void on_CannyDetectButton_clicked();

void on_ClassifierButton_clicked();

void on_ModelButton_clicked();

void on_ObjPushButton_clicked();

private:

Ui::MainWindow *ui;

Mat m_mat;

Mat* temp;

QLabel* pic;

Mat mid_mat;

QString modelPath;

String m_model;

};

#endif // MAINWINDOW_H

yolov3.h

#ifndef YOLOV3_H

#define YOLOV3_H

#include <iostream>

#include <opencv2/opencv.hpp>

using namespace cv;

using namespace std;

class YOLOV3

{

public:

YOLOV3(){

}

void InitModel(string weightFile);

void detectImg(Mat& img);

void drawImg(int classId, float confidence, int left, int top, int right, int bottom, Mat& img);

private:

string classes[80] = {

"person", "bicycle", "car", "motorbike", "aeroplane", "bus",

"train", "truck", "boat", "traffic light", "fire hydrant",

"stop sign", "parking meter", "bench", "bird", "cat", "dog",

"horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe",

"backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",

"skis", "snowboard", "sports ball", "kite", "baseball bat",

"baseball glove", "skateboard", "surfboard", "tennis racket",

"bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl",

"banana", "apple", "sandwich", "orange", "broccoli", "carrot",

"hot dog", "pizza", "donut", "cake", "chair", "sofa", "pottedplant",

"bed", "diningtable", "toilet", "tvmonitor", "laptop", "mouse",

"remote", "keyboard", "cell phone", "microwave", "oven", "toaster",

"sink", "refrigerator", "book", "clock", "vase", "scissors",

"teddy bear", "hair drier", "toothbrush"

};

int inWidth = 416;

int inHeight = 416;

float confThreshold = 0.5;

float nmsThreshold = 0.5;

float objThreshold = 0.5;

vector<int> blob_size{

1, 3, 416, 416};

Mat blob = Mat(blob_size, CV_32FC1, Scalar(0, 0, 0));

vector<int> classids;

vector<int> indexes;

vector<float> confidences;

vector<Rect> boxes;

vector<Mat> outs;

dnn::Net net;

};

#endif // YOLOV3_H

main.cpp

#include "mainwindow.h"

#include <QApplication>

int main(int argc, char *argv[])

{

QApplication a(argc, argv);

MainWindow w;

w.show();

return a.exec();

}

main.window.cpp

#include "mainwindow.h"

#include "ui_mainwindow.h"

#include <QFileDialog>

#include <QDir>

#include<QDebug>

#include <QPixmap>

#include <QByteArray>

#include <QMessageBox>

#include <classifier.h>

#include "yolov3.h"

MainWindow::MainWindow(QWidget *parent) :

QMainWindow(parent),

ui(new Ui::MainWindow)

{

ui->setupUi(this);

}

QImage MainWindow::MatToImage(Mat &m)

{

switch(m.type())

{

case CV_8UC1:

{

QImage img((uchar* )m.data, m.cols, m.rows, m.cols*1, QImage::Format_Grayscale8);

return img;

break;

}

case CV_8UC3:

{

QImage img((uchar* )m.data, m.cols, m.rows, m.cols*3, QImage::Format_RGB888);

return img.rgbSwapped();

break;

}

case CV_8UC4:

{

QImage img((uchar* )m.data, m.cols, m.rows, m.cols*4, QImage::Format_ARGB32);

return img;

break;

}

default:

{

QImage img;

return img;

}

}

}

MainWindow::~MainWindow()

{

delete ui;

delete pic;

delete temp;

}

void MainWindow::on_OpenImageButton_clicked()

{

auto strPath = QFileDialog::getOpenFileName(nullptr, " Select Picture ", QDir::homePath(),

"Images (*.png *.xpm *.jpg)");

pic = new QLabel;

pic->setPixmap(QPixmap(strPath));

ui->ViewImageScrollArea->setWidget(pic);

QByteArray ba;

ba.append(strPath);

m_mat = imread(ba.data());

mid_mat = m_mat.clone();

}

void MainWindow::on_ViewOriImageButton_clicked()

{

temp = &m_mat;

if(temp->empty()){

QMessageBox::warning(nullptr, " Warning !", " Please choose a picture !!!");

return ;

}

QImage temp1 = MatToImage(*temp);

pic->setPixmap(QPixmap::fromImage(temp1));

ui->ViewImageScrollArea->setWidget(pic);

// While displaying the original image

mid_mat = m_mat.clone();

}

void MainWindow::on_ResizeImageButton_clicked()

{

Mat* temp2 = &mid_mat;

if((*temp2).empty()){

QMessageBox::warning(nullptr, " Warning !", " Please choose a picture !!!");

return ;

}

Mat dst;

// Add mouse wheel adjustment parameters

cv::resize(*temp2,dst, Size(400, 400));

QImage temp1 = MatToImage(dst);

pic->setPixmap(QPixmap::fromImage(temp1));

ui->ViewImageScrollArea->setWidget(pic);

mid_mat = dst;

}

void MainWindow::on_GrayImaageButton_clicked()

{

Mat* temp2 = &mid_mat;

if((*temp2).empty()){

QMessageBox::warning(nullptr, " Warning !", " Please choose a picture !!!");

return ;

}

Mat gray_img;

if((*temp2).type()==CV_8UC1){

QMessageBox::warning(nullptr, " Wrong operation ", " At this time, the picture is grayscale ");

return;

}

cvtColor(*temp2, gray_img, COLOR_BGR2GRAY);

// qDebug()<<gray_img.type();

QImage temp1 = MatToImage(gray_img);

pic->setPixmap(QPixmap::fromImage(temp1));

ui->ViewImageScrollArea->setWidget(pic);

mid_mat = gray_img;

}

void MainWindow::on_GaussionFiltterButton_clicked()

{

/*

Weighted average the whole image , Each pixel is determined by itself and others in the field

The pixel value is obtained after weighted average

*/

Mat* temp2 = &mid_mat;

if((*temp2).empty()){

QMessageBox::warning(nullptr, " Warning ", " Please choose a picture !!!");

return ;

}

Mat gaussianImg;

cv::GaussianBlur(*temp2, gaussianImg, cv::Size(5, 5), 0, 0);

QImage temp1 = MatToImage(gaussianImg);

pic->setPixmap(QPixmap::fromImage(temp1));

ui->ViewImageScrollArea->setWidget(pic);

mid_mat = gaussianImg;

}

void MainWindow::on_BilateralFiltterButton_clicked()

{

/*

* Airspace core 、 Range core

*/

Mat* temp2 = &mid_mat;

if((*temp2).empty()){

QMessageBox::warning(nullptr, " Warning ", " Please choose a picture !!!");

return ;

}

Mat bilateralImg, dst;

cv::bilateralFilter(*temp2, bilateralImg, 15, 100, 3);

Mat kernel = (Mat_<int>(3, 3)<<0, -1, 0, -1, 5, -1, 0, -1, 0);

filter2D(bilateralImg, dst, -1, kernel, Point(-1, -1), 0);

QImage temp1 = MatToImage(dst);

pic->setPixmap(QPixmap::fromImage(temp1));

ui->ViewImageScrollArea->setWidget(pic);

mid_mat = dst;

}

void MainWindow::on_ErodeButton_clicked()

{

Mat* temp2 = &mid_mat;

if((*temp2).empty()){

QMessageBox::warning(nullptr, " Warning ", " Please choose a picture !!!");

return ;

}

// Get structure elements

Mat erodeImg;

Mat structureElement = cv::getStructuringElement(cv::MORPH_RECT, cv::Size(5, 5), Point(-1, -1));

cv::erode(*temp2, erodeImg, structureElement);

QImage temp1 = MatToImage(erodeImg);

pic->setPixmap(QPixmap::fromImage(temp1));

ui->ViewImageScrollArea->setWidget(pic);

mid_mat = erodeImg;

}

void MainWindow::on_DilateButton_clicked()

{

Mat* temp2 = &mid_mat;

if((*temp2).empty()){

QMessageBox::warning(nullptr, " Warning ", " Please choose a picture !!!");

return;

}

Mat dilateImg;

Mat structureElement = cv::getStructuringElement(cv::MORPH_RECT, cv::Size(5, 5), Point(-1, -1));

cv::dilate(*temp2, dilateImg, structureElement);

QImage temp1 = MatToImage(dilateImg);

pic->setPixmap(QPixmap::fromImage(temp1));

ui->ViewImageScrollArea->setWidget(pic);

mid_mat = dilateImg;

}

void MainWindow::on_ThreshButton_clicked()

{

Mat* temp2 = &mid_mat;

if((*temp2).empty()){

QMessageBox::warning(nullptr, " Warning ", " Please choose a picture !!!");

return ;

}

if(!((*temp2).type()==CV_8UC1)){

QMessageBox::warning(nullptr, " Warning ", " Please gray the picture first ");

return ;

}

Mat bin_Img;

cv::adaptiveThreshold(*temp2, bin_Img, 255, ADAPTIVE_THRESH_MEAN_C, THRESH_BINARY, 5, 2);

QImage temp1 = MatToImage(bin_Img);

pic->setPixmap(QPixmap::fromImage(temp1));

ui->ViewImageScrollArea->setWidget(pic);

mid_mat = bin_Img;

}

void MainWindow::on_CannyDetectButton_clicked()

{

Mat* temp2 = &mid_mat;

if((*temp2).empty()){

QMessageBox::warning(nullptr, " Warning ", " Please choose a picture !!!");

return ;

}

if(!((*temp2).type()==CV_8UC1)){

QMessageBox::warning(nullptr, " Warning ", " Please gray the picture first ");

return ;

}

Mat cannyImg;

cv::Canny(*temp2, cannyImg, 100, 200, 3, false);

QImage temp1 = MatToImage(cannyImg);

pic->setPixmap(QPixmap::fromImage(temp1));

ui->ViewImageScrollArea->setWidget(pic);

mid_mat = cannyImg;

}

ONNXClassifier::ONNXClassifier(const std::string& model_path, cv::Size _input_size) :default_mean(0.485, 0.456, 0.406),

default_std(0.229, 0.224, 0.225), input_size(_input_size)

{

net = cv::dnn::readNet(model_path);

net.setPreferableBackend(cv::dnn::DNN_BACKEND_OPENCV);

net.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);

}

void ONNXClassifier::preprocess_input(Mat& image){

image.convertTo(image, CV_32F, 1.0/255.0);

cv::subtract(image, default_mean, image);

cv::divide(image, default_std, image);

}

void ONNXClassifier::Classifier(const cv::Mat& input_image, std::string& out_name, double& confidence){

out_name.clear();

cv::Mat image = input_image.clone();

preprocess_input(image);

cv::Mat input_blob = cv::dnn::blobFromImage(image, 1.0, input_size, cv::Scalar(0,0,0), true);

net.setInput(input_blob);

const std::vector<cv::String>& out_names = net.getUnconnectedOutLayersNames();

cv::Mat out_tensor = net.forward(out_names[0]);

cv::Point classNumber;

double classProb;

cv::minMaxLoc(out_tensor, NULL, &classProb, NULL, &classNumber);

confidence = classProb;

out_name = m_labels[classNumber.x];

}

void MainWindow::on_ClassifierButton_clicked()

{

Mat drawImg = mid_mat.clone();

String* using_model = &m_model;

if(drawImg.empty()){

QMessageBox::warning(nullptr, " Warning ", " Please choose a picture !!!");

return ;

}

if(using_model->empty()){

QMessageBox::warning(nullptr, " Warning ", " Please select a model !!!");

return ;

}

Size input_size(300, 300);

ONNXClassifier classifier(*using_model, input_size);

string result;

double confidence = 0;

classifier.Classifier(drawImg, result, confidence);

putText(drawImg, result, Point(20, 20), FONT_HERSHEY_SIMPLEX, 1.0, Scalar(0, 0, 255), 2, 8);

QImage temp1 = MatToImage(drawImg);

pic->setPixmap(QPixmap::fromImage(temp1));

ui->ViewImageScrollArea->setWidget(pic);

mid_mat = drawImg;

}

void MainWindow::on_ModelButton_clicked()

{

modelPath = QFileDialog::getOpenFileName(nullptr, " Select File ", QDir::homePath(), "*.onnx *.weights");

QByteArray ba;

ba.append(modelPath);

m_model = modelPath.toStdString();

QMessageBox::information(nullptr, " Tips ", " Model loaded successfully ");

}

void MainWindow::on_ObjPushButton_clicked()

{

String* yoloModel = &m_model;

Mat detectImg = mid_mat.clone();

if(detectImg.empty()){

QMessageBox::warning(nullptr, " Warning ", " Please choose a picture !!!");

return ;

}

if(yoloModel->empty()){

QMessageBox::warning(nullptr, " Warning ", " Please select a model !!!");

return ;

}

YOLOV3 yolo;

yolo.InitModel(*yoloModel);

yolo.detectImg(detectImg);

// Convert picture type

QImage temp1 = MatToImage(detectImg);

pic->setPixmap(QPixmap::fromImage(temp1));

ui->ViewImageScrollArea->setWidget(pic);

mid_mat = detectImg;

}

yolov3.cpp

#include "yolov3.h"

RNG rng(12345);

void YOLOV3::InitModel(string weightFile)

{

string configPath = "D:\\Projects\\QtProjects\\GithubProject\\models\\yolov3\\yolov3.cfg";

this->net = cv::dnn::readNetFromDarknet(configPath, weightFile);

net.setPreferableBackend(cv::dnn::DNN_BACKEND_OPENCV);

net.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);

}

void YOLOV3::detectImg(Mat &img)

{

cv::dnn::blobFromImage(img, blob, 1/255.0, Size(this->inWidth, this->inHeight), Scalar::all(0), true, false);

net.setInput(blob);

net.forward(outs, net.getUnconnectedOutLayersNames());

for(size_t i=0; i<outs.size();i++)

{

float* pdata = (float*)outs[i].data;

for(int j=0;j<outs[i].rows;j++, pdata += outs[i].cols)

{

Mat scores = outs[i].row(j).colRange(5, outs[i].cols);

Point classId;

double confidence;

minMaxLoc(scores, 0, &confidence, 0, &classId);

if(confidence>this->confThreshold)

{

int centerX = (int)(pdata[0]*img.cols);

int centerY = (int)(pdata[1]*img.rows);

int width = (int)(pdata[2]*img.cols);

int height = (int)(pdata[3]*img.rows);

int left = centerX - width / 2;

int top = centerY - height / 2;

classids.push_back(classId.x);

confidences.push_back(confidence);

boxes.push_back(Rect(left, top, width, height));

}

}

}

dnn::NMSBoxes(boxes, confidences, this->confThreshold, this->nmsThreshold, this->indexes);

for(size_t i=0;i<indexes.size();i++)

{

int idx = indexes[i];

Rect box = boxes[idx];

this->drawImg(classids[idx], confidences[idx], box.x, box.y, box.x+box.width, box.y+box.height, img);

}

}

void YOLOV3::drawImg(int classId, float confidence, int left, int top, int right, int bottom, Mat &img)

{

Scalar color = Scalar(rng.uniform(0, 255), rng.uniform(0, 255), rng.uniform(0, 255));

rectangle(img, Point(left, top), Point(right, bottom), color, 2);

string label = format("%.2f", confidence);

label = this->classes[classId] + ":" + label;

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

top = max(top, labelSize.height);

putText(img, label, Point(left, top), FONT_HERSHEY_SIMPLEX, 0.75, color, 2, 8);

}

边栏推荐

- I2C 子系统(三):I2C Driver

- [error record] the parameter 'can't have a value of' null 'because of its type, but the im

- PAT乙级常用函数用法总结

- Agile certification (professional scrum Master) simulation exercises

- Anhui University | small target tracking: large-scale data sets and baselines

- Destroy the session and empty the specified attributes

- How to limit the size of the dictionary- How to limit the size of a dictionary?

- Installation and use of memory leak tool VLD

- Spark on yarn resource optimization ideas notes

- L'index des paramètres d'erreur est sorti de la plage pour les requêtes floues (1 > Nombre de paramètres, qui est 0)

猜你喜欢

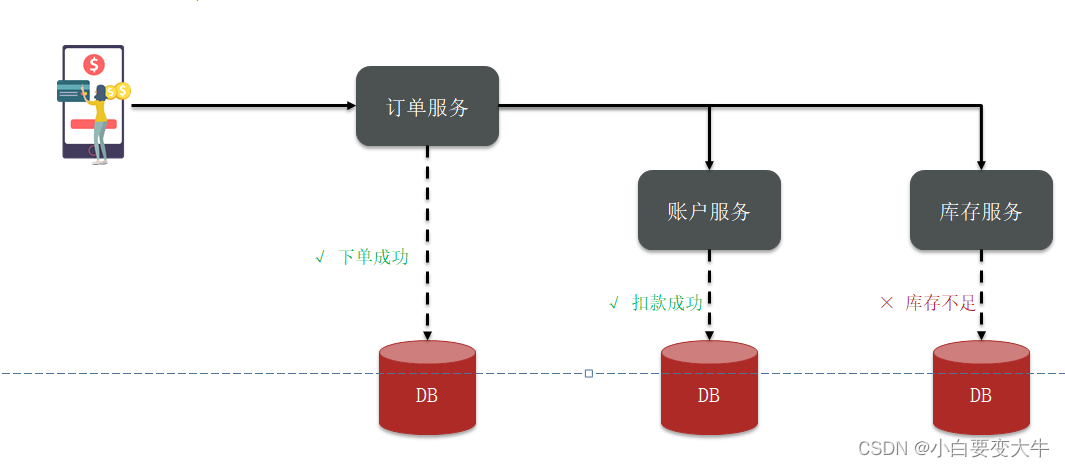

Distributed transaction

900W+ 数据,从 17s 到 300ms,如何操作

el-tree搜索方法使用

The idea setting code is in UTF-8 idea Properties configuration file Chinese garbled

Creation and destruction of function stack frame

On the adjacency matrix and adjacency table of graph storage

![MySQL practice 45 [global lock and table lock]](/img/23/fd58c185ae49ed6c04f1a696f10ff4.png)

MySQL practice 45 [global lock and table lock]

Thunderbolt Chrome extension caused the data returned by the server JS parsing page data exception

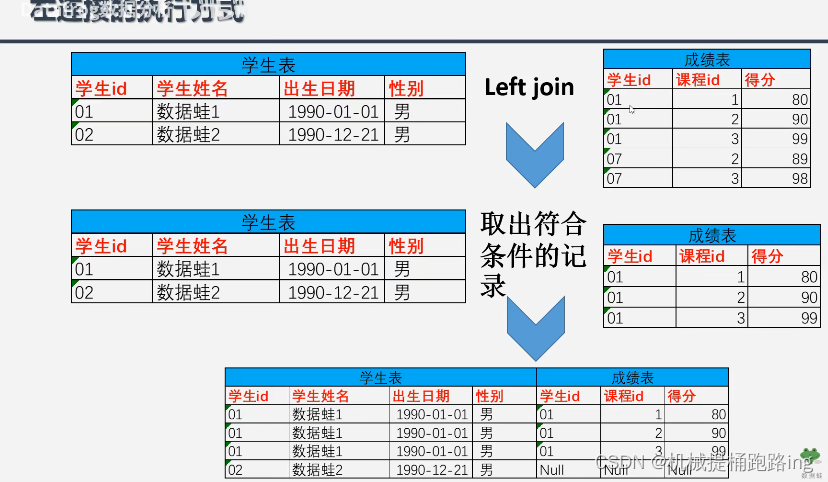

Left connection, inner connection

The idea cannot be loaded, and the market solution can be applied (pro test)

随机推荐

node 开启服务器

Vs 2019 configure tensorrt to generate engine

VS 2019安装及配置opencv

Docker install MySQL

程序员新人上午使用 isXxx 形式定义布尔类型,下午就被劝退?

MySql实战45讲【索引】

Tensorflow to pytorch notes; tf. gather_ Nd (x, y) to pytorch

Vs 2019 configuration du moteur de génération de tensorrt

I2C 子系统(二):I3C spec

TCP 三次握手和四次挥手机制,TCP为什么要三次握手和四次挥手,TCP 连接建立失败处理机制

labelme标记的文件转换为yolov5格式

C # general interface call

VS code配置虚拟环境

docker安装mysql

Spark on yarn资源优化思路笔记

复选框的使用:全选,全不选,选一部分

Sqlserver row to column pivot

LVGL使用心得

VS 2019配置tensorRT

【PyG】理解MessagePassing过程,GCN demo详解