Evaluating Machine Learning Models - Excerpt

2022-07-31 06:17:00 【Young_win】

Overview

Models are typically not evaluated on the same data they were trained on. As training progresses, the model's performance on the training data keeps improving, but its performance on never-before-seen data eventually stops improving or starts to decline.

The goal of machine learning is to obtain models that generalize, that is, models that perform well on never-before-seen data. It is therefore essential to be able to reliably measure a model's generalization ability, and that is what the following sections describe!

The central obstacle to improving generalization is overfitting, which will be covered later!

train/valid/test

When evaluating a model, split the data into three sets: train, valid (validation), and test.

train: train the model on this dataset;

valid: evaluate the model on this dataset during development, to guide the tuning of its configuration;

test: once the best hyperparameters are found, evaluate the model on this dataset one final time.
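As a minimal sketch of the three-way split, the data can be shuffled once and cut into three disjoint slices. The helper name `train_valid_test_split` and the 60/20/20 proportions are illustrative assumptions, not something the original prescribes:

```python
import random


def train_valid_test_split(samples, valid_frac=0.2, test_frac=0.2, seed=0):
    """Shuffle a copy of `samples` and cut it into disjoint train/valid/test lists.

    Hypothetical helper for illustration; the default fractions are assumptions.
    """
    if valid_frac < 0 or test_frac < 0 or valid_frac + test_frac >= 1:
        raise ValueError("need valid_frac, test_frac >= 0 and valid_frac + test_frac < 1")
    data = list(samples)                 # copy so the caller's data is untouched
    random.Random(seed).shuffle(data)    # seeded RNG makes the split reproducible
    n_test = int(len(data) * test_frac)
    n_valid = int(len(data) * valid_frac)
    test = data[:n_test]
    valid = data[n_test:n_test + n_valid]
    train = data[n_test + n_valid:]      # everything left over is used for training
    return train, valid, test


train, valid, test = train_valid_test_split(range(100))
print(len(train), len(valid), len(test))  # 60 20 20
```

The test slice is put aside and used only once, after all tuning against valid is finished.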

A. Why do you need test data?

(1.) When developing a model, you constantly tune its configuration, for example the number of layers or the size of each layer (these are hyperparameters). This tuning uses the model's performance on the valid data as a feedback signal, so it is itself a form of learning: a search for a good configuration within some parameter space.

(2.) As a result, tuning the configuration based on the model's performance on valid can quickly make the model overfit valid, even though the model is never trained on valid directly. The cause is information leakage: every time a hyperparameter is adjusted based on performance on valid, some information about the valid data leaks into the model.

(3.) The final model may therefore perform well on valid simply because that is what you optimized it for. What we care about, however, is performance on new data, not on valid. The model must therefore be evaluated on a completely separate, never-before-seen dataset: the test dataset.

(4.) Your model must not have access to any information about the test data, not even indirectly. If anything about the model is tuned based on its performance on the test data, the resulting measure of generalization will be unreliable.

B. How should train/valid/test be split when little data is available?

(1.) Simple hold-out validation

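Set aside a fraction of the data as the valid set, train on the remaining data, and evaluate on valid. Once the hyperparameters are tuned, train the final model from scratch on all non-test data (train + valid).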

Disadvantage of this method: if little data is available, the valid and test sets may contain too few samples to be statistically representative of the data.

This problem is easy to detect experimentally: if shuffling the data differently before splitting it leads to very different final model performance, the problem is present.
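A minimal sketch of simple hold-out validation, including the reshuffling check just described. The toy "model" (always predict the mean of the training values) and the mean-absolute-error metric are hypothetical stand-ins for a real model and metric:

```python
import random
import statistics


def hold_out_score(data, train_fn, eval_fn, valid_frac=0.2, seed=0):
    """Simple hold-out validation: shuffle, set aside a valid slice, train on the rest."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    n_valid = int(len(shuffled) * valid_frac)
    valid, train = shuffled[:n_valid], shuffled[n_valid:]
    model = train_fn(train)
    return eval_fn(model, valid)


def train_mean(train):
    # Hypothetical toy model: always predict the mean of the training values.
    return statistics.mean(train)


def mae(model, valid):
    # Mean absolute error of the constant prediction on the valid set.
    return statistics.mean(abs(y - model) for y in valid)


# Only 20 samples, so each valid slice holds just 4 of them.
small_data = [float(x) for x in range(20)]
scores = [hold_out_score(small_data, train_mean, mae, seed=s) for s in range(5)]
print([round(s, 2) for s in scores])
# A large spread across different shuffles signals an unrepresentative split.
print("spread:", round(max(scores) - min(scores), 2))

# After tuning, the final model is retrained from scratch on all non-test data.
final_model = train_mean(small_data)
```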

(2.) K-fold validation

K-fold validation addresses the problem above, namely that different train/valid splits can produce models whose performance varies greatly.

K-fold validation divides the data into K partitions of equal size. For each partition i, train a model on the remaining K-1 partitions and evaluate it on partition i. The final score is the average of the K scores.

Train and evaluate K models (each trained on K-1 partitions and evaluated on the remaining one) to find the best hyperparameters;

Use those hyperparameters to train a single model M on train+valid (all non-test data);

Evaluate model M on test!
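The three steps above can be sketched as follows. Everything here is illustrative: `k_fold_indices`, `k_fold_score`, the toy model with its hypothetical `shrink` hyperparameter, and the mean-absolute-error metric are assumptions standing in for a real model and training loop:

```python
import random
import statistics


def k_fold_indices(n, k):
    """Yield (train_idx, valid_idx) for K contiguous partitions of near-equal size."""
    if not 2 <= k <= n:
        raise ValueError("need 2 <= k <= n")
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        valid_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n))
        yield train_idx, valid_idx
        start += size


def k_fold_score(data, train_fn, eval_fn, k=4):
    """Train on K-1 partitions, evaluate on the remaining one, average the K scores."""
    scores = []
    for train_idx, valid_idx in k_fold_indices(len(data), k):
        model = train_fn([data[i] for i in train_idx])
        scores.append(eval_fn(model, [data[i] for i in valid_idx]))
    return statistics.mean(scores)


def make_train_fn(shrink):
    # Hypothetical hyperparameter: the toy model predicts shrink * mean(train).
    return lambda train: shrink * statistics.mean(train)


def mae(model, valid):
    return statistics.mean(abs(y - model) for y in valid)


data = [float(x) for x in range(50)]
random.Random(0).shuffle(data)         # shuffle once so the partitions are representative
test, non_test = data[:10], data[10:]  # the test set is never used for tuning

# Step 1: K-fold on non-test data to pick the hyperparameter.
candidates = [0.5, 1.0, 1.5]
best_shrink = min(candidates, key=lambda s: k_fold_score(non_test, make_train_fn(s), mae))
# Step 2: train a single model M on all non-test data (train + valid).
model_m = make_train_fn(best_shrink)(non_test)
# Step 3: evaluate M once on the test set.
print(best_shrink, round(mae(model_m, test), 2))
```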

(3.) Iterated K-fold validation with shuffling

Method: apply K-fold validation multiple times, shuffling the data each time before splitting it into K partitions. The final score is the average of the scores from each K-fold run. With P repetitions this trains K × P models, so it is computationally expensive.
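A minimal sketch of iterated K-fold validation with shuffling. The strided partitioning (`shuffled[i::k]`) and the toy mean-predictor model are illustrative assumptions; any K-fold partitioning of freshly shuffled data would do:

```python
import random
import statistics


def iterated_k_fold_score(data, train_fn, eval_fn, k=4, repeats=3, seed=0):
    """Run K-fold validation `repeats` times, reshuffling before each run.

    Trains k * repeats models in total; returns the mean of the per-run averages.
    """
    if not 2 <= k <= len(data):
        raise ValueError("need 2 <= k <= len(data)")
    rng = random.Random(seed)
    run_means = []
    for _ in range(repeats):
        shuffled = list(data)
        rng.shuffle(shuffled)                       # fresh shuffle for every K-fold run
        folds = [shuffled[i::k] for i in range(k)]  # K partitions, sizes differ by at most 1
        fold_scores = []
        for i in range(k):
            train = [x for j, fold in enumerate(folds) if j != i for x in fold]
            model = train_fn(train)
            fold_scores.append(eval_fn(model, folds[i]))
        run_means.append(statistics.mean(fold_scores))
    return statistics.mean(run_means)


def train_mean(train):
    # Hypothetical toy model: predict the mean of the training values.
    return statistics.mean(train)


def mae(model, valid):
    return statistics.mean(abs(y - model) for y in valid)


data = [float(x) for x in range(30)]
print(round(iterated_k_fold_score(data, train_mean, mae, k=3, repeats=5), 2))
```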

Notes on evaluating models

Data representativeness

Usually both train and test should be representative of the data at hand, so the data should be randomly shuffled before it is split. This avoids absurd mistakes such as an MNIST split in which train contains only the digits 0-7 and test contains only the digits 8-9.
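The following sketch reproduces that mistake on a hypothetical MNIST-like label array sorted by class (100 samples per digit, an assumption for illustration) and shows how shuffling first fixes it. `head_tail_split` is a hypothetical helper that takes the first 80% as train:

```python
import random

# Hypothetical stand-in for an MNIST-like label array sorted by class: 100 samples per digit.
labels = [digit for digit in range(10) for _ in range(100)]


def head_tail_split(items, train_frac=0.8):
    """Take the first train_frac of the items as train and the rest as test."""
    cut = int(len(items) * train_frac)
    return items[:cut], items[cut:]


train, test = head_tail_split(labels)
print(sorted(set(train)), sorted(set(test)))  # [0, 1, 2, 3, 4, 5, 6, 7] [8, 9]

shuffled = list(labels)
random.Random(0).shuffle(shuffled)
train, test = head_tail_split(shuffled)
# After shuffling, both sets (almost surely) contain every digit.
print(sorted(set(train)), sorted(set(test)))
```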

Arrow of time

If you are predicting the future from the past, do not randomly shuffle the data before splitting it, because shuffling creates a temporal leak: your model would effectively be trained on data from the future. Instead, make sure that all test data is more recent than the train data.
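A minimal sketch of a chronological split, assuming each record is a hypothetical `(timestamp, value)` pair and that a fixed cutoff date separates past (train) from future (test):

```python
from datetime import date, timedelta


def time_split(records, cutoff):
    """Chronological split: records before `cutoff` train, records on/after it test."""
    ordered = sorted(records, key=lambda r: r[0])  # order by timestamp, never shuffle
    train = [r for r in ordered if r[0] < cutoff]
    test = [r for r in ordered if r[0] >= cutoff]
    return train, test


# Hypothetical daily (timestamp, value) records, deliberately given out of order.
start = date(2022, 1, 1)
records = [(start + timedelta(days=i), float(i)) for i in reversed(range(10))]
train, test = time_split(records, cutoff=date(2022, 1, 8))
print(len(train), len(test))  # 7 3
```

Splitting on a cutoff rather than on a shuffled index guarantees that every test record is later than every train record.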

Data redundancy

Some samples may appear multiple times in the data. Shuffling such data and then splitting it into train/valid creates redundancy between the two sets, so the model ends up being evaluated partly on its own training data. Make sure that train and valid have no samples in common.
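A minimal sketch of one way to enforce this: remove duplicates before shuffling and splitting. It assumes samples are hashable and that only exact duplicates matter (near-duplicates would need a different check); the sample list and helper names are hypothetical:

```python
import random


def overlap(train, valid):
    """Samples that appear in both sets (should be empty)."""
    return set(train) & set(valid)


def dedup_then_split(samples, valid_frac=0.2, seed=0):
    """Drop exact duplicates first, then shuffle and split, so no sample lands in both sets."""
    unique = list(dict.fromkeys(samples))  # keeps first occurrence, preserves order
    random.Random(seed).shuffle(unique)
    n_valid = int(len(unique) * valid_frac)
    return unique[n_valid:], unique[:n_valid]


# Hypothetical data in which some samples repeat.
samples = ["a", "b", "c", "a", "d", "b", "e", "a", "f", "g"]

naive_train, naive_valid = samples[:7], samples[7:]
print(overlap(naive_train, naive_valid))  # {'a'}: a valid sample also appears in train

train, valid = dedup_then_split(samples, valid_frac=0.3)
print(overlap(train, valid))  # set()
```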

边栏推荐

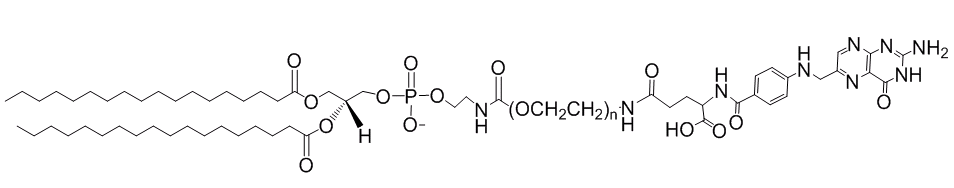

- CAS:1403744-37-5 DSPE-PEG-FA 科研实验用磷脂-聚乙二醇-叶酸

- DSPE-PEG-Biotin, CAS: 385437-57-0, phospholipid-polyethylene glycol-biotin prolongs circulating half-life

- cv2.resize()是反的

- 日志jar包冲突,及其解决方法

- Talking about the understanding of CAP in distributed mode

- 我的训练函数模板(动态修改学习率、参数初始化、优化器选择)

- RuntimeError: CUDA error: no kernel image is available for execution on the device问题记录

- VS2017连接MYSQL

- ERROR Error: No module factory availabl at Object.PROJECT_CONFIG_JSON_NOT_VALID_OR_NOT_EXIST ‘Error

- Principle analysis of famous website msdn.itellyou.cn

猜你喜欢

化学试剂磷脂-聚乙二醇-氨基,DSPE-PEG-amine,CAS:474922-26-4

jenkins +miniprogram-ci 一键上传微信小程序

wangeditor富文本编辑器上传图片以及跨域问题解决

【解决问题】RuntimeError: The size of tensor a (80) must match the size of tensor b (56) at non-singleton

Cholesterol-PEG-Amine CLS-PEG-NH2 胆固醇-聚乙二醇-氨基科研用

CAS: 1403744-37-5 DSPE-PEG-FA Phospholipid-Polyethylene Glycol-Folic Acid for Scientific Research

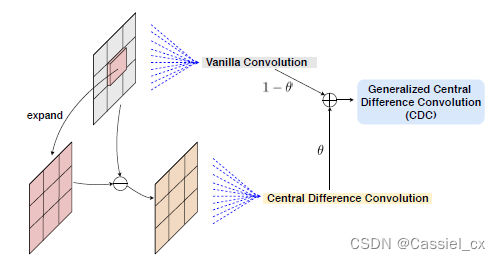

Multi-Modal Face Anti-Spoofing Based on Central Difference Networks学习笔记

UiBot has an open Microsoft Edge browser and cannot perform the installation

opencv之图像二值化处理

CAS:474922-22-0 Maleimide-PEG-DSPE 磷脂-聚乙二醇-马来酰亚胺简述

随机推荐

Tensorflow相关list

计算图像数据集均值和方差

pytorch学习笔记10——卷积神经网络详解及mnist数据集多分类任务应用

Tensorflow边用边踩坑

mPEG-DMPE 甲氧基-聚乙二醇-双肉豆蔻磷脂酰乙醇胺用于形成隐形脂质体

活体检测FaceBagNet阅读笔记

Cholesterol-PEG-Acid CLS-PEG-COOH Cholesterol-Polyethylene Glycol-Carboxyl Modified Peptides

mysql 事务原理详解

Research reagents Cholesterol-PEG-Maleimide, CLS-PEG-MAL, Cholesterol-PEG-Maleimide

二进制转换成十六进制、位运算、结构体

RuntimeError: CUDA error: no kernel image is available for execution on the device问题记录

Cholesterol-PEG-Amine CLS-PEG-NH2 胆固醇-聚乙二醇-氨基科研用

Tensorflow steps on the pit while using it

为数学而歌之伯努利家族

MySQL 主从切换步骤

Embedding前沿了解

UiBot has an open Microsoft Edge browser and cannot perform the installation

box-shadow相关属性

我的训练函数模板(动态修改学习率、参数初始化、优化器选择)

超参数优化-摘抄