
Deep learning - residual networks (ResNets)

2022-06-30 07:43:00 【Hair will grow again without it】

Residual network

ResNets are built from residual blocks. First, let me explain what a residual block is.

Consider a two-layer neural network. Starting from the activation $a^{[l]}$, the first layer produces $a^{[l+1]}$, and the second layer applies another activation to produce $a^{[l+2]}$. The computation starts from $a^{[l]}$ with a linear step, according to this formula:

$z^{[l+1]} = W^{[l+1]} a^{[l]} + b^{[l+1]}$, that is, $z^{[l+1]}$ is computed from $a^{[l]}$ by multiplying it by the weight matrix and adding the bias vector. The ReLU nonlinearity then gives $a^{[l+1]} = g(z^{[l+1]})$. Next comes another linear step, $z^{[l+2]} = W^{[l+2]} a^{[l+1]} + b^{[l+2]}$, and finally a second ReLU gives $a^{[l+2]} = g(z^{[l+2]})$, where $g$ denotes the ReLU function. In other words, for information to flow from $a^{[l]}$ to $a^{[l+2]}$ it has to pass through all of these steps, which together form the main path through these layers.
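To make the main path concrete, here is a minimal sketch in plain Python (lists instead of a tensor library, so nothing beyond the standard library is assumed). The helper names `relu`, `linear` and `plain_two_layer`, and the small weight values, are hypothetical and chosen only so the arithmetic is easy to follow by hand.

```python
def relu(v):
    """g(z) = max(0, z), applied element-wise."""
    return [max(0.0, x) for x in v]


def linear(W, a, b):
    """z = W a + b, with the weight matrix W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, a)) + b_i for row, b_i in zip(W, b)]


def plain_two_layer(a_l, W1, b1, W2, b2):
    """Main path a[l] -> a[l+2]; (W1, b1) play W[l+1], b[l+1], (W2, b2) play W[l+2], b[l+2]."""
    z1 = linear(W1, a_l, b1)  # z[l+1] = W[l+1] a[l] + b[l+1]
    a1 = relu(z1)             # a[l+1] = g(z[l+1])
    z2 = linear(W2, a1, b2)   # z[l+2] = W[l+2] a[l+1] + b[l+2]
    return relu(z2)           # a[l+2] = g(z[l+2])


a_l = [1.0, 2.0]
W1, b1 = [[1.0, 0.0], [0.0, -1.0]], [0.0, 0.0]
W2, b2 = [[2.0, 1.0], [-1.0, 1.0]], [0.5, 0.0]
print(plain_two_layer(a_l, W1, b1, W2, b2))  # [2.5, 0.0]
```

Here $z^{[l+1]} = [1, -2]$, so $a^{[l+1]} = [1, 0]$; then $z^{[l+2]} = [2.5, -1]$ and the second ReLU zeroes the negative entry.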

A residual network makes one small change: we take $a^{[l]}$ and copy it forward into the deeper part of the network, adding it just before the second ReLU. This is called a shortcut. The information in $a^{[l]}$ now reaches the deeper layer directly instead of only travelling along the main path. As a result, the last equation, $a^{[l+2]} = g(z^{[l+2]})$, is replaced: $g$ is still the ReLU applied to $z^{[l+2]}$, but this time $a^{[l]}$ is added first, giving $a^{[l+2]} = g(z^{[l+2]} + a^{[l]})$.

Adding $a^{[l]}$ in this way is what turns the two layers into a residual block.
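The only change from the main path is the addition of $a^{[l]}$ before the second ReLU. The sketch below assumes, as the equation $a^{[l+2]} = g(z^{[l+2]} + a^{[l]})$ implicitly does, that $a^{[l]}$ and $z^{[l+2]}$ have the same dimension; the helper names and numbers are hypothetical, plain-Python illustrations rather than any library's API.

```python
def relu(v):
    """g(z) = max(0, z), applied element-wise."""
    return [max(0.0, x) for x in v]


def linear(W, a, b):
    """z = W a + b, with the weight matrix W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, a)) + b_i for row, b_i in zip(W, b)]


def residual_block(a_l, W1, b1, W2, b2):
    """a[l+2] = g(z[l+2] + a[l]); assumes len(a_l) == len(b2)."""
    a1 = relu(linear(W1, a_l, b1))  # main path, first layer: a[l+1]
    z2 = linear(W2, a1, b2)         # main path, second linear step: z[l+2]
    # Shortcut: add a[l] to z[l+2] *before* the second ReLU.
    return relu([z + a for z, a in zip(z2, a_l)])


a_l = [1.0, 2.0]
W1, b1 = [[1.0, 0.0], [0.0, -1.0]], [0.0, 0.0]
W2, b2 = [[2.0, 1.0], [-1.0, 1.0]], [0.5, 0.0]
print(residual_block(a_l, W1, b1, W2, b2))  # [3.5, 1.0]
```

With these weights $z^{[l+2]} = [2.5, -1]$, so without the shortcut the result would be $[2.5, 0]$; adding $a^{[l]} = [1, 2]$ first gives $[3.5, 1]$, and the second unit is no longer switched off.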

ResNet was invented by Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun, who found that residual blocks make it possible to train much deeper neural networks. A ResNet is built by stacking many of these residual blocks together to form a deep neural network.

The network below is not a residual network; it is an ordinary network, called a plain network, a term that comes from the ResNet paper.

To turn it into a ResNet, add all of the skip connections: as described above, add a shortcut every two layers so that each pair of layers forms a residual block. As shown in the figure, five residual blocks connected together form a residual network.
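Stacking is just feeding the output of one residual block into the next. The following is a minimal sketch under stated assumptions: every layer has the same width (4 units here) so the shortcut addition always lines up, and `make_block` is a hypothetical initializer using small Gaussian weights and zero biases; it is not the initialization scheme from the ResNet paper.

```python
import random


def relu(v):
    """g(z) = max(0, z), applied element-wise."""
    return [max(0.0, x) for x in v]


def linear(W, a, b):
    """z = W a + b, with the weight matrix W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, a)) + b_i for row, b_i in zip(W, b)]


def residual_block(a_l, params):
    """a[l+2] = g(z[l+2] + a[l]) for one block with params (W1, b1, W2, b2)."""
    W1, b1, W2, b2 = params
    a1 = relu(linear(W1, a_l, b1))
    z2 = linear(W2, a1, b2)
    return relu([z + a for z, a in zip(z2, a_l)])


def make_block(dim, rng, scale=0.1):
    """Hypothetical initializer: square layers, small Gaussian weights, zero biases."""
    def rand_matrix():
        return [[rng.gauss(0.0, scale) for _ in range(dim)] for _ in range(dim)]
    return (rand_matrix(), [0.0] * dim, rand_matrix(), [0.0] * dim)


def resnet_forward(x, blocks):
    """Feed x through the residual blocks one after another."""
    a = x
    for params in blocks:
        a = residual_block(a, params)
    return a


rng = random.Random(0)
blocks = [make_block(4, rng) for _ in range(5)]  # 5 blocks x 2 layers = 10 layers
out = resnet_forward([0.5, -0.2, 1.0, 0.3], blocks)
print(len(blocks), len(out))  # 5 4
```

Real ResNets also need a way to handle blocks whose input and output widths differ; this sketch sidesteps that by keeping every layer the same size.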

Why use residual networks:

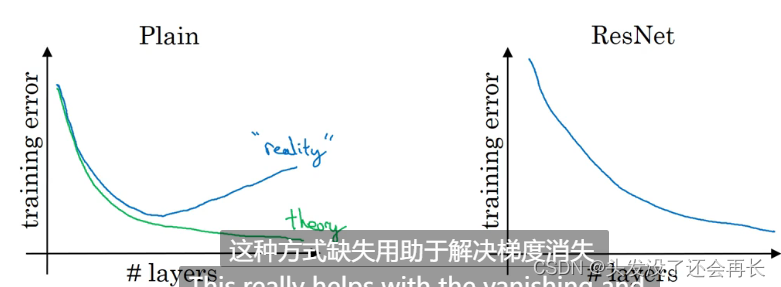

Suppose we train a plain network with a standard optimization algorithm, such as gradient descent or another popular optimizer. Without residual connections, that is, without shortcuts or skip connections, you will find empirically that as the network gets deeper, the training error first decreases and then increases. In theory, a deeper network should only ever train better; the deeper the network, the better. In practice, however, a plain network without residual connections becomes harder for the optimizer to train as it gets deeper, and beyond a certain depth the training error keeps getting worse.

With ResNets this does not happen: even as the network gets deeper, training still goes well and the training error keeps decreasing, even when training a network 100 layers deep. The shortcuts let the activation of the input $x$, or of the intermediate layers, reach much deeper layers of the network. This really helps with the vanishing and exploding gradient problems, and lets us train much deeper networks while still getting good performance. From another point of view, the network may look increasingly bloated as it gets deeper, but ResNet is genuinely effective at training deep networks.
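One common intuition for why activations survive through a deep ResNet is that a residual block can easily fall back to passing its input through unchanged. The toy sketch below makes that visible under a deliberately extreme assumption: every weight and bias is zero (for example, as if weights had decayed toward zero). Because $a^{[l]}$ is itself a ReLU output and therefore non-negative, $g(0 + a^{[l]}) = a^{[l]}$. It compares 50 blocks (100 layers) with and without shortcuts; it illustrates the forward pass only and says nothing quantitative about gradients. The function names are hypothetical.

```python
def relu(v):
    """g(z) = max(0, z), applied element-wise."""
    return [max(0.0, x) for x in v]


def linear(W, a, b):
    """z = W a + b, with the weight matrix W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, a)) + b_i for row, b_i in zip(W, b)]


def block(a_l, W1, b1, W2, b2, shortcut):
    """Two-layer block; with shortcut=True it computes g(z[l+2] + a[l])."""
    a1 = relu(linear(W1, a_l, b1))
    z2 = linear(W2, a1, b2)
    if shortcut:
        z2 = [z + a for z, a in zip(z2, a_l)]
    return relu(z2)


def deep_forward(x, num_blocks, shortcut):
    """Push x through num_blocks blocks whose weights and biases are all zero."""
    dim = len(x)
    zero_W = [[0.0] * dim for _ in range(dim)]
    zero_b = [0.0] * dim
    a = relu(x)  # start from a ReLU activation, so every entry is >= 0
    for _ in range(num_blocks):
        a = block(a, zero_W, zero_b, zero_W, zero_b, shortcut)
    return a


x = [0.5, -0.2, 1.0, 0.3]
print(deep_forward(x, 50, shortcut=False))  # [0.0, 0.0, 0.0, 0.0]
print(deep_forward(x, 50, shortcut=True))   # [0.5, 0.0, 1.0, 0.3]
```

Without shortcuts the signal is wiped out after the first block; with shortcuts the activation $g(x)$ reaches layer 100 intact.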
