OpenCV learning notes 8 -- answer sheet recognition

2022-07-06 07:32:00 【Cloudy_ to_ sunny】

Import the toolkit

    # Import the toolkit
    import numpy as np
    import argparse
    import imutils
    import cv2
    import matplotlib.pyplot as plt  # Matplotlib expects RGB channel order

    # Correct answer key: question index -> index of the correct option
    ANSWER_KEY = {0: 1, 1: 4, 2: 0, 3: 3, 4: 1}

Define functions

    def order_points(pts):
        # Four corner coordinates in total
        rect = np.zeros((4, 2), dtype="float32")
        # Store the corners in the order 0, 1, 2, 3:
        # top-left, top-right, bottom-right, bottom-left
        # Top-left has the smallest x + y, bottom-right the largest
        s = pts.sum(axis=1)
        rect[0] = pts[np.argmin(s)]
        rect[2] = pts[np.argmax(s)]
        # Top-right has the smallest y - x, bottom-left the largest
        diff = np.diff(pts, axis=1)
        rect[1] = pts[np.argmin(diff)]
        rect[3] = pts[np.argmax(diff)]
        return rect
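To check the ordering rule, here is a small self-contained sketch that runs the same function on four corner points given in arbitrary order. The coordinates are made up for illustration and are not taken from the test image.

```python
import numpy as np

def order_points(pts):
    # Same logic as above: sums pick top-left / bottom-right,
    # differences (y - x) pick top-right / bottom-left
    rect = np.zeros((4, 2), dtype="float32")
    s = pts.sum(axis=1)
    rect[0] = pts[np.argmin(s)]
    rect[2] = pts[np.argmax(s)]
    diff = np.diff(pts, axis=1)
    rect[1] = pts[np.argmin(diff)]
    rect[3] = pts[np.argmax(diff)]
    return rect

# Hypothetical corners (x, y) of a slightly skewed sheet, in arbitrary order
corners = np.array([[310, 420], [20, 30], [15, 400], [300, 25]], dtype="float32")
ordered = order_points(corners)
print(ordered)  # rows: top-left, top-right, bottom-right, bottom-left
```

Note that this rule assumes the sheet is only moderately rotated; for a sheet rotated by about 45 degrees the sums or differences of two corners can tie.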

    def four_point_transform(image, pts):
        # Order the input corner points
        rect = order_points(pts)
        (tl, tr, br, bl) = rect
        # Compute the width and height of the output image,
        # taking the longer of each pair of opposite edges
        widthA = np.sqrt(((br[0] - bl[0]) ** 2) + ((br[1] - bl[1]) ** 2))
        widthB = np.sqrt(((tr[0] - tl[0]) ** 2) + ((tr[1] - tl[1]) ** 2))
        maxWidth = max(int(widthA), int(widthB))
        heightA = np.sqrt(((tr[0] - br[0]) ** 2) + ((tr[1] - br[1]) ** 2))
        heightB = np.sqrt(((tl[0] - bl[0]) ** 2) + ((tl[1] - bl[1]) ** 2))
        maxHeight = max(int(heightA), int(heightB))
        # Corner positions after the transformation
        dst = np.array([
            [0, 0],
            [maxWidth - 1, 0],
            [maxWidth - 1, maxHeight - 1],
            [0, maxHeight - 1]], dtype="float32")
        # Compute the transformation matrix and apply it
        M = cv2.getPerspectiveTransform(rect, dst)
        warped = cv2.warpPerspective(image, M, (maxWidth, maxHeight))
        # Return the transformed image
        return warped
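The output size arithmetic can be followed with a small worked example. This sketch uses only NumPy and a made-up quadrilateral (the corner values are assumptions for illustration); it reproduces the width, height and destination corners that the function would pass to `cv2.getPerspectiveTransform`.

```python
import numpy as np

# Hypothetical ordered corners: top-left, top-right, bottom-right, bottom-left
tl, tr, br, bl = np.array([[0, 0], [30, 40], [30, 140], [0, 90]], dtype="float32")

# Edge lengths are Euclidean distances between neighbouring corners
width_bottom = np.linalg.norm(br - bl)
width_top = np.linalg.norm(tr - tl)
max_width = max(int(width_bottom), int(width_top))

height_right = np.linalg.norm(tr - br)
height_left = np.linalg.norm(tl - bl)
max_height = max(int(height_right), int(height_left))

# Destination rectangle in the same corner order as the source points
dst = np.array([
    [0, 0],
    [max_width - 1, 0],
    [max_width - 1, max_height - 1],
    [0, max_height - 1]], dtype="float32")

print(max_width, max_height)
print(dst)
```

Taking the longer edge of each pair means the sheet is stretched rather than cropped when opposite edges differ in length.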

    def sort_contours(cnts, method="left-to-right"):
        # Sort ascending by default, using the x coordinate (index 0)
        reverse = False
        i = 0
        if method == "right-to-left" or method == "bottom-to-top":
            reverse = True
        # Vertical methods sort by the y coordinate (index 1)
        if method == "top-to-bottom" or method == "bottom-to-top":
            i = 1
        boundingBoxes = [cv2.boundingRect(c) for c in cnts]
        (cnts, boundingBoxes) = zip(*sorted(zip(cnts, boundingBoxes),
            key=lambda b: b[1][i], reverse=reverse))
        return cnts, boundingBoxes
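The sorting key is just one coordinate of each bounding box. The sketch below shows the same idea on plain `(x, y, w, h)` tuples, without OpenCV; `sort_boxes` and the box values are hypothetical names and data used only for illustration.

```python
def sort_boxes(boxes, method="left-to-right"):
    # Reverse order for the "right-to-left" and "bottom-to-top" methods
    reverse = method in ("right-to-left", "bottom-to-top")
    # Index 0 is x (horizontal sort), index 1 is y (vertical sort)
    axis = 1 if method in ("top-to-bottom", "bottom-to-top") else 0
    return sorted(boxes, key=lambda b: b[axis], reverse=reverse)

# Hypothetical bounding boxes as (x, y, w, h)
boxes = [(120, 50, 20, 20), (10, 52, 20, 20), (60, 10, 20, 20)]

by_x = sort_boxes(boxes)
by_y = sort_boxes(boxes, method="top-to-bottom")
print(by_x)
print(by_y)
```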

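    # Display helpers
    def cv_show(name, img):
        # Convert BGR (OpenCV) to RGB (Matplotlib) before plotting
        b, g, r = cv2.split(img)
        img_rgb = cv2.merge((r, g, b))
        plt.imshow(img_rgb)
        plt.show()

    def cv_show1(name, img):
        # Plot the image as-is, then also show it in an OpenCV window
        plt.imshow(img)
        plt.show()
        cv2.imshow(name, img)
        cv2.waitKey()
        cv2.destroyAllWindows()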
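The split-and-merge in `cv_show` only reverses the channel order. As a minimal sketch with a synthetic image, the same conversion can be written as a NumPy slice; `cv2.cvtColor(img, cv2.COLOR_BGR2RGB)` is the usual OpenCV call for it.

```python
import numpy as np

# Synthetic 2x2 image that is pure red in OpenCV's BGR order
bgr = np.zeros((2, 2, 3), dtype=np.uint8)
bgr[..., 2] = 255

# Reversing the last axis turns BGR into RGB
rgb = bgr[..., ::-1]
print(rgb[0, 0])
```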

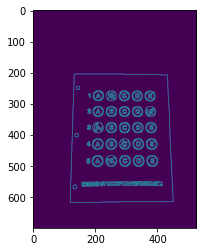

Scanning

    # Preprocessing
    image = cv2.imread("./images/test_01.png")
    contours_img = image.copy()
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    blurred = cv2.GaussianBlur(gray, (5, 5), 0)  # Gaussian filtering
    cv_show1('blurred', blurred)
    edged = cv2.Canny(blurred, 75, 200)  # Edge detection
    cv_show1('edged', edged)

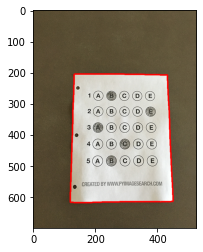

    # Contour detection
    # [-2] selects the contour list in both OpenCV 3.x (three return values)
    # and OpenCV 4.x (two return values)
    cnts = cv2.findContours(edged.copy(), cv2.RETR_EXTERNAL,
        cv2.CHAIN_APPROX_SIMPLE)[-2]
    cv2.drawContours(contours_img, cnts, -1, (0, 0, 255), 3)
    cv_show('contours_img', contours_img)
    docCnt = None

    # Make sure at least one contour was detected
    if len(cnts) > 0:
        # Sort by contour area, largest first
        cnts = sorted(cnts, key=cv2.contourArea, reverse=True)
        # Traverse every contour
        for c in cnts:
            # Approximate the contour with a polygon
            peri = cv2.arcLength(c, True)
            approx = cv2.approxPolyDP(c, 0.02 * peri, True)
            # A polygon with 4 vertices is taken as the answer sheet
            if len(approx) == 4:
                docCnt = approx
                break

    # Perform the perspective transformation
    warped = four_point_transform(gray, docCnt.reshape(4, 2))
    cv_show1('warped', warped)

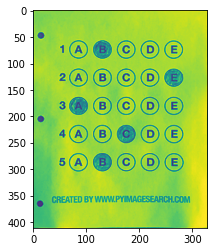

Automatic (Otsu) threshold processing

    # Otsu's method chooses the threshold automatically, so the 0 passed here is ignored
    thresh = cv2.threshold(warped, 0, 255,
        cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)[1]
    thresh_Contours = thresh.copy()
    cv_show1('thresh_Contours', thresh_Contours)

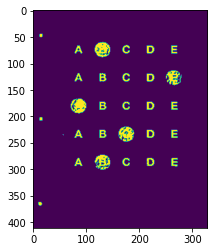
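To make clear what the Otsu flag computes, here is a rough NumPy sketch of the idea: try every threshold and keep the one that maximizes the between-class variance of the two pixel groups. `otsu_threshold` is a hypothetical helper written for illustration, not OpenCV's implementation, and its result may differ slightly from the value `cv2.threshold` returns.

```python
import numpy as np

def otsu_threshold(gray):
    # Histogram of the 256 possible gray levels
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    total = hist.sum()
    levels = np.arange(256)
    cum_count = np.cumsum(hist)            # pixels with value <= t
    cum_sum = np.cumsum(hist * levels)     # sum of intensities with value <= t
    w0 = cum_count / total                 # weight of the dark class
    w1 = 1.0 - w0                          # weight of the bright class
    with np.errstate(divide="ignore", invalid="ignore"):
        mu0 = cum_sum / cum_count                            # mean of the dark class
        mu1 = (cum_sum[-1] - cum_sum) / (total - cum_count)  # mean of the bright class
        between = w0 * w1 * (mu0 - mu1) ** 2                 # between-class variance
    between = np.nan_to_num(between)       # empty classes contribute nothing
    return int(np.argmax(between))

# Synthetic sheet: light paper (200) with a dark pencil mark (50)
gray = np.full((20, 20), 200, dtype=np.uint8)
gray[5:15, 5:15] = 50

t = otsu_threshold(gray)
# Inverse binarization, like THRESH_BINARY_INV: dark marks become white (255)
binary = np.where(gray > t, 0, 255).astype(np.uint8)
print(t, binary[10, 10], binary[0, 0])
```

The inversion matters for the later steps: filled bubbles end up as white pixels, so counting nonzero pixels measures how much of a bubble is filled.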

Detect the outline of each option

    # Find the outline of each circle
    # [-2] selects the contour list in both OpenCV 3.x and 4.x
    cnts = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL,
        cv2.CHAIN_APPROX_SIMPLE)[-2]
    # thresh_Contours is single-channel, so only the first value of the color
    # tuple (0) is used and the contours are drawn in black
    cv2.drawContours(thresh_Contours, cnts, -1, (0, 0, 255), 3)
    print(len(cnts))
    cv_show1('thresh_Contours', thresh_Contours)
    questionCnts = []

82

    # Traverse every contour
    for c in cnts:
        # Compute the bounding box and its aspect ratio
        (x, y, w, h) = cv2.boundingRect(c)
        ar = w / float(h)
        # Tune these limits to the actual sheet: keep roughly square
        # boxes that are at least 20 pixels wide and high
        if w >= 20 and h >= 20 and ar >= 0.9 and ar <= 1.1:
            questionCnts.append(c)

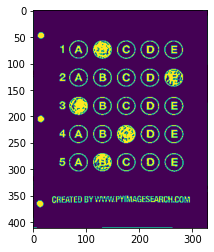
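The filter keeps only contours whose bounding box looks like a bubble. A minimal sketch of that test on made-up boxes is shown below; `is_bubble` and the sample boxes are hypothetical, and the limits are the ones used in these notes.

```python
def is_bubble(w, h, min_size=20, min_ar=0.9, max_ar=1.1):
    # A bubble's bounding box is large enough and close to square
    ar = w / float(h)
    return w >= min_size and h >= min_size and min_ar <= ar <= max_ar

# Hypothetical bounding boxes as (x, y, w, h)
boxes = [
    (10, 10, 24, 25),    # bubble-sized and nearly square: kept
    (50, 10, 200, 30),   # wide text line: rejected by the aspect ratio
    (5, 5, 8, 8),        # small noise blob: rejected by the size
    (90, 10, 22, 21),    # bubble-sized and nearly square: kept
]
kept = [b for b in boxes if is_bubble(b[2], b[3])]
print(kept)
```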

Sort the outlines to get the question and option numbers

    # Sort from top to bottom
    questionCnts = sort_contours(questionCnts,
        method="top-to-bottom")[0]
    correct = 0

    # Each row (question) has 5 options
    for (q, i) in enumerate(np.arange(0, len(questionCnts), 5)):
        # Sort the 5 options of this question from left to right
        cnts = sort_contours(questionCnts[i:i + 5])[0]
        bubbled = None

        # Traverse every option
        for (j, c) in enumerate(cnts):
            # Build a mask that covers only this option
            mask = np.zeros(thresh.shape, dtype="uint8")
            cv2.drawContours(mask, [c], -1, 255, -1)  # thickness -1 fills the contour
            # Count the nonzero (filled) pixels inside the option
            mask = cv2.bitwise_and(thresh, thresh, mask=mask)
            total = cv2.countNonZero(mask)
            cv_show1('mask', mask)
            # Keep the option with the largest nonzero count
            if bubbled is None or total > bubbled[0]:
                bubbled = (total, j)

        # Compare with the correct answer
        color = (0, 0, 255)
        k = ANSWER_KEY[q]
        # The marked option is correct
        if k == bubbled[1]:
            color = (0, 255, 0)
            correct += 1
        # Draw the outline of the correct option
        cv2.drawContours(warped, [cnts[k]], -1, color, 3)
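The selection rule in the inner loop can be tried on a synthetic row without OpenCV. In this sketch the five options are simple rectangular slots instead of contours (an assumption made to keep it short), and `np.count_nonzero` stands in for `cv2.countNonZero`.

```python
import numpy as np

# Synthetic binarized row: 5 option slots of 10x10 pixels, white (255) = ink
thresh = np.zeros((10, 50), dtype=np.uint8)
thresh[2:8, 32:38] = 255   # slot 3 is heavily filled (36 pixels)
thresh[0, 0:10] = 255      # slot 0 has only a bit of printed outline (10 pixels)

bubbled = None
for j in range(5):
    # Mask that covers only slot j
    mask = np.zeros(thresh.shape, dtype=bool)
    mask[:, j * 10:(j + 1) * 10] = True
    # Number of white pixels of thresh that fall inside the mask
    total = int(np.count_nonzero(thresh[mask]))
    # Keep the slot with the largest count
    if bubbled is None or total > bubbled[0]:
        bubbled = (total, j)

print(bubbled)
```

Because the rule always keeps the largest count, a row with no filled bubble still yields an answer; a minimum fill count would be needed to detect blank rows.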

Print the results

    score = (correct / 5.0) * 100
    print("[INFO] score: {:.2f}%".format(score))
    cv2.putText(warped, "{:.2f}%".format(score), (10, 30),
        cv2.FONT_HERSHEY_SIMPLEX, 0.9, (0, 0, 255), 2)
    cv_show("Original", image)
    cv_show1("Exam", warped)

[INFO] score: 80.00%
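The scoring step on its own can be sketched as a small function. `score_sheet` and the `marked` answers are hypothetical; unlike the code above, which divides by a hard-coded 5.0, this version divides by the number of questions in the key.

```python
ANSWER_KEY = {0: 1, 1: 4, 2: 0, 3: 3, 4: 1}

def score_sheet(marked, answer_key):
    # Count the questions whose marked option matches the key
    correct = sum(1 for q, k in answer_key.items() if marked.get(q) == k)
    # Percentage of correct answers
    return 100.0 * correct / len(answer_key)

# Hypothetical detected answers: question 3 is marked wrong
marked = {0: 1, 1: 4, 2: 0, 3: 2, 4: 1}
score = score_sheet(marked, ANSWER_KEY)
print("[INFO] score: {:.2f}%".format(score))
```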

Reference resources

边栏推荐

- On the world of NDK (2)

- 1015 reversible primes (20 points) prime d-ary

- Excel的相关操作

- After the hot update of uniapp, "mismatched versions may cause application exceptions" causes and Solutions

- Ali's redis interview question is too difficult, isn't it? I was pressed on the ground and rubbed

- How Navicat imports MySQL scripts

- [MySQL learning notes 30] lock (non tutorial)

- http缓存,强制缓存,协商缓存

- The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

- js对象获取属性的方法(.和[]方式)

猜你喜欢

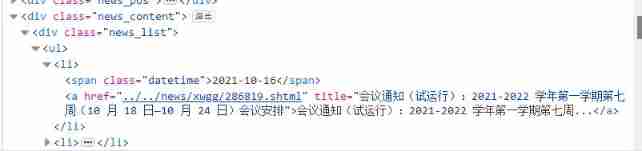

Crawling exercise: Notice of crawling Henan Agricultural University

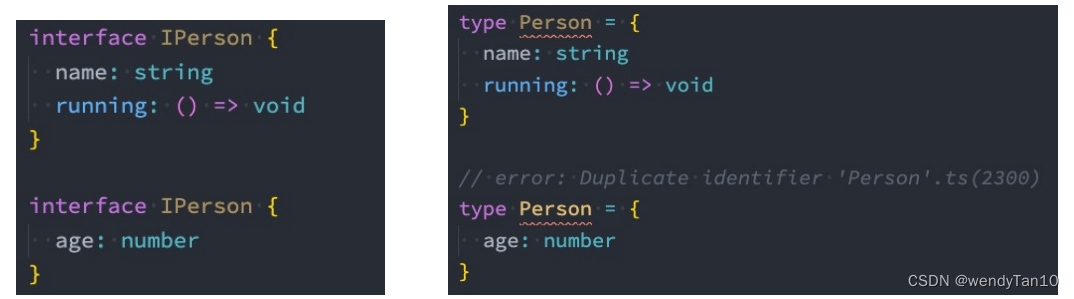

TypeScript接口与泛型的使用

The ECU of 21 Audi q5l 45tfsi brushes is upgraded to master special adjustment, and the horsepower is safely and stably increased to 305 horsepower

![[window] when the Microsoft Store is deleted locally, how to reinstall it in three steps](/img/57/ee979a7db983ad56f0df7345dbc91f.jpg)

[window] when the Microsoft Store is deleted locally, how to reinstall it in three steps

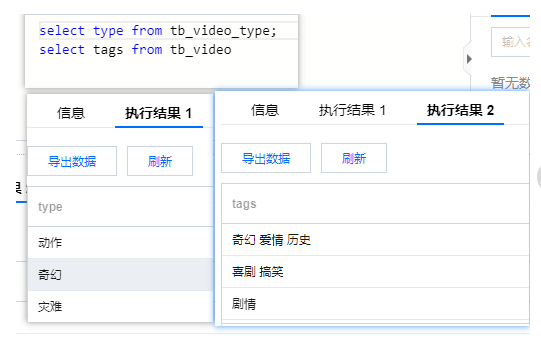

mysql如何合并数据

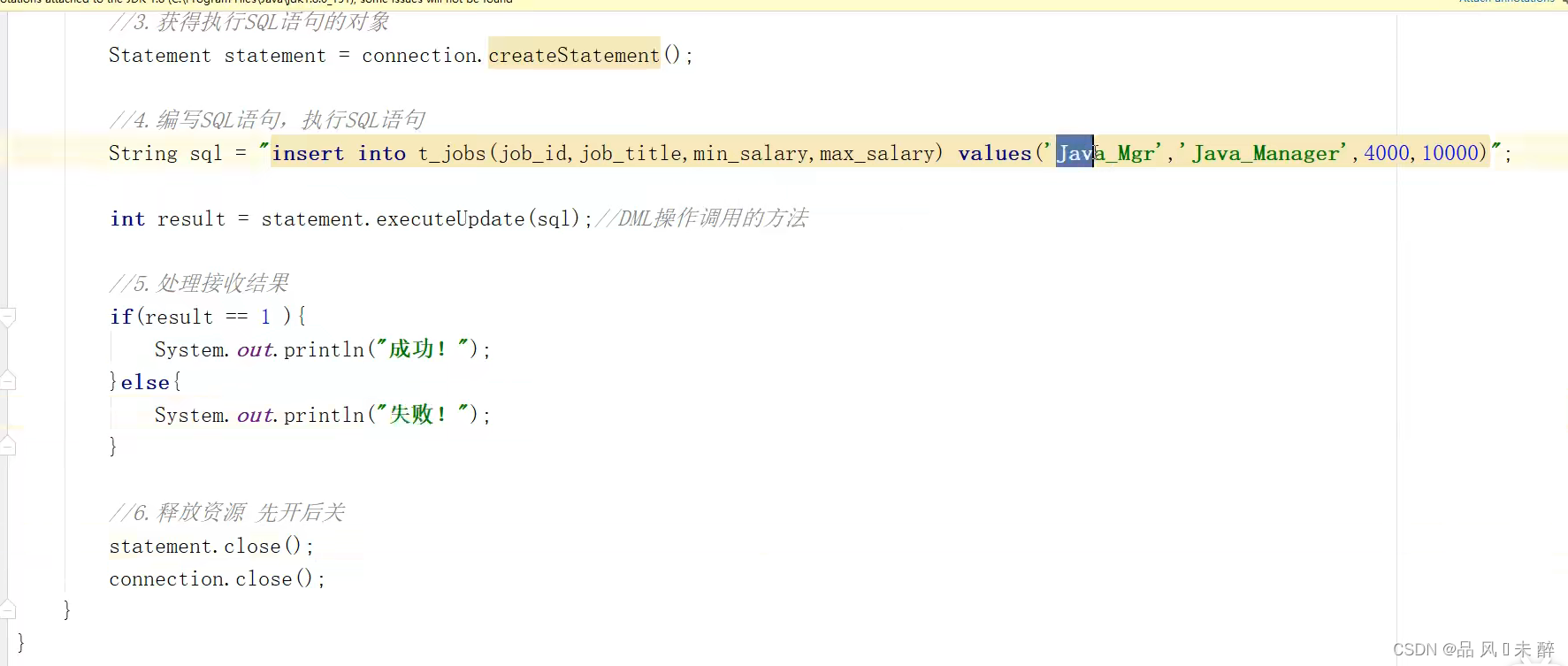

JDBC learning notes

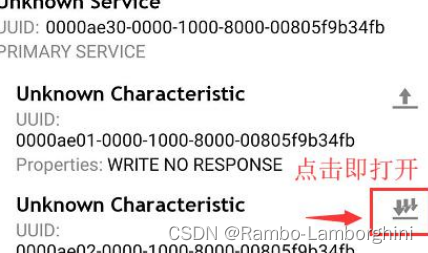

杰理之蓝牙设备想要发送数据给手机,需要手机先打开 notify 通道【篇】

![When the Jericho development board is powered on, you can open the NRF app with your mobile phone [article]](/img/3e/3d5bff87995b4a9fac093a6d9f9473.png)

When the Jericho development board is powered on, you can open the NRF app with your mobile phone [article]

Pre knowledge reserve of TS type gymnastics to become an excellent TS gymnastics master

【mysql学习笔记30】锁(非教程)

随机推荐

Simulation of Michelson interferometer based on MATLAB

Résumé de la structure du modèle synthétisable

数字IC设计笔试题汇总(一)

TS 体操 &(交叉运算) 和 接口的继承的区别

#systemverilog# 可綜合模型的結構總結

word怎么只删除英语保留汉语或删除汉语保留英文

The difference between TS Gymnastics (cross operation) and interface inheritance

After the hot update of uniapp, "mismatched versions may cause application exceptions" causes and Solutions

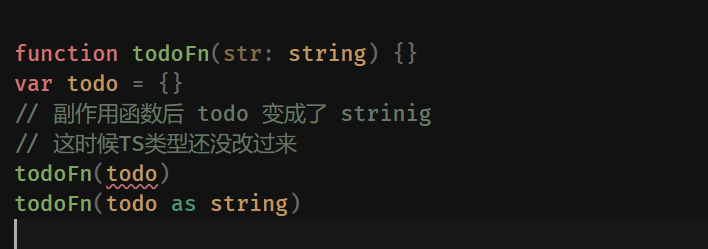

TS 类型体操 之 循环中的键值判断,as 关键字使用

If Jerry needs to send a large package, he needs to modify the MTU on the mobile terminal [article]

超级浏览器是指纹浏览器吗?怎样选择一款好的超级浏览器?

TS 类型体操 之 extends,Equal,Alike 使用场景和实现对比

Significance and measures of encryption protection for intelligent terminal equipment

[MySQL learning notes 29] trigger

OpenJudge NOI 2.1 1661:Bomb Game

[MySQL learning notes 30] lock (non tutorial)

Méthode d'obtention des propriétés de l'objet JS (.Et [] méthodes)

Do you really think binary search is easy

NiO programming introduction

Emo diary 1