
Cat vs. Dog Image Classification with a Convolutional Neural Network (CNN) (TF 2.1, Python 3.6)

2022-06-12 08:07:00 【HNU_ Liu Yuan】

Preface: Convolutional neural networks are used more and more widely in everyday applications and can genuinely solve practical problems, such as fruit classification and gender classification. Much of the information we encounter is in the form of images, so I wanted to use a neural network to classify and recognize pictures. I had not worked with deep learning before, so this project is also an attempt to learn. The network is not limited to cats and dogs: given suitable training images, it can be trained for any binary classification task. For example, trained on a face database, it could classify gender from photos, so it is fairly general.

This example uses the dataset from Kaggle's Dogs vs. Cats competition (see the dataset download link).

The first convolutional neural network was the Time Delay Neural Network (TDNN), proposed by Alexander Waibel et al. in 1987. TDNN was a convolutional neural network applied to speech recognition.

Convolutional neural networks then developed further. After deep learning theory was proposed in 2006, the representation-learning ability of CNNs attracted attention, helped by advances in computing hardware. Starting with AlexNet in 2012, complex CNNs trained on GPU clusters have been the winning algorithms of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC).

In 2015, ResNet won ILSVRC 2015. Its biggest contribution was making very deep networks trainable by addressing the vanishing gradient problem during backpropagation; its depth reached 152 layers.

As computing power has increased, neural networks have become both more complex and more accurate.

The accuracy and complexity of typical CNN architectures as of 2017 compare as follows:

This training uses part of the Kaggle Dogs vs. Cats data: about 2,000 training images and about 200 validation images. In that competition the top 2% of entries reached over 99% accuracy, and the winning entry's log loss was 0.03302.
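Log loss, the competition metric quoted above, is the same quantity as the binary cross-entropy used as the training loss later in this article. The following is a minimal NumPy sketch of it, assuming labels are 0 (cat) and 1 (dog) and predictions are probabilities of "dog". The function name `log_loss` and the clipping constant `eps` are assumptions for illustration; Kaggle's exact clipping rule is not stated here.

```python
import numpy as np

def log_loss(y_true, y_pred, eps=1e-15):
    """Mean binary cross-entropy (log loss) over a set of predictions."""
    y_true = np.asarray(y_true, dtype=np.float64)
    # Clip probabilities away from 0 and 1 so log() stays finite (eps is an assumed value).
    p = np.clip(np.asarray(y_pred, dtype=np.float64), eps, 1 - eps)
    return float(-np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))

# A confident correct prediction costs little; a confident wrong one costs a lot.
print(log_loss([1, 0], [0.9, 0.1]))   # about 0.105
print(log_loss([1, 0], [0.1, 0.9]))   # about 2.303
```

This is why a log loss of 0.033 is so demanding: the model has to be both correct and confident on nearly every image.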

Given the limits of hardware and algorithms, this tutorial is only an introduction.

Convolutional networks (ConvNets) are a special type of neural network that is especially well suited to computer vision, because they build strong abstract representations from local operations.

The general workflow of the neural network is as follows:

The training and validation data are stored in folders as in the following example.

Data: stores the cat and dog training pictures in separate cat and dog subfolders, roughly 1,000 per class.

Validation: stores the cat and dog validation pictures in separate subfolders, roughly 100 per class.

Remove: the data-cleaning program.

Test: the program that classifies a picture.

Training: the program that trains the neural network.
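Before training, it helps to check that the folder layout matches what the training code expects. Below is a minimal sketch, assuming the `data/` and `validation/` folders each contain `cat/` and `dog/` subfolders as in the training code; the helper name `count_images` is hypothetical.

```python
import os
import tempfile

def count_images(base_dir, splits=("data", "validation"), classes=("cat", "dog")):
    """Return {split: {class: number_of_files}} for the cat/dog folder layout."""
    counts = {}
    for split in splits:
        counts[split] = {}
        for cls in classes:
            folder = os.path.join(base_dir, split, cls)
            # Only count regular files; a missing folder counts as 0.
            if os.path.isdir(folder):
                counts[split][cls] = sum(
                    1 for name in os.listdir(folder)
                    if os.path.isfile(os.path.join(folder, name))
                )
            else:
                counts[split][cls] = 0
    return counts

# Build a tiny fake layout in a temporary directory to show the result.
with tempfile.TemporaryDirectory() as root:
    for split, n in (("data", 3), ("validation", 1)):
        for cls in ("cat", "dog"):
            os.makedirs(os.path.join(root, split, cls))
            for i in range(n):
                open(os.path.join(root, split, cls, f"{cls}.{i}.jpg"), "w").close()
    print(count_images(root))
    # {'data': {'cat': 3, 'dog': 3}, 'validation': {'cat': 1, 'dog': 1}}
```

Roughly balanced counts per class matter here: with a sigmoid output and a 0.5 threshold, a heavily imbalanced training set would bias predictions toward the larger class.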

Training process (1)

Running the first version of the program produced the following training curves:

The left plot shows the accuracy on the training and validation sets (dots: training set; line: validation set).

The right plot shows the loss on the training and validation sets (dots: training set; line: validation set).

The curves show that the model is essentially not learning, which points to a problem with the model structure. On analysis, the default activation is unsuitable (Keras Dense and Conv2D layers apply no activation, i.e. a linear one, unless one is specified). This is a binary classification problem with outputs 0 and 1, so the output layer should use a sigmoid function and the other layers ReLU.

Solution: set the output layer's activation to sigmoid, i.e. tf.keras.layers.Dense(1, activation='sigmoid'), for binary classification. All other layers use the ReLU activation.
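To see why sigmoid suits the output layer, here is a small NumPy sketch of the two activation functions named above. Sigmoid squashes any real score into the range 0 to 1, so the single output can be read as the probability of "dog", while ReLU simply zeroes negative values in the hidden layers. This is an illustration of the math only, not Keras' internal implementation.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid 1 / (1 + exp(-z)), written so exp() never overflows."""
    z = np.asarray(z, dtype=np.float64)
    e = np.exp(-np.abs(z))          # always in (0, 1]
    # z >= 0: 1 / (1 + exp(-z));  z < 0: exp(z) / (1 + exp(z))
    return np.where(z >= 0, 1.0 / (1.0 + e), e / (1.0 + e))

def relu(z):
    """ReLU, as used in the hidden layers: negative inputs become 0."""
    return np.maximum(0.0, np.asarray(z, dtype=np.float64))

scores = np.array([-1000.0, -2.0, 0.0, 2.0, 1000.0])
print(sigmoid(scores))  # increasing, every value in [0, 1], exactly 0.5 at score 0
print(relu(scores))     # negative scores clipped to 0
```

A linear output, by contrast, can produce any real number, which does not match the 0/1 targets that binary cross-entropy expects.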

Training process (2)

Running the second version of the program produced the following curves:

The curves show that training accuracy stays consistently above validation accuracy. The model fits the characteristics of the training data too closely: it does well on the training set but not well enough on the validation set, so it does not generalize. This is overfitting.
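The overfitting symptom described above can also be checked numerically from the `history.history` dictionary that Keras returns. Below is a minimal sketch, assuming the `'acc'`/`'val_acc'` keys produced by `metrics=['acc']` in the training code. The helper names, the 0.05 threshold, and the sample numbers are illustrative assumptions, not results from this article.

```python
def accuracy_gap(history, train_key="acc", val_key="val_acc"):
    """Training accuracy minus validation accuracy for each epoch."""
    return [t - v for t, v in zip(history[train_key], history[val_key])]

def looks_overfit(history, threshold=0.05, last_n=3):
    """Heuristic: flag overfitting if the mean gap over the last epochs exceeds threshold."""
    gaps = accuracy_gap(history)[-last_n:]
    return sum(gaps) / len(gaps) > threshold

# Illustrative values shaped like history.history from model.fit(..., metrics=['acc']).
hist = {"acc": [0.60, 0.75, 0.88, 0.95, 0.98],
        "val_acc": [0.58, 0.68, 0.70, 0.71, 0.70]}
print(looks_overfit(hist))   # True: training keeps improving while validation stalls
```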

The remedy is to enlarge the training data and to regularize the network. For images, the training set can be expanded by stretching, rotating, shifting and cropping, and Dropout can randomly zero activations to reduce overfitting. We used both methods. First, the ImageDataGenerator class provided by tf.keras randomly transforms images (zoom, rotation, mirroring, etc.), so that one picture yields many variants. Second, Dropout layers, tf.keras.layers.Dropout(x), randomly set a fraction x of the preceding layer's outputs to zero during training; with x around 0.3 this addressed the overfitting.
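Both techniques can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not what ImageDataGenerator or Keras do internally: the shift here fills vacated pixels with zeros (ImageDataGenerator's default `fill_mode` is `'nearest'`), and the function names are hypothetical. The dropout sketch uses "inverted" dropout, scaling surviving values by 1/(1 - rate) during training and passing inputs through unchanged at inference, which matches the documented behavior of `tf.keras.layers.Dropout`.

```python
import numpy as np

def random_flip_and_shift(image, rng, max_shift=0.1):
    """Randomly mirror an HxWxC image left-right and shift it by up to max_shift of its size.

    Vacated pixels are filled with zeros (a simplifying assumption).
    """
    if rng.random() < 0.5:
        image = image[:, ::-1, :]                      # horizontal mirror
    h, w = image.shape[:2]
    dy = int(rng.integers(-int(h * max_shift), int(h * max_shift) + 1))
    dx = int(rng.integers(-int(w * max_shift), int(w * max_shift) + 1))
    shifted = np.zeros_like(image)
    # Copy the part of the source that stays inside the frame to its shifted position.
    src = image[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    shifted[max(0, dy):max(0, dy) + src.shape[0],
            max(0, dx):max(0, dx) + src.shape[1]] = src
    return shifted

def inverted_dropout(x, rate, rng, training=True):
    """Zero a fraction `rate` of activations and rescale the rest by 1 / (1 - rate)."""
    if not training or rate == 0.0:
        return x                                       # inference: identity
    keep = rng.random(x.shape) >= rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(0)
img = rng.random((20, 20, 3))
aug = random_flip_and_shift(img, rng)                  # one random variant of img
act = np.ones((2, 5))
print(aug.shape)                                       # (20, 20, 3)
print(inverted_dropout(act, 0.3, rng))                 # entries are 0.0 or 1/0.7
```

The rescaling keeps the expected value of each activation the same with and without dropout, so no adjustment is needed at prediction time.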

Training process (3)

Running the third version of the program produced the following curves:

With overfitting resolved, the validation accuracy was found to be almost always higher than the training accuracy. A likely reason is that the training data is not clean and needs filtering.

Solution: write the Remove program, which loads the previously saved network and cleans the training set by moving out training images whose labels disagree strongly with the network's prediction. The following code moves such images to another folder:

if abs(predict[0]) < 0.05:
    shutil.move(image_path, 'D:/Python/AI/catvsdog/remove/cat')
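The check above only catches one direction. Because flow_from_directory labels classes alphabetically (cat = 0, dog = 1), a prediction below 0.05 means the model is confident the image is a cat, which flags suspect images when cleaning the dog folder. A hedged generalization that works for either folder is sketched below; the helper `should_remove` and its parameters are hypothetical.

```python
def should_remove(label, prob_dog, margin=0.05):
    """Return True if the model confidently disagrees with the folder label.

    label: 0 for the cat folder, 1 for the dog folder (alphabetical class order).
    prob_dog: the sigmoid output for one image.
    """
    if label == 1:                   # image is filed under dog/
        return prob_dog < margin     # ...but the model is confident it is a cat
    return prob_dog > 1.0 - margin   # filed under cat/, model confident it is a dog

print(should_remove(1, 0.01))  # True
print(should_remove(0, 0.01))  # False
print(should_remove(0, 0.97))  # True
```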

Some of the removed photos are shown above. They were removed mainly for the following reasons:

1. The dog or cat is black, or the lighting is dim

2. The background is cluttered, often with people in the picture

3. The picture is blurry, etc.

Training process (4)

Running the fourth version of the program produced the following curves:

Finally, after repeatedly tuning the hyperparameters (dropout rate, number of epochs, batch size, learning rate), the model accuracy approached 90%, which met our requirements.

model_new.save('the_save_model.h5') saves the final trained model in the complete HDF5 (.h5) format, so that it can be loaded later for verification.

Screenshot of the training session: training is accelerated with a GPU; the training set contains 2,041 pictures and the validation set 201.

Training ran for 30 epochs with a final accuracy above 85%, and the trained model was saved.
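With batch_size = 64, the `total // batch_size` arithmetic in the training code determines how many batches each epoch uses. The short sketch below works this out for the 2,041 training and 201 validation images; the helper name `steps` is hypothetical. Floor division gives 31 training steps and only 3 validation steps (3 × 64 = 192 of the 201 validation images per epoch); using `math.ceil` instead would cover every image.

```python
import math

def steps(num_images, batch_size):
    """Return (floor, ceil) step counts; floor drops the last partial batch."""
    return num_images // batch_size, math.ceil(num_images / batch_size)

batch_size = 64
for name, n in (("train", 2041), ("validation", 201)):
    floor_steps, ceil_steps = steps(n, batch_size)
    print(name, floor_steps, ceil_steps, "images per epoch with floor:", floor_steps * batch_size)
# train 31 32 images per epoch with floor: 1984
# validation 3 4 images per epoch with floor: 192
```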

Neural network results

First load the saved model with tf.keras.models.load_model(''), then read the picture to be classified and preprocess it (convert its data type and dimensions) before feeding it to the network; predict = new_model.predict() returns the output.
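The preprocessing must match training. flow_from_directory loads training images as RGB and rescales them by 1/255, while cv2.imread returns pixels in BGR order. The minimal NumPy sketch below assumes the image has already been resized to 128×128 with cv2.resize; the function name `preprocess_bgr` is hypothetical.

```python
import numpy as np

def preprocess_bgr(image_bgr):
    """Turn one resized HxWx3 uint8 BGR image (as from cv2.imread + cv2.resize)
    into a float32 batch of shape (1, H, W, 3) in RGB order with values in [0, 1]."""
    rgb = image_bgr[..., ::-1]               # BGR -> RGB, same effect as cv2.COLOR_BGR2RGB
    x = rgb.astype("float32") / 255.0        # same rescale=1./255 as in training
    return x[np.newaxis, ...]                # add the batch dimension

fake = np.zeros((128, 128, 3), dtype=np.uint8)
fake[..., 0] = 255                           # pure blue in BGR order
batch = preprocess_bgr(fake)
print(batch.shape, batch.dtype)              # (1, 128, 128, 3) float32
print(batch[0, 0, 0])                        # [0. 0. 1.]: blue is now the last channel
```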

Because the output layer uses a sigmoid function for binary classification, the model outputs a value between 0 and 1.

With 0.5 as the dividing line, an output closer to 1 means the picture is more likely a dog, and an output closer to 0 means it is more likely a cat (flow_from_directory assigns labels alphabetically by folder name, so cat = 0 and dog = 1).

Since the actual input may be neither a cat nor a dog, we set a band around 0.5: when the absolute difference between the prediction and 0.5 is less than 0.05, the picture is classified as neither a cat nor a dog.

The core decision logic is as follows:

if abs(predict[0] - 0.5) < 0.05:
    print("can not predict")
else:
    if predict[0] > 0.5:
        print("it is a dog")
    else:
        print("it is a cat")
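The same rule can be applied to a whole batch of predictions at once, for example the (N, 1) array that model.predict returns for N images. This is a hedged sketch; the helper name `classify_batch` is hypothetical, and the 0.05 margin is the one used above.

```python
import numpy as np

def classify_batch(probs, margin=0.05):
    """Map sigmoid outputs (shape (N, 1) or (N,)) to 'cat', 'dog' or 'can not predict'."""
    p = np.asarray(probs, dtype=np.float64).reshape(-1)
    # object dtype so the longer "can not predict" string is not truncated
    labels = np.where(p > 0.5, "dog", "cat").astype(object)
    labels[np.abs(p - 0.5) < margin] = "can not predict"
    return labels.tolist()

print(classify_batch([[0.02], [0.52], [0.91]]))
# ['cat', 'can not predict', 'dog']
```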

Test on real samples

The CNN training code is as follows:

import os

import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from tensorflow.keras.callbacks import ModelCheckpoint
from tensorflow.keras.optimizers import RMSprop

base_dir = 'D:/Python/AI/catvsdog/'
train_dir = os.path.join(base_dir, 'data/')
validation_dir = os.path.join(base_dir, 'validation/')

train_cats_dir = os.path.join(train_dir, 'cat')  # directory with our training cat pictures
train_dogs_dir = os.path.join(train_dir, 'dog')  # directory with our training dog pictures
validation_cats_dir = os.path.join(validation_dir, 'cat')  # directory with our validation cat pictures
validation_dogs_dir = os.path.join(validation_dir, 'dog')  # directory with our validation dog pictures

batch_size = 64
epochs = 40
IMG_HEIGHT = 128
IMG_WIDTH = 128

# Count the images so that the number of steps per epoch can be derived
num_cats_tr = len(os.listdir(train_cats_dir))
num_dogs_tr = len(os.listdir(train_dogs_dir))
num_cats_val = len(os.listdir(validation_cats_dir))
num_dogs_val = len(os.listdir(validation_dogs_dir))
total_train = num_cats_tr + num_dogs_tr
total_val = num_cats_val + num_dogs_val


def plotImages(images_arr):
    """Show up to five images side by side."""
    fig, axes = plt.subplots(1, 5, figsize=(20, 20))
    axes = axes.flatten()
    for img, ax in zip(images_arr, axes):
        ax.imshow(img)
        ax.axis('off')
    plt.tight_layout()
    plt.show()


# Training generator: rescale to [0, 1] plus random rotation, shift, flip and zoom (data augmentation)
image_gen_train = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1. / 255,
    rotation_range=5,
    width_shift_range=.1,
    height_shift_range=.1,
    horizontal_flip=True,
    zoom_range=0.1
)

# Labels come from the subfolder names in alphabetical order: cat -> 0, dog -> 1
train_data_gen = image_gen_train.flow_from_directory(batch_size=batch_size,
                                                     directory=train_dir,
                                                     shuffle=True,
                                                     target_size=(IMG_HEIGHT, IMG_WIDTH),
                                                     class_mode='binary')

# Indexing batch 0 repeatedly re-applies the random transforms,
# so this shows five augmented versions of the same image
augmented_images = [train_data_gen[0][0][0] for i in range(5)]
plotImages(augmented_images)

# Create the validation data generator (rescaling only, no augmentation)
image_gen_val = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1. / 255)
val_data_gen = image_gen_val.flow_from_directory(batch_size=batch_size,
                                                 directory=validation_dir,
                                                 target_size=(IMG_HEIGHT, IMG_WIDTH),
                                                 class_mode='binary')

# Create the model
model_new = tf.keras.models.Sequential([
    tf.keras.layers.Conv2D(16, 3, padding='same', activation='relu',
                           input_shape=(IMG_HEIGHT, IMG_WIDTH, 3)),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Dropout(0.4),
    tf.keras.layers.Conv2D(32, 3, padding='same', activation='relu'),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Conv2D(64, 1, padding='same', activation='relu'),
    tf.keras.layers.MaxPooling2D(),
    tf.keras.layers.Dropout(0.3),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(256, activation='relu'),
    tf.keras.layers.Dense(1, activation='sigmoid')  # single probability of "dog"
])

# Compile the model: RMSprop optimizer and binary cross-entropy loss.
# Passing metrics reports training and validation accuracy for every epoch.
model_new.compile(optimizer=RMSprop(learning_rate=0.0005),
                  loss='binary_crossentropy',
                  metrics=['acc'])
model_new.summary()
print(model_new.trainable_variables)

# Train the network with data augmentation and Dropout.
# fit_generator is deprecated since TF 2.1; model.fit accepts the generator directly.
history = model_new.fit(
    train_data_gen,
    steps_per_epoch=total_train // batch_size,
    epochs=epochs,
    validation_data=val_data_gen,
    validation_steps=total_val // batch_size
)

model_new.save('the_save_model.h5')  # Save the full model in HDF5 format
print("model save")

acc = history.history['acc']
val_acc = history.history['val_acc']
loss = history.history['loss']
val_loss = history.history['val_loss']
epochs_range = range(len(acc))

plt.plot(epochs_range, acc, 'bo', label='Training accuracy')
plt.plot(epochs_range, val_acc, 'b', label='Validation accuracy')
plt.title('Training and validation accuracy')
plt.legend()

plt.figure()
plt.plot(epochs_range, loss, 'bo', label='Training Loss')
plt.plot(epochs_range, val_loss, 'b', label='Validation Loss')
plt.title('Training and validation loss')
plt.legend()
plt.show()

The data-cleaning code:

import glob
import shutil

import cv2
import numpy as np
import tensorflow as tf

new_model = tf.keras.models.load_model('path')  # Load the saved network ('path' = your .h5 file)
new_model.summary()

for image_path in glob.glob(r'path/*.jpg'):  # Read every picture in the folder being cleaned
    image = cv2.imread(image_path)
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)  # OpenCV loads BGR; the model was trained on RGB
    test = cv2.resize(image, (128, 128))  # Resize to the training input size
    test = test.astype('float32') / 255.0  # Normalize to [0, 1] as in training
    test = test[np.newaxis, ...]  # Add the batch dimension: (1, 128, 128, 3)
    predict = new_model.predict(test)  # Sigmoid output: probability of "dog"
    print(predict[0])
    if predict[0][0] < 0.05:  # Model is confident this is a cat (suspect when cleaning the dog folder)
        shutil.move(image_path, 'path')  # Move to another folder

The prediction code:

import cv2
import numpy as np
import tensorflow as tf

new_model = tf.keras.models.load_model('D:/Python/AI/catvsdog/__pycache__/87the_save_model.h5')  # Load model
new_model.summary()

image = cv2.imread('d:/Python/AI/catvsdog/pre/predict/cat1.jpg')  # Read the picture (OpenCV returns BGR)
test_img = cv2.resize(image, (128, 128))  # Resize to the training input size
test_img = cv2.cvtColor(test_img, cv2.COLOR_BGR2RGB)  # The model was trained on RGB images
test = test_img.astype('float32')  # Adjust the data type
test = test / 255.0  # Normalize
test = test[np.newaxis, ...]  # Add the batch dimension
print(test.shape)  # (1, 128, 128, 3)

predict = new_model.predict(test)  # Make the prediction
print(predict)

if abs(predict[0][0] - 0.5) < 0.05:  # Too close to 0.5 to decide
    print("can not predict")
    title = "can not predict"
else:
    if predict[0][0] > 0.5:
        print("it is a dog")
        title = "it is a dog"
    else:
        print("it is a cat")
        title = "it is a cat"

cv2.imshow(title, image)  # Display the original picture (imshow expects BGR)
cv2.waitKey(0)
cv2.destroyAllWindows()  # Close the window

Conclusion and outlook

The network built here performs binary classification and can be applied to most binary classification tasks, but it is not adequate for multi-class problems and would need to be extended.

As a simple introduction to convolutional neural networks, this project taught me how a network is built and how its parameters are set, which lays a foundation for further study.

In the future, this network could be applied elsewhere, for example to classify photos of men and women, or peach and pear trees. Results could be improved by enlarging the training set (more pictures and more epochs), training efficiency by supporting resumable training from checkpoints, and accuracy by optimizing the network structure.

边栏推荐

- Interview questions on mobile terminal, Android and IOS compatibility

- Fundamentals of Mathematics - Taylor Theorem

- TMUX common commands

- Explanation and explanation on the situation that the volume GPU util (GPU utilization) is very low and the memory ueage (memory occupation) is very high during the training of pytoch

- The Poisson regression model (posion) is constructed by GLM function of R language, and the poisgof function of epidisplay package is used to test the goodness of fit of the fitted Poisson regression

- 解决逆向工程Mapper重复问题

- StrVec类 移动拷贝

- Meter Reading Instrument(MRI) Remote Terminal Unit electric gas water

- Solve mapper duplication problem in reverse engineering

- HDLC protocol

猜你喜欢

Clarify the division of IPv4 addresses

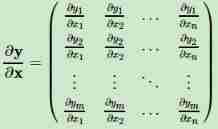

Mathematical knowledge - matrix - matrix / vector derivation

Introduction to coco dataset

Compiling principle on computer -- functional drawing language (V): compiler and interpreter

Talk about the four basic concepts of database system

Discrete chapter I

Literature reading: deep neural networks for YouTube recommendations

离散 第一章

EasyExcel导出Excel表格到浏览器,并通过Postman测试导出Excel【入门案例】

Search and rescue strategy of underwater robot (FISH)

随机推荐

Solve mapper duplication problem in reverse engineering

FPGA generates 720p video clock

visual studio2019的asp.net项目添加日志功能

Compiling principle on computer -- function drawing language (III): parser

Principle and example of OpenMP task

(P36-P39)右值和右值引用、右值引用的作用以及使用、未定引用类型的推导、右值引用的传递

Leetcode notes: Weekly contest 277

"Three.js" auxiliary coordinate axis

Pytorch profiler with tensorboard.

ASP.NET项目开发实战入门_项目六_错误报告(自己写项目时的疑难问题总结)

DUF:Deep Video Super-Resolution Network Using Dynamic Upsampling Filters ... Reading notes

In depth learning, the parameter quantity (param) in the network is calculated. The appendix contains links to floating point computations (flops).

Process terminated

The Poisson regression model (posion) is constructed by GLM function of R language, and the poisgof function of epidisplay package is used to test the goodness of fit of the fitted Poisson regression

KAtex problem of vscade: parseerror: KAtex parse error: can't use function '$' in math mode at position

R language uses rstudio to save visualization results as PDF files (export--save as PDF)

Discrete chapter I

C # hide the keyboard input on the console (the input content is not displayed on the window)

Database connection pool and dbutils tool

Uni app screenshot with canvas and share friends